Introduction

Deep learning has revolutionized artificial intelligence (AI), enabling breakthroughs in various fields, from image recognition to natural language processing. However, training deep neural networks presents several challenges, one of the most significant being the vanishing gradient problem. This issue can hinder the performance of deep models, leading to slow convergence or even complete failure to learn. Understanding the vanishing gradient problem, its causes, and potential solutions is crucial for improving deep learning models.

What is the Vanishing Gradient Problem?

The vanishing gradient problem occurs during backpropagation when gradients become extremely small as they propagate through layers. This primarily affects deep networks, making it difficult for earlier layers to update their weights effectively. As a result, these layers learn very little, leading to poor overall performance.

Backpropagation relies on the chain rule of differentiation to update weights in neural networks. When gradients shrink excessively, weight updates become negligible, slowing down or halting learning in the early layers (those closest to the input). This issue is particularly prominent in networks with many layers, as error signals can diminish exponentially while moving backward through the model.
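To make the exponential shrinkage concrete, the sketch below multiplies the largest possible sigmoid derivative (0.25) across a growing number of layers. It is a deliberately simplified upper bound that assumes every weight equals 1, and the function name `max_sigmoid_gradient_factor` is hypothetical.

```python
def max_sigmoid_gradient_factor(num_layers: int) -> float:
    """Upper bound on the gradient scaling after `num_layers` sigmoid layers.

    Assumes unit weights, so each layer contributes at most the peak
    sigmoid derivative, 0.25, to the chain-rule product.
    """
    return 0.25 ** num_layers


for depth in (1, 5, 10, 20):
    factor = max_sigmoid_gradient_factor(depth)
    print(f"{depth:>2} layers -> gradient factor <= {factor:.3e}")
```

Even in this best case, ten sigmoid layers scale the gradient by less than one millionth, which is why the earliest layers barely move.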

What is the Exploding Gradient Problem?

The exploding gradient problem occurs when gradients grow uncontrollably large during backpropagation. Instead of shrinking (as with vanishing gradients), they grow exponentially, causing unstable training, NaN values, and weight divergence.

Example: In RNNs, multiplying large weight matrices repeatedly across long sequences can lead to exploding gradients.

Why Do Exploding Gradients Occur?

- Deep Networks: Just like vanishing gradients, repeated multiplications through many layers can make gradients blow up.

- Improper Weight Initialization: Large weight values push activations into unstable regions.

- High Learning Rate: Overly large weight updates overshoot, pushing weights into regions where gradients grow even larger and training diverges.

- Recurrent Neural Networks (RNNs): Long sequences → repeated multiplications → exponential growth of gradients.
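The repeated-multiplication effect in the list above can be sketched with a scalar, linear recurrence. This is an illustrative simplification rather than a real RNN: the recurrence `h_t = w * h_{t-1}` and the function name `gradient_scale` are assumptions made for the example.

```python
def gradient_scale(recurrent_weight: float, num_steps: int) -> float:
    """Magnitude of d(h_T)/d(h_0) for the scalar recurrence h_t = w * h_{t-1}.

    Each time step multiplies the gradient by w, so the total factor
    is |w| ** num_steps.
    """
    return abs(recurrent_weight) ** num_steps


for w in (0.9, 1.0, 1.1, 1.5):
    print(f"w = {w}: gradient scale after 50 steps = {gradient_scale(w, 50):.3e}")
```

A weight only slightly above 1 is enough to make the factor grow without bound as the sequence gets longer, while a weight slightly below 1 makes it vanish.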

Vanishing Gradient Problem in Deep Learning

Definition: Gradients shrink exponentially as they move backward through layers.

Impact: Early layers learn very little → network underfits.

Activation Functions Responsible: Sigmoid and tanh (their derivatives approach 0 when the units saturate).

Deeper Networks = Higher Risk.

Why is the Vanishing Gradient Problem Significant?

Prevents very deep neural networks from being trainable.

Early layers fail to extract meaningful features (especially harmful in computer vision & NLP).

Slows down convergence → wastes computation and resources.

Historically blocked the development of very deep models, until techniques such as ReLU, Batch Normalization, and ResNets largely mitigated it.

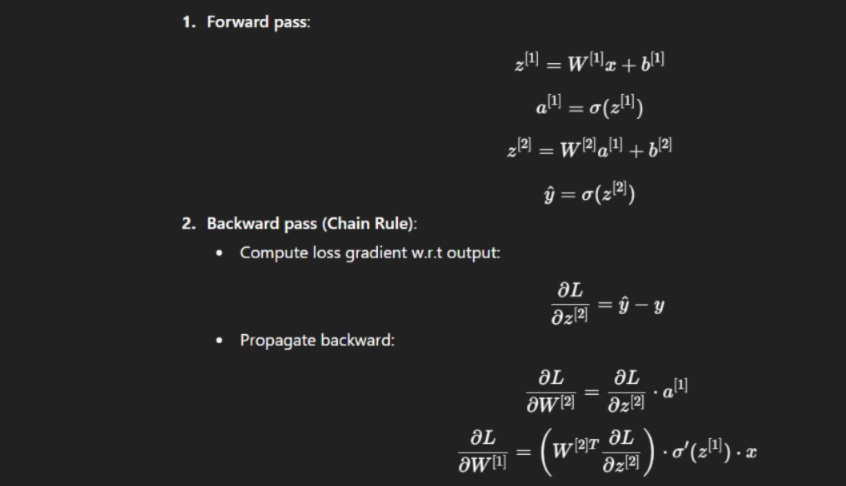

How to Calculate Gradients in Neural Networks?

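Gradients are calculated using backpropagation, which applies the chain rule of calculus. For a weight in an early layer, the gradient is a product of terms contributed by every later layer, including weight matrices such as W[2] and activation derivatives such as σ′(z[1]).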

If σ′(z[1]) is very small (as in sigmoid), the product shrinks → vanishing gradient.

If W[2] is very large, the product explodes → exploding gradient.
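As an illustration, consider a two-layer network. This setup is an assumption made for the example: z^{[1]} = W^{[1]}x + b^{[1]}, a^{[1]} = σ(z^{[1]}), z^{[2]} = W^{[2]}a^{[1]} + b^{[2]}, with a loss L computed from z^{[2]}. Under those definitions, the chain rule gives the gradient for the first layer's weights as:

```latex
\frac{\partial L}{\partial W^{[1]}}
  = \left[ \left( W^{[2]} \right)^{\top} \frac{\partial L}{\partial z^{[2]}}
    \odot \sigma'\!\left( z^{[1]} \right) \right] x^{\top}
```

Here ⊙ denotes element-wise multiplication. Both W^{[2]} and σ′(z^{[1]}) appear as factors, so a saturated σ′ shrinks the result and a very large W^{[2]} inflates it. Each additional layer adds one more weight matrix and one more derivative term to the product.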

Causes of the Vanishing Gradient Problem

Several factors contribute to the vanishing gradient problem, including the choice of activation functions, weight initialization methods, and network depth. Understanding these causes is essential to finding effective solutions.

1. Activation Functions

The choice of activation function plays a crucial role in gradient propagation. Sigmoid and hyperbolic tangent (tanh) functions are common in deep learning but are also the primary culprits behind the vanishing gradient problem.

- Sigmoid Function: The sigmoid activation function squashes input values into the range between 0 and 1. Its derivative, σ(x)(1 − σ(x)), is at most 0.25 and becomes very small for large positive or negative inputs, where the output saturates near 1 or 0. When many such factors are multiplied across a deep network, the gradient can become so small that weight updates are ineffective.

- Tanh Function: The tanh function ranges between -1 and 1 and has a derivative that peaks at 1, but it still saturates. For large positive or negative inputs, its gradient approaches zero, causing the same problem in deep networks.
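The saturation behavior of both functions can be checked numerically. The following is a minimal sketch using only the standard library; the helper names are illustrative.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_derivative(x: float) -> float:
    s = sigmoid(x)
    return s * (1.0 - s)  # peaks at 0.25 when x = 0


def tanh_derivative(x: float) -> float:
    return 1.0 - math.tanh(x) ** 2  # peaks at 1.0 when x = 0


for x in (0.0, 2.0, 5.0, 10.0):
    print(f"x = {x:>4}: sigmoid' = {sigmoid_derivative(x):.2e}, "
          f"tanh' = {tanh_derivative(x):.2e}")
```

Both derivatives fall toward zero as the input moves away from 0, and tanh saturates faster than sigmoid for the same input.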

2. Weight Initialization

Improper weight initialization can exacerbate the vanishing gradient problem. If weights are initialized with very small values, the network’s activations can shrink, leading to smaller gradients during backpropagation. Similarly, large weight values can push activation outputs to the saturation regions of activation functions, further diminishing gradients.

3. Network Depth

Deep neural networks inherently suffer more from the vanishing gradient problem due to the repeated application of the chain rule. As gradients propagate backward, each layer multiplies them by its own local derivative; when these factors are consistently smaller than 1, the product decreases exponentially with depth. The deeper the network, the more likely it is for gradients to vanish before reaching earlier layers.

Solutions to the Vanishing Gradient Problem

Despite the challenges posed by the vanishing gradient problem, several techniques have been developed to mitigate its effects. These include the use of alternative activation functions, improved weight initialization strategies, and specialized architectures designed to maintain gradient flow.

1. Using ReLU and Its Variants

One of the most effective ways to combat the vanishing gradient problem is by replacing traditional activation functions with the Rectified Linear Unit (ReLU) and its variants.

- ReLU (Rectified Linear Unit): Defined as f(x) = max(0, x), the ReLU function does not squash positive values into a limited range, and its derivative is exactly 1 for every positive input. This helps maintain gradients even in deep networks.

- Leaky ReLU: Unlike standard ReLU, which outputs zero for negative values, Leaky ReLU allows a small negative slope, ensuring that neurons do not become inactive.

- Parametric ReLU (PReLU): A variant of Leaky ReLU where the negative slope is learned during training, making it more adaptable to different scenarios.

These activation functions help alleviate the vanishing gradient problem by ensuring that gradients remain substantial enough for effective learning.
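The three variants above differ only in how they treat negative inputs. The sketch below writes them as scalar functions together with their gradients. The default slope of 0.01 is an assumed illustrative value, and frameworks use different defaults.

```python
def relu(x: float) -> float:
    return max(0.0, x)


def relu_grad(x: float) -> float:
    # Gradient is 1 for positive inputs and 0 otherwise.
    return 1.0 if x > 0 else 0.0


def leaky_relu(x: float, negative_slope: float = 0.01) -> float:
    # PReLU uses the same formula, but `negative_slope` is a trainable
    # parameter instead of a fixed constant.
    return x if x > 0 else negative_slope * x


def leaky_relu_grad(x: float, negative_slope: float = 0.01) -> float:
    # Negative inputs still pass a small, non-zero gradient.
    return 1.0 if x > 0 else negative_slope


for x in (-3.0, 0.5, 4.0):
    print(f"x = {x:>4}: relu = {relu(x)}, relu' = {relu_grad(x)}, "
          f"leaky = {leaky_relu(x)}, leaky' = {leaky_relu_grad(x)}")
```

For positive inputs the gradient is 1 regardless of magnitude, so the chain-rule product is not shrunk by the activation itself.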

2. Better Weight Initialization Techniques

Proper weight initialization can significantly improve gradient flow and prevent vanishing gradients. Several methods have been proposed to achieve this:

- Xavier (Glorot) Initialization: This method scales the initial weights by the number of inputs and outputs of each layer so that the variance of activations and gradients stays roughly constant across layers, reducing the risk of vanishing or exploding gradients.

- He Initialization: Specifically designed for ReLU and its variants, He initialization accounts for the non-linearity of the activation function, ensuring a more stable gradient flow.

By adopting these techniques, networks can maintain more stable gradients and learn more effectively.
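As a rough sketch, the standard deviations used by the normal-distribution forms of these two schemes can be computed as follows. The helper names are hypothetical, and `init_weights` draws from a plain normal distribution, whereas some libraries use a truncated normal.

```python
import math
import random


def xavier_normal_std(fan_in: int, fan_out: int) -> float:
    """Glorot/Xavier normal: std = sqrt(2 / (fan_in + fan_out))."""
    return math.sqrt(2.0 / (fan_in + fan_out))


def he_normal_std(fan_in: int) -> float:
    """He normal: std = sqrt(2 / fan_in)."""
    return math.sqrt(2.0 / fan_in)


def init_weights(fan_in: int, fan_out: int, std: float, seed: int = 0):
    """Return a fan_out x fan_in weight matrix drawn from N(0, std^2)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)]
            for _ in range(fan_out)]


print("Xavier std for a 128 -> 128 layer:", xavier_normal_std(128, 128))
print("He std for a 128 -> 128 layer:    ", he_normal_std(128))
weights = init_weights(128, 128, he_normal_std(128))
```

He initialization uses a larger standard deviation than Xavier for the same layer, compensating for ReLU zeroing out roughly half of the pre-activations.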

3. Batch Normalization

Batch normalization (BN) is a widely used technique to stabilize and accelerate training. It normalizes activations within each mini-batch, preventing extreme values from pushing activations into saturation regions.

- BN ensures that inputs to each layer have a consistent distribution, reducing the likelihood of vanishing gradients.

- It allows the use of higher learning rates, speeding up training and improving generalization.
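The normalization step itself is short. The sketch below applies it to a single feature across one mini-batch, using training-mode batch statistics only. The running averages used at inference time are omitted, and `gamma`, `beta`, and `eps` stand in for the learnable scale, learnable shift, and numerical-stability constant.

```python
import math


def batch_norm(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature across a mini-batch, then scale and shift."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n  # biased batch variance
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]


# Large pre-activations like these would saturate a sigmoid.
pre_activations = [12.0, 15.0, 9.0, 20.0]
normalized = batch_norm(pre_activations)
print([round(v, 3) for v in normalized])
```

After normalization the values are centered on zero with roughly unit variance, which keeps them in the region where sigmoid and tanh derivatives are largest.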

4. Residual Networks (ResNets)

Deep residual networks (ResNets) introduce shortcut connections that add the input of a block directly to its output, allowing gradients to bypass multiple layers. These skip connections help maintain gradient flow, largely mitigating the vanishing gradient problem in very deep networks.

- ResNets enable training of extremely deep networks (e.g., 100+ layers) without degradation in performance.

- By allowing gradients to flow through skip connections, ResNets ensure that even earlier layers receive useful updates.
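The effect of a skip connection on the gradient can be sketched with scalars. For a residual block y = x + F(x), the local derivative is 1 + F′(x) rather than F′(x), so the gradient survives even when F′(x) is tiny. The per-block derivative of 0.01 below is a hypothetical value chosen for illustration.

```python
def plain_stack_gradient(local_derivs):
    """d(output)/d(input) for a plain stack: product of each F_k'."""
    grad = 1.0
    for d in local_derivs:
        grad *= d
    return grad


def residual_stack_gradient(local_derivs):
    """Same stack with skip connections, y_k = y_{k-1} + F_k(y_{k-1}).

    Each block contributes (1 + F_k') instead of F_k'.
    """
    grad = 1.0
    for d in local_derivs:
        grad *= 1.0 + d
    return grad


derivs = [0.01] * 20  # hypothetical small per-block derivatives
print(f"plain stack:    {plain_stack_gradient(derivs):.3e}")
print(f"residual stack: {residual_stack_gradient(derivs):.3e}")
```

The identity path contributes the constant 1 in each factor, so the product stays close to 1 instead of collapsing toward zero.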

5. Gradient Clipping

Gradient clipping is a simple yet effective technique that caps gradients at a maximum value or norm. It targets exploding gradients and stabilizes training; it does not enlarge gradients that are already too small, so it is not a remedy for vanishing gradients on its own.
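A minimal sketch of clipping by norm, treating the gradient as a flat list of floats (the function name `clip_by_global_norm` is illustrative):

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Rescale `grads` so their L2 norm does not exceed `max_norm`."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)  # already within bounds: returned unchanged
    scale = max_norm / norm
    return [g * scale for g in grads]


print(clip_by_global_norm([3.0, 4.0], max_norm=1.0))  # norm 5 -> rescaled
print(clip_by_global_norm([0.3, 0.4], max_norm=1.0))  # norm 0.5 -> unchanged
```

Clipping preserves the gradient direction and only limits its length. In Keras, optimizers accept `clipnorm` and `clipvalue` arguments that apply this kind of limit during training.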

Build and Train a Model to Demonstrate the Vanishing Gradient Problem

The best way to understand the vanishing gradient problem is to experiment with a deep network that uses activation functions like sigmoid or tanh.

Example: Sigmoid Network in TensorFlow/Keras

import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
import numpy as np

# Generate simple input data: the label is 1 when the features sum to a positive value
X = np.random.randn(1000, 10)
y = (np.sum(X, axis=1) > 0).astype(int)

# Build deep model with sigmoid activations (prone to vanishing gradient)
model = Sequential()
for _ in range(10):  # Deep network with many layers
    model.add(Dense(128, activation='sigmoid'))
model.add(Dense(1, activation='sigmoid'))

# Compile model
model.compile(optimizer='sgd', loss='binary_crossentropy', metrics=['accuracy'])

# Train model
history = model.fit(X, y, epochs=20, batch_size=32, verbose=1)

Here’s what happens:

With 10 sigmoid layers, gradients diminish as they backpropagate.

Training will be very slow, and accuracy may stagnate.

How to Fix It:

Switch to ReLU activations and He initialization:

# Rebuild the same architecture with ReLU activations and He initialization
model = Sequential()
for _ in range(10):
    model.add(Dense(128, activation='relu', kernel_initializer='he_normal'))
model.add(Dense(1, activation='sigmoid'))  # Output layer still sigmoid

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

history = model.fit(X, y, epochs=20, batch_size=32, verbose=1)

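With ReLU, gradients flow better → training stabilizes and accuracy improves. Note that this version also switches the optimizer from SGD to Adam; to isolate the effect of the activation function and initialization, keep optimizer='sgd' in both runs.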

Practical Implications

Addressing the vanishing gradient problem is essential for training deep neural networks effectively. Whether in computer vision, speech recognition, or reinforcement learning, deep models benefit significantly from improved gradient flow.

- Computer Vision: Modern architectures such as ResNets and DenseNets have become standard for image recognition tasks, ensuring that deep networks remain trainable.

- Natural Language Processing (NLP): Techniques like Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRUs) have been developed to counteract vanishing gradients in sequential data processing.

- Reinforcement Learning: Policies trained in deep reinforcement learning rely on stable gradients for effective learning, making solutions to this problem crucial in AI-driven decision-making.

Conclusion

The vanishing gradient problem remains one of the most significant challenges in deep learning. However, with the advent of new activation functions, better weight initialization techniques, and advanced network architectures like ResNets, researchers and engineers have found effective ways to mitigate its effects. Understanding and implementing these solutions allows for more stable and efficient training of deep neural networks, unlocking their full potential across various domains.

By addressing the vanishing gradient problem, deep learning continues to push the boundaries of artificial intelligence, leading to more powerful and capable models in the years to come.

FAQs

What causes the vanishing gradient problem?

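The vanishing gradient problem arises when many small derivatives are multiplied together during backpropagation. Saturating activation functions like sigmoid or tanh, whose derivatives approach zero for large inputs, are the most common cause, and the effect worsens with network depth and poor weight initialization, preventing deep neural networks from learning effectively.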

How can vanishing gradient be prevented?

The vanishing gradient can be prevented by using ReLU or its variants, applying batch normalization, adopting residual connections (ResNets), and carefully initializing weights to maintain stable gradient flow in deep networks.

Can ReLU cause vanishing gradients?

ReLU largely prevents vanishing gradients by keeping positive gradients intact, but it can suffer from the “dying ReLU” problem, where neurons output zero for all inputs, effectively stopping gradient flow for those neurons.

How to detect a vanishing gradient?

You can detect a vanishing gradient by observing very slow or stalled training, extremely small weight updates, and gradient norms that approach zero in the earliest layers (those closest to the input) when monitored during backpropagation.
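The monitoring idea can be sketched without a framework. The toy network below is an assumption made for illustration: a chain of scalar sigmoid layers with a squared-error loss, and a hypothetical helper `layer_gradients` that backpropagates by hand and reports the gradient magnitude at each layer.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def layer_gradients(weights, x, target):
    """Manual backprop through a chain of scalar sigmoid layers.

    Layer k computes a_k = sigmoid(w_k * a_{k-1}); the loss is
    0.5 * (a_n - target) ** 2. Returns |dLoss/dw_k| for every layer.
    """
    activations = [x]
    for w in weights:
        activations.append(sigmoid(w * activations[-1]))

    grads = [0.0] * len(weights)
    upstream = activations[-1] - target  # dLoss/da_n
    for k in reversed(range(len(weights))):
        a_out, a_in = activations[k + 1], activations[k]
        delta = upstream * a_out * (1.0 - a_out)  # dLoss/dz_k
        grads[k] = abs(delta * a_in)              # |dLoss/dw_k|
        upstream = delta * weights[k]             # dLoss/da_{k-1}
    return grads


grads = layer_gradients(weights=[1.0] * 10, x=1.0, target=0.0)
for k, g in enumerate(grads, start=1):
    print(f"layer {k:>2}: |grad| = {g:.3e}")
print(f"first/last ratio: {grads[0] / grads[-1]:.3e}")
```

A first-to-last ratio that is many orders of magnitude below 1 is the signature to look for. In a real model the same check applies to per-layer gradient norms logged during training.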

Does LSTM solve the vanishing gradient problem?

Largely, yes. LSTMs mitigate the vanishing gradient problem by using gates and an additively updated memory cell that allow gradients to flow over long sequences, preserving information and enabling effective learning in recurrent networks. Very long sequences can still be difficult to learn.