Diffusion models have revolutionized the world of generative AI, enabling high-quality image generation, video synthesis, and more. Unlike GANs, diffusion models work by iteratively removing noise from a sample to create realistic outputs.

Diffusion models are a class of generative models that have recently become one of the most powerful tools in artificial intelligence for generating high-quality synthetic data such as images, videos, and audio. Unlike traditional approaches like GANs (Generative Adversarial Networks) or VAEs (Variational Autoencoders), diffusion models work by simulating a gradual process: starting from pure noise and iteratively refining it until a meaningful and realistic sample emerges.

At their core, diffusion models are inspired by thermodynamic diffusion processes—the same physical principle that explains how molecules spread from areas of high concentration to low concentration. In the context of machine learning, diffusion models perform a two-phase process:

- Forward Process: A clean image (or data sample) is progressively corrupted by adding Gaussian noise in multiple steps until it becomes nearly random noise.

- Reverse Process: The model learns to reverse this noise-adding process, reconstructing the original data from the noisy sample step by step.

This step-by-step denoising approach makes diffusion models incredibly stable during training and capable of generating outputs with remarkable detail and fidelity. These qualities have positioned diffusion models as a cornerstone in the next generation of generative AI systems.

Why Are Diffusion Models Important in AI?

- They produce state-of-the-art results in image generation.

- They train more stably than GANs.

- They can be conditioned on text, which enables text-to-image synthesis.

Why Are Diffusion Models Popular?

The rise of diffusion models has been dramatic, thanks to their robust training, better image diversity, and state-of-the-art performance in creative AI applications. They power some of the most advanced tools in the industry today, including Stable Diffusion, DALL·E 2, and MidJourney, which can generate photorealistic images from text descriptions.

Unlike GANs, which often suffer from issues like mode collapse or training instability, diffusion models produce consistent and reliable results, making them highly attractive for developers, researchers, and businesses.

What Makes Diffusion Models Revolutionary in Generative AI?

Diffusion models have become the backbone of modern generative AI, outperforming traditional techniques like GANs (Generative Adversarial Networks) and VAEs (Variational Autoencoders). Their primary advantage lies in stability and image quality—they avoid common GAN pitfalls such as mode collapse, and their noise-based training approach ensures diversity in outputs.

Historical Evolution of Diffusion Models

- Inspired by non-equilibrium thermodynamics.

- Gained popularity after the DDPM paper by Ho et al. (2020).

- Became mainstream with Stable Diffusion and DALL·E 2.

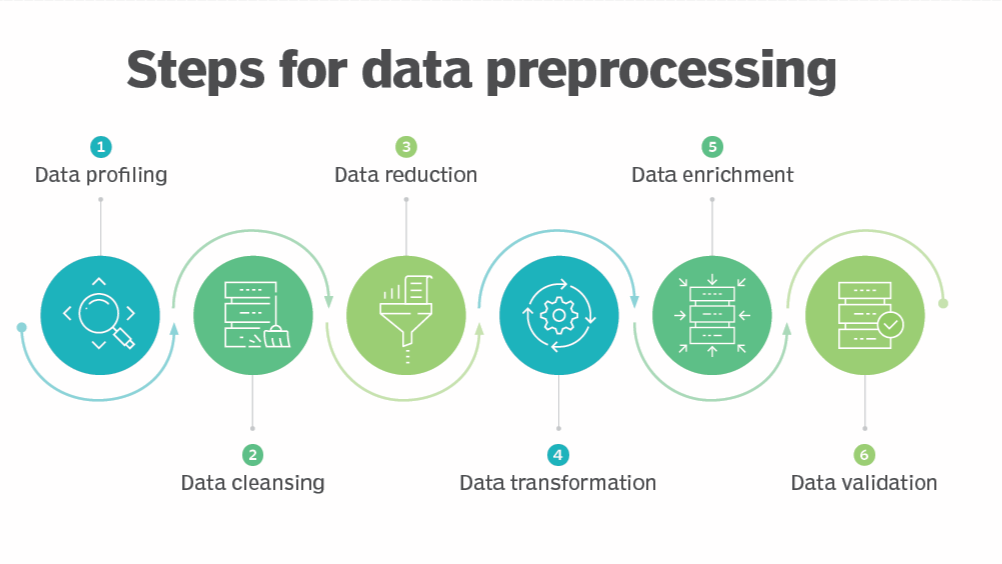

Data Preprocessing for Diffusion Models

Data preprocessing is one of the most crucial steps before training diffusion models. Since these models rely on progressively adding and removing noise, high-quality, well-prepared data ensures stability and performance. Below are the key steps and best practices:

Data Collection

- Gather large and diverse datasets relevant to your domain (e.g., ImageNet for images, LibriSpeech for audio, or COCO Captions for text-to-image).

- Ensure data variety to avoid overfitting and improve generalization.

Data Cleaning

- Remove duplicate or corrupted files that could distort the model’s learning.

- Normalize file formats (e.g., all images in JPEG/PNG or audio in WAV).
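
The duplicate-removal step above can be sketched with the standard library alone. This is a minimal illustration, assuming that "duplicate" means byte-identical files; the helper names `file_digest` and `find_duplicates` are hypothetical, and near-duplicates (resized or re-encoded copies) would need a perceptual hash instead.

```python
import hashlib
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    hasher = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest()


def find_duplicates(folder: Path) -> list[Path]:
    """Return files whose bytes match an earlier file (in sorted path order)."""
    seen: dict[str, Path] = {}
    duplicates: list[Path] = []
    for path in sorted(p for p in folder.rglob("*") if p.is_file()):
        digest = file_digest(path)
        if digest in seen:
            duplicates.append(path)  # same content as seen[digest]
        else:
            seen[digest] = path
    return duplicates
```

Calling `find_duplicates(Path("data/images"))` (the folder name is only an example) returns the paths that are safe to review and delete; the first copy of each file is kept.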

Image Resizing and Normalization

- Resize images to a fixed resolution (e.g., 256×256 or 512×512 pixels) for uniformity.

- Normalize pixel values to [0, 1] or [-1, 1], depending on the model requirements.

from torchvision import transforms

# Resize, convert to a [0, 1] tensor, then shift and scale each RGB channel to [-1, 1]
transform = transforms.Compose([
    transforms.Resize((256, 256)),
    transforms.ToTensor(),
    transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
])

Data Augmentation

- Why? Augmentation increases dataset diversity without additional collection.

- Common techniques:

  - Random flips (horizontal/vertical).

  - Color jittering.

  - Rotation and cropping.

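from torchvision import transforms

# Random augmentations applied to each image before conversion to a tensor
transform = transforms.Compose([
    transforms.RandomHorizontalFlip(),   # mirror left-right with probability 0.5
    transforms.RandomRotation(15),       # rotate by a random angle in [-15, 15] degrees
    transforms.ColorJitter(brightness=0.2, contrast=0.2),
    transforms.ToTensor(),
])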

Tokenization for Text-to-Image Models

- When training models like Stable Diffusion, text prompts must be tokenized.

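- Use a tokenizer from Hugging Face Transformers, such as the CLIP tokenizer.

from transformers import CLIPTokenizer

# Load a pretrained CLIP tokenizer and convert a prompt into PyTorch token tensors
tokenizer = CLIPTokenizer.from_pretrained("openai/clip-vit-base-patch32")
tokens = tokenizer("A beautiful sunset over the mountains", return_tensors="pt")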

Audio Preprocessing (for Audio Diffusion Models)

- Convert audio to Mel spectrograms or normalized waveform tensors.

- Remove noise and silence segments for cleaner training.
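
As a rough illustration of the silence-removal and normalization steps, the sketch below works on a waveform held as a plain list of floats. It is deliberately simplistic: the amplitude threshold of 0.01 and the helper names are assumptions, and a real pipeline would typically use an audio library for loading, resampling, and Mel spectrogram extraction.

```python
def trim_silence(samples: list[float], threshold: float = 0.01) -> list[float]:
    """Drop leading and trailing samples whose magnitude is below threshold."""
    loud = [i for i, s in enumerate(samples) if abs(s) >= threshold]
    if not loud:
        return []  # the whole clip is silent
    return samples[loud[0] : loud[-1] + 1]


def peak_normalize(samples: list[float], peak: float = 1.0) -> list[float]:
    """Scale the waveform so that its largest magnitude equals peak."""
    largest = max((abs(s) for s in samples), default=0.0)
    if largest == 0.0:
        return list(samples)  # nothing to scale
    return [s * peak / largest for s in samples]
```

Trimming first and normalizing second keeps low-level leading noise from influencing the scale factor.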

Splitting the Dataset

- Divide into Training (80%), Validation (10%), and Testing (10%).

- Held-out validation and test sets let you detect overfitting and check that the model generalizes to unseen data.
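
The 80/10/10 split can be sketched in a few lines. This is one possible approach, assuming the dataset fits in a list of items (for example file paths); `split_dataset` is a hypothetical helper, and the fixed seed is there only to make the split reproducible.

```python
import random


def split_dataset(items, train=0.8, val=0.1, seed=0):
    """Shuffle a copy of items and split it into train/val/test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * val)
    return (
        shuffled[:n_train],
        shuffled[n_train : n_train + n_val],
        shuffled[n_train + n_val :],  # the remainder becomes the test set
    )
```

Shuffling before slicing matters when files are stored in a meaningful order (by class or by date), since an unshuffled split would put entire categories into a single subset.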

Batch Preparation

- Prepare batches efficiently for GPU/TPU processing.

- Use the PyTorch DataLoader for batching and shuffling.

from torch.utils.data import DataLoader

# `dataset` is assumed to be an existing torch.utils.data.Dataset
dataloader = DataLoader(dataset, batch_size=32, shuffle=True)

Noise Scheduling

- Define a noise schedule (linear, cosine, or exponential) to progressively add noise.

- The schedule belongs to the forward diffusion process rather than to preprocessing, but it assumes inputs scaled to a fixed range (typically [-1, 1]), so normalize your data accordingly.
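
To make the idea of a noise schedule concrete, here is a small sketch of a linear and a cosine schedule in plain Python. The default values are assumptions borrowed from common practice: the linear range 1e-4 to 0.02 follows the DDPM paper, and the cosine form with offset `s = 0.008` follows the improved-DDPM formulation. The function names are illustrative.

```python
import math


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Evenly spaced betas from beta_start to beta_end."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cosine_beta_schedule(num_steps, s=0.008, max_beta=0.999):
    """Betas derived from a squared-cosine curve for the cumulative alphas."""

    def alpha_bar(t):
        return math.cos((t / num_steps + s) / (1 + s) * math.pi / 2) ** 2

    return [
        min(1 - alpha_bar(i + 1) / alpha_bar(i), max_beta)  # clip for stability
        for i in range(num_steps)
    ]


def cumulative_alphas(betas):
    """Running product of (1 - beta_t): the share of signal variance left."""
    result, product = [], 1.0
    for beta in betas:
        product *= 1.0 - beta
        result.append(product)
    return result
```

Plotting `cumulative_alphas(...)` for both schedules is a quick way to compare them: the cosine schedule destroys information more gradually in the early steps than the linear one.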

Scaling for Large Datasets

- For large-scale training, consider:

  - Distributed Data Parallel (DDP) for multi-GPU setups.

  - Mixed Precision Training to save memory.

How Do Diffusion Models Work?

- Forward Diffusion: Add noise to an image gradually.

- Reverse Diffusion: Learn to denoise step-by-step.

- Both processes are Markov chains: each step depends only on the previous one, and each transition is modeled as a Gaussian distribution.

Mathematical Representation:

q(x_t | x_{t-1}) = N(x_t; √(1-β_t)x_{t-1}, β_tI)
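
The transition above can be simulated directly. The sketch below treats an "image" as a flat list of floats and shows both a single forward step and the standard closed-form shortcut x_t = √(ᾱ_t)·x_0 + √(1 − ᾱ_t)·ε, where ᾱ_t is the product of (1 − β_s) up to step t. The function names are illustrative, not part of any library.

```python
import math
import random


def alpha_bar(betas, t):
    """Product of (1 - beta_s) for the first t steps (t = 0 gives 1.0)."""
    product = 1.0
    for beta in betas[:t]:
        product *= 1.0 - beta
    return product


def forward_step(x_prev, beta, rng):
    """Sample x_t from q(x_t | x_{t-1}) elementwise."""
    return [
        math.sqrt(1.0 - beta) * x + math.sqrt(beta) * rng.gauss(0.0, 1.0)
        for x in x_prev
    ]


def forward_jump(x0, betas, t, rng):
    """Sample x_t directly from x_0 using the closed form."""
    a_bar = alpha_bar(betas, t)
    return [
        math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * rng.gauss(0.0, 1.0)
        for x in x0
    ]
```

The closed form is what makes training practical: a noisy sample at any timestep can be drawn in one operation instead of iterating through all earlier steps. With a typical 1000-step linear schedule, `forward_jump(x0, betas, 1000, random.Random(0))` is statistically close to pure standard Gaussian noise.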

Key Components

- Noise Scheduler: Controls noise addition.

- Denoising Function: A neural network that predicts the noise in a sample at a given step (or, equivalently, the clean image).

- Sampling Strategy: Guides final generation.
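
To show how these components meet during training, here is a toy sketch of the noise-prediction objective used in DDPM-style training: pick a random timestep, noise the clean sample, and measure how well the denoising function recovers that noise. `predict_noise` is a hypothetical stand-in for the neural network, and `alpha_bars` is assumed to be the list of cumulative products produced by the noise scheduler.

```python
import math
import random


def mse(a, b):
    """Mean squared error between two equal-length lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def training_loss(x0, alpha_bars, predict_noise, rng):
    """One Monte Carlo sample of the noise-prediction loss for one data point."""
    t = rng.randrange(len(alpha_bars))        # random timestep index
    a_bar = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]   # the noise the model must recover
    x_t = [
        math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * e
        for x, e in zip(x0, eps)
    ]
    return mse(eps, predict_noise(x_t, t))
```

Because the objective is an ordinary regression loss with no adversary, there is no second network to balance against, which is one intuition for why training tends to be stable.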

Types of Diffusion Models

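- DDPM (Denoising Diffusion Probabilistic Models): The formulation popularized by Ho et al., which samples with one denoising step per training timestep.

- DDIM (Denoising Diffusion Implicit Models): Faster sampling, because it can skip timesteps and use far fewer steps than training did.

- Score-Based Models: Estimate the gradient of the log data density (the score) and follow it to generate samples.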
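
DDIM's speed-up comes from a deterministic update that can jump between non-adjacent noise levels. The sketch below shows that update (the η = 0 case) on flat lists of floats, together with a simple way to pick a strided subset of timesteps. `eps_pred` stands for the output of a trained noise predictor, which is assumed here rather than implemented, and the helper names are illustrative.

```python
import math


def predict_x0(x_t, eps_pred, a_bar_t):
    """Estimate the clean sample implied by a noise prediction."""
    return [
        (x - math.sqrt(1.0 - a_bar_t) * e) / math.sqrt(a_bar_t)
        for x, e in zip(x_t, eps_pred)
    ]


def ddim_step(x_t, eps_pred, a_bar_t, a_bar_prev):
    """Deterministic DDIM update from noise level a_bar_t to a_bar_prev."""
    x0_pred = predict_x0(x_t, eps_pred, a_bar_t)
    return [
        math.sqrt(a_bar_prev) * x0 + math.sqrt(1.0 - a_bar_prev) * e
        for x0, e in zip(x0_pred, eps_pred)
    ]


def strided_timesteps(num_train_steps, num_sample_steps):
    """Evenly strided subset of training timesteps, highest first."""
    stride = num_train_steps // num_sample_steps
    return list(range(num_train_steps - 1, -1, -stride))[:num_sample_steps]
```

For example, `strided_timesteps(1000, 50)` visits 50 of the 1000 training timesteps, so sampling needs 50 network evaluations instead of 1000. The trade-off is that very small step counts can reduce sample quality.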

Diffusion Models vs GANs vs VAEs

| Feature | GANs | VAEs | Diffusion Models |
| --- | --- | --- | --- |
| Stability | Low | High | Very High |
| Image Quality | High | Medium | Very High |
| Training Speed | Fast | Fast | Slow |

Applications

Diffusion models are already being deployed across industries, revolutionizing workflows:

1. Text-to-Image Generation

Tools like Stable Diffusion, DALL·E 2, and MidJourney use diffusion models to generate stunning artwork from text prompts.

2. Healthcare and Drug Discovery

- Generate synthetic medical images for research and training.

- Create molecular structures for drug design.

3. Video and Animation

- Diffusion-based systems like Runway Gen-2 can generate AI-powered videos from text or image prompts.

4. Gaming and Virtual Worlds

- Create 3D assets and game environments dynamically.

- Reduce time in concept design for VR worlds.

5. Audio and Music Generation

- Models like AudioLDM leverage diffusion for realistic sound synthesis.

6. Synthetic Data for AI Training

- Generate large datasets for autonomous vehicles and robotics.

Real-World Examples

- Stable Diffusion by Stability AI.

- MidJourney for artistic image creation.

- AudioLDM for sound synthesis.

Advantages & Limitations

Advantages: High-quality results, versatility, stable training.

Limitations: High computational cost, slow sampling.

Compute Requirements: Training is resource-heavy.

Inference Speed: Slower compared to GAN-based models.

Ethical Concerns: Misuse in deepfakes and copyrighted material generation.

Popular Diffusion Models and Frameworks

- Stable Diffusion (open-source, text-to-image).

- Latent Diffusion Models (LDM) for faster generation.

- Imagen by Google (high-quality text-to-image).

- OpenAI’s DALL·E 2.

- Runway Gen-2 for video generation.

Recent Trends & Future Research

- Latent Diffusion: Reduces computational cost by operating in compressed space.

- ControlNet: Adds controllability to diffusion models (pose, depth, edges).

- Video Diffusion: Advanced research on generating coherent video sequences.

- Multimodal Models: Combining text, image, audio, and 3D generation in a single model.
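
A quick back-of-the-envelope calculation shows why operating in a compressed latent space reduces cost. The sketch assumes an autoencoder that downsamples each spatial dimension by a factor of 8 and produces 4 latent channels, which matches the configuration used by Stable Diffusion v1; other models may use different values.

```python
def latent_shape(height, width, downsample=8, latent_channels=4):
    """Shape (channels, height, width) of the latent for a given image size."""
    return (latent_channels, height // downsample, width // downsample)


def compression_ratio(height, width, image_channels=3, **kwargs):
    """How many times fewer values the latent holds than the RGB image."""
    c, h, w = latent_shape(height, width, **kwargs)
    return (image_channels * height * width) / (c * h * w)
```

Under these assumptions a 512×512 RGB image (786,432 values) becomes a 4×64×64 latent (16,384 values), so every denoising step processes 48 times fewer values than it would in pixel space.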

Core Advantages of Diffusion Models

- High-Resolution Output: Capable of generating high-resolution images with realistic textures; very large outputs such as 4K are usually reached with upscaling or cascaded stages.

- Better Diversity: Reduces risk of repetitive or identical outputs.

- Stable Training: Unlike GANs, diffusion models do not require a discriminator, making training less adversarial.

- Scalable Across Domains: From text-to-image generation to 3D modeling.

Future of Diffusion Models

- Hybrid Models (Diffusion + Transformers).

- Real-Time Applications in AR/VR and gaming.

- Energy-Efficient Diffusion using optimized schedulers.

- Edge AI Integration: Lightweight diffusion models for smartphones.

- Enterprise Adoption: Fashion, entertainment, and real estate leveraging these models.

- Open Research: Community-driven improvements in speed and controllability.

Popular Tools

- Hugging Face Diffusers

- Stable Diffusion API

- OpenAI DALL·E

Implementing Diffusion Models in Python

A minimal workflow with Hugging Face Diffusers and PyTorch covers three steps:

- Installing Hugging Face Diffusers.

- Loading pre-trained models.

- Generating an image.
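
The three steps can be sketched as a single helper. Treat this as a starting point rather than a reference implementation: `generate_image` is a hypothetical wrapper, `model_id` is left as a parameter because the right checkpoint depends on your license and hardware, and the defaults of 30 steps and a guidance scale of 7.5 are common choices for Stable Diffusion style pipelines, not requirements.

```python
# Step 1, run once in a shell: pip install diffusers transformers accelerate torch


def generate_image(prompt, model_id, num_inference_steps=30, guidance_scale=7.5):
    """Load a pretrained text-to-image pipeline and return one PIL image."""
    # Imported lazily so this module loads even without the heavy dependencies.
    import torch
    from diffusers import DiffusionPipeline

    # Step 2: load the pretrained weights, in half precision when a GPU is present.
    use_gpu = torch.cuda.is_available()
    pipe = DiffusionPipeline.from_pretrained(
        model_id,
        torch_dtype=torch.float16 if use_gpu else torch.float32,
    )
    pipe = pipe.to("cuda" if use_gpu else "cpu")

    # Step 3: run the reverse diffusion process conditioned on the prompt.
    result = pipe(
        prompt,
        num_inference_steps=num_inference_steps,
        guidance_scale=guidance_scale,
    )
    return result.images[0]
```

A call such as `generate_image("A beautiful sunset over the mountains", model_id=...)`, with the identifier of a Stable Diffusion checkpoint from the Hugging Face Hub in place of `...`, returns a PIL image that can be written to disk with `.save("sunset.png")`. The first call downloads the model weights, which can take several gigabytes.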

Best Practices

- Use mixed precision training.

- Optimize sampling steps for faster results.

Key Takeaways

- Diffusion models mimic physical diffusion principles to progressively transform noise into structured data.

- They power some of today’s most advanced AI applications, including Stable Diffusion, DALL·E 2, and MidJourney.

- Their impact spans multiple industries: art and design, healthcare, drug discovery, autonomous systems, and synthetic data generation.

- Open-source availability and scalable architectures ensure that these models will continue to evolve rapidly.

Looking Ahead

The future of diffusion models is extremely promising. As research continues, we can expect:

- Faster inference times using optimization techniques like DDIM (Denoising Diffusion Implicit Models) and latent diffusion.

- Multimodal capabilities, enabling seamless integration of text, image, audio, and video generation.

- Improved controllability, allowing users to steer the generative process with higher precision.

- Applications in virtual reality, film production, fashion design, drug discovery, and even climate modeling.

Conclusion

Diffusion models are the future of generative AI, outperforming traditional models in image quality and diversity. Diffusion models represent a paradigm shift in generative AI, transforming how we create, manipulate, and interact with digital content. Their ability to generate high-resolution, photorealistic images, video, audio, and even 3D assets with unparalleled quality sets them apart from earlier approaches like GANs or VAEs.

Unlike traditional models that often face training instability or lack of diversity, diffusion models bring consistency, robustness, and creative flexibility—qualities that make them ideal for both research and production environments. This stability stems from their step-by-step noise-reduction approach, which not only makes them easier to train but also enables fine-grained control over the generative process.