Python is a versatile programming language used across data analytics, AI, web development, and more. To improve performance, especially in CPU-bound or I/O-bound tasks, Python developers leverage multithreading and multiprocessing.

While both approaches enable concurrent execution, understanding when and how to use each is essential for building high-performance applications.

In this guide, we’ll compare Python multithreading and multiprocessing, covering their advantages, limitations, and real-world examples, along with actionable insights for developers.

Understanding Python Concurrency

Python provides multiple ways to achieve concurrency, the ability of a program to make progress on several tasks during overlapping time periods. The two primary methods are multithreading and multiprocessing.

What is Multithreading?

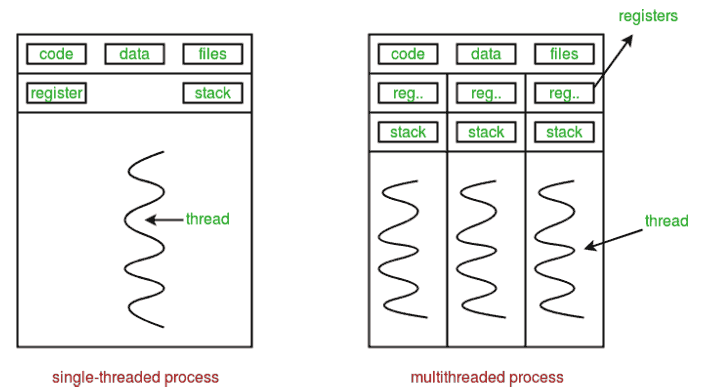

- Definition: Multithreading allows multiple threads (smaller units of a process) to run concurrently within the same program.

- Key Feature: All threads share the same memory space.

- Use Case: Best suited for I/O-bound tasks like web requests, file handling, or database operations.

- Python Implementation: Using the threading module.

Example snippet:

```python
import threading

def print_numbers():
    for i in range(5):
        print(i)

# Two threads run the same function concurrently
thread1 = threading.Thread(target=print_numbers)
thread2 = threading.Thread(target=print_numbers)

thread1.start()
thread2.start()

# Wait for both threads to finish
thread1.join()
thread2.join()
```

What is Multiprocessing?

- Definition: Multiprocessing creates separate processes with independent memory space.

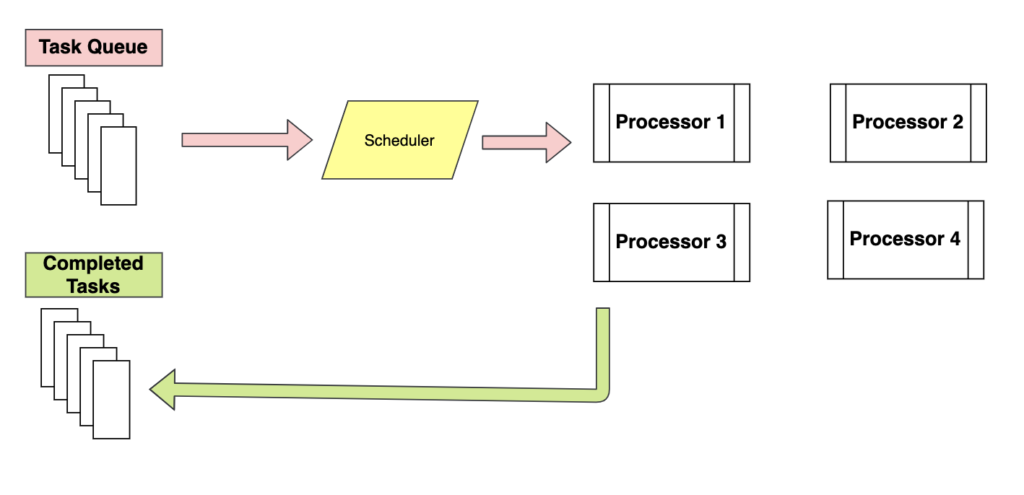

- Key Feature: Each process has its own Python interpreter and its own Global Interpreter Lock (GIL), so processes can run in parallel on separate CPU cores.

- Use Case: Best for CPU-bound tasks like large computations, data transformations, or machine learning model training.

- Python Implementation: Using the multiprocessing module.

Example snippet:

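```python
from multiprocessing import Process

def compute_square(n):
    print(n * n)

# The __main__ guard is required where child processes are started with
# "spawn" (the default on Windows and macOS), because each child re-imports
# this module.
if __name__ == '__main__':
    process1 = Process(target=compute_square, args=(10,))
    process2 = Process(target=compute_square, args=(20,))

    process1.start()
    process2.start()

    process1.join()
    process2.join()
```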

Python Multithreading vs Multiprocessing: Key Differences

| Feature | Multithreading | Multiprocessing |
|---|---|---|
| Memory | Shared memory | Separate memory space |
| Overhead | Low | Higher due to process creation |
| CPU-bound | Less efficient | Highly efficient |
| I/O-bound | Efficient | Less efficient |
| GIL impact | Limited by GIL | No GIL limitation |
| Communication | Simple with shared objects | Needs IPC (Queue, Pipe) |

When to Use Multithreading

- Tasks involving network I/O (APIs, web scraping)

- File I/O operations

- GUI applications where responsiveness is critical

- Scenarios requiring lightweight concurrency without high memory overhead

Example: Concurrent Web Scraping

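```python
import threading

import requests

urls = ['https://example.com', 'https://example.org']

def fetch(url):
    # The thread blocks here while waiting on the network; the GIL is
    # released during that wait, so other threads can run.
    response = requests.get(url, timeout=10)
    # response.content is the raw body in bytes (response.text is decoded str)
    print(f'{url} - {len(response.content)} bytes')

threads = []
for url in urls:
    t = threading.Thread(target=fetch, args=(url,))
    t.start()
    threads.append(t)

# Wait for all downloads to finish
for t in threads:
    t.join()
```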

When to Use Multiprocessing

- Large-scale data processing

- CPU-intensive calculations

- Training machine learning models

- Tasks that need true parallelism

Example: Parallel Data Processing

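```python
from multiprocessing import Pool

def square(n):
    return n * n

if __name__ == '__main__':
    numbers = [1, 2, 3, 4, 5]
    # Distribute the calls across 4 worker processes
    with Pool(4) as p:
        results = p.map(square, numbers)
    print(results)  # [1, 4, 9, 16, 25]
```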

Real-World Examples

Web Scraping with Multithreading

- Efficiently scrape hundreds of pages

- Overlaps the time spent waiting on network responses, reducing total run time

Data Processing with Multiprocessing

- Analyze millions of records in parallel

- Improves throughput by utilizing multiple CPU cores

Performance Comparison and Benchmarks

- Multithreading: Better for I/O-bound tasks but limited by GIL for CPU-bound tasks

- Multiprocessing: Overcomes GIL, scales with cores, higher memory usage
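To see this difference on your own machine, a minimal timing sketch like the one below runs the same CPU-bound function through a thread pool and through a process pool. The function `count_primes`, the workload size, and the worker count of 4 are illustrative assumptions; timings depend on your hardware and Python build, so no numbers are claimed here.

```python
import time
from concurrent.futures import ThreadPoolExecutor, ProcessPoolExecutor


def count_primes(limit):
    """Deliberately CPU-bound: count primes below `limit` by trial division."""
    count = 0
    for n in range(2, limit):
        is_prime = True
        d = 2
        while d * d <= n:
            if n % d == 0:
                is_prime = False
                break
            d += 1
        if is_prime:
            count += 1
    return count


def run(executor_cls, limits, workers=4):
    """Run count_primes over `limits` with the given executor class and time it."""
    start = time.perf_counter()
    with executor_cls(max_workers=workers) as executor:
        results = list(executor.map(count_primes, limits))
    return results, time.perf_counter() - start


if __name__ == '__main__':
    limits = [50_000] * 4  # four equal, independent CPU-bound jobs
    thread_results, thread_time = run(ThreadPoolExecutor, limits)
    process_results, process_time = run(ProcessPoolExecutor, limits)
    print(f"threads:   {thread_time:.2f}s")
    print(f"processes: {process_time:.2f}s")
```

On a standard CPython build with the GIL, the thread version typically takes about as long as running the jobs one after another, while the process version can scale with the number of cores, minus process start-up and pickling costs.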

The Global Interpreter Lock (GIL): The Core Difference

At the heart of Python’s concurrency behavior lies the Global Interpreter Lock (GIL) of CPython, the standard Python interpreter: a mutex that allows only one thread to execute Python bytecode at a time within a single process. This means that even if multiple threads exist, only one can actively run Python code at any moment.

Why the GIL Exists

The GIL protects CPython’s internal state, such as the reference counts used for memory management, from race conditions, and it keeps the C API and C extensions simpler to write. However, this design also limits true parallelism for CPU-bound tasks.

How It Affects Multithreading

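In multithreading, the GIL prevents multiple threads from executing Python bytecode simultaneously, so computation-heavy pure-Python code gains little or nothing from extra threads. Threads still help I/O-bound work because a thread releases the GIL while it waits on blocking I/O (and many C extensions release it during long computations), letting other threads run in the meantime.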

How Multiprocessing Overcomes It

Each process in multiprocessing has its own Python interpreter and memory space. Therefore, each process gets its own GIL — enabling true parallelism and CPU utilization across multiple cores.

In short:

- Multithreading = Concurrency (shared memory, single GIL)

- Multiprocessing = Parallelism (independent processes, multiple GILs)

Performance Optimization Strategies

While both concurrency models have their own strengths, developers can further enhance efficiency through optimization techniques.

Minimizing Overhead

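- In multiprocessing, creating a process is expensive: it either starts a fresh interpreter (the "spawn" start method) or duplicates the parent process (the "fork" start method), and arguments must be pickled to reach the child.

Solution: Use multiprocessing.Pool or concurrent.futures.ProcessPoolExecutor so that worker processes are created once and reused.

- In multithreading, thread switching is cheaper, but too many threads add context-switching overhead and memory for each thread’s stack.

Solution: Use a bounded pool (for example, concurrent.futures.ThreadPoolExecutor with max_workers) sized to the number of I/O operations or network connections you need in flight, rather than one thread per task.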
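As a sketch of both solutions, the snippet below sends many small jobs to a single `ProcessPoolExecutor`, so the worker processes are created once and reused, and caps a `ThreadPoolExecutor` at a fixed number of threads instead of starting one thread per task. The helpers `transform` and `fake_io`, the `chunksize` of 100, and the limit of 8 threads are illustrative assumptions; `time.sleep` stands in for real network or disk waits.

```python
import time
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def transform(x):
    # Small CPU-bound unit of work (hypothetical placeholder).
    return x * x + 1


def fake_io(item):
    # Simulated blocking I/O call; a real program might fetch a URL here.
    time.sleep(0.01)
    return item


if __name__ == '__main__':
    # One pool, created once: its worker processes are reused for all 1,000 jobs.
    # chunksize sends items to workers in batches, cutting per-item IPC overhead.
    with ProcessPoolExecutor() as pool:
        squares = list(pool.map(transform, range(1000), chunksize=100))

    # A bounded thread pool: at most 8 threads service 50 I/O tasks.
    with ThreadPoolExecutor(max_workers=8) as pool:
        fetched = list(pool.map(fake_io, range(50)))

    print(squares[:5], len(fetched))
```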

Balancing I/O and CPU Operations

In real-world scenarios, workloads are often mixed: part CPU-bound, part I/O-bound.

For example, a machine learning pipeline might:

- Read massive datasets (I/O)

- Train models (CPU)

- Write predictions to files (I/O)

Best Practice:

Use multithreading for data loading and I/O, and multiprocessing for model training.
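A minimal sketch of that split, assuming the data arrives as several independent chunks: a `ThreadPoolExecutor` overlaps the (simulated) reads, and a `ProcessPoolExecutor` runs the CPU-heavy step on each chunk. `load_chunk` and `heavy_compute` are hypothetical stand-ins for real file reads and model training.

```python
import time
from concurrent.futures import ThreadPoolExecutor, ProcessPoolExecutor


def load_chunk(chunk_id):
    # Hypothetical I/O step: stands in for reading one file or database page.
    time.sleep(0.05)
    return list(range(chunk_id * 1000, (chunk_id + 1) * 1000))


def heavy_compute(values):
    # Hypothetical CPU step: stands in for feature engineering or training.
    return sum(v * v for v in values)


if __name__ == '__main__':
    chunk_ids = range(4)

    # Stage 1 (I/O-bound): threads overlap the waiting time of the reads.
    with ThreadPoolExecutor(max_workers=4) as io_pool:
        chunks = list(io_pool.map(load_chunk, chunk_ids))

    # Stage 2 (CPU-bound): processes run the computation in parallel.
    with ProcessPoolExecutor(max_workers=4) as cpu_pool:
        partials = list(cpu_pool.map(heavy_compute, chunks))

    print(sum(partials))
```

Keeping the two stages in separate pools lets you size each one for its own bottleneck: thread count for concurrent I/O, process count for available cores.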

Memory Sharing Optimization

While multiprocessing isolates memory for safety, it can lead to excessive duplication.

- Use shared memory objects via multiprocessing.Value or Array.

- In Python 3.8+, use the shared_memory module to share NumPy arrays efficiently between processes.
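The snippet below sketches the `Value`/`Array` approach: four processes increment a shared counter and each fills one slot of a shared integer array. The `'i'` typecode (a C `int`), the array length, and the `worker` function are illustrative choices.

```python
from multiprocessing import Array, Process, Value


def worker(counter, squares, idx):
    # Value carries its own lock; hold it so the += is not interleaved.
    with counter.get_lock():
        counter.value += 1
    # Each worker writes only to its own slot of the shared array.
    squares[idx] = idx * idx


if __name__ == '__main__':
    counter = Value('i', 0)   # shared C int, initial value 0
    squares = Array('i', 4)   # shared array of 4 C ints, zero-initialized
    procs = [Process(target=worker, args=(counter, squares, i)) for i in range(4)]
    for p in procs:
        p.start()
    for p in procs:
        p.join()
    print(counter.value, list(squares))
```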

Thread-Safe Programming Techniques

When working with threads, ensuring thread safety is essential.

Common Threading Issues

- Race Conditions: When two threads modify shared data simultaneously.

- Deadlocks: When two threads wait on each other indefinitely.

- Starvation: When some threads never acquire resources.

Tools for Synchronization

- Locks (threading.Lock): Ensures one thread accesses a resource at a time.

- Semaphores (threading.Semaphore): Controls access for multiple threads.

- Events (threading.Event): Coordinates threads through signals.

- Queues (queue.Queue): Thread-safe way to share data.

Example:

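```python
import threading

lock = threading.Lock()
counter = 0

def increment():
    global counter
    # Only one thread at a time can hold the lock, so the
    # read-modify-write of counter is never interleaved.
    with lock:
        counter += 1

threads = [threading.Thread(target=increment) for _ in range(100)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)  # 100
```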

This ensures atomic updates and prevents race conditions.
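The other primitives follow a similar pattern. In the sketch below, assuming a hypothetical limit of two simultaneous users of a resource, `threading.Semaphore(2)` caps how many workers are inside the guarded section at once, and a `threading.Event` holds every worker until the main thread signals them to start. The `active`/`max_active` bookkeeping exists only to demonstrate the limit.

```python
import threading
import time

slots = threading.Semaphore(2)      # at most 2 threads in the guarded block
start_signal = threading.Event()    # workers wait until this is set
stats_lock = threading.Lock()
active = 0
max_active = 0


def worker():
    global active, max_active
    start_signal.wait()             # block until the main thread calls set()
    with slots:                     # acquire one of the 2 slots
        with stats_lock:
            active += 1
            max_active = max(max_active, active)
        time.sleep(0.05)            # simulated work on the limited resource
        with stats_lock:
            active -= 1


threads = [threading.Thread(target=worker) for _ in range(6)]
for t in threads:
    t.start()
start_signal.set()                  # release all waiting workers at once
for t in threads:
    t.join()

print(max_active)
```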

Inter-Process Communication (IPC)

When using multiprocessing, processes do not share memory, so they must communicate through Inter-Process Communication (IPC) mechanisms.

Queues and Pipes

Python’s multiprocessing.Queue class and multiprocessing.Pipe() function let processes send and receive (picklable) messages safely.

Example:

```python
from multiprocessing import Process, Queue

def worker(q):
    q.put('Data from process')

if __name__ == '__main__':
    q = Queue()
    p = Process(target=worker, args=(q,))
    p.start()
    print(q.get())  # blocks until the child puts an item
    p.join()
```
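The same exchange can be done with `Pipe`. A minimal sketch, assuming a simple request/response conversation between a parent and one child: `Pipe()` returns two connection objects, and each end can `send()` and `recv()` picklable objects. The `echo_upper` function and the `None` shutdown message are illustrative conventions, not part of the API.

```python
from multiprocessing import Pipe, Process


def echo_upper(conn):
    # Child side: reply to each message until the parent sends None.
    while True:
        msg = conn.recv()
        if msg is None:
            break
        conn.send(msg.upper())
    conn.close()


if __name__ == '__main__':
    parent_conn, child_conn = Pipe()   # duplex (two-way) by default
    p = Process(target=echo_upper, args=(child_conn,))
    p.start()
    replies = []
    for word in ['alpha', 'beta']:
        parent_conn.send(word)
        replies.append(parent_conn.recv())
    parent_conn.send(None)             # tell the child to stop
    p.join()
    print(replies)
```

A `Pipe` connects exactly two endpoints; a `Queue` is the more convenient choice when several processes need to put or get items.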

Managers

A Manager provides shared objects (like lists or dictionaries) that multiple processes can manipulate concurrently.

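```python
from multiprocessing import Manager, Process

def append_data(shared_list):
    shared_list.append(100)

if __name__ == '__main__':
    with Manager() as manager:
        # manager.list() returns a proxy; the real list lives in the
        # manager's server process, and children modify it through the proxy
        shared_list = manager.list()
        p = Process(target=append_data, args=(shared_list,))
        p.start()
        p.join()
        print(list(shared_list))  # [100]
```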

Asynchronous Alternatives: asyncio

Beyond threading and multiprocessing, Python’s asyncio library offers another concurrency model — asynchronous I/O.

- Designed for I/O-bound and high-level structured network code.

- Unlike threading, it runs coroutines on a single thread, scheduled by an event loop.

Example:

```python
import asyncio

async def fetch_data():
    print("Fetching...")
    await asyncio.sleep(1)  # non-blocking pause; the event loop can run other tasks
    print("Done!")

asyncio.run(fetch_data())
```

Key Insight:

- asyncio > threading for lightweight I/O operations

- threading > asyncio when dealing with blocking libraries

- multiprocessing > both for CPU-heavy operations
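A minimal sketch of the first two points, assuming Python 3.9+ for `asyncio.to_thread`: `asyncio.gather` runs several coroutines concurrently on one thread, and `asyncio.to_thread` moves a blocking call (here `time.sleep`, standing in for a synchronous library) into a worker thread so it does not freeze the event loop. The function names are hypothetical.

```python
import asyncio
import time


async def fetch(name, delay):
    # Non-blocking wait: the event loop runs other coroutines meanwhile.
    await asyncio.sleep(delay)
    return name


def blocking_call():
    # A synchronous, blocking function (e.g. from a library without async support).
    time.sleep(0.2)
    return 'blocking done'


async def main():
    start = time.perf_counter()
    results = await asyncio.gather(
        fetch('a', 0.2),
        fetch('b', 0.2),
        asyncio.to_thread(blocking_call),  # runs in the default thread pool
    )
    return results, time.perf_counter() - start


results, elapsed = asyncio.run(main())
print(results, f"{elapsed:.2f}s")
```

Because the three waits overlap, the elapsed time is close to a single 0.2-second wait rather than the sum of all three.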

Hybrid Concurrency Architectures

Modern systems often combine all three concurrency techniques:

| Type | Example Use |
|---|---|
| Multithreading | Concurrent I/O tasks like logging, file handling |
| Multiprocessing | Parallel computation for ML models |
| Asyncio | Non-blocking web requests |

Hybrid Example:

You could use:

- Asyncio for API requests

- Multithreading for handling responses concurrently

- Multiprocessing for heavy data transformations

This multi-layered design is common in frameworks like Dask, Ray, and Apache Airflow.
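One way to wire the asyncio and multiprocessing layers together is `loop.run_in_executor` with a `ProcessPoolExecutor`: coroutines handle the (simulated) requests, and CPU-heavy transformations are handed to worker processes without blocking the event loop. The `fake_request` and `transform` functions are hypothetical, and the threading layer from the list above is omitted to keep the sketch short.

```python
import asyncio
from concurrent.futures import ProcessPoolExecutor


async def fake_request(i):
    # Stand-in for an async HTTP call.
    await asyncio.sleep(0.1)
    return list(range(i * 10, i * 10 + 10))


def transform(payload):
    # CPU-heavy step; runs in a separate process, outside the event loop.
    return sum(x * x for x in payload)


async def pipeline(n_requests):
    loop = asyncio.get_running_loop()
    with ProcessPoolExecutor() as pool:
        # 1) Issue all requests concurrently on the event loop.
        payloads = await asyncio.gather(*(fake_request(i) for i in range(n_requests)))
        # 2) Offload each transformation to the process pool and await the results.
        return await asyncio.gather(
            *(loop.run_in_executor(pool, transform, p) for p in payloads)
        )


if __name__ == '__main__':
    results = asyncio.run(pipeline(4))
    print(results)
```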

Real-World Applications

Web Servers

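Web frameworks like Flask and FastAPI can handle concurrent requests with threads (Flask’s development server is threaded, and FastAPI runs synchronous endpoints in a thread pool), while servers such as Gunicorn, or Uvicorn with multiple workers, run several processes for scalability.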

Data Science and Machine Learning

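scikit-learn parallelizes many estimators through joblib (the n_jobs parameter), which can use worker processes or threads, while XGBoost trains in multithreaded native code that is not constrained by the GIL.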

Big Data Systems

Tools like Dask, Ray, and Apache Spark (PySpark) abstract away threading and multiprocessing to provide distributed computation.

Computer Vision and AI

When processing video streams, multithreading can manage frame capture and buffering, while multiprocessing handles deep learning inference.

Benchmarking and Profiling

Understanding performance differences requires measurement.

Using time and cProfile

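```python
import time
from multiprocessing import Pool

def cube(n):
    return n**3

if __name__ == '__main__':
    # perf_counter is a high-resolution clock intended for measuring durations
    start = time.perf_counter()
    with Pool(4) as p:
        p.map(cube, range(100000))
    print("Time:", time.perf_counter() - start)
```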
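For the `cProfile` side, a minimal sketch using only the standard library (`cProfile` and `pstats`): the profiler records call counts and cumulative times, which helps locate the hot spot before deciding whether threads or processes will help. `cube_all` is a hypothetical function. Note that `cProfile` only sees work done in the current process, so it is simplest to profile the worker function directly rather than through a `Pool`.

```python
import cProfile
import io
import pstats


def cube(n):
    return n ** 3


def cube_all(limit):
    return [cube(n) for n in range(limit)]


profiler = cProfile.Profile()
profiler.enable()
values = cube_all(100_000)
profiler.disable()

# Write the 5 entries with the highest cumulative time into a string buffer.
buffer = io.StringIO()
stats = pstats.Stats(profiler, stream=buffer).sort_stats('cumulative')
stats.print_stats(5)
report = buffer.getvalue()
print(report)
```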

Memory Profiling

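Use libraries like psutil or memory_profiler to monitor the memory usage of each process. Multiprocessing generally consumes more memory because every worker has its own interpreter and its own copy of the data it receives, so limit pool sizes and avoid sending large objects to workers unnecessarily.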

Benchmark Summary

| Task Type | Best Approach |
|---|---|
| File I/O, Network Requests | Multithreading |
| Numerical Computation | Multiprocessing |
| Mixed (I/O + CPU) | Hybrid (Thread + Process) |
| High-concurrency Networking | asyncio |

Debugging and Logging

Concurrency adds complexity to debugging. Use these best practices:

- Use threading.current_thread().name to identify thread logs.

- Use multiprocessing.get_logger() to track child process logs.

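- Handle exceptions explicitly. An unhandled exception in a threading.Thread is reported through threading.excepthook and does not propagate to the thread that calls join(), and a failed multiprocessing.Process only shows up as a non-zero exitcode. Wrap the target’s body in try/except, or use concurrent.futures, whose Future.result() re-raises the worker’s exception in the caller.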
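As a sketch of these practices with the standard library only: the logging format below includes `%(threadName)s` so every line identifies its thread (for processes, `%(processName)s` plays the same role), threads get readable names through `thread_name_prefix`, and an exception raised inside a worker is re-raised by `future.result()` in the main thread, where it can be handled. The failing `task` and the `'worker'` prefix are illustrative.

```python
import logging
from concurrent.futures import ThreadPoolExecutor

logging.basicConfig(
    level=logging.INFO,
    format='%(asctime)s [%(threadName)s] %(levelname)s %(message)s',
)
log = logging.getLogger(__name__)


def task(n):
    log.info('processing %d', n)
    if n == 3:
        raise ValueError(f'bad input: {n}')  # simulated failure
    return n * 10


results, errors = {}, {}
with ThreadPoolExecutor(max_workers=2, thread_name_prefix='worker') as executor:
    futures = {executor.submit(task, n): n for n in range(5)}
    for future, n in futures.items():
        try:
            # result() re-raises any exception the task raised in its thread.
            results[n] = future.result()
        except ValueError as exc:
            errors[n] = str(exc)
            log.error('task %d failed: %s', n, exc)

print(results, errors)
```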

Design Patterns for Concurrent Systems

Common concurrency patterns include:

- Producer-Consumer: Threads or processes share a queue; one produces, another consumes.

- Worker Pool: A fixed number of threads or processes handle a stream of tasks.

- Map-Reduce: Data is mapped (distributed), processed in parallel, then reduced (aggregated).

Example of a simple worker pool:

```python
from concurrent.futures import ThreadPoolExecutor

def task(n):
    return n * 2

with ThreadPoolExecutor(max_workers=4) as executor:
    # map returns results in input order
    results = executor.map(task, range(10))

print(list(results))  # [0, 2, 4, 6, 8, 10, 12, 14, 16, 18]
```
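The producer-consumer pattern from the list above can be sketched with `queue.Queue`, which handles locking internally. Assumptions in this sketch: one producer, two consumers, and one `None` sentinel per consumer to signal shutdown (a common convention, not a requirement of the API).

```python
import queue
import threading

tasks = queue.Queue(maxsize=10)   # bounded: the producer blocks when it is full
results = []
results_lock = threading.Lock()
NUM_CONSUMERS = 2


def producer(n_items):
    for i in range(n_items):
        tasks.put(i)              # blocks while the queue is full
    for _ in range(NUM_CONSUMERS):
        tasks.put(None)           # one shutdown sentinel per consumer


def consumer():
    while True:
        item = tasks.get()        # blocks until an item is available
        if item is None:
            break
        with results_lock:
            results.append(item * item)


threads = [threading.Thread(target=producer, args=(20,))]
threads += [threading.Thread(target=consumer) for _ in range(NUM_CONSUMERS)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(sorted(results))
```

The bounded `maxsize` provides back-pressure: a fast producer cannot run arbitrarily far ahead of slow consumers.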

Future of Python Concurrency

Python’s evolution is addressing GIL-related challenges:

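- PEP 703 (Making the Global Interpreter Lock Optional in CPython): Python 3.13 introduced an experimental free-threaded build (often installed as python3.13t) that can run without the GIL, allowing threads to execute Python code in parallel.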

- Frameworks like Ray, Dask, and Modin already abstract multiprocessing complexities for large-scale workloads.

If free-threaded builds become the default, CPU-bound threads will be able to run in parallel, blurring the boundary between threads and processes.
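To check which kind of interpreter a program is running on, a small sketch like the following can help. It relies on `sysconfig.get_config_var('Py_GIL_DISABLED')`, which is set on free-threaded builds, and `sys._is_gil_enabled()`, a private helper added in Python 3.13; on older versions that helper does not exist, so the sketch assumes the GIL is enabled.

```python
import sys
import sysconfig


def gil_status():
    """Return (free_threaded_build, gil_enabled_now)."""
    # 1 on free-threaded builds (e.g. python3.13t); None or 0 otherwise.
    free_threaded_build = bool(sysconfig.get_config_var('Py_GIL_DISABLED'))
    # sys._is_gil_enabled() exists from Python 3.13; older versions always have a GIL.
    is_gil_enabled = getattr(sys, '_is_gil_enabled', None)
    gil_enabled = is_gil_enabled() if is_gil_enabled is not None else True
    return free_threaded_build, gil_enabled


build, enabled = gil_status()
print(f'free-threaded build: {build}, GIL enabled: {enabled}')
```

Even on a free-threaded build the GIL can be switched back on at runtime (for example with the `PYTHON_GIL=1` environment variable), which is why the sketch reports both values.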

Integrating Multithreading and Multiprocessing Together

- Combine threading for I/O-bound subtasks inside CPU-bound processes

- Useful in pipelines that combine web scraping with data analysis

Example:

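```python
from multiprocessing import Process
import threading

def io_task(n):
    print(f"Processing {n}")

def process_task():
    # Inside the child process, run I/O-style subtasks on threads
    threads = []
    for i in range(3):
        t = threading.Thread(target=io_task, args=(i,))
        t.start()
        threads.append(t)
    for t in threads:
        t.join()

# Guard required where child processes are started with "spawn"
if __name__ == '__main__':
    process = Process(target=process_task)
    process.start()
    process.join()
```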

Conclusion

Choosing between Python multithreading vs multiprocessing depends on the nature of your tasks:

- Use multithreading for I/O-bound tasks, where most of the time is spent waiting on networks, disks, or databases.

- Use multiprocessing for CPU-bound tasks to leverage multiple cores.

For complex applications, combining both approaches can optimize performance, reduce execution time, and maintain resource efficiency.

FAQs

Which is better, multiprocessing or multithreading in Python?

Neither is better in general; it depends on the workload. Multithreading is usually the better choice for I/O-bound tasks such as network requests and file operations, because threads are lightweight and share memory. Multiprocessing is better for CPU-bound tasks, because each process has its own GIL and can run on a separate CPU core.

What is the difference between multithreading and multiprocessing in Python?

In Python, multithreading allows multiple threads to run within the same process and share memory, making it ideal for I/O-bound tasks. Multiprocessing, on the other hand, uses multiple processes with separate memory spaces to perform true parallel execution, which is better suited for CPU-bound tasks.

Does Python support multithreading?

Yes. Python supports multithreading through the threading module, but in the standard CPython interpreter the Global Interpreter Lock (GIL) allows only one thread to execute Python bytecode at a time. As a result, threads do not run CPU-bound Python code in parallel, though multithreading still works well for I/O-bound operations.

Can I use both multiprocessing and multithreading in Python?

Yes, you can use both multiprocessing and multithreading together in Python. This hybrid approach is useful when you want to handle I/O-bound tasks with threads and CPU-bound tasks with processes, leveraging concurrency and parallelism efficiently in the same program.

What is the alternative to multithreading in Python?

The main alternative to multithreading in Python is multiprocessing, which creates separate processes with their own memory space. This bypasses the Global Interpreter Lock (GIL) and allows true parallel execution of CPU-bound tasks. Other alternatives include asynchronous programming (asyncio) for I/O-bound operations.