K Nearest Neighbor (KNN) is one of the simplest and most widely used machine learning algorithms for classification and regression tasks. It is a non-parametric, instance-based learning method that works on the principle of similarity: a new data point is predicted from the training points closest to it.

Unlike parametric models such as linear or logistic regression, KNN makes no assumptions about the underlying data distribution or the form of the decision function. Instead, it relies on distance measures to make predictions.

Why is KNN Important in Machine Learning?

KNN plays a significant role in machine learning because:

- It is easy to implement and understand.

- Suitable for small to medium-sized datasets.

- Works for both classification and regression.

- Often used as a benchmark model before implementing complex algorithms.

- Simple Yet Powerful Algorithm: KNN is one of the easiest algorithms to understand and implement. It does not require fitting a parametric model, as linear regression or SVM do.

- Non-Parametric Nature: KNN does not assume any specific distribution for the data. This helps with real-world data, which rarely follows a normal distribution.

- Versatile (Works for Classification and Regression): KNN can be applied to:

- Classification tasks (e.g., email spam detection, disease prediction).

- Regression tasks (e.g., predicting house prices).

This flexibility makes it a go-to algorithm in many domains.

- Adaptable to Different Data Types: KNN can work with numerical, categorical, and mixed data types, provided an appropriate distance metric is used (e.g., Hamming distance for categorical features).

- No Training Phase (Lazy Learner): KNN does not build a model during training; it stores the dataset and performs its computation at prediction time. Benefit: training is extremely fast, which helps when data is updated frequently.

- Handles Multi-Class Problems Easily: Unlike algorithms such as binary SVMs, which need one-vs-rest or one-vs-one schemes for multi-class classification, KNN handles multiple classes naturally through its neighbor vote.

- Intuitive and Explainable: The algorithm’s logic is easy to explain: “An instance is classified based on its closest neighbors.” This interpretability is valuable in domains like healthcare, where model transparency is required.

- High Accuracy with Proper Tuning: With a well-chosen K value, distance metric, and feature scaling, KNN can achieve competitive accuracy on many problems.

- Works Well for Small Datasets: KNN is well suited to datasets small enough to store in memory and search quickly.

- Effective in Anomaly Detection: Points that lie far from their nearest neighbors can be flagged as outliers or anomalies.

- Foundation for Advanced Concepts: Many advanced ML and AI techniques, such as nearest-neighbor search over learned embeddings, build on the similarity principle used in KNN.

- Useful in Real-Time Applications: When the dataset is modest or an efficient neighbor index is used, KNN can support on-the-fly decisions, such as:

1) Real-time content recommendations.

2) Spam email filtering.

- Works Without Heavy Feature Engineering in Many Cases: Feature scaling and encoding of categorical variables are essential, but beyond that KNN often needs little feature engineering.

- Can Be Enhanced with Weighted Voting: Weighted KNN gives more importance to closer neighbors, improving accuracy for certain use cases.

- Easy Integration with Other Algorithms: KNN is often used as a baseline model for comparison or as a member of ensemble methods.

Historical Background of KNN

- Origin: KNN is one of the oldest and simplest machine learning algorithms. Its development traces back to the early 1950s, long before the modern era of artificial intelligence, and it has since been a cornerstone of pattern recognition.

- It remains popular because of its simplicity and non-parametric nature.

- The concept of nearest neighbor classification emerged in statistics and pattern recognition.

- In the early decades, KNN was computationally expensive because it required storing and searching large datasets.

- In recent years, KNN has become a baseline algorithm in machine learning projects.

- It is widely taught in introductory ML courses due to its simplicity and intuitive approach.

- Advanced techniques such as KD-Trees, Ball Trees, and Approximate Nearest Neighbor (ANN) have optimized KNN for big data.

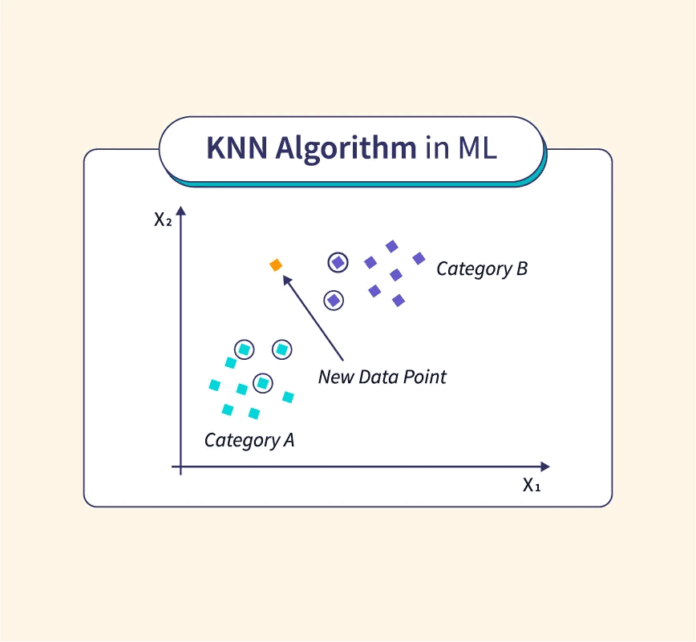

How Does K Nearest Neighbor Work?

Here’s how the KNN algorithm works:

- Choose the number K (number of nearest neighbors).

- Calculate the distance between the new data point and all existing data points.

- Select the K closest neighbors.

- Perform majority voting for classification or take the average for regression.

- Assign the class or value based on the result.
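
The steps above can be sketched from scratch in a few lines of Python. This is a minimal illustration, not a production implementation: it assumes NumPy, uses Euclidean distance, handles one query point at a time, and the function name `knn_predict` and the toy data are hypothetical.

```python
from collections import Counter

import numpy as np


def knn_predict(X_train, y_train, x_query, k=3, task="classification"):
    """Predict a label (or value) for one query point with plain KNN.

    Euclidean distance; majority vote for classification,
    mean of the neighbors' targets for regression.
    """
    X_train = np.asarray(X_train, dtype=float)
    y_train = np.asarray(y_train)
    x_query = np.asarray(x_query, dtype=float)

    # Distance from the query to every stored training point
    distances = np.sqrt(((X_train - x_query) ** 2).sum(axis=1))

    # Indices of the k closest neighbors
    nearest = np.argsort(distances)[:k]

    # Majority vote (classification) or average (regression)
    if task == "classification":
        return Counter(y_train[nearest].tolist()).most_common(1)[0][0]
    return float(y_train[nearest].mean())


# Toy 2-D data (made up for illustration)
X = [[1, 1], [1, 2], [2, 1], [6, 6], [6, 7], [7, 6]]
labels = ["A", "A", "A", "B", "B", "B"]
values = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]

print(knn_predict(X, labels, [1.5, 1.5], k=3))                     # A
print(knn_predict(X, values, [6.5, 6.5], k=3, task="regression"))  # 11.0
```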

Example:

Suppose we want to predict whether a person likes a certain movie based on their age and interests. The algorithm will:

- Look for K similar people.

- Check what movies they liked.

- Predict based on the majority.

Core Principles Behind KNN

- Proximity matters: Only the K closest points influence a prediction; in weighted KNN, closer points also count more.

- Lazy learning: KNN does not build a model during training; it stores the dataset and makes decisions during prediction.

- Non-parametric: No assumption about data distribution.

Understanding the Parameter ‘K’

The value of K is critical:

- Small K → Sensitive to noise, high variance.

- Large K → Higher bias, smoother boundaries.
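
To see this trade-off in practice, the sketch below compares training and test accuracy for several K values on the Iris dataset with scikit-learn. The K values and the 70/30 split are arbitrary choices for illustration. With K = 1 each training point is its own nearest neighbor, so training accuracy is typically perfect while the test score shows how well the model generalizes.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y
)

results = {}
for k in [1, 5, 15, 45]:
    model = make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=k))
    model.fit(X_train, y_train)
    train_acc = model.score(X_train, y_train)
    test_acc = model.score(X_test, y_test)
    results[k] = (train_acc, test_acc)
    # A large gap between train and test accuracy signals overfitting (high variance);
    # both scores falling together signals underfitting (high bias).
    print(f"K={k:>2}  train={train_acc:.3f}  test={test_acc:.3f}")
```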

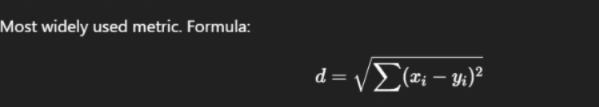

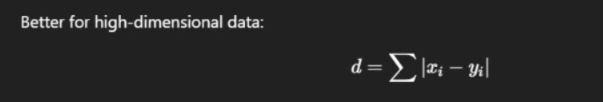

Distance Metrics Used in KNN

Choosing the right distance metric is essential. Common metrics include:

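Euclidean Distance

The straight-line distance: the square root of the sum of squared coordinate differences. It is the most common default.

Manhattan Distance

The sum of absolute coordinate differences ("city block" distance).

Minkowski Distance

Generalized form of Euclidean and Manhattan, with a power parameter p: p = 1 gives Manhattan and p = 2 gives Euclidean.

Hamming Distance

The number (or fraction) of positions at which two vectors differ. Used for categorical variables.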
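
The four metrics can be written as short NumPy functions. This is an illustrative sketch; the function names are hypothetical, and `hamming` returns the fraction of differing positions (the same convention as SciPy's `scipy.spatial.distance.hamming`). In scikit-learn the metric is normally chosen with the `metric` (and, for Minkowski, `p`) parameter of `KNeighborsClassifier` instead.

```python
import numpy as np


def euclidean(a, b):
    # sqrt(sum_i (a_i - b_i)^2): straight-line distance
    return float(np.sqrt(np.sum((np.asarray(a, float) - np.asarray(b, float)) ** 2)))


def manhattan(a, b):
    # sum_i |a_i - b_i|: "city block" distance
    return float(np.sum(np.abs(np.asarray(a, float) - np.asarray(b, float))))


def minkowski(a, b, p=2):
    # (sum_i |a_i - b_i|^p)^(1/p); p=1 -> Manhattan, p=2 -> Euclidean
    diff = np.abs(np.asarray(a, float) - np.asarray(b, float))
    return float(np.sum(diff ** p) ** (1.0 / p))


def hamming(a, b):
    # Fraction of positions where the (categorical) values differ
    a, b = np.asarray(a), np.asarray(b)
    return float(np.mean(a != b))


p1, p2 = [0, 0], [3, 4]
print(euclidean(p1, p2))        # 5.0
print(manhattan(p1, p2))        # 7.0
print(minkowski(p1, p2, p=1))   # 7.0
print(minkowski(p1, p2, p=2))   # 5.0
print(hamming(["red", "S", "cotton"], ["red", "M", "wool"]))
```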

Advantages of K Nearest Neighbor

- Simple and intuitive.

- No training phase → Quick implementation.

- Works for both classification and regression.

- Handles multi-class problems well.

Limitations of KNN Algorithm

- Slow for large datasets (since prediction requires comparison with all points).

- Sensitive to irrelevant features and feature scaling.

- Struggles with high-dimensional data (curse of dimensionality).

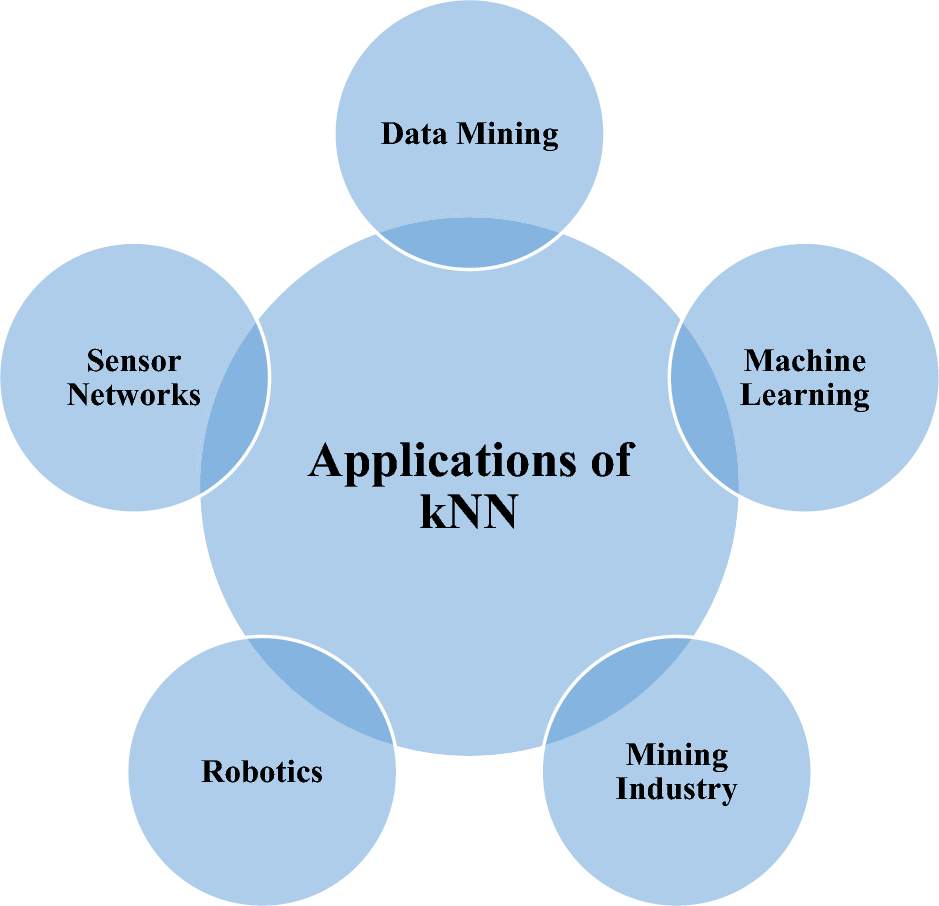

Real-Time Applications of KNN

- Recommendation Systems – Suggesting products based on user similarity.

- Medical Diagnosis – Predicting diseases from patient data.

- Image Recognition – Classifying objects in pictures.

- Financial Sector – Credit scoring and fraud detection.

- Customer Segmentation – Grouping customers based on purchasing habits.

KNN in Action: Real-World Examples

- Streaming platforms such as Netflix can use neighborhood-based (KNN-style) collaborative filtering to recommend movies based on similar users' viewing patterns.

- Healthcare: Classifying cancerous vs non-cancerous cells.

- Retail: Predicting customer purchase behavior.

Step-by-Step Guide to Implement KNN in Python

Using Scikit-learn

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.neighbors import KNeighborsClassifier
from sklearn.metrics import accuracy_score

# Load dataset
iris = load_iris()
X, y = iris.data, iris.target

# Split data
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

# Feature scaling
scaler = StandardScaler()
X_train = scaler.fit_transform(X_train)
X_test = scaler.transform(X_test)

# Initialize KNN
knn = KNeighborsClassifier(n_neighbors=3)

# Train model
knn.fit(X_train, y_train)

# Predictions
y_pred = knn.predict(X_test)

# Accuracy
print("Accuracy:", accuracy_score(y_test, y_pred))
```

Hyperparameter Tuning in KNN

- Choosing optimal K using Grid Search or Cross Validation.

- Distance metric tuning.

- Weight parameter: uniform vs distance-based weighting.
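
A common way to tune all three at once is scikit-learn's `GridSearchCV` over a pipeline. The grid below (odd K from 1 to 29, two weighting schemes, two metrics) is an arbitrary starting point, not a recommendation.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# Scaling lives inside the pipeline, so each CV fold is scaled using only its training part
pipe = Pipeline([
    ("scale", StandardScaler()),
    ("knn", KNeighborsClassifier()),
])

param_grid = {
    "knn__n_neighbors": list(range(1, 31, 2)),  # odd K avoids ties in two-class votes
    "knn__weights": ["uniform", "distance"],
    "knn__metric": ["euclidean", "manhattan"],
}

search = GridSearchCV(pipe, param_grid, cv=5, scoring="accuracy")
search.fit(X, y)

print("Best parameters:", search.best_params_)
print(f"Best CV accuracy: {search.best_score_:.3f}")
```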

KNN vs Other Algorithms

| Feature | KNN | Decision Tree | SVM |
|---|---|---|---|
| Model Type | Instance-based | Tree-based | Margin-based |
| Training Speed | Fast | Moderate | Slow |
| Prediction Speed | Slow | Fast | Fast |

Best Practices When Using KNN

- Always normalize or scale features.

- Remove irrelevant features.

- Use dimensionality reduction (PCA) for large feature sets.
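
These practices can be combined in a single scikit-learn pipeline. This sketch uses the built-in handwritten digits dataset (64 pixel features) and keeps 20 principal components; both the dataset and `n_components=20` are assumptions chosen for illustration, and in practice the number of components would be tuned like K.

```python
from sklearn.datasets import load_digits
from sklearn.decomposition import PCA
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# 64 pixel features per image: a fairly wide dataset for KNN
X, y = load_digits(return_X_y=True)

model = make_pipeline(
    StandardScaler(),        # put every feature on a comparable scale
    PCA(n_components=20),    # project onto 20 components (arbitrary choice here)
    KNeighborsClassifier(n_neighbors=5),
)

scores = cross_val_score(model, X, y, cv=5)
print(f"Mean CV accuracy with PCA(20): {scores.mean():.3f}")
```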

Mathematical Intuition Behind KNN

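KNN has no training objective; its behavior follows directly from the prediction rule and the distance metric.

- Decision Boundary:

KNN creates non-linear, piecewise boundaries, unlike linear classifiers whose boundary is a single hyperplane. Small K values produce jagged boundaries that follow individual points; larger K values produce smoother boundaries.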
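
The prediction rule can be written compactly. In this formulation, N_K(x) denotes the set of the K training points closest to x under the chosen distance d (notation introduced here for illustration), y_i are the training labels or targets, and c ranges over the classes:

```latex
\mathcal{N}_K(x) = \{\, i : x_i \text{ is one of the } K \text{ training points with smallest } d(x, x_i) \,\}

\hat{y}_{\text{class}}(x) = \arg\max_{c} \sum_{i \in \mathcal{N}_K(x)} \mathbb{1}[\, y_i = c \,]

\hat{y}_{\text{reg}}(x) = \frac{1}{K} \sum_{i \in \mathcal{N}_K(x)} y_i
```

Because the vote changes only where the neighbor set N_K(x) changes, the boundary is piecewise; for K = 1 the decision regions are unions of Voronoi cells around the training points.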

Impact of Feature Scaling

- Why is scaling important?

- KNN relies on distance, so features on different scales distort the results.

- Example:

If one feature ranges from 0-1000 and another from 0-1, the large scale dominates distance calculation.
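
A small numeric sketch makes the effect concrete. The points are hypothetical, and min-max scaling (`MinMaxScaler`) is used here; standardization (`StandardScaler`) has the same qualitative effect. Before scaling, point B looks closer to the query because only the large-range feature matters; after scaling, point C is closer.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Hypothetical points: feature 0 spans 0-1000, feature 1 spans 0-1
X = np.array([
    [100.0, 0.10],   # q: the query point
    [110.0, 0.90],   # B: close in feature 0, far in feature 1
    [400.0, 0.12],   # C: far in feature 0, close in feature 1
    [1000.0, 0.00],  # pins the range of feature 0
    [0.0, 1.00],     # pins the range of feature 1
])


def dist(a, b):
    """Euclidean distance between two points."""
    return float(np.linalg.norm(a - b))


raw_b, raw_c = dist(X[0], X[1]), dist(X[0], X[2])

X_scaled = MinMaxScaler().fit_transform(X)  # rescale each feature to [0, 1]
scaled_b, scaled_c = dist(X_scaled[0], X_scaled[1]), dist(X_scaled[0], X_scaled[2])

print(f"raw:    d(q,B)={raw_b:.3f}  d(q,C)={raw_c:.3f}")        # B looks nearer
print(f"scaled: d(q,B)={scaled_b:.3f}  d(q,C)={scaled_c:.3f}")  # C is nearer
```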

Handling Missing Data in KNN

- Techniques:

- Imputation (mean/median)

- Dropping incomplete rows

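- Why it matters: a distance cannot be computed when a coordinate is missing (any arithmetic with NaN yields NaN), so neighbors cannot be ranked and gaps must be handled before fitting.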
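
The sketch below shows both the problem and one fix under simple assumptions: hypothetical data with `np.nan` gaps, median imputation via scikit-learn's `SimpleImputer`, then scaling and KNN in one pipeline. Median imputation is crude when classes form separate clusters; scikit-learn's `KNNImputer`, which fills gaps from neighboring rows, is one alternative.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical data with gaps (np.nan marks a missing value)
X = np.array([
    [1.0, 2.0],
    [1.5, np.nan],
    [np.nan, 1.8],
    [8.0, 8.5],
    [8.2, np.nan],
    [7.9, 8.1],
])
y = np.array([0, 0, 0, 1, 1, 1])

# A distance involving a NaN coordinate is itself NaN
print(np.linalg.norm(X[0] - X[1]))  # nan

model = make_pipeline(
    SimpleImputer(strategy="median"),  # fill each gap with its column median
    StandardScaler(),
    KNeighborsClassifier(n_neighbors=3),
)
model.fit(X, y)
print(model.predict([[1.2, np.nan], [8.1, 8.0]]))
```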

Weighted KNN

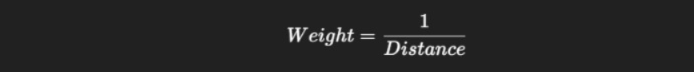

- Instead of simple majority voting, assign weights inversely proportional to distance.

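- Example formula: each of the K neighbors gets weight w_i = 1 / d(x, x_i), and the predicted class is the one whose neighbors have the largest total weight.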

- Improves performance when closer neighbors are more relevant.
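
A from-scratch sketch of inverse-distance voting, next to scikit-learn's built-in `weights="distance"` option. The function name `weighted_knn_predict`, the small `eps` guard, and the 1-D data are hypothetical choices for illustration; the data are arranged so that uniform and weighted voting disagree.

```python
from collections import defaultdict

import numpy as np
from sklearn.neighbors import KNeighborsClassifier


def weighted_knn_predict(X_train, y_train, x_query, k=3, eps=1e-12):
    """Distance-weighted KNN vote: each of the k nearest neighbors adds 1/d to its class."""
    X_train = np.asarray(X_train, dtype=float)
    d = np.linalg.norm(X_train - np.asarray(x_query, dtype=float), axis=1)
    nearest = np.argsort(d)[:k]
    scores = defaultdict(float)
    for i in nearest:
        scores[y_train[i]] += 1.0 / (d[i] + eps)  # eps guards against d = 0
    return max(scores, key=scores.get)


# Hypothetical 1-D data: one very close "A", two slightly farther "B"s
X = [[0.0], [1.0], [1.1], [5.0]]
y = ["A", "B", "B", "A"]
query = [[0.1]]

print(weighted_knn_predict(X, y, query[0], k=3))  # A (weight 10 vs. about 2.1)

# scikit-learn exposes the same idea through the weights parameter
for w in ["uniform", "distance"]:
    clf = KNeighborsClassifier(n_neighbors=3, weights=w).fit(X, y)
    print(w, clf.predict(query)[0])  # uniform -> B (2 votes to 1), distance -> A
```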

KNN in High-Dimensional Space

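- Curse of Dimensionality:

As the number of dimensions grows, the distances from a query to its nearest and farthest points become similar, so "nearest" carries less information.

- Solutions:

- Dimensionality reduction (PCA; t-SNE is mainly a visualization tool and, in scikit-learn, cannot transform new points)

- Feature selection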
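
A quick simulation illustrates why distance loses meaning: for uniformly random points, the gap between the farthest and nearest neighbor shrinks relative to the nearest distance as dimensions grow. The point count, the dimensions tried, and the "relative contrast" ratio are illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(0)


def relative_contrast(n_dims, n_points=500):
    """(farthest - nearest) / nearest distance from one random query to random points."""
    points = rng.random((n_points, n_dims))  # uniform in the unit hypercube
    query = rng.random(n_dims)
    d = np.linalg.norm(points - query, axis=1)
    return (d.max() - d.min()) / d.min()


contrast = {dims: relative_contrast(dims) for dims in [2, 10, 100, 1000]}
for dims, value in contrast.items():
    print(f"{dims:>4} dimensions: relative contrast = {value:.2f}")
```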

KNN for Regression

- KNN predicts a continuous value by averaging the target values of the K nearest neighbors (optionally weighting closer neighbors more).

- Example: Predicting house prices based on similar properties.
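
A minimal regression sketch with scikit-learn's `KNeighborsRegressor`. The house data (area, rooms, price) are made up, and the features are left unscaled only to keep the arithmetic easy to check by hand; in a real model they should be scaled.

```python
import numpy as np
from sklearn.neighbors import KNeighborsRegressor

# Hypothetical properties: [area in square metres, number of rooms] -> price in thousands
X = np.array([[50, 2], [60, 2], [65, 3], [80, 3], [120, 4], [130, 5]])
y = np.array([150.0, 170.0, 180.0, 220.0, 320.0, 350.0])

reg = KNeighborsRegressor(n_neighbors=3)  # default weights="uniform" -> plain average
reg.fit(X, y)

new_house = [[70, 3]]
pred = reg.predict(new_house)[0]

# Check by hand: the prediction is the mean price of the 3 nearest properties
dist, idx = reg.kneighbors(new_house)
print("Neighbor prices:", y[idx[0]])
print("Predicted price:", pred)  # 190.0 = (180 + 220 + 170) / 3
```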

KNN in Classification

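- KNN handles binary problems (e.g., spam vs. not spam) and multi-class problems (e.g., the three Iris species) the same way: by majority vote among the K neighbors.

- A confusion matrix summarizes the results: each row is a true class, each column a predicted class, and the diagonal counts correct predictions.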
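
A multi-class sketch on Iris (three species) with a confusion matrix from `sklearn.metrics.confusion_matrix`. The split and K = 5 are arbitrary choices for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)  # 3 classes -> multi-class problem
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y
)

model = make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5))
model.fit(X_train, y_train)
y_pred = model.predict(X_test)

# Rows = true class, columns = predicted class; the diagonal counts correct predictions
cm = confusion_matrix(y_test, y_pred)
print(cm)
print("Correct:", cm.trace(), "of", cm.sum())
```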

Evaluation Metrics for KNN

- Accuracy

- Precision, Recall, F1-Score

- ROC Curve and AUC

- All of these are available in scikit-learn's sklearn.metrics module; ROC AUC needs class probabilities or scores rather than hard labels.
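
A binary-classification sketch computing these metrics on scikit-learn's built-in breast cancer dataset. K = 7 and the 75/25 split are assumptions for illustration. With the default uniform weights, `predict_proba` returns the fraction of the K neighbors in each class, which serves as the score for ROC AUC; precision, recall, and F1 treat label 1 (benign) as the positive class by default.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.metrics import (accuracy_score, classification_report, f1_score,
                             precision_score, recall_score, roc_auc_score)
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)  # binary: 0 = malignant, 1 = benign
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

model = make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=7))
model.fit(X_train, y_train)

y_pred = model.predict(X_test)
# ROC AUC needs a score, not a hard label: fraction of neighbors voting for class 1
y_score = model.predict_proba(X_test)[:, 1]

metrics = {
    "accuracy": accuracy_score(y_test, y_pred),
    "precision": precision_score(y_test, y_pred),
    "recall": recall_score(y_test, y_pred),
    "f1": f1_score(y_test, y_pred),
    "roc_auc": roc_auc_score(y_test, y_score),
}
for name, value in metrics.items():
    print(f"{name:>9}: {value:.3f}")

print(classification_report(y_test, y_pred, target_names=["malignant", "benign"]))
```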

KNN with Cross-Validation

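- A single train-test split gives a score that depends on which points happen to land in the test set, which is especially noisy for small datasets.

- k-fold cross-validation splits the data into k folds, trains on k-1 of them and tests on the remaining one, rotating k times and averaging the scores. (This k is unrelated to the K in KNN.)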
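
A 5-fold cross-validation sketch with scikit-learn's `cross_val_score`. Stratified folds keep class proportions similar across folds; the fold count and K = 5 are illustrative.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# The scaler is re-fitted on each training fold, so the test fold never leaks into it
model = make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5))

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)
scores = cross_val_score(model, X, y, cv=cv, scoring="accuracy")

print("Fold accuracies:", scores.round(3))
print(f"Mean = {scores.mean():.3f}, std = {scores.std():.3f}")
```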

Real-Time Advanced Use Cases

- Cybersecurity: Intrusion detection using KNN.

- Healthcare: Predicting heart disease risk.

- Finance: Fraud detection based on transaction patterns.

- Social Media: Classifying fake accounts.

- IoT & Edge Devices: KNN in sensor-based anomaly detection.

KNN in Big Data Era

- Problems:

- High computation for large datasets.

- Memory issues (storing entire dataset).

- Solutions:

- KD-Trees

- Ball Trees

- Approximate Nearest Neighbors (ANN)
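
In scikit-learn, exact tree-based search is selected with the `algorithm` parameter (`"brute"`, `"kd_tree"`, `"ball_tree"`, or the default `"auto"`). The sketch below, on hypothetical random 3-D data, checks that all three return the same neighbors; approximate nearest-neighbor search is provided by separate libraries and is not shown.

```python
import numpy as np
from sklearn.neighbors import NearestNeighbors

rng = np.random.default_rng(42)
X = rng.random((5000, 3))      # hypothetical low-dimensional data
queries = rng.random((10, 3))

results = {}
for algorithm in ["brute", "kd_tree", "ball_tree"]:
    nn = NearestNeighbors(n_neighbors=5, algorithm=algorithm).fit(X)
    distances, indices = nn.kneighbors(queries)
    results[algorithm] = indices

# Exact tree structures return the same neighbors as brute force;
# they typically answer queries faster on large, low-dimensional data.
print(all(np.array_equal(results["brute"], results[a]) for a in ["kd_tree", "ball_tree"]))
```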

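Comparison Table with Example Metrics

The table below gives a rough comparison. The Iris accuracies are indicative only; exact figures depend on the train-test split, scaling, and hyperparameters.

| Algorithm | Training Time | Prediction Time | Accuracy on Iris |
|---|---|---|---|
| KNN | Low | High | 97% |
| SVM | Medium | Medium | 96% |
| Decision Tree | Medium | Low | 94% |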

Best Tools & Libraries for KNN

- Scikit-learn (Python)

- mlpack (C++)

- caret (R)

- TensorFlow KNN API

- H2O.ai

Common Mistakes to Avoid

- Not scaling features.

- Choosing arbitrary K without validation.

- Using KNN on huge datasets without optimization.

- Ignoring categorical variable encoding.

Advanced Optimizations

- Dimensionality Reduction before KNN.

- Parallelizing KNN using GPU acceleration.

- Approximate Nearest Neighbor for big data.

Interactive Visualization

- Plotting decision boundaries for different K values shows how K controls model complexity.

- Example: K=1 (overfitting, jagged boundary), K=15 (smoother boundary).
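
A sketch of such a plot with matplotlib, using only the two sepal features of Iris so the boundary can be drawn in 2-D. The grid resolution, colors, and output filename `knn_boundaries.png` are arbitrary choices; features are left unscaled because both are in centimetres.

```python
import matplotlib
matplotlib.use("Agg")  # render off-screen; drop this line in a notebook
import matplotlib.pyplot as plt
import numpy as np
from sklearn.datasets import load_iris
from sklearn.neighbors import KNeighborsClassifier

# Two features so the boundary can be drawn in 2-D
X, y = load_iris(return_X_y=True)
X = X[:, :2]  # sepal length, sepal width

# Grid covering the data range
xx, yy = np.meshgrid(
    np.linspace(X[:, 0].min() - 0.5, X[:, 0].max() + 0.5, 300),
    np.linspace(X[:, 1].min() - 0.5, X[:, 1].max() + 0.5, 300),
)
grid = np.c_[xx.ravel(), yy.ravel()]

fig, axes = plt.subplots(1, 2, figsize=(10, 4), sharey=True)
for ax, k in zip(axes, [1, 15]):
    clf = KNeighborsClassifier(n_neighbors=k).fit(X, y)
    Z = clf.predict(grid).reshape(xx.shape)
    ax.contourf(xx, yy, Z, alpha=0.3)  # predicted class region for each grid cell
    ax.scatter(X[:, 0], X[:, 1], c=y, s=15, edgecolor="k")
    ax.set_title(f"K = {k}")
    ax.set_xlabel("sepal length (cm)")
axes[0].set_ylabel("sepal width (cm)")
fig.tight_layout()
fig.savefig("knn_boundaries.png")
```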

Future of KNN in AI & Data Science

With advancements in big data and distributed systems, KNN is being integrated into real-time systems and optimized for large-scale applications. Hybrid approaches (e.g., KNN + deep learning) are becoming common.

Conclusion

K Nearest Neighbor is a powerful, intuitive, and versatile algorithm that continues to hold relevance in the machine learning landscape. Whether you’re building a recommendation engine, working on medical diagnosis, or experimenting with image recognition, KNN provides a strong starting point.