In recent years, deep learning has become one of the most revolutionary areas of artificial intelligence. Within this domain, Artificial Neural Networks (ANNs) form the foundation upon which most deep learning models are built.

From recognizing images and understanding speech to generating music and analyzing financial trends, ANN deep learning has powered some of the most remarkable innovations in technology.

This article will guide you through the core concepts, architecture, algorithms, and applications of Artificial Neural Networks, explaining how they mimic human brain functions to process data and make intelligent predictions.

What is ANN in Deep Learning?

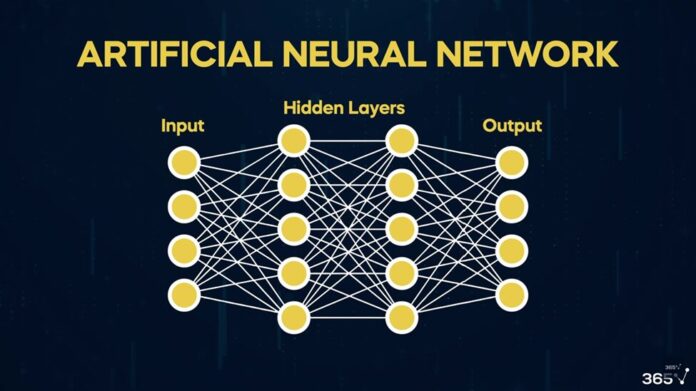

Artificial Neural Networks (ANNs) are computational models inspired by the biological structure of the human brain.

They consist of interconnected layers of nodes, or “neurons,” that work together to analyze data and extract meaningful patterns.

In deep learning, an ANN becomes “deep” when it has multiple hidden layers between the input and output layers, enabling the model to learn complex, non-linear relationships.

Key Components of an ANN:

- Input Layer: Receives data for processing.

- Hidden Layers: Perform transformations and feature extraction.

- Output Layer: Produces the final prediction or classification.

How Does ANN Work?

ANNs function through a process of learning from examples. They don’t rely on predefined rules but instead adjust their internal parameters—known as weights and biases—based on the data.

The process involves:

- Input Data Feeding: Data is passed into the input layer.

- Weighted Summation: Each neuron multiplies its inputs by their weights, sums the results, and adds a bias.

- Activation: The result is passed through an activation function, which determines how strongly the neuron “fires.”

- Output Generation: The final output is compared with the actual result, and the difference (error) is minimized through backpropagation.
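The steps above can be sketched for a single neuron in plain Python. This is only an illustration: the input values, weights, bias, and target are made up, and a sigmoid is assumed as the activation function.

```python
import math

def neuron_forward(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, squashed by a sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical values chosen only for illustration.
inputs = [0.5, 0.2, 0.9]
weights = [0.4, -0.6, 0.3]
bias = 0.1
target = 1.0

prediction = neuron_forward(inputs, weights, bias)
error = (prediction - target) ** 2  # squared error that training would try to shrink
print(f"prediction={prediction:.3f}, squared error={error:.3f}")
```

Training repeats this forward pass many times and nudges the weights and bias so that the error gets smaller.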

Example:

Imagine training an ANN to recognize handwritten digits (0–9).

- Each pixel of an image acts as an input.

- The network processes thousands of labeled images (from datasets like MNIST).

- After multiple iterations, the ANN learns which pixel patterns correspond to which digits.

Structure of Artificial Neural Networks

An ANN typically follows a layered architecture consisting of:

a. Input Layer

- Accepts raw data (like numbers, text, or images).

- Each node in this layer represents one feature of the input.

b. Hidden Layers

- Intermediate layers that apply mathematical operations.

- The depth (number of hidden layers) and width (number of neurons per layer) determine model complexity.

c. Output Layer

- Converts internal computations into a meaningful result.

- In multi-class classification, it usually employs a softmax function to produce probabilities; binary classification often uses a single sigmoid unit instead.

The Role of Activation Functions in ANN

Activation functions introduce non-linearity, allowing ANNs to model complex patterns.

Popular Activation Functions:

- Sigmoid: Maps any input to a value between 0 and 1, useful for binary classification.

- ReLU (Rectified Linear Unit): The most common; efficient and less prone to vanishing gradients.

- Tanh: Maps inputs to between -1 and 1.

- Softmax: Used in the output layer for multi-class classification.
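For readers who prefer code to definitions, here is a minimal sketch of the four functions in plain Python. Real frameworks provide vectorized versions; the sample inputs below are arbitrary.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    return max(0.0, z)

def tanh(z):
    return math.tanh(z)

def softmax(scores):
    # Subtracting the maximum keeps exp() from overflowing; the result is unchanged.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

for z in (-2.0, 0.0, 2.0):
    print(z, sigmoid(z), relu(z), tanh(z))
print(softmax([2.0, 1.0, 0.1]))
```

Sigmoid, ReLU, and tanh act on one value at a time, while softmax takes a whole list of scores and returns probabilities that sum to 1.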

Learning Process in ANN: Forward and Backward Propagation

The training of an ANN involves two main processes:

1. Forward Propagation

Data flows from the input layer to the output layer, and predictions are made.

2. Backward Propagation

Errors between predicted and actual results are calculated, and weights are adjusted to minimize the error.

This is done using gradient descent, an optimization technique that fine-tunes model parameters.

Mathematical Representation:

- Loss Function (L): Measures prediction error.

- Gradient Descent Update:

W = W − η * ∂L/∂W

where η is the learning rate.
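To make the update rule concrete, the sketch below applies it to a one-weight model y = w * x with mean squared error as the loss. The toy data, the starting weight, and the learning rate of 0.05 are assumptions chosen for illustration.

```python
def mse_loss(w, xs, ys):
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def mse_grad(w, xs, ys):
    # dL/dw for L = mean((w*x - y)^2)
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # toy data generated by y = 2x
w = 0.0
eta = 0.05  # learning rate

for step in range(100):
    w = w - eta * mse_grad(w, xs, ys)  # W = W - eta * dL/dW

print(f"learned w = {w:.4f}, loss = {mse_loss(w, xs, ys):.6f}")
```

Because the data were generated with a slope of 2, the learned weight settles close to 2. A learning rate that is too large would make the updates overshoot instead of converge.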

ANN vs Traditional Machine Learning

| Aspect | ANN Deep Learning | Traditional Machine Learning |
| --- | --- | --- |
| Feature Extraction | Automatic (from raw data) | Manual (engineered by humans) |
| Data Requirement | High (large datasets) | Moderate |
| Performance | Excellent for complex tasks | Limited on unstructured data |
| Computation Power | GPU/TPU optimized | CPU sufficient |
| Examples | CNN, RNN, GAN | Decision Trees, SVM, KNN |

Key Insight:

Traditional models rely on explicit features, while ANNs learn hierarchical features directly from data.

Popular ANN Architectures in Deep Learning

There are various architectures built upon the ANN foundation, each tailored for specific use cases.

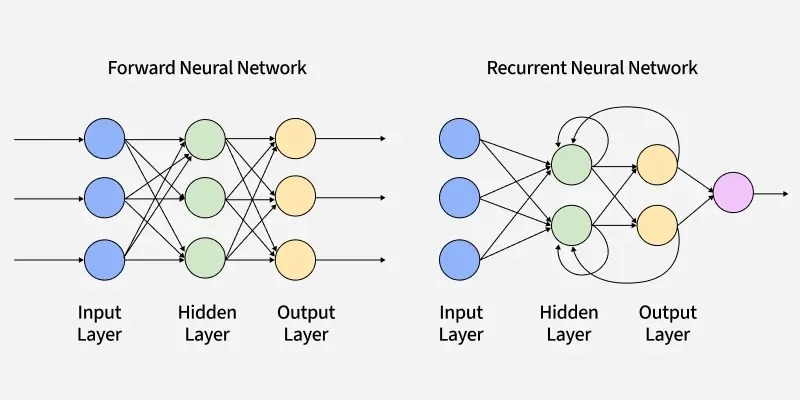

1. Feedforward Neural Network (FNN)

- Basic structure with unidirectional data flow.

- Ideal for classification and regression tasks.

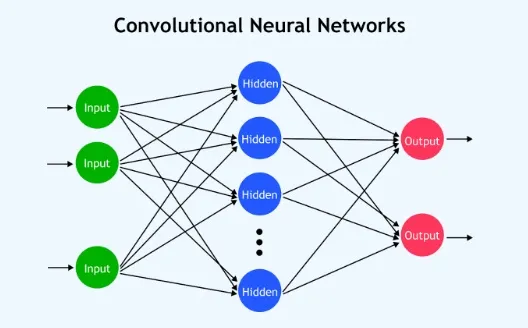

2. Convolutional Neural Network (CNN)

- Specialized for image data.

- Uses convolutional layers to detect spatial hierarchies.
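A convolutional layer slides a small filter over the image and records how strongly each patch matches it. The sketch below shows that operation in plain Python; the 4×4 image and the hand-written edge filter are hypothetical, whereas a real CNN learns its filter values during training.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    Like most deep learning libraries, this computes cross-correlation:
    the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output

# Toy 4x4 image: dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical filter that responds to left-to-right brightness increases.
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d_valid(image, kernel)
for row in feature_map:
    print(row)
```

The resulting feature map is non-zero only in its middle column, which is where the dark-to-bright edge sits in the image.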

3. Recurrent Neural Network (RNN)

- Designed for sequential data (text, time-series).

- Retains memory of previous inputs.

4. Long Short-Term Memory (LSTM)

- A type of RNN that addresses vanishing gradient problems.

- Effective in language modeling and speech recognition.

5. Autoencoders

- Used for unsupervised learning and dimensionality reduction.

- Also applied in image denoising and anomaly detection.

Case Study: Using ANN for Image Classification

Consider a real-world example where an Artificial Neural Network is trained to classify images of cats and dogs.

Step 1: Data Preparation

- 10,000 labeled images (cats/dogs).

- Normalized pixel values for uniformity.

Step 2: Model Architecture

- Input Layer: 64×64×3 (RGB image pixels).

- Hidden Layers: Two dense layers with ReLU activation.

- Output Layer: Two units with a softmax function, giving one probability per class (cat, dog).
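Dense layers expect a flat list of numbers, so each 64×64×3 image has to be unrolled into 64 * 64 * 3 = 12,288 input values. The sketch below shows the normalization and flattening from Steps 1 and 2 in plain Python. The `make_fake_image` helper is a hypothetical stand-in for a real image loader, and scaling by 255 assumes 8-bit pixel values.

```python
import random

HEIGHT, WIDTH, CHANNELS = 64, 64, 3

def make_fake_image(seed=0):
    """Stand-in for a decoded RGB image: nested lists of ints in 0..255."""
    rng = random.Random(seed)
    return [[[rng.randint(0, 255) for _ in range(CHANNELS)]
             for _ in range(WIDTH)]
            for _ in range(HEIGHT)]

def normalize_and_flatten(image):
    """Scale pixels to [0, 1] and unroll rows, columns, channels into one list."""
    return [value / 255.0
            for row in image
            for pixel in row
            for value in pixel]

features = normalize_and_flatten(make_fake_image())
print(len(features))  # 64 * 64 * 3 = 12288
```

Each entry of `features` then feeds one node of the input layer.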

Step 3: Training

- Optimizer: Adam.

- Loss Function: Categorical cross-entropy.

- Epochs: 50.

Step 4: Result

- Achieved 95% accuracy on test data.

- Model deployed as a cloud-based image recognition API.

Advantages and Limitations of ANN

Advantages:

- Learns automatically from raw data.

- Handles non-linear and complex problems efficiently.

- Scalable and adaptable to multiple data types.

- Performs well on unstructured data like text, images, and sound.

Limitations:

- Requires large amounts of labeled data.

- Computationally expensive.

- Difficult to interpret (black-box models).

- Risk of overfitting if not regularized.

Mathematical Foundations of Artificial Neural Networks

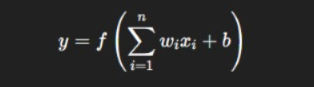

At the core of every Artificial Neural Network (ANN) lie mathematical optimization and linear algebra. Each neuron operates as a mathematical function that takes weighted inputs, sums them up, and applies an activation function to determine the output.

Mathematically, a neuron’s output can be expressed as:

y = f(Σ (wi * xi) + b)

Where:

- y = output of the neuron

- xi = input values

- wi = weight of each input

- b = bias term

- f = activation function (ReLU, sigmoid, tanh, etc.)

The weights are updated iteratively through an optimization process called gradient descent, which minimizes a loss function such as mean squared error (MSE) or cross-entropy. Backpropagation computes gradients of the loss with respect to weights and biases to fine-tune the network.
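As a rough illustration of what backpropagation computes, the sketch below takes a single sigmoid neuron with cross-entropy loss, derives its gradients with the chain rule, and checks them against a numerical estimate. The weights, inputs, and target are made-up values, and the function names are chosen only for this example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(weights, bias, inputs):
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def bce_loss(weights, bias, inputs, target):
    a = forward(weights, bias, inputs)
    return -(target * math.log(a) + (1 - target) * math.log(1 - a))

def analytic_grads(weights, bias, inputs, target):
    # Chain rule for sigmoid + cross-entropy collapses to (a - y) * x_i and (a - y).
    a = forward(weights, bias, inputs)
    delta = a - target
    return [delta * x for x in inputs], delta

def numeric_grad_w(weights, bias, inputs, target, i, eps=1e-6):
    # Central finite difference on weight i.
    up = list(weights)
    up[i] += eps
    down = list(weights)
    down[i] -= eps
    diff = bce_loss(up, bias, inputs, target) - bce_loss(down, bias, inputs, target)
    return diff / (2 * eps)

weights, bias = [0.3, -0.2], 0.1
inputs, target = [1.5, 2.0], 1.0

grad_w, grad_b = analytic_grads(weights, bias, inputs, target)
for i, g in enumerate(grad_w):
    print(i, g, numeric_grad_w(weights, bias, inputs, target, i))
```

The analytic and numerical gradients should agree to several decimal places. In a multi-layer network, backpropagation applies the same chain rule layer by layer.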

This mathematical backbone enables ANNs to approximate non-linear mappings between inputs and outputs — a property crucial in domains like image recognition, speech translation, and predictive analytics.

Deep Architectures Beyond the Basics

While basic feedforward networks introduced the foundation, modern deep learning architectures are significantly more sophisticated:

- Convolutional Neural Networks (CNNs): Specialized for image and video data, CNNs exploit spatial hierarchies using filters and pooling layers. They are used in facial recognition, autonomous vehicles, and visual inspection systems.

Example: Google’s Inception network dramatically improved ImageNet classification accuracy using deep CNNs.

- Recurrent Neural Networks (RNNs): Designed for sequential data, such as time series or language, RNNs maintain a “memory” through recurrent connections. Variants like LSTM and GRU address long-term dependency problems.

Example: Forecasting stock prices from historical time series relies on this sequence modeling capability.

- Transformer Networks: Introduced in 2017, Transformers revolutionized deep learning by replacing recurrence with self-attention mechanisms. This architecture underpins GPT (including ChatGPT), BERT, and T5, allowing for massive scalability and parallelism.

- Autoencoders and GANs: Autoencoders compress and reconstruct input data, aiding dimensionality reduction and anomaly detection. Generative Adversarial Networks (GANs), meanwhile, pit two networks (generator vs. discriminator) against each other to create realistic synthetic data, a foundation of deepfake and AI art technologies.
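The self-attention mechanism at the heart of Transformers can be sketched in a few lines. The version below is a simplified, single-head illustration in plain Python: the token vectors are invented, and the learned projection matrices that a real Transformer uses to produce queries, keys, and values are left out.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors; in a real Transformer, Q, K and V come from learned projections.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights shows how much one token attends to every token in the sequence. Because every row is computed independently, the whole sequence can be processed in parallel, unlike the step-by-step recurrence of an RNN.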

Real-Time Industrial Applications of ANN Deep Learning

The power of ANN deep learning is most evident in the AI-driven systems it supports across industries:

Automotive

- Self-driving cars using deep convolutional neural networks (CNNs).

- Real-time object detection for road safety.

Natural Language Processing

- Voice assistants (Alexa, Siri) use recurrent neural networks (RNNs).

- Sentiment analysis in customer feedback systems.

Marketing

- Predictive analytics for customer behavior.

- Personalized ad recommendations using neural networks.

Healthcare

- Medical Image Analysis: CNNs detect tumors in MRI scans or classify skin lesions, in some studies with accuracy comparable to that of specialist clinicians.

- Drug Discovery: Deep generative models simulate molecular interactions to predict potential compounds faster than traditional methods.

Finance

- Fraud Detection: ANN-based systems score large volumes of transactions in near real time to detect anomalies.

- Algorithmic Trading: Deep networks learn patterns in financial data to automate trades with predictive accuracy.

Manufacturing

- Predictive Maintenance: Deep learning models anticipate machine failures by analyzing sensor data.

- Quality Inspection: CNN-powered visual systems identify defects on assembly lines in real-time.

Transportation

- Autonomous Vehicles: ANNs process sensor and camera inputs to enable lane detection, obstacle avoidance, and route optimization.

- Traffic Management: Deep reinforcement learning algorithms improve city-wide traffic flow through adaptive signaling.

Customer Experience

- Chatbots & Virtual Assistants: Deep neural architectures like Transformers power conversational agents such as Google Bard and OpenAI’s ChatGPT, enhancing customer service automation.

These examples demonstrate how ANN deep learning models transform data into actionable intelligence across all verticals.

Challenges and Optimization Techniques

Despite their success, ANNs face multiple technical challenges:

- Overfitting: Deep networks often memorize training data. Solutions include dropout, data augmentation, and regularization.

- Vanishing/Exploding Gradients: Deep architectures struggle with stable gradient propagation. Techniques like batch normalization and residual connections (ResNets) mitigate this.

- Computational Cost: Training large models demands powerful GPUs or TPUs. Modern frameworks (TensorFlow, PyTorch) use distributed computing to accelerate training.

- Hyperparameter Tuning: Finding optimal learning rates, layer sizes, and batch configurations requires systematic experimentation via grid search or Bayesian optimization.

Optimization strategies like Adam, RMSProp, and Adagrad adapt learning rates dynamically, leading to faster and more reliable convergence.
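To show what "adapting the learning rate" means in practice, here is a minimal sketch of the Adam update rule for a single parameter, using its commonly cited default values for beta1, beta2, and eps. The toy objective, the base learning rate of 0.1, and the step count are assumptions for illustration.

```python
import math

def adam_minimize(grad_fn, w0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Minimize a one-parameter function with the Adam update rule."""
    w, m, v = w0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad_fn(w)
        m = beta1 * m + (1 - beta1) * g          # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g      # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)             # bias correction for early steps
        v_hat = v / (1 - beta2 ** t)
        w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w

# Toy objective (an assumption for illustration): L(w) = (w - 3)^2, so dL/dw = 2(w - 3).
w_final = adam_minimize(lambda w: 2 * (w - 3), w0=0.0)
print(f"w after Adam: {w_final:.4f}")
```

Dividing by the square root of the squared-gradient average scales each step to the recent gradient magnitude, which is what lets Adam cope with parameters whose gradients differ widely in size.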

Evaluating ANN Model Performance

The success of an ANN model is determined by key evaluation metrics:

- Accuracy: Percentage of correct predictions (used in classification).

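- Precision and Recall: Precision is the share of predicted positives that are correct; recall is the share of actual positives that are found. Both are more informative than accuracy on imbalanced datasets.

- F1-Score: Harmonic mean of precision and recall, balancing the two.

- ROC-AUC: Area under the ROC curve, which measures how well the model separates classes across decision thresholds.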

- Confusion Matrix: Visualizes model errors across categories.
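Most of these metrics follow directly from the four cells of a binary confusion matrix. The sketch below computes them in plain Python; the labels are made up for illustration, and the function names are not taken from any library.

```python
def confusion_counts(y_true, y_pred, positive=1):
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p != positive)
    return tp, fp, fn, tn

def classification_report(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Made-up labels for illustration: 1 = positive class, 0 = negative class.
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 0, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0, 0, 0]
report = classification_report(y_true, y_pred)
print(confusion_counts(y_true, y_pred))
print(report)
```

In this toy case there are 3 true positives, 1 false positive, 1 false negative, and 5 true negatives, which gives an accuracy of 0.8 and a precision, recall, and F1 of 0.75 each.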

For real-time applications, latency, throughput, and energy efficiency also play critical roles in model deployment.

Real-World Example: Deep Learning in Predictive Maintenance

Consider a manufacturing plant that wants to predict machine failures.

- Data Collection: Sensors gather vibration, temperature, and power consumption data.

- Feature Engineering: Data is normalized and fed into an ANN regression model.

- Training: The network learns to associate sensor patterns with impending failures.

- Outcome: Maintenance is scheduled proactively, reducing downtime and saving costs.
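The normalization step matters because the sensors report on very different scales. One common choice is z-score scaling, sketched below in plain Python. The sensor names, units, and readings are invented for illustration, and the plant in this example could just as well use another scaling method.

```python
import statistics

def zscore(values):
    """Rescale readings to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]

# Hypothetical readings; the units and magnitudes are invented for illustration.
readings = {
    "vibration_mm_s": [2.1, 2.3, 2.2, 4.8, 2.0],
    "temperature_c": [61.0, 62.5, 60.8, 79.4, 61.2],
    "power_kw": [5.1, 5.0, 5.2, 6.9, 5.1],
}
normalized = {name: zscore(values) for name, values in readings.items()}
for name, values in normalized.items():
    print(name, [round(v, 2) for v in values])
```

After scaling, the fourth reading stands out in all three signals, which is the kind of pattern the network can learn to associate with an impending failure.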

This example illustrates how ANN deep learning transforms data-driven insights into tangible operational efficiency.

Integration with Modern AI Frameworks

Frameworks like TensorFlow, PyTorch, and Keras make ANN implementation intuitive and scalable.

For instance, a sample ANN in PyTorch for classification might look like this:

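```python
import torch
import torch.nn as nn


class SimpleANN(nn.Module):
    """Feedforward network for binary classification on 10 input features."""

    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(10, 128)   # input -> first hidden layer
        self.relu = nn.ReLU()
        self.fc2 = nn.Linear(128, 64)   # first -> second hidden layer
        self.fc3 = nn.Linear(64, 1)     # second hidden layer -> single output
        self.sigmoid = nn.Sigmoid()     # squashes the output into (0, 1)

    def forward(self, x):
        x = self.relu(self.fc1(x))
        x = self.relu(self.fc2(x))
        x = self.sigmoid(self.fc3(x))
        return x
```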

This concise code represents the essence of ANN design — layered architecture, activation functions, and parameterized training.
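To get a feel for how many trainable parameters even a small network like this has, the layer sizes can be plugged into a short helper. The `dense_param_count` function below is written for this example and is not part of PyTorch.

```python
def dense_param_count(layer_sizes):
    """Count weights and biases in a stack of fully connected layers."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # weight matrix plus one bias per output unit
    return total

# Layer sizes of SimpleANN: 10 inputs -> 128 -> 64 -> 1 output.
print(dense_param_count([10, 128, 64, 1]))  # 1408 + 8256 + 65 = 9729
```

Widening the hidden layers or adding more of them increases this count quickly, which is one reason deeper models need more data and compute.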

Future of ANN Deep Learning

The evolution of ANNs continues with breakthroughs in explainability, efficiency, and hybrid models, and the frontier of deep learning research extends beyond conventional ANN structures.

Emerging trends include:

- Neural Architecture Search (NAS): Uses AI to design optimal network topologies automatically.

- Edge AI and TinyML: Deploys ANN models on low-power devices, enabling smart IoT systems.

- Explainable AI (XAI): Focuses on making ANN decisions transparent and interpretable, a critical need in healthcare and finance.

- Neuro-symbolic AI: Combines neural networks with symbolic reasoning.

- Quantum Neural Networks: Leverage quantum computing, with the aim of faster processing.

These innovations will redefine how organizations use data for intelligent decision-making. They aim to reduce model size, improve interpretability, and democratize access to deep learning technologies across industries.

Final Thoughts

ANN Deep Learning represents one of the most transformative technologies in the digital era.

By mimicking human cognition and continuously improving through data, Artificial Neural Networks are enabling machines to perform tasks once thought impossible.

From healthcare to finance, their potential is limitless — and with ongoing research, ANN-based systems will continue to evolve into more efficient, interpretable, and scalable tools for the future.

FAQs

What is the ANN model in deep learning?

An ANN (Artificial Neural Network) in deep learning is a computational model inspired by the human brain that processes data through interconnected layers of neurons to recognize patterns, make predictions, and solve complex problems.

What is an ANN vs. CNN?

ANN (Artificial Neural Network) is the general family of neural networks; in this comparison the term usually means a fully connected network applied to structured data and basic prediction tasks. A CNN (Convolutional Neural Network) is a specialized type of ANN designed to process image and spatial data by detecting patterns and features automatically.

What is the purpose of ANN?

The purpose of an Artificial Neural Network (ANN) is to enable machines to learn from data, identify patterns, and make intelligent decisions, mimicking how the human brain processes and interprets information.

What are the three layers of ANN?

The three layers of an Artificial Neural Network (ANN) are the input layer (receives data), hidden layer(s) (processes information through weighted connections), and the output layer (produces the final result or prediction).

What is ANN and its types?

An Artificial Neural Network (ANN) is a computational system modeled after the human brain that learns from data to make predictions or classifications. Its main types include Feedforward Neural Networks (FNN), Convolutional Neural Networks (CNN), Recurrent Neural Networks (RNN), and Modular Neural Networks (MNN).