Organizations are drowning in data. From e-commerce transactions to IoT devices, every second produces terabytes of information. But here’s the catch: without structure, governance, and reliability, data lakes often become data swamps—messy, unreliable, and hard to use.

This is where Delta Lake comes in. It’s not just another tool; it’s a game-changer that transforms raw, messy data lakes into trustworthy, high-performing, and analytics-ready storage systems.

In this blog, we’ll dive deep into what Delta Lake is and explore its features, real-world use cases, and advantages, along with why it has become the backbone of modern big data architecture.

What is Delta Lake?

Delta Lake is an open-source storage layer that sits on top of existing data lakes (like those built on Amazon S3, Azure Data Lake Storage, or Google Cloud Storage) and brings reliability, consistency, and performance to big data systems.

It stores table data as Apache Parquet files and is compatible with Apache Spark and Databricks.

In simple words:

- A data lake stores raw data.

- A data warehouse ensures structured, reliable data but is costly.

- Delta Lake combines the best of both worlds—scalability of data lakes with the reliability of warehouses.

Why Delta Lake Matters in Modern Data Architecture

Traditional data lakes face serious challenges:

- No ACID transactions → inconsistent data.

- No schema enforcement → corrupted tables.

- Hard to handle streaming + batch data.

- Query performance issues.

Delta Lake fixes these problems by adding a transaction log (Delta Log) that tracks all changes, making data trustworthy, reproducible, and consistent.

According to Databricks, companies using Delta Lake have improved ETL pipeline reliability by 70% and reduced data-related errors by 90%.

Key Features of Delta Lake

ACID Transactions

Delta Lake ensures that every operation is Atomic, Consistent, Isolated, and Durable—something traditional lakes lack.

Schema Enforcement & Evolution

- Prevents “bad data” from corrupting tables.

- Supports schema changes dynamically (adding new columns).
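The two behaviours above can be sketched in a few lines of plain Python. This is a toy model, not Delta Lake's implementation: the `check_write` function, the `SchemaMismatch` exception, and the dictionary-based schema are all hypothetical names chosen for illustration. The `merge_schema` flag loosely mirrors the `mergeSchema` write option that Delta exposes on Spark.

```python
from typing import Dict

Schema = Dict[str, str]  # column name -> type name, e.g. {"id": "long"}


class SchemaMismatch(Exception):
    """Raised when a batch does not fit the table schema."""


def check_write(table: Schema, batch: Schema, merge_schema: bool = False) -> Schema:
    """Return the table schema after the write, or raise SchemaMismatch."""
    # Enforcement: a column that exists in both must keep the same type.
    for col, typ in batch.items():
        if col in table and table[col] != typ:
            raise SchemaMismatch(f"column {col!r}: expected {table[col]}, got {typ}")

    # Enforcement: unknown columns are rejected unless evolution is enabled.
    new_cols = {c: t for c, t in batch.items() if c not in table}
    if new_cols and not merge_schema:
        raise SchemaMismatch(f"unexpected columns: {sorted(new_cols)}")

    # Evolution: append the new columns to the table schema.
    return {**table, **new_cols}


table = {"id": "long", "amount": "double"}
evolved = check_write(
    table,
    {"id": "long", "amount": "double", "currency": "string"},
    merge_schema=True,
)
```

Without `merge_schema=True`, the same batch would be rejected because of the extra `currency` column, which is the "bad data" case the first bullet describes.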

Time Travel

Query historical versions of data using Delta’s versioning system. Perfect for debugging and auditing.
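To see why versioning makes this possible, here is a minimal sketch of log replay in plain Python. It assumes a simplified log in which each commit only adds or removes data files; the `files_at_version` helper and the file names are made up for illustration. On Spark, the equivalent request is expressed with the `versionAsOf` reader option or `VERSION AS OF` in SQL.

```python
from typing import Dict, List, Set

# Each commit is a list of actions; the list index is the table version.
# "add" and "remove" echo the action names in Delta's log, in simplified form.
Commit = List[Dict[str, str]]


def files_at_version(log: List[Commit], version: int) -> Set[str]:
    """Replay commits 0..version and return the data files visible at that version."""
    if not 0 <= version < len(log):
        raise ValueError(f"version {version} is not in the log")
    live: Set[str] = set()
    for commit in log[: version + 1]:
        for action in commit:
            if "add" in action:
                live.add(action["add"])
            elif "remove" in action:
                live.discard(action["remove"])
    return live


log = [
    [{"add": "part-0.parquet"}],                                # v0: initial load
    [{"add": "part-1.parquet"}],                                # v1: append
    [{"remove": "part-0.parquet"}, {"add": "part-2.parquet"}],  # v2: rewrite of part-0
]
snapshot_v1 = files_at_version(log, 1)
snapshot_v2 = files_at_version(log, 2)
```

Because old data files are only logically removed, reading version 1 after version 2 has been committed still resolves to the original two files, as long as those files have not been physically cleaned up.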

Scalable Metadata Handling

Unlike Hive Metastore, Delta Lake handles billions of files efficiently with its optimized log structure.

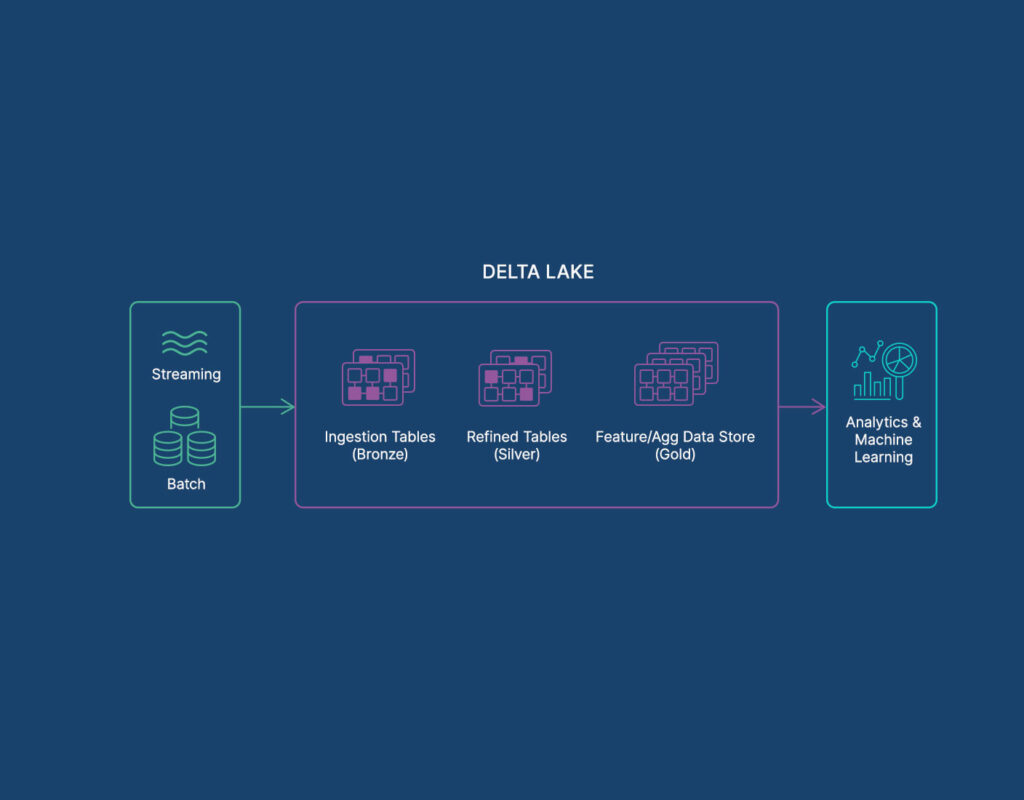

Unified Batch & Streaming

Delta unifies streaming (real-time) and batch processing into a single pipeline.

Real-Time Examples of Delta Lake in Action

- Healthcare: Storing patient sensor data for predictive diagnostics.

- Finance: Fraud detection with reliable historical transaction logs.

- Retail: Personalized recommendations based on real-time customer behavior.

- IoT: Managing massive IoT device logs for anomaly detection.

Delta Lake vs Traditional Data Lake

| Feature | Traditional Data Lake | Delta Lake |
| --- | --- | --- |
| Data Reliability | Low | High (ACID) |
| Schema Enforcement | No | Yes |
| Time Travel | No | Yes |
| Streaming Support | Limited | Unified |
| Cost Efficiency | High storage, low processing | Optimized |

Delta Lake vs Data Warehouse

Delta Lake doesn’t replace warehouses but complements them. Unlike warehouses, Delta Lake supports unstructured + semi-structured data and scales cheaply.

Delta Lake with Apache Spark

Delta Lake integrates natively with Apache Spark, making ETL pipelines more reliable and queries faster. Developers can use PySpark, Scala, or SQL to work with Delta tables.

Delta Lake with Databricks

Databricks created Delta Lake, which is now an open-source project hosted by the Linux Foundation, and offers managed services that simplify scaling Delta for enterprises.

Advanced Concepts in Delta Lake

- Delta Caching: Speeds up read performance.

- Data Skipping: Skips irrelevant data during queries.

- Z-Ordering: Optimizes data layout for faster queries.

- Compaction & Optimization: Reduces small files problem.
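The idea behind Z-ordering can be illustrated with a small bit-interleaving sketch. This shows the general Z-order (Morton) curve for two integer columns, not Delta Lake's actual implementation behind `OPTIMIZE ... ZORDER BY`; the `z_value` function name and the 16-bit default are assumptions made for the example.

```python
def z_value(x: int, y: int, bits: int = 16) -> int:
    """Interleave the low `bits` bits of x and y into one Morton (Z-order) key."""
    if x < 0 or y < 0 or x >= 1 << bits or y >= 1 << bits:
        raise ValueError("x and y must fit in `bits` unsigned bits")
    key = 0
    for i in range(bits):
        key |= ((x >> i) & 1) << (2 * i)      # x takes the even bit positions
        key |= ((y >> i) & 1) << (2 * i + 1)  # y takes the odd bit positions
    return key


# Sorting rows by the interleaved key keeps rows that are close in BOTH
# columns near each other, so each file covers a narrow range of x and of y.
points = [(x, y) for x in range(4) for y in range(4)]
ordered = sorted(points, key=lambda p: z_value(*p))
```

Sorting by a single column would give tight ranges for that column only. Interleaving trades a little precision on each column for useful min/max ranges on both, which is what makes data skipping effective for filters on either column.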

Security in Delta Lake

Delta Lake integrates with cloud IAM (Identity & Access Management) and supports encryption, access controls, and auditing.

Delta Lake in Cloud Platforms

- AWS: Works with S3, EMR, Redshift.

- Azure: Works with Azure Synapse & Data Lake Storage.

- Google Cloud: Works with BigQuery & GCS.

Delta Lake for Machine Learning and AI

Delta Lake gives ML pipelines clean, consistent, and versioned training datasets. Because training and serving can read the same versioned tables, this helps reduce the infamous training-serving skew problem.

Delta Lake Architecture Internals

- Delta Transaction Log (_delta_log): Every change (insert, update, delete) is recorded as an ordered commit in JSON files stored in the _delta_log directory. This makes Delta event-sourced and auditable.

- Checkpointing: To keep log replay fast, Delta periodically writes Parquet checkpoint files that summarize the table state up to a given version.

- Optimistic Concurrency Control (OCC): Writers proceed without locks and check at commit time whether another transaction has committed since they read the table. If the changes do not conflict, the commit is retried against the newer version; if they do conflict, the transaction fails and has to be rerun.
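A minimal sketch of the commit step, using only the Python standard library, may make the concurrency model more concrete. It relies on exclusive file creation so that only one writer can create a given version file. The helper names (`try_commit`, `commit_with_retry`) are hypothetical, and the sketch covers blind appends only: it skips the logical conflict check that a real implementation performs before retrying.

```python
import json
import os
import tempfile


def commit_path(log_dir: str, version: int) -> str:
    # Delta names commit files with a zero-padded 20-digit version number.
    return os.path.join(log_dir, f"{version:020d}.json")


def latest_version(log_dir: str) -> int:
    versions = [int(n[:-5]) for n in os.listdir(log_dir) if n.endswith(".json")]
    return max(versions, default=-1)


def try_commit(log_dir: str, read_version: int, actions: list) -> bool:
    """Attempt to write version read_version + 1. Return False if another writer won."""
    path = commit_path(log_dir, read_version + 1)
    try:
        # O_EXCL makes creation fail if the file already exists (put-if-absent).
        fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL)
    except FileExistsError:
        return False
    with os.fdopen(fd, "w") as f:
        for action in actions:
            f.write(json.dumps(action) + "\n")
    return True


def commit_with_retry(log_dir: str, actions: list, max_attempts: int = 5) -> int:
    """Blind append: re-read the latest version and retry until the commit lands."""
    for _ in range(max_attempts):
        read_version = latest_version(log_dir)
        if try_commit(log_dir, read_version, actions):
            return read_version + 1
    raise RuntimeError("too many concurrent commits")


log_dir = tempfile.mkdtemp()
v0 = commit_with_retry(log_dir, [{"add": {"path": "part-0.parquet"}}])
# Two writers both read version 0; only the first one can create version 1.
first = try_commit(log_dir, 0, [{"add": {"path": "a.parquet"}}])
second = try_commit(log_dir, 0, [{"add": {"path": "b.parquet"}}])
# The losing writer re-reads the log and lands its append as the next version.
v2 = commit_with_retry(log_dir, [{"add": {"path": "b.parquet"}}])
```

The design choice worth noting is that no lock is held while a writer prepares its data files. Contention is resolved only at the moment of creating the next log entry, which is cheap when conflicts are rare.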

Delta Live Tables (DLT)

Delta Live Tables is a managed ETL framework by Databricks that uses Delta Lake underneath.

- Automates data pipeline reliability with built-in testing.

- Supports data quality rules (expectations).

- Monitors lineage for compliance.

Example: A financial institution can define expectations like “transaction amount > 0”, and records that violate them can be dropped, flagged, or routed to a quarantine table.
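The routing logic behind such a rule can be sketched in plain Python. This is not the Delta Live Tables API; `apply_expectations`, the `positive_amount` rule name, and the `_failed_expectations` field are hypothetical and only mimic the idea of separating valid rows from quarantined ones.

```python
from typing import Callable, Dict, Iterable, List, Tuple

Record = Dict[str, object]
Expectation = Callable[[Record], bool]


def apply_expectations(
    records: Iterable[Record], expectations: Dict[str, Expectation]
) -> Tuple[List[Record], List[Record]]:
    """Split records into (valid, quarantined); quarantined rows list the failed rules."""
    valid: List[Record] = []
    quarantined: List[Record] = []
    for rec in records:
        failed = [name for name, rule in expectations.items() if not rule(rec)]
        if failed:
            quarantined.append({**rec, "_failed_expectations": failed})
        else:
            valid.append(rec)
    return valid, quarantined


# "transaction amount > 0"; a missing or non-numeric amount also fails the rule.
rules = {
    "positive_amount": lambda r: isinstance(r.get("amount"), (int, float))
    and r["amount"] > 0
}
transactions = [
    {"id": 1, "amount": 120.0},
    {"id": 2, "amount": -5.0},
    {"id": 3, "amount": None},
]
valid, quarantined = apply_expectations(transactions, rules)
```

Keeping the names of the failed rules on each quarantined row makes it easier to report on data quality later, rather than silently discarding bad input.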

Delta Sharing – Open Data Sharing Standard

Delta Lake underpins Delta Sharing, which Databricks describes as the first open protocol for secure data sharing across organizations.

- Works across clouds, tools, and platforms.

- Enables real-time data collaboration without moving/copying data.

- Works with BI tools like Tableau and Power BI, and with Python clients such as pandas.

Delta Lake Performance Optimizations

- Auto Compaction & Optimize Jobs: Merge small files automatically.

- Z-Ordering on Multiple Columns: Optimize queries by clustering data.

- Caching Hot Data: Speeds up repeated queries by storing in-memory.

- Data Skipping with Statistics: Delta stores column-level min/max values to skip irrelevant files.

In production workloads, these optimizations can reduce query times by up to 80%, depending on data layout and query patterns.
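Data skipping in particular is easy to picture with a small sketch. The code below assumes each data file carries min/max statistics for one integer column and prunes files whose range cannot match a filter. `FileStats` and `files_to_scan` are illustrative names, not part of any Delta API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FileStats:
    path: str
    min_value: int
    max_value: int


def files_to_scan(files: List[FileStats], lo: int, hi: int) -> List[str]:
    """Return files whose [min, max] range can contain a value in [lo, hi]."""
    return [f.path for f in files if f.max_value >= lo and f.min_value <= hi]


stats = [
    FileStats("part-0.parquet", min_value=1, max_value=100),
    FileStats("part-1.parquet", min_value=101, max_value=200),
    FileStats("part-2.parquet", min_value=150, max_value=300),
]
# Filter: WHERE col BETWEEN 120 AND 160. part-0 can be skipped without opening it.
selected = files_to_scan(stats, lo=120, hi=160)
```

The pruning is only as good as the ranges are narrow. If every file spans the full range of values, nothing can be skipped, which is why compaction and Z-ordering are usually discussed together with data skipping.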

Delta Lake with Apache Iceberg & Hudi

Delta competes and collaborates with Iceberg and Hudi (other open-source table formats).

- Delta vs Iceberg: Delta has the tightest integration with Databricks and Spark, while Iceberg emphasizes an engine-neutral design.

- Delta vs Hudi: Hudi focuses on incremental data ingestion, Delta emphasizes transactional reliability.

- Modern enterprises often adopt a multi-table format strategy.

Delta Lake for Regulatory Compliance

Industries like healthcare, banking, and insurance must comply with strict regulations (GDPR, HIPAA, SOX).

- Time Travel helps auditors query old versions.

- Immutable Logs serve as compliance evidence.

- Data Lineage in DLT ensures full visibility into transformations.

Delta Lake in Real-Time Streaming Use Cases

- Fraud Detection: Analyze financial transactions in milliseconds.

- IoT Analytics: Handle millions of device signals per second.

- Clickstream Analysis: Process e-commerce user clicks instantly.

- Predictive Maintenance: Monitor sensor data from aircraft, vehicles, or factories.

Example: An airline uses Delta + Spark Structured Streaming to predict engine part failures before they occur.

Delta Lake in MLOps Pipelines

Delta Lake has become a standard for ML data management:

- Versioned Training Sets: Reproducible ML experiments.

- Feature Store Integration: Delta ensures features are consistent across training & inference.

- Streaming ML: Delta feeds real-time pipelines for fraud detection or recommendations.

Delta Lake and Cost Optimization

- Cloud-Native Storage Efficiency: Stores data in Parquet (columnar format), reducing storage costs.

- Auto Optimize in Databricks: Reduces file fragmentation → fewer I/O operations.

- Delta Caching: Cuts cloud storage reads → lower cloud bills.

Gartner reports that organizations using Delta Lake save up to 30% on cloud storage costs compared to raw data lakes.

Delta Lake and Lakehouse Evolution

Delta Lake powers the Lakehouse Architecture, merging data lake flexibility with warehouse reliability.

- Supports BI queries with SQL analytics.

- Handles AI/ML pipelines with Spark + MLflow.

- Reduces the need for separate warehouse & lake infrastructure.

Multi-Cloud and Hybrid Deployments

Delta Lake is cloud-agnostic:

- AWS (S3 + EMR/Glue)

- Azure (ADLS + Synapse)

- GCP (GCS + BigQuery)

- On-premise with Hadoop/Spark clusters

Hybrid enterprises use Delta Sharing for cross-cloud collaboration.

Delta Lake and Data Mesh

Delta Lake supports Data Mesh architecture by:

- Allowing teams to manage their domain-specific Delta tables.

- Enforcing governance policies while enabling decentralized ownership.

- Supporting federated queries across business units.

Latest Developments in Delta Lake (2024–2025)

- Delta Universal Format (UniForm): Lets Iceberg and Hudi clients read Delta tables without duplicating the underlying data files.

- Delta Kernel: Provides libraries for building connectors that read and write Delta tables without reimplementing the Delta protocol.

- Performance Boosts: New caching layers speed up queries by 2x.

- Streaming Table APIs: Simpler integration for real-time data pipelines.

Case Studies: Delta Lake in Industries

- Healthcare: Genomic data pipelines.

- Finance: Real-time fraud detection.

- Retail: Demand forecasting.

- IoT: Smart city infrastructure monitoring.

Benefits of Using Delta Lake

- Reliable ETL pipelines.

- Simplified architecture (batch + streaming).

- Lower storage costs.

- Improved query performance.

- Scalable & cloud-agnostic.

Best Practices for Implementing Delta Lake

- Use Z-ordering for query optimization.

- Compact small files regularly.

- Leverage schema enforcement to avoid dirty data.

- Integrate with BI tools like Power BI or Tableau.
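For the "compact small files" practice, the planning step can be sketched as a bin-packing problem. The code below is a simplified illustration using a first-fit-decreasing heuristic; it is not how Delta's `OPTIMIZE` command is implemented, and `plan_compaction`, the file names, and the sizes are made up for the example.

```python
from typing import Dict, List


def plan_compaction(file_sizes: Dict[str, int], target_bytes: int) -> List[List[str]]:
    """Group files smaller than target_bytes into bins of at most target_bytes each."""
    small = sorted(
        ((size, path) for path, size in file_sizes.items() if size < target_bytes),
        reverse=True,
    )
    bins: List[List[str]] = []
    remaining: List[int] = []  # free space left in each bin
    for size, path in small:
        for i, free in enumerate(remaining):
            if size <= free:  # first bin with room (first-fit decreasing)
                bins[i].append(path)
                remaining[i] -= size
                break
        else:
            bins.append([path])
            remaining.append(target_bytes - size)
    # A bin holding a single file would just be rewritten as itself, so skip it.
    return [b for b in bins if len(b) > 1]


sizes = {"a": 60, "b": 50, "c": 40, "d": 30, "e": 200}
plan = plan_compaction(sizes, target_bytes=100)
```

Each inner list is one rewrite job: its files would be read and written back as a single larger file, and the log would record the removal of the originals and the addition of the new file in one commit.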

Future Trends of Delta Lake

- More Interoperability: Seamless read/write with Snowflake, Redshift, and other warehouses.

- Deeper integration with AI.

- Delta Lake as the default storage layer for enterprise cloud systems.

- Quantum computing impact on large-scale profiling.

- Expansion in real-time IoT analytics.

- Integration with AI Agents: Automated data governance and self-healing pipelines.

- Quantum-Safe Encryption: Preparing for next-gen cybersecurity.

- IoT & Edge Expansion: Lightweight Delta for edge devices.

Getting Started with Delta Lake (Step-by-Step)

- Install Apache Spark or Databricks.

- Configure Delta Lake.

- Create Delta tables.

- Run batch + streaming pipelines.

- Optimize & monitor.
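For the "Configure Delta Lake" step on open-source Spark, configuration usually comes down to adding the Delta package and two Spark settings. The sketch below only assembles a `spark-submit` command line as a list of strings; it does not start Spark. The `spark_submit_args` helper and the `etl_job.py` script name are hypothetical, and the package coordinates are an assumption that should be matched to your Spark and Scala versions.

```python
from typing import Dict, List

# The two Spark settings that enable Delta Lake's SQL extension and catalog.
DELTA_CONF: Dict[str, str] = {
    "spark.sql.extensions": "io.delta.sql.DeltaSparkSessionExtension",
    "spark.sql.catalog.spark_catalog": "org.apache.spark.sql.delta.catalog.DeltaCatalog",
}


def spark_submit_args(app: str, delta_package: str) -> List[str]:
    """Build a spark-submit command line that pulls in Delta and sets DELTA_CONF."""
    args = ["spark-submit", "--packages", delta_package]
    for key, value in DELTA_CONF.items():
        args += ["--conf", f"{key}={value}"]
    return args + [app]


# Assumed coordinates: check which artifact matches your Spark and Scala versions.
cmd = spark_submit_args("etl_job.py", "io.delta:delta-spark_2.12:3.2.0")
print(" ".join(cmd))
```

On Databricks this step is unnecessary, since Delta Lake is already configured on the platform's clusters.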

Challenges and Limitations of Delta Lake

- Performance tuning needed for very large-scale systems.

- Learning curve for teams.

- Requires Spark expertise.

Conclusion

Delta Lake is not just a storage layer—it’s a revolution in data engineering. By combining the scalability of data lakes with the reliability of warehouses, it lays the foundation for data-driven enterprises.

Companies adopting Delta Lake today are future-proofing their data architectures for AI, ML, and beyond. If you’ve been asking “What is Delta Lake?”, now you know: it is a foundation for building reliable, efficient, and scalable data lakes.

FAQs

What is Delta Lake?

Delta Lake is an open-source storage layer that brings reliability to data lakes by enabling ACID transactions, scalable metadata handling, and unified batch and streaming data processing.

What is the difference between Delta Lake and Databricks?

Delta Lake is an open-source storage framework that ensures reliable data management on data lakes, while Databricks is a cloud-based platform that provides a collaborative environment for big data analytics and AI—offering Delta Lake as one of its core technologies.

What is Delta Lake in Azure?

In Azure, Delta Lake is typically used with Azure Databricks on top of Azure Data Lake Storage to provide a reliable and scalable data lake, with ACID transactions, versioned data, and combined batch and streaming processing for advanced analytics and AI.

How do I get to Delta Lake?

You can start using Delta Lake through Apache Spark or through cloud platforms like Databricks on AWS, Azure, or GCP. Create Delta tables within your data lake (e.g., Azure Data Lake Storage or AWS S3) and access them using Spark SQL or PySpark commands.

Is Delta Lake a lakehouse?

Yes, Delta Lake is a key technology behind the lakehouse architecture, as it combines the scalability of data lakes with the reliability and performance features of data warehouses, enabling unified data storage and analytics.