Bootstrapping is one of the most powerful and versatile techniques in statistics and data science. At its core, it is a resampling method that estimates the sampling distribution of a statistic (and therefore its uncertainty) using only the observed sample. Unlike traditional methods that rely heavily on theoretical distributions, bootstrapping uses the data itself to approximate confidence intervals, standard errors, and other statistical measures.

Imagine you have a small dataset and need to quantify uncertainty without assuming normality. That’s where bootstrapping shines: it uses computational power to simulate many samples from the data you already have, giving data-driven estimates of how much a statistic would vary from sample to sample.

Why Bootstrapping Matters in Statistics

Statistics often relies on assumptions such as normally distributed data or large sample sizes. But what if those assumptions fail? Bootstrapping addresses this problem by:

- Reducing dependence on theoretical sampling distributions.

- Providing a data-driven approach for confidence intervals.

- Allowing practical estimation for smaller datasets, although very small samples still limit accuracy.

Real-World Relevance:

For instance, when analyzing customer satisfaction surveys with limited responses, bootstrapping enables confidence interval estimation without assuming a particular distribution for the scores, provided the responses are representative of the customer base.

Types of Bootstrapping

- Nonparametric Bootstrapping – Resampling directly from observed data without any distribution assumption.

- Parametric Bootstrapping – Assumes a distribution and samples from it using estimated parameters.

- Bayesian Bootstrapping – Instead of resampling observations, draws random weights for them from a Dirichlet distribution, giving an approximate posterior distribution for the statistic.
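To make the three variants concrete, here is a minimal NumPy sketch that builds a 95% interval for a mean each way. The toy data, the number of replicates, and the normal model used in the parametric branch are assumptions chosen for illustration; a parametric bootstrap is only as good as the distribution you pick.

```python
import numpy as np

rng = np.random.default_rng(seed=0)  # seeded so results are reproducible
data = np.array([3, 7, 8, 5, 12], dtype=float)
n, B = len(data), 2000  # sample size and number of bootstrap replicates

# Nonparametric: resample the observed values with replacement.
nonparam = np.array([rng.choice(data, size=n, replace=True).mean() for _ in range(B)])

# Parametric: ASSUME a normal model, fit its parameters, then simulate from it.
mu_hat, sigma_hat = data.mean(), data.std(ddof=1)
param = np.array([rng.normal(mu_hat, sigma_hat, size=n).mean() for _ in range(B)])

# Bayesian (Rubin-style): keep the data fixed and draw Dirichlet(1, ..., 1)
# weights; each weighted mean is one draw from the approximate posterior.
weights = rng.dirichlet(np.ones(n), size=B)  # shape (B, n), each row sums to 1
bayes = weights @ data

for name, draws in [("nonparametric", nonparam), ("parametric", param), ("Bayesian", bayes)]:
    low, high = np.percentile(draws, [2.5, 97.5])
    print(f"{name:>13}: 95% interval = ({low:.2f}, {high:.2f})")
```

The nonparametric and Bayesian versions can never produce a mean outside the observed range, while the parametric version can, because it simulates from the fitted model rather than from the data.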

How Bootstrapping Works – Step-by-Step

Bootstrapping involves a straightforward process:

- Start with a sample dataset of size n.

- Randomly sample with replacement from the dataset to create a new sample of size n.

- Calculate the statistic of interest (mean, median, variance, etc.) on this new sample.

- Repeat this process 1,000 or more times to create a distribution of the statistic.

- Use the distribution to estimate confidence intervals, standard errors, or prediction intervals.
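The five steps above can be sketched as a small reusable function. This is a minimal illustration, not a library API: the names bootstrap_distribution and percentile_ci are hypothetical, and the percentile method is only one of several ways to turn replicates into an interval.

```python
import numpy as np

def bootstrap_distribution(data, statistic, n_resamples=1000, seed=None):
    """Return n_resamples bootstrap replicates of statistic(data)."""
    rng = np.random.default_rng(seed)
    data = np.asarray(data)
    n = len(data)                                # Step 1: sample of size n
    replicates = np.empty(n_resamples)
    for b in range(n_resamples):                 # Step 4: repeat many times
        idx = rng.integers(0, n, size=n)         # Step 2: indices drawn with replacement
        replicates[b] = statistic(data[idx])     # Step 3: statistic on the resample
    return replicates

def percentile_ci(replicates, level=0.95):
    """Step 5: read a percentile confidence interval off the replicates."""
    alpha = (1 - level) / 2
    return np.percentile(replicates, [100 * alpha, 100 * (1 - alpha)])

data = [3, 7, 8, 5, 12]
reps = bootstrap_distribution(data, np.median, n_resamples=2000, seed=1)
print("Bootstrap SE of median:", reps.std(ddof=1))
print("95% percentile CI:", percentile_ci(reps))
```

Drawing indices rather than values makes the same function reusable for paired data, since one index array can select matching rows from several arrays.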

Core Principles Behind Bootstrapping

- Resampling: Drawing samples with replacement from the original dataset.

- Non-parametric Nature: In its standard (nonparametric) form, no strict assumptions about the population distribution.

- Computational Intensity: Requires many iterations (1,000+ in most cases).

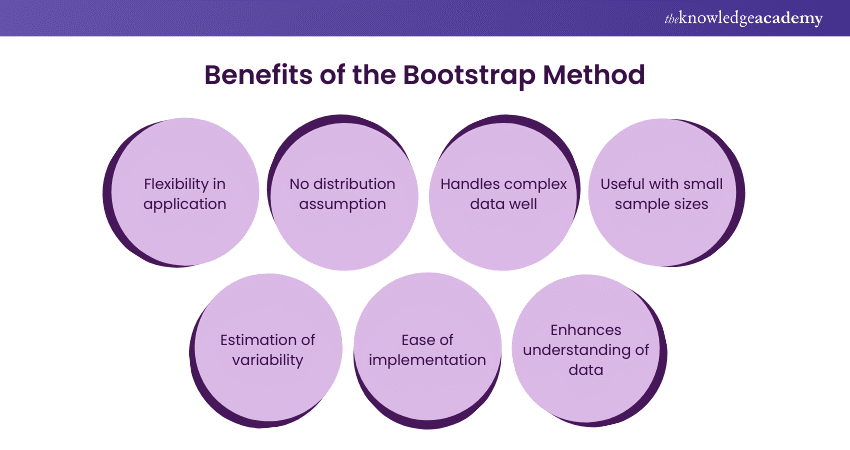

Advantages of Using Bootstrapping in Data Analysis

Bootstrapping is widely adopted because of its benefits:

- Works for small datasets, though very small samples limit accuracy.

- Does not rely on normality assumptions.

- Easy to implement with modern computing power.

- Produces empirical confidence intervals.

- Useful for complex estimators like median or regression coefficients.
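As an example of a "complex estimator", the sketch below bootstraps a regression slope by resampling (x, y) pairs together, often called the pairs or case bootstrap. The data are synthetic (a straight line plus skewed noise) and exist only for illustration; np.polyfit stands in for whatever regression routine you actually use.

```python
import numpy as np

rng = np.random.default_rng(seed=7)

# Synthetic data for illustration only: y = 2x + 1 plus skewed (exponential) noise.
x = rng.uniform(0, 10, size=40)
y = 2.0 * x + 1.0 + rng.exponential(scale=2.0, size=40)

B = 2000
slopes = np.empty(B)
for b in range(B):
    idx = rng.integers(0, len(x), size=len(x))              # resample (x, y) PAIRS together
    slope, intercept = np.polyfit(x[idx], y[idx], deg=1)   # refit the line on the resample
    slopes[b] = slope

low, high = np.percentile(slopes, [2.5, 97.5])
print(f"Slope estimate: {np.polyfit(x, y, 1)[0]:.3f}")
print(f"95% bootstrap CI for slope: ({low:.3f}, {high:.3f})")
```

Resampling pairs keeps each x with its own y, so the interval reflects the noise structure in the data without relying on a normal-errors formula.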

Real-World Applications of Bootstrapping

- Finance: Estimating Value at Risk (VaR) when historical data is limited.

- Healthcare: Evaluating drug effectiveness using small clinical trials.

- Machine Learning: Model validation when the dataset is small.

- Marketing: Measuring campaign ROI with limited data points.

Bootstrapping vs Traditional Statistical Methods

| Aspect | Bootstrapping | Traditional Methods |
| --- | --- | --- |
| Assumptions | Minimal | Often assumes normality |
| Data Requirement | Works for moderate n; unreliable for very small n | Large n, or distributional assumptions for small n |
| Flexibility | High | Limited |
| Computation | Intensive | Low |

Key Use Cases in Machine Learning and AI

- Model Evaluation: Bootstrapping helps compute confidence intervals for performance metrics like accuracy or AUC.

- Variance Reduction: Bagging (bootstrap aggregating) trains models on bootstrap resamples and averages their predictions, which reduces variance.

- Feature Importance: Estimate variability of feature weights.
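For model evaluation, a common pattern is to resample the test set and recompute the metric each time. The sketch below does this for accuracy; the labels and predictions are synthetic (a hypothetical classifier that is right about 85% of the time), and the accuracy helper is a stand-in for any metric such as AUC.

```python
import numpy as np

rng = np.random.default_rng(seed=3)

# Hypothetical test-set results: true labels and a classifier's predictions
# that agree with the labels about 85% of the time (synthetic, for illustration).
y_true = rng.integers(0, 2, size=200)
correct = rng.random(200) < 0.85
y_pred = np.where(correct, y_true, 1 - y_true)

def accuracy(t, p):
    return np.mean(t == p)

B = 2000
n = len(y_true)
scores = np.empty(B)
for b in range(B):
    idx = rng.integers(0, n, size=n)   # resample test cases, keeping label/prediction pairs aligned
    scores[b] = accuracy(y_true[idx], y_pred[idx])

low, high = np.percentile(scores, [2.5, 97.5])
print(f"Accuracy: {accuracy(y_true, y_pred):.3f}, 95% CI: ({low:.3f}, {high:.3f})")
```

The model is not retrained here, so the interval captures only the uncertainty from having a finite test set, not the variability of the training procedure.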

Common Challenges in Bootstrapping and How to Overcome Them

- High Computational Cost → Use parallel processing.

- Sampling Bias → Ensure original sample is representative.

- Optimistic Model Evaluation → Score models on out-of-bag observations (those not drawn into a given resample), not on the resample used for training.
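On the computational-cost point, a cheaper first step than parallel processing in NumPy is often vectorization: draw every resample's indices in one call and compute all statistics with one array operation. This sketch uses synthetic normal data (an assumption) so the bootstrap standard error can be compared with the familiar s / sqrt(n) formula.

```python
import numpy as np

rng = np.random.default_rng(seed=11)
data = rng.normal(loc=50, scale=10, size=200)   # synthetic sample for illustration

B = 5000
# Draw all B x n resample indices at once instead of looping in Python.
idx = rng.integers(0, data.size, size=(B, data.size))
boot_means = data[idx].mean(axis=1)             # one mean per row (resample)

print("Bootstrap SE of mean:", boot_means.std(ddof=1))
print("Analytic SE (s / sqrt(n)):", data.std(ddof=1) / np.sqrt(data.size))
```

The trade-off is memory: the index array has B x n entries, so for large datasets you would process the resamples in chunks or hand batches to separate processes.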

Practical Examples and Case Studies

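Example 1: Bootstrapping the Mean in Python

```python
import numpy as np

# Small example dataset
data = np.array([3, 7, 8, 5, 12])

rng = np.random.default_rng(seed=42)  # seeded for reproducibility
n_resamples = 1000

bootstrap_means = []
for _ in range(n_resamples):
    # Draw a resample of the same size, with replacement
    sample = rng.choice(data, size=len(data), replace=True)
    bootstrap_means.append(np.mean(sample))

# Percentile method: the middle 95% of the bootstrap distribution
confidence_interval = np.percentile(bootstrap_means, [2.5, 97.5])
print("95% CI for Mean:", confidence_interval)
```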

Example 2: Bootstrapping in Business Forecasting

A retail company can use bootstrapping to quantify the uncertainty around next quarter’s revenue forecast, especially when only a few years of sales data are available.
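A minimal sketch of that idea, under strong assumptions: the revenue figures are hypothetical, and quarter-over-quarter growth rates are treated as independent and identically distributed (no seasonality or trend change). Each replicate combines uncertainty in the average growth rate (by resampling the growth rates) with one randomly drawn quarter-to-quarter shock, giving a rough bootstrap prediction interval.

```python
import numpy as np

rng = np.random.default_rng(seed=5)

# HYPOTHETICAL quarterly revenue (in $k) for three years; not real data.
revenue = np.array([120, 126, 131, 140, 138, 145, 152, 160, 158, 166, 171, 180], dtype=float)
growth = np.diff(revenue) / revenue[:-1]        # quarter-over-quarter growth rates
resid = growth - growth.mean()                  # deviations from the average growth

B = 5000
forecasts = np.empty(B)
for b in range(B):
    g_star = rng.choice(growth, size=growth.size, replace=True)  # uncertainty in the mean growth rate
    shock = rng.choice(resid)                                     # one quarter-to-quarter deviation
    forecasts[b] = revenue[-1] * (1 + g_star.mean() + shock)

low, high = np.percentile(forecasts, [5, 95])
print(f"Point forecast: {revenue[-1] * (1 + growth.mean()):.1f}k")
print(f"90% bootstrap prediction interval: ({low:.1f}k, {high:.1f}k)")
```

With only eleven growth rates the interval is coarse, and real sales data with seasonality would need a different resampling scheme, such as the block bootstrap.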

When Should You Use Bootstrapping?

- Small sample sizes with unknown population distribution.

- When traditional parametric assumptions fail.

- For confidence intervals of complex estimators (e.g., median, regression coefficients).

- In business analytics when working with sparse datasets.

Key Statistical Metrics You Can Estimate with Bootstrapping

- Confidence Intervals

- Standard Errors

- Bias of an Estimator

- Prediction Intervals

- Percentiles
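Several of these metrics fall out of the same set of replicates. The sketch below uses the plug-in variance (np.var with ddof=0), chosen because it is known to be biased downward, so the bootstrap bias estimate has something to detect; the toy data and replicate count are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(seed=21)
data = np.array([3, 7, 8, 5, 12], dtype=float)

def plug_in_variance(x):
    return np.var(x)          # ddof=0: known to be biased downward

theta_hat = plug_in_variance(data)
B = 5000
reps = np.array([plug_in_variance(rng.choice(data, size=data.size, replace=True))
                 for _ in range(B)])

se = reps.std(ddof=1)                     # bootstrap standard error
bias = reps.mean() - theta_hat            # bootstrap bias estimate
print(f"estimate = {theta_hat:.2f}, SE = {se:.2f}, bias = {bias:.2f}")
print(f"bias-corrected estimate = {theta_hat - bias:.2f}")
print("5th/50th/95th percentiles:", np.percentile(reps, [5, 50, 95]))
```

Here the plug-in estimate is 9.2 and the bootstrap bias comes out near -1.8, matching the theoretical factor (n - 1) / n for this estimator.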

Bootstrapping in Predictive Modeling

- Helps validate ML models when k-fold cross-validation is not feasible.

- Used in ensemble methods like Bagging and Random Forest.

- Reduces prediction variance by training models on multiple resampled training sets.
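The sketch below shows bagging with out-of-bag (OOB) evaluation in plain NumPy. A degree-5 polynomial fitted with np.polyfit stands in for a real learner such as a decision tree, and the data are synthetic; both are assumptions for illustration. Each point is scored only by the models that did not see it during training.

```python
import numpy as np

rng = np.random.default_rng(seed=2)

# Synthetic regression data for illustration.
x = np.sort(rng.uniform(0, 6, size=80))
y = np.sin(x) + rng.normal(scale=0.3, size=x.size)

B, degree = 200, 5
n = x.size
oob_sum = np.zeros(n)      # sum of out-of-bag predictions per point
oob_count = np.zeros(n)    # how many models left each point out
models = []

for _ in range(B):
    idx = rng.integers(0, n, size=n)             # bootstrap training set
    coefs = np.polyfit(x[idx], y[idx], deg=degree)
    models.append(coefs)
    oob = np.setdiff1d(np.arange(n), idx)        # points not drawn this round
    oob_sum[oob] += np.polyval(coefs, x[oob])
    oob_count[oob] += 1

def bagged_predict(x_new):
    """Bagging: average the predictions of all bootstrap-trained models."""
    return np.mean([np.polyval(c, x_new) for c in models], axis=0)

mask = oob_count > 0
oob_mse = np.mean((y[mask] - oob_sum[mask] / oob_count[mask]) ** 2)
print(f"OOB mean squared error: {oob_mse:.3f}")
print("Bagged prediction at x=1.5:", bagged_predict(np.array([1.5])))
```

Because roughly a third of the points are left out of each resample, the OOB error gives a validation estimate without a separate hold-out set.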

Limitations of Bootstrapping

- Computationally expensive for large datasets.

- May give biased results if the original sample is biased.

- Performance depends on the number of resamples (too few = noisy estimates, too many = costly).

Practical Tips for Implementing Bootstrapping

- Always check that the original dataset is representative of the population.

- Use at least 1000 resamples for stable results (or more for better accuracy).

- Apply parallel processing for faster computation.

- Inspect the bootstrap distribution with a histogram to check for skewness or multiple peaks before trusting the intervals.
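Two of these tips can be checked directly. This sketch (toy data and seeds are assumptions) shows how a percentile interval for the mean settles as the number of resamples grows, then prints a rough text histogram with np.histogram so no plotting library is required; with matplotlib installed, a regular histogram serves the same purpose.

```python
import numpy as np

data = np.array([3, 7, 8, 5, 12], dtype=float)

def percentile_ci_mean(data, n_resamples, seed):
    rng = np.random.default_rng(seed)
    idx = rng.integers(0, data.size, size=(n_resamples, data.size))
    means = data[idx].mean(axis=1)
    return means, np.percentile(means, [2.5, 97.5])

# 1) Does the interval settle down as the number of resamples grows?
for B in (100, 1_000, 10_000):
    _, (lo, hi) = percentile_ci_mean(data, B, seed=0)
    print(f"B={B:>6}: 95% CI = ({lo:.2f}, {hi:.2f})")

# 2) A quick text histogram of the bootstrap distribution.
means, _ = percentile_ci_mean(data, 10_000, seed=0)
counts, edges = np.histogram(means, bins=12)
for c, left in zip(counts, edges[:-1]):
    print(f"{left:6.2f} | " + "#" * int(60 * c / counts.max()))
```

If the endpoints still move noticeably between the last two resample counts, more resamples are warranted.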

Bootstrapping in Different Industries

- Finance: Stock return predictions, portfolio risk analysis.

- Healthcare: Medical research with limited patient samples.

- E-commerce: Conversion rate estimation with small A/B test samples.

- Manufacturing: Quality control analysis when data is limited.

Advanced Bootstrapping Techniques

- Wild Bootstrapping (for regression models with heteroscedastic errors).

- Block Bootstrapping (for time-series data).

- Cluster Bootstrapping (when data is grouped or hierarchical).
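The block and cluster variants change what gets resampled. The sketch below implements one moving block bootstrap resample (overlapping blocks of consecutive observations) and one cluster bootstrap resample (whole groups). The function names, the block length of 20, the synthetic AR(1) series, and the hypothetical site groups are all illustrative assumptions; in practice the block length needs tuning to the series' dependence.

```python
import numpy as np

def moving_block_bootstrap(series, block_len, rng):
    """One resample of a time series built from overlapping blocks.

    Keeping consecutive observations together preserves short-range
    autocorrelation inside each block.
    """
    series = np.asarray(series)
    n = series.size
    n_blocks = int(np.ceil(n / block_len))
    starts = rng.integers(0, n - block_len + 1, size=n_blocks)   # random block start positions
    blocks = [series[s:s + block_len] for s in starts]
    return np.concatenate(blocks)[:n]                            # trim to original length

def cluster_bootstrap(groups, rng):
    """One resample of grouped data: draw whole clusters with replacement."""
    keys = list(groups)
    chosen = rng.choice(len(keys), size=len(keys), replace=True)
    return np.concatenate([groups[keys[k]] for k in chosen])

rng = np.random.default_rng(seed=4)

# Synthetic AR(1) series for illustration: x_t = 0.7 * x_{t-1} + noise.
x = np.zeros(300)
for t in range(1, x.size):
    x[t] = 0.7 * x[t - 1] + rng.normal()

block_means = [moving_block_bootstrap(x, block_len=20, rng=rng).mean() for _ in range(2000)]
print("Block-bootstrap SE of the mean:", np.std(block_means, ddof=1))
print("Naive i.i.d. SE of the mean:  ", x.std(ddof=1) / np.sqrt(x.size))

# Synthetic grouped data: measurements nested within 6 hypothetical sites.
groups = {f"site_{i}": rng.normal(loc=i, size=10) for i in range(6)}
cluster_means = [cluster_bootstrap(groups, rng).mean() for _ in range(2000)]
print("Cluster-bootstrap SE of the mean:", np.std(cluster_means, ddof=1))
```

For positively autocorrelated data, the block bootstrap standard error is noticeably larger than the naive formula, which ignores the dependence between neighbouring observations.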

Bootstrapping in R vs Python

| Feature | Python | R |
| --- | --- | --- |
| Ease of Use | High with libraries like bootstrapped | Excellent with boot package |
| Visualization | Matplotlib, Seaborn | ggplot2 |
| Popularity in Academia | Medium | Very High |

Popular Bootstrapping Tools and Libraries

- Python: numpy, scipy (scipy.stats.bootstrap), bootstrapped

- R: boot package

- MATLAB: bootstrp and bootci in the Statistics and Machine Learning Toolbox
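In Python, SciPy (version 1.7 or later) ships scipy.stats.bootstrap, which handles resampling and interval construction in one call. A minimal usage sketch on toy data follows. The percentile method is chosen here for comparability with a hand-written loop; SciPy's default is BCa. No seed is set, so exact numbers vary between runs.

```python
import numpy as np
from scipy import stats

data = np.array([3, 7, 8, 5, 12], dtype=float)

# SciPy expects a sequence of samples, hence the one-element tuple (data,).
res = stats.bootstrap((data,), np.mean, n_resamples=9999,
                      confidence_level=0.95, method="percentile")

print("95% CI:", res.confidence_interval.low, res.confidence_interval.high)
print("Bootstrap SE:", res.standard_error)
```

Because np.mean accepts an axis argument, SciPy can evaluate the statistic on many resamples at once, which keeps the call fast.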

Future of Bootstrapping in Modern Analytics

As data-driven decision-making grows, bootstrapping is becoming more relevant for:

- AI model validation

- Uncertainty estimation in deep learning

- Business risk analysis under limited data

Final Thoughts on Bootstrapping

Bootstrapping has transformed the way statisticians and data scientists approach uncertainty. Its flexibility, simplicity, and robustness make it indispensable in modern analytics. Whether you’re working on predictive modeling, clinical trials, or business forecasts, mastering bootstrapping is a must.