Artificial Intelligence and Natural Language Processing (NLP) have transformed how humans interact with machines. Search engines, chatbots, recommendation systems, and even voice assistants are powered by sophisticated language models. Among these, one name stands out — BERT.

But what is BERT, and why has it become the backbone of modern NLP? In this blog, we’ll dive deep into its architecture, real-world applications, limitations, and future directions — making it the ultimate guide for anyone eager to understand BERT in detail.

What is BERT in Natural Language Processing?

BERT stands for Bidirectional Encoder Representations from Transformers. It is a deep learning model introduced by Google AI in 2018 to better understand the context and meaning of words in a sentence.

Unlike earlier NLP models that processed text either left-to-right or right-to-left, BERT processes text bidirectionally. This means it looks at both the left and right context of a word at the same time, allowing it to capture nuanced meanings.

Example:

- Sentence: “The bank is by the river.”

- Sentence: “The bank approved my loan.”

Traditional models would struggle with the meaning of “bank,” but BERT uses contextual embeddings to understand that the first refers to a riverbank, while the second refers to a financial institution.

Why Was BERT Developed?

Before BERT, NLP models like Word2Vec and GloVe relied on static word embeddings — meaning the word “bank” always had the same representation. This caused problems in tasks like search, question answering, and sentiment analysis.
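
To make the "static" problem concrete, here is a minimal sketch of how a lookup-table embedding behaves. The three-dimensional vectors and the `embed_static` helper are made up for illustration; real Word2Vec or GloVe vectors have hundreds of dimensions, but the lookup works the same way.

```python
# Toy static embedding table: one fixed vector per word (values are invented).
STATIC_EMBEDDINGS = {
    "the": [0.1, 0.0, 0.2],
    "bank": [0.7, 0.3, 0.5],
    "is": [0.0, 0.1, 0.0],
    "by": [0.2, 0.2, 0.1],
    "river": [0.9, 0.1, 0.0],
    "approved": [0.1, 0.8, 0.3],
    "my": [0.0, 0.2, 0.1],
    "loan": [0.2, 0.9, 0.4],
}

def embed_static(sentence):
    """Look up each lowercased word; the surrounding words are never consulted."""
    words = sentence.lower().rstrip(".").split()
    return [STATIC_EMBEDDINGS[word] for word in words]

river_sentence = embed_static("The bank is by the river.")
loan_sentence = embed_static("The bank approved my loan.")

# "bank" is the second word in both sentences and gets the identical vector.
same_vector = river_sentence[1] == loan_sentence[1]
print(same_vector)
```

Because the table has no access to neighboring words, nothing downstream can tell the two senses of “bank” apart from the embedding alone.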

BERT was developed to solve these challenges by:

- Capturing context-dependent meanings.

- Enabling deeper bidirectional training.

- Providing state-of-the-art performance on multiple NLP benchmarks.

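Google began using BERT in its search engine in 2019, starting with English-language queries in the US, to better interpret conversational queries where small words such as “to” and “for” change the meaning.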

The Architecture of BERT Explained

Transformer Basics

BERT is built on the Transformer architecture, which relies heavily on the concept of self-attention to process words in relation to each other.

Encoder Layers

BERT uses only the encoder stack of the Transformer and was released in two standard sizes:

- BERT Base: 12 encoder layers, 110 million parameters.

- BERT Large: 24 encoder layers, 340 million parameters.
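
The parameter counts above can be roughly reproduced with simple arithmetic. The sketch below assumes details that are not stated in this article but come from the published model configurations: hidden sizes of 768 and 1024, feed-forward widths of 3072 and 4096, a 30,522-token vocabulary, 512 positions, and a pooler layer on top. The function name is my own.

```python
def bert_encoder_params(layers, hidden, intermediate,
                        vocab=30522, max_positions=512, segment_types=2):
    """Count weights and biases of a BERT encoder with a pooler and no task head."""
    layer_norm = 2 * hidden                        # scale + shift
    embeddings = (vocab + max_positions + segment_types) * hidden + layer_norm
    attention = 4 * (hidden * hidden + hidden)     # query, key, value, output
    feed_forward = ((hidden * intermediate + intermediate)
                    + (intermediate * hidden + hidden))
    per_layer = attention + feed_forward + 2 * layer_norm
    pooler = hidden * hidden + hidden
    return embeddings + layers * per_layer + pooler

base = bert_encoder_params(layers=12, hidden=768, intermediate=3072)
large = bert_encoder_params(layers=24, hidden=1024, intermediate=4096)
print(f"{base:,} {large:,}")
```

The totals land at about 109.5 million and 335.1 million, which is where the commonly quoted "110 million" and "340 million" figures come from after rounding.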

Attention Mechanism

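For each token, the self-attention mechanism computes a weight for every token in the sequence and builds that token's representation as a weighted mix of all of them. This is how BERT focuses on the most relevant words in a sentence.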
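
Here is a minimal sketch of scaled dot-product self-attention in plain Python. It is a simplification: a real Transformer layer first projects each token into separate query, key, and value vectors with learned matrices and runs several heads in parallel, while this toy version reuses the raw token vectors for all three roles.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention: each token mixes all value vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        mixed = [sum(w * v[d] for w, v in zip(weights, values))
                 for d in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors, reused as queries, keys, and values (an assumption
# made to keep the sketch short; learned projections are skipped).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
print([round(w, 3) for w in weights[0]])
```

The first token ends up attending more to itself and to the third token than to the second, simply because their vectors point in more similar directions.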

Pre-training Objectives of BERT

BERT was pre-trained on large unlabeled text corpora, English Wikipedia (about 2.5 billion words) and BooksCorpus (about 800 million words), using two key objectives:

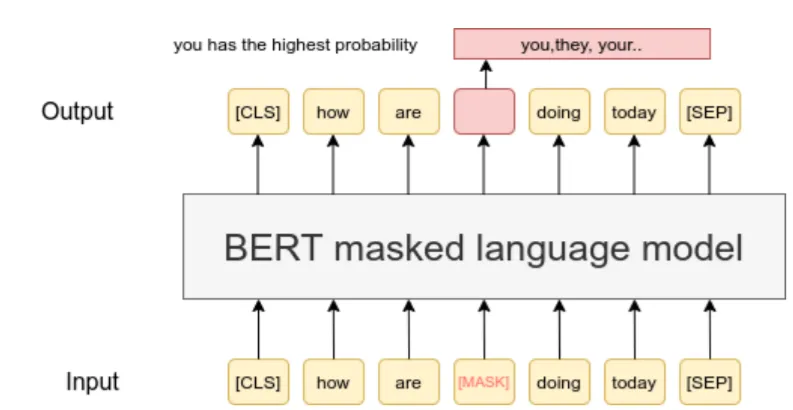

- Masked Language Model (MLM)

  - Randomly selects about 15% of the input tokens, hides most of them behind a [MASK] token, and trains the model to predict the originals from the context on both sides.

  - Example: “The ___ sat on the mat” → “cat”.

- Next Sentence Prediction (NSP)

  - Trains the model to predict whether one sentence actually follows another in the source text.

  - Example:

    - Sentence A: “I went to the market.”

    - Sentence B: “I bought vegetables.” → True (follows A).

    - Sentence C: “The weather is hot.” → False (does not follow A).
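
The masking step of the MLM objective can be sketched in a few lines. The 15% selection rate and the 80/10/10 split between masking, random replacement, and leaving the token unchanged follow the original BERT paper rather than anything stated in this article, and `mask_tokens` is a name I made up. A real pipeline works on WordPiece token IDs, not whole words.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, rng, select_prob=0.15):
    """Return (corrupted tokens, labels); labels are None where nothing is predicted."""
    corrupted, labels = [], []
    for token in tokens:
        if rng.random() >= select_prob:
            corrupted.append(token)
            labels.append(None)
            continue
        labels.append(token)                      # the model must recover the original
        roll = rng.random()
        if roll < 0.8:
            corrupted.append(MASK)                # 80%: replace with the mask token
        elif roll < 0.9:
            corrupted.append(rng.choice(vocab))   # 10%: replace with a random token
        else:
            corrupted.append(token)               # 10%: keep the token unchanged
    return corrupted, labels

rng = random.Random(0)
tokens = "the cat sat on the mat".split()
corrupted, labels = mask_tokens(tokens, vocab=tokens, rng=rng)
print(corrupted, labels)
```

The loss is computed only at positions where the label is not `None`, so the model cannot simply learn to copy its input.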

Fine-Tuning BERT for Real-World Tasks

BERT is pre-trained once but fine-tuned many times for specific tasks. Examples:

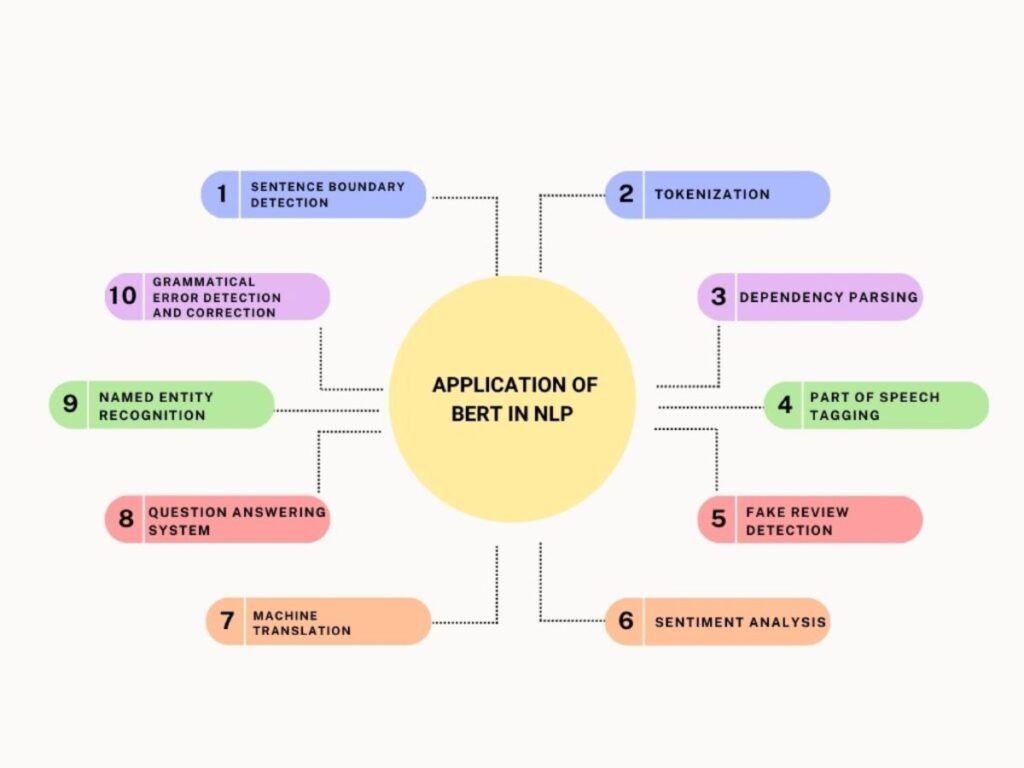

- Text Classification: Sentiment analysis, spam detection.

- Named Entity Recognition (NER): Extracting names, dates, and organizations.

- Question Answering (QA): Powering chatbots and search engines.

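- Cross-Lingual Tasks: Multilingual variants support classification and retrieval across languages. As an encoder-only model, BERT does not generate translations on its own.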

Applications of BERT in Everyday Life

- Google Search: Better query understanding.

- Chatbots: Smarter customer service bots.

- Healthcare: Extracting insights from clinical notes.

- Finance: Analyzing contracts and fraud detection.

- E-commerce: Enhancing product recommendations.

BERT vs Traditional NLP Models

| Feature | Traditional Models (Word2Vec/GloVe) | BERT |
| --- | --- | --- |
| Word Embeddings | Static | Contextual |
| Context Used | Fixed local window during training, none at lookup | Full sentence, bidirectional |
| Pre-training Objective | Co-occurrence statistics | MLM + NSP |
| Performance | Limited | State-of-the-art at release (2018) |

Advantages and Limitations of BERT

Advantages:

- Context-aware understanding.

- Fine-tunes well on relatively small labeled datasets, because most of the language knowledge comes from pre-training.

- Outperforms previous models in multiple NLP tasks.

Limitations:

- High computational cost.

- Requires large memory and GPUs.

- Sometimes lacks interpretability.

BERT Variants and Extensions

- RoBERTa → Improved pre-training with more data, larger batches, and no NSP objective.

- DistilBERT → Smaller, faster version.

- ALBERT → Efficient with fewer parameters.

- BioBERT → Adapted for biomedical texts.

- mBERT → Multilingual BERT supporting 100+ languages.

BERT in SEO and Google Search

In SEO, BERT helps Google better understand long-tail queries.

Digital marketers now focus on content relevance and natural language instead of keyword stuffing.

Implementation of BERT in Python (Code Example)

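```python
import torch
from transformers import BertTokenizer, BertModel

# Load the pre-trained BERT tokenizer and encoder (downloaded on first use)
tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')
model = BertModel.from_pretrained('bert-base-uncased')
model.eval()  # make sure dropout is disabled for inference

# Tokenize the text and return PyTorch tensors
inputs = tokenizer("BERT is revolutionizing NLP models", return_tensors="pt")

# Run the encoder without tracking gradients
with torch.no_grad():
    outputs = model(**inputs)

# One 768-dimensional vector per token: (batch_size, sequence_length, 768)
print(outputs.last_hidden_state.shape)
```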

How Enterprises Are Using BERT

- Amazon: Personalized recommendations.

- Microsoft: Enhancing Office products with NLP.

- Healthcare Startups: Supporting diagnosis by mining medical literature.

- Financial Institutions: Risk assessment and fraud detection.

The Future of BERT and NLP Models

The rise of LLMs (Large Language Models) like GPT-4 doesn’t make BERT obsolete. Instead, BERT is becoming a building block for domain-specific and hybrid models. Expect:

- More efficient training methods.

- Greater integration into real-time applications.

- Domain-specialized BERT variants.

Beyond BERT – The Evolution of Transformer Models

- While BERT revolutionized NLP in 2018, newer models like DeBERTa (Decoding-enhanced BERT with disentangled attention), ELECTRA, and T5 push the boundaries by offering improved efficiency and generative capabilities.

- Comparison Insight: BERT is encoder-only, while GPT (decoder-only) and T5 (encoder-decoder) enable generative tasks, making them better for applications like text summarization and content generation.

- Real-world impact: Google Search still uses BERT-like models for contextual understanding, but also integrates MUM (Multitask Unified Model) for multimodal tasks (text + image).

BERT vs GPT – Complementary, Not Competitors

- BERT specializes in understanding text, while GPT excels at generating text.

- Example:

  - Query intent classification → BERT is ideal.

  - Blog/article writing → GPT-like models dominate.

- Industry adoption: Financial firms use FinBERT for sentiment classification, while media companies use GPT-like models for content drafting.

Domain-Specific BERT Applications

- BioBERT: Used in healthcare to mine relationships from PubMed papers (e.g., drug-disease correlations).

- LegalBERT: Automates contract review, clause extraction, and compliance checks.

- SciBERT: Accelerates research discovery by classifying and summarizing scientific literature.

- FinBERT: Classifies the sentiment of financial text, which is sometimes used as one input to stock price movement predictions.

Technical Depth – Why BERT Works So Well

- Masked Language Modeling (MLM): Randomly masks words, forcing BERT to understand bidirectional context.

- Next Sentence Prediction (NSP): Intended to capture relationships between sentences, useful for tasks like Q&A, although later variants such as RoBERTa drop it without losing accuracy.

- Attention Visualization: Researchers study attention heads to see how BERT “attends” to syntactic/semantic relationships (e.g., subject-verb agreement).
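
To show what NSP training data looks like, here is a small sketch that builds labeled sentence pairs from an ordered list of sentences. It is a simplification: the original setup draws negative examples from a different document, while this toy version picks another sentence from the same list. The function name is hypothetical.

```python
import random

def make_nsp_pairs(sentences, rng):
    """Build (sentence_a, sentence_b, is_next) examples from ordered sentences."""
    pairs = []
    for i in range(len(sentences) - 1):
        if rng.random() < 0.5:
            # Positive example: the true next sentence.
            pairs.append((sentences[i], sentences[i + 1], True))
        else:
            # Negative example: any sentence other than A itself or its successor.
            candidates = [s for j, s in enumerate(sentences) if j not in (i, i + 1)]
            pairs.append((sentences[i], rng.choice(candidates), False))
    return pairs

sentences = [
    "I went to the market.",
    "I bought vegetables.",
    "The weather is hot.",
]
pairs = make_nsp_pairs(sentences, random.Random(0))
for sentence_a, sentence_b, is_next in pairs:
    print(sentence_a, "|", sentence_b, "|", is_next)
```

Roughly half of the pairs are true continuations, which gives the classifier a balanced task.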

Optimization Advancements:

- Mixed precision training speeds up large BERT models.

- Knowledge distillation (DistilBERT, TinyBERT) reduces computational load while maintaining performance.
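
The core idea behind knowledge distillation is that a small "student" model is trained to match the softened output distribution of a large "teacher". Below is a minimal sketch of that soft-target loss. It is the generic temperature-based formulation, not the exact recipe of DistilBERT or TinyBERT (which combine it with other loss terms), and the logits are invented.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax with a temperature; higher values give a flatter distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions."""
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    kl = sum(t * math.log(t / s) for t, s in zip(teacher, student))
    return kl * temperature ** 2   # common rescaling to keep gradient magnitudes stable

teacher_logits = [4.0, 1.0, 0.5]
mismatch = distillation_loss([3.5, 1.2, 0.4], teacher_logits)
perfect = distillation_loss(teacher_logits, teacher_logits)
print(mismatch, perfect)
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge.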

Fine-Tuning BERT for Specialized Tasks

- Stepwise Fine-Tuning:

  - Start with pre-trained BERT weights (base or large).

  - Replace the top layer with a task-specific layer (e.g., softmax for classification, CRF for sequence labeling).

  - Train on your labeled dataset with a low learning rate (typically around 2e-5 to 5e-5) to preserve pre-trained knowledge.

- Use Cases:

  - Sentiment analysis on product reviews.

  - Named Entity Recognition (NER) in legal contracts.

  - Question answering on benchmarks like SQuAD.
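
The task-specific layer and the low learning rate from the steps above can be illustrated without any deep learning library. This sketch treats the encoder as a black box that has already produced a pooled vector (the numbers are hypothetical) and trains only a fresh softmax head for two gradient steps. In real fine-tuning the same small updates also flow into the encoder weights.

```python
import math

def classify(pooled, weights, biases):
    """Task head: one linear layer followed by softmax over the labels."""
    logits = [sum(w * x for w, x in zip(row, pooled)) + b
              for row, b in zip(weights, biases)]
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train_step(pooled, label, weights, biases, learning_rate=2e-5):
    """One gradient step on cross-entropy loss; returns the loss before the update."""
    probs = classify(pooled, weights, biases)
    for k in range(len(biases)):
        error = probs[k] - (1.0 if k == label else 0.0)   # dLoss/dLogit_k
        biases[k] -= learning_rate * error
        for d in range(len(pooled)):
            weights[k][d] -= learning_rate * error * pooled[d]
    return -math.log(probs[label])

# Hypothetical pooled encoder output for one review, and a fresh two-label head.
pooled = [0.5, -1.0, 0.25, 2.0]
weights = [[0.0] * len(pooled) for _ in range(2)]
biases = [0.0, 0.0]

first_loss = train_step(pooled, label=1, weights=weights, biases=biases)
second_loss = train_step(pooled, label=1, weights=weights, biases=biases)
print(first_loss, second_loss)
```

With such a small learning rate the loss drops only slightly per step, which is the point: the model adapts to the task gradually instead of overwriting what it learned in pre-training.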

BERT in Text Embedding & Semantic Search

- BERT Embeddings: Transform words or sentences into context-aware vectors, unlike static embeddings (Word2Vec, GloVe).

- Semantic Search: Search engines and enterprise document systems use BERT embeddings to find conceptually similar content, even when keywords don’t match exactly.

- Example: A query “how to apply for a loan” can retrieve documents containing “loan application process” due to semantic understanding.
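
Once text is turned into vectors, semantic search comes down to ranking documents by vector similarity. The sketch below uses cosine similarity on invented three-dimensional vectors; in a real system the vectors would come from a BERT-style sentence encoder and have hundreds of dimensions.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank(query_vec, doc_vecs):
    """Return document titles sorted from most to least similar to the query."""
    return sorted(doc_vecs,
                  key=lambda title: cosine(query_vec, doc_vecs[title]),
                  reverse=True)

# Hypothetical embeddings; the values are made up for illustration.
query = [0.9, 0.1, 0.2]                     # "how to apply for a loan"
documents = {
    "loan application process": [0.8, 0.2, 0.1],
    "river bank erosion": [0.1, 0.9, 0.3],
    "office holiday schedule": [0.0, 0.2, 0.9],
}
ranking = rank(query, documents)
print(ranking[0])
```

The query and the top document share no exact phrase, yet they rank together because their vectors point in nearly the same direction.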

BERT Variants and Their Advantages

| Variant | Special Feature | Use Case |
| --- | --- | --- |
| DistilBERT | Smaller, faster, ~40% fewer parameters | Mobile NLP applications |
| RoBERTa | Optimized pre-training, no NSP | Improved accuracy for classification |
| ALBERT | Parameter sharing, factorized embedding | Efficient large-scale NLP models |
| SpanBERT | Focus on span-level masking | Better NER and relation extraction |
| ClinicalBERT | Trained on medical notes | Healthcare NLP |

- Insight: Choosing a BERT variant balances accuracy vs computational efficiency, essential for real-world deployment.

Advanced Techniques: BERT with Attention Mechanisms

- BERT uses multi-head self-attention to capture dependencies between words regardless of their distance in a sentence.

- Attention Visualization: Tools like BertViz allow researchers to see which words influence predictions, aiding interpretability.

- Example: For the sentence “The cat sat on the mat”, BERT might focus on the word “sat” when predicting the role of “cat.”

Handling Large Documents with BERT

- Standard BERT is limited to 512 tokens, which is a challenge for books, research papers, or long contracts.

- Solutions:

  - Longformer / BigBird: Extend attention to thousands of tokens efficiently.

  - Sliding Window Technique: Break documents into overlapping chunks, process separately, then aggregate embeddings.

- Real-World Example: Legal firms use Longformer to extract clauses from multi-page contracts.
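
The sliding window technique mentioned above is easy to sketch. The window and stride values here are assumptions chosen for illustration (in practice you would leave room for the special [CLS] and [SEP] tokens), and mean pooling is only one of several ways to aggregate the per-chunk embeddings.

```python
def sliding_windows(token_ids, window=512, stride=384):
    """Split a long token sequence into overlapping windows of at most `window` tokens."""
    if not 0 < stride <= window:
        raise ValueError("stride must be between 1 and window")
    chunks = []
    start = 0
    while True:
        chunks.append(token_ids[start:start + window])
        if start + window >= len(token_ids):
            break
        start += stride
    return chunks

def mean_pool(vectors):
    """Aggregate per-chunk embeddings by averaging each dimension."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]

# A stand-in "document" of 1000 token IDs.
chunks = sliding_windows(list(range(1000)))
print([(chunk[0], chunk[-1]) for chunk in chunks])
```

Each chunk overlaps the previous one by 128 tokens, so a sentence that straddles a boundary still appears whole in at least one window.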

BERT in Multilingual NLP

- mBERT (Multilingual BERT): Pre-trained on 104 languages, enabling cross-lingual tasks.

- Applications:

  - Automatic translation quality assessment.

  - Cross-lingual information retrieval.

  - Sentiment analysis for global product reviews.

- Advantage: Reduces the need for separate models for each language.

BERT in Production Systems

- Infrastructure Requirements:

  - High RAM and GPU/TPU support for real-time inference.

  - Model export and optimization with ONNX or TensorRT, with Hugging Face Transformers for loading and serving.

- Latency Reduction Techniques:

  - Model distillation (DistilBERT, TinyBERT).

  - Quantization to reduce numerical precision (for example, from 32-bit floats to 8-bit integers) without much loss in accuracy.

- Practical Use Cases:

  - Chatbots: BERT enhances contextual understanding of user queries.

  - Document summarization: BERT-based extractive methods pick the key sentences from enterprise knowledge bases.

  - Fraud detection: BERT identifies suspicious patterns in textual transaction data.
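
Quantization, one of the latency techniques listed above, can be illustrated on a handful of weights. This is a minimal sketch of symmetric per-tensor int8 quantization with made-up values; production toolchains add calibration, per-channel scales, and quantized kernels.

```python
def quantize_int8(weights):
    """Symmetric quantization: floats become integers in [-127, 127] plus one scale."""
    scale = max(abs(w) for w in weights) / 127 or 1.0   # fall back to 1.0 if all zeros
    return [round(w / scale) for w in weights], scale

def dequantize(quantized, scale):
    """Map the stored integers back to approximate float values."""
    return [q * scale for q in quantized]

weights = [0.42, -1.27, 0.05, 0.9]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(quantized, max_error)
```

Each weight now needs one byte instead of four, and the rounding error per weight is bounded by half the scale.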

Ethical Considerations in BERT Applications

- Bias Mitigation: Pre-trained BERT models may encode gender, racial, or cultural biases. Techniques include:

  - Debiasing embeddings using counterfactual data.

  - Fairness-aware fine-tuning.

- Privacy Concerns: Using BERT on sensitive data requires data anonymization and adherence to regulations like GDPR.

- Transparency: Explainable AI tools help users understand model predictions, critical in domains like healthcare and finance.
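
One simple way to produce counterfactual data for bias mitigation is to add a copy of each training sentence with gendered words swapped. The sketch below is deliberately naive: the word list is a tiny hypothetical sample, it ignores punctuation and casing, and it skips ambiguous words such as “her”.

```python
# Hypothetical swap table; a real list would be much larger and curated.
SWAPS = {"he": "she", "she": "he", "man": "woman", "woman": "man",
         "father": "mother", "mother": "father"}

def counterfactual(sentence):
    """Swap each listed gendered word for its counterpart, keeping other words as-is."""
    return " ".join(SWAPS.get(word, word) for word in sentence.lower().split())

def augment(sentences):
    """Return the original sentences followed by their swapped counterparts."""
    return sentences + [counterfactual(s) for s in sentences]

training_data = ["he is a doctor", "she is a nurse"]
augmented = augment(training_data)
print(augmented)
```

Fine-tuning on the augmented set exposes the model to both versions of each sentence, which weakens the link between profession and gender in the training signal.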

Research Frontiers: Next-Generation BERT

- Adapter Layers: Allow fine-tuning only small layers instead of full BERT, reducing training cost.

- Continual Learning: BERT models updated with new knowledge without forgetting prior training.

- Multimodal Transformers: Integrating text, image, and audio for richer context (e.g., VideoBERT).

- Low-Resource NLP: Applying BERT in languages with limited labeled data using cross-lingual transfer learning.
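
A quick calculation shows why adapter layers reduce training cost. The sketch assumes a bottleneck adapter design with two adapters per encoder layer and a bottleneck width of 64; both choices, along with the helper name, are assumptions for illustration, and the small layer-norm parameters that are usually trained as well are ignored.

```python
def adapter_params(hidden, bottleneck):
    """Down-projection plus up-projection, each with a bias vector."""
    down = hidden * bottleneck + bottleneck
    up = bottleneck * hidden + hidden
    return down + up

hidden, layers, bottleneck = 768, 12, 64
full_model = 109_482_240                     # approximate BERT Base encoder size
trainable = layers * 2 * adapter_params(hidden, bottleneck)   # two adapters per layer
print(trainable, f"{trainable / full_model:.1%}")
```

Under these assumptions only about 2% of the parameters are updated per task, and the frozen BERT weights can be shared across many tasks.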

Challenges & Limitations of BERT

- Computational Cost: Pre-training BERT-large from scratch takes days on a cluster of TPUs/GPUs and a corpus of billions of words. Fine-tuning is far cheaper.

- Context Window Limitation: Standard BERT handles sequences of up to 512 tokens, limiting its ability to handle very long documents.

- Bias Issues: Since BERT is trained on large corpora of human-written text, it can inherit and sometimes amplify the biases in that text (e.g., gender bias in word associations).

- Interpretability: Understanding why BERT makes certain predictions remains difficult.

Future Directions in BERT Research

- Long-Context Models: Variants like Longformer and BigBird extend sequence length beyond 512 tokens, useful for processing research papers, books, or legal contracts.

- Multimodal BERTs: VideoBERT, VisualBERT, and ViLBERT extend language models into vision + language tasks (e.g., captioning, video QA).

- Energy-Efficient Transformers: Focus on making BERT lighter and greener for edge devices.

- Explainable BERT: New tools aim to visualize attention weights to ensure transparency in sensitive domains like healthcare and law.

Real-World Examples

- Google Search – Understanding user intent behind natural queries like “flights to New Delhi” vs “New Delhi flight status”.

- LinkedIn Recruiter – Uses BERT for better job matching between candidates and recruiters.

- Amazon Alexa – Context-aware response improvements with BERT embeddings.

- Financial Services – Detecting fraudulent activity in transaction logs.

- Healthcare – Identifying patient conditions from electronic health records (EHR).

Conclusion

So, what is BERT? It’s not just a model — it’s a paradigm shift in NLP. By capturing bidirectional context, BERT transformed how search engines, chatbots, and AI systems understand human language.

From powering Google Search to healthcare innovations, BERT continues to evolve. Its variants and future advancements ensure it will remain central to AI-driven communication.

FAQs

What is the use of BERT in Google?

BERT is used by Google to improve search results by understanding the context and meaning of words in queries, enabling more accurate and relevant responses to user searches.

What is BERT used for in NLP?

In NLP, BERT is used for understanding context in text, enabling tasks like question answering, sentiment analysis, named entity recognition, and text classification with higher accuracy than traditional models.

What is the BERT model and why is it good?

BERT (Bidirectional Encoder Representations from Transformers) is a pre-trained NLP model that reads text bidirectionally, capturing context from both left and right of a word. It’s good because this deep contextual understanding significantly improves performance on tasks like search, Q&A, and text classification.

How do I use the BERT model?

You can use the BERT model by loading a pre-trained version from libraries like Hugging Face Transformers, then fine-tuning it on your specific NLP task—such as text classification, question answering, or named entity recognition—using frameworks like PyTorch or TensorFlow.

What is the BERT method?

The BERT method involves pre-training a bidirectional Transformer model on large text corpora using tasks like Masked Language Modeling (MLM) and Next Sentence Prediction (NSP), then fine-tuning it on specific NLP tasks for high-accuracy results.