Artificial Intelligence (AI) has transformed industries, and at the core of AI lies the concept of neural networks. Among the simplest, yet still powerful, forms of neural networks is the feed forward neural network.

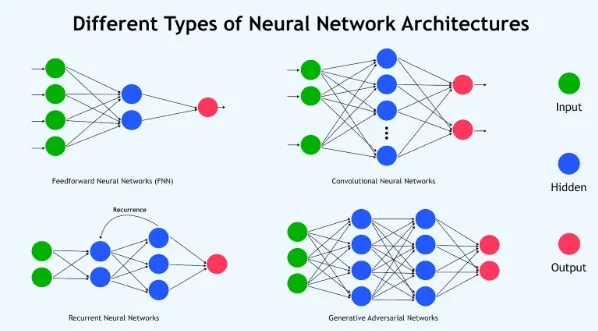

This network is called feed forward because data flows strictly in one direction, from the input nodes through the hidden layers to the output nodes, without looping back. This one-way flow makes it easy to understand and implement, and its ingredients (weighted sums, activation functions, gradient-based training) form the foundation for more advanced architectures like convolutional neural networks (CNNs) and recurrent neural networks (RNNs).

Why Study Feed Forward Neural Networks?

Feed forward neural networks are considered the building blocks of deep learning. If you understand them, you can easily move on to more complex models.

- They provide a foundation for computer vision, natural language processing, and speech recognition.

- They’re widely used in industries such as finance (fraud detection), healthcare (disease prediction), and e-commerce (recommendation systems).

- Many real-world systems rely on the core principles of feed forward networks even if the architecture evolves.

Basic Architecture of a Feed Forward Neural Network

A typical feed forward neural network has three main types of layers:

- Input Layer: Accepts raw data (features).

- Hidden Layers: Perform computations and extract features.

- Output Layer: Produces the final prediction.

For example, in a customer churn prediction system, the input could be customer age, contract type, and monthly charges. The hidden layers would process these inputs, and the output layer would predict whether the customer is likely to leave.

How a Feed Forward Neural Network Works Step-by-Step

- Input data enters the network.

- Each input is multiplied by a weight, the weighted inputs are summed, and a bias is added.

- The result passes through an activation function.

- Outputs from one layer become inputs to the next.

- The final output is compared with the expected result using a loss function.

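- An optimization algorithm adjusts the weights and biases to minimize the loss.

Data flows through the network in one direction only (steps 1 to 4), with no feedback loops, hence the name. Steps 5 and 6 happen only during training.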

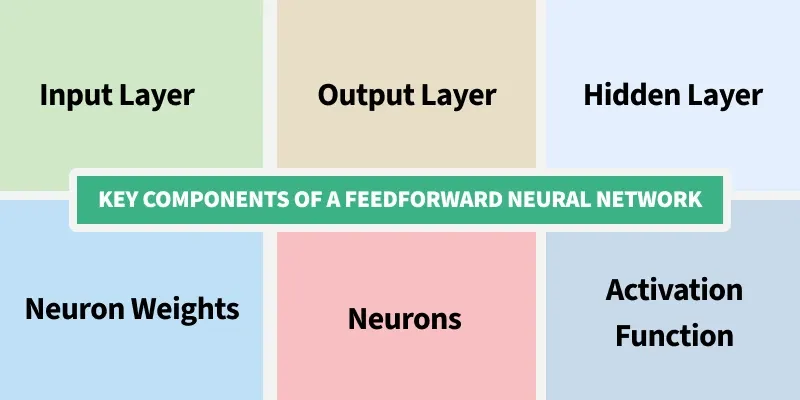

Key Components of a Feed Forward Neural Network

a) Neurons

The fundamental unit that processes information. Each neuron takes weighted inputs, applies an activation function, and passes output forward.

b) Layers

- Input Layer: Raw data features.

- Hidden Layers: Intermediate layers that perform transformations.

- Output Layer: Gives predictions (e.g., classification, regression).

c) Weights and Bias

Weights determine how strongly each input influences a neuron's output, while the bias shifts the neuron's activation, letting it produce a non-zero output even when all inputs are zero.

d) Activation Functions

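Activation functions introduce non-linearity into the system. Without them, every layer is a linear transformation, so the whole network collapses into a single linear model (equivalent to linear regression) no matter how many layers it has.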

Forward Propagation Explained

Forward propagation is the calculation of output from given input:

$$y = f(Wx + b)$$

Where:

- x = input vector

- W = weight matrix

- b = bias vector

- f = activation function (applied element-wise)

Example: Predicting house price based on size and location. Inputs are multiplied by weights (importance of size and location), added to bias (baseline price), and passed through an activation function for prediction.
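The house-price example can be sketched in plain Python. This is a minimal illustration, not a trained model: the feature encoding (size in hundreds of square metres, a 0 to 1 location score), the weights, the bias, and the choice of ReLU as f are all made-up assumptions.

```python
def relu(v):
    """Apply ReLU element-wise to a list of numbers."""
    return [max(0.0, x) for x in v]

def dense_forward(W, x, b, activation):
    """Compute activation(W x + b) for a weight matrix W (list of rows), input x, and bias b."""
    z = [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
         for row, b_i in zip(W, b)]
    return activation(z)

# Hypothetical inputs: size (hundreds of square metres), location score (0 to 1)
x = [1.2, 0.8]
# Hypothetical weights (importance of size and location) and bias (baseline price),
# with the output expressed in units of 100k
W = [[1.5, 2.0]]
b = [0.5]

price = dense_forward(W, x, b, relu)
print(price)
```

Predicting several outputs at once would only mean adding rows to W and matching entries to b.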

Backpropagation and Training Process

Backpropagation is the learning process:

- Calculate the error between predicted output and actual output.

- Propagate error backward through layers.

- Update weights using optimization (e.g., gradient descent).

Repeating this process over many epochs typically reduces the error and improves the network's accuracy.
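To make these steps concrete, here is a minimal sketch that trains a single sigmoid neuron with plain full-batch gradient descent on a tiny made-up dataset (the logical OR function). With one neuron, propagating the error backward reduces to the chain rule for binary cross-entropy, whose gradient with respect to the weighted sum z is simply (a - y). The learning rate and epoch count are arbitrary illustrative choices.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def bce(a, y, eps=1e-12):
    """Binary cross-entropy for one prediction a and label y."""
    return -(y * math.log(a + eps) + (1 - y) * math.log(1 - a + eps))

# Hypothetical toy dataset: logical OR of two binary inputs
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

w = [0.0, 0.0]   # weights
b = 0.0          # bias
lr = 0.5         # learning rate (assumed)

losses = []
for epoch in range(1000):
    grad_w = [0.0, 0.0]
    grad_b = 0.0
    total_loss = 0.0
    for x, y in data:
        # Forward pass
        z = w[0] * x[0] + w[1] * x[1] + b
        a = sigmoid(z)
        total_loss += bce(a, y)
        # Backward pass: for sigmoid + binary cross-entropy, dLoss/dz = a - y
        dz = a - y
        grad_w[0] += dz * x[0]
        grad_w[1] += dz * x[1]
        grad_b += dz
    n = len(data)
    # Gradient descent update using the average gradient over the dataset
    w = [wi - lr * gw / n for wi, gw in zip(w, grad_w)]
    b -= lr * grad_b / n
    losses.append(total_loss / n)

predictions = [round(sigmoid(w[0] * x[0] + w[1] * x[1] + b)) for x, _ in data]
print(predictions, losses[0], losses[-1])
```

A multi-layer network follows the same pattern, except that the backward pass applies the chain rule layer by layer, from the output back to the input.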

Mathematical Foundation of Feed Forward Neural Networks

The equation for one neuron:

$$z = \sum_{i} w_i x_i + b$$

$$a = f(z)$$

Where $z$ is the weighted sum of the inputs plus the bias, and $a$ is the activated output.
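As a worked example with made-up numbers, take two inputs $x = (2, 3)$, weights $w = (0.5, 0.25)$, and bias $b = -1$. The weighted sum and two possible activations are:

```latex
\begin{aligned}
z &= w_1 x_1 + w_2 x_2 + b = (0.5)(2) + (0.25)(3) + (-1) = 0.75 \\
a_{\text{ReLU}} &= \max(0,\ 0.75) = 0.75 \\
a_{\text{sigmoid}} &= \frac{1}{1 + e^{-0.75}} \approx 0.679
\end{aligned}
```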

When many such neurons are arranged into layers and several hidden layers are stacked, the result is a deep feed forward neural network.

Activation Functions in Detail with Real Examples

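- Sigmoid: Squashes outputs into the range (0, 1), which makes it good for probabilities. Example: Predicting churn (yes/no).

- ReLU: Common in hidden layers; it mitigates the vanishing gradient problem because its gradient is 1 for positive inputs. Example: Image recognition.

- Tanh: Outputs between -1 and 1. Example: Sentiment analysis.

- Softmax: Used in the output layer for multi-class classification; it converts a vector of scores into probabilities that sum to 1. Example: Handwritten digit recognition (0–9).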
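Minimal pure-Python versions of these four activation functions are sketched below. They are hand-written for illustration; frameworks such as Keras ship their own implementations.

```python
import math

def sigmoid(z):
    """Maps any real number into (0, 1); useful as a probability for binary outputs."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)          # this branch avoids overflow for large negative z
    return e / (1.0 + e)

def relu(z):
    """Returns z for positive inputs and 0 otherwise."""
    return max(0.0, z)

def tanh(z):
    """Maps any real number into (-1, 1)."""
    return math.tanh(z)

def softmax(scores):
    """Turns a list of scores into probabilities that sum to 1."""
    m = max(scores)          # subtract the max score for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

print(sigmoid(0.0), relu(-2.0), tanh(0.0))   # 0.5 0.0 0.0
print(softmax([2.0, 1.0, 0.1]))
```

Subtracting the largest score in softmax does not change the result (the shift cancels in the ratio) but prevents overflow when scores are large.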

Loss Functions and Optimization Techniques

Loss functions measure performance:

- Mean Squared Error (MSE): Regression problems.

- Cross-Entropy Loss: Classification tasks.

Optimization techniques minimize loss:

- Gradient Descent

- Adam Optimizer

- RMSProp
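Here is a minimal sketch of the two loss functions and two of the optimizers listed above, in plain Python. The toy loss (theta - 3)^2 is a made-up one-parameter example; the Adam betas and epsilon are the commonly used defaults, while the learning rate of 0.1 is raised from the usual 0.001 so the toy problem converges quickly.

```python
import math

def mse(y_true, y_pred):
    """Mean squared error for regression."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Average cross-entropy for binary labels (0/1) and predicted probabilities."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1 - eps)   # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)

def grad(theta):
    """Gradient of the toy loss L(theta) = (theta - 3)^2."""
    return 2.0 * (theta - 3.0)

def gradient_descent(theta, lr=0.1, steps=100):
    for _ in range(steps):
        theta -= lr * grad(theta)
    return theta

def adam(theta, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    m, v = 0.0, 0.0                     # running averages of gradient and squared gradient
    for t in range(1, steps + 1):
        g = grad(theta)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)     # bias correction for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        theta -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta

print(mse([3.0, 5.0], [2.5, 5.5]))     # 0.25
print(gradient_descent(0.0), adam(0.0))
```

RMSProp is Adam without the momentum term m and without bias correction: it divides the raw gradient by the square root of the running average v.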

Advantages of Feed Forward Neural Networks

- Simplicity and ease of implementation.

- Can model complex relationships.

- Widely applicable across industries.

Universal Approximation Theorem and Its Implications

One of the most important theoretical results related to feed forward neural networks (FFNNs) is the Universal Approximation Theorem.

It states that:

- A feed forward network with a single hidden layer containing a finite (but possibly very large) number of neurons can approximate any continuous function on a compact subset of ℝⁿ to any desired accuracy, given appropriate weights and a suitable nonlinear activation function (e.g., sigmoid or ReLU).

- This establishes FFNNs as powerful function approximators.

Implication in practice:

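Even though deeper architectures like CNNs or Transformers dominate modern AI, FFNNs remain fundamental because, in principle, they can approximate any continuous mapping between inputs and outputs. However, the theorem only guarantees that suitable weights exist; it does not say how many neurons are needed or whether training will find those weights. In applications like tabular data modeling (e.g., banking, risk prediction, industrial IoT signals), FFNNs are often competitive with, and simpler than, more complex architectures.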
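The theorem is an existence result, but a simple construction conveys the idea. The sketch below hand-builds (without training) a single-hidden-layer network of ReLU units whose output is the piecewise-linear interpolant of sin(x) on [0, π] at evenly spaced knots. The target function, interval, and unit counts are arbitrary choices, and this is an illustration of the ReLU case, not a proof of the theorem.

```python
import math

def build_relu_network(f, a, b, n_hidden):
    """Hand-build a 1-hidden-layer ReLU network that linearly interpolates f at n_hidden + 1 knots."""
    knots = [a + (b - a) * k / n_hidden for k in range(n_hidden + 1)]
    values = [f(t) for t in knots]
    slopes = [(values[k + 1] - values[k]) / (knots[k + 1] - knots[k]) for k in range(n_hidden)]
    # Hidden unit k computes ReLU(x - knots[k]); its output weight is the change in slope at knot k
    hidden_bias = [-knots[k] for k in range(n_hidden)]
    out_weights = [slopes[0]] + [slopes[k] - slopes[k - 1] for k in range(1, n_hidden)]
    out_bias = values[0]

    def network(x):
        hidden = [max(0.0, x + hb) for hb in hidden_bias]
        return sum(w * h for w, h in zip(out_weights, hidden)) + out_bias

    return network

def max_error(net, f, a, b, samples=1000):
    """Largest absolute error between net and f on an evenly spaced grid."""
    xs = [a + (b - a) * i / samples for i in range(samples + 1)]
    return max(abs(net(x) - f(x)) for x in xs)

for n in (2, 8, 32):
    net = build_relu_network(math.sin, 0.0, math.pi, n)
    print(n, max_error(net, math.sin, 0.0, math.pi))
```

Adding hidden units shrinks the maximum error, which matches the theorem's message, although in practice the weights are learned rather than constructed by hand.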

Depth vs. Width Debate

While the universal approximation theorem guarantees the potential of shallow networks, research shows that deeper FFNNs often require fewer neurons to represent complex functions compared to very wide but shallow networks.

- Shallow + Wide Network: Can need a very large number of neurons to represent the same function.

- Deep + Narrow Network: Often more parameter-efficient, and captures hierarchical feature representations.

This tradeoff is critical in resource-constrained environments like mobile AI or embedded systems.

Regularization in Feed Forward Neural Networks

Regularization is essential to prevent overfitting. Beyond dropout, common methods include:

- L1 and L2 Regularization: Add a penalty on the weights to the loss. L1 (sum of absolute values) encourages sparsity, while L2 (sum of squares) encourages smaller weights.

- Batch Normalization: Normalizes each layer's inputs using mini-batch statistics, improving training stability and convergence speed, with a mild regularizing side effect.

- Early Stopping: Monitors validation error and halts training when performance stops improving.

- Data Augmentation (for structured data): Synthetic oversampling methods like SMOTE can help balance imbalanced classification datasets before an FFNN is trained on them.
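Two of these methods are easy to express directly. The sketch below shows the L1/L2 penalty terms and the early-stopping rule with a "patience" counter; the validation losses are made-up numbers, and in Keras the same rule is available as the `EarlyStopping` callback (with `patience` and `restore_best_weights` options).

```python
def l1_penalty(weights, lam):
    """L1 term added to the loss: lam * sum(|w|); pushes weights toward exactly zero."""
    return lam * sum(abs(w) for w in weights)

def l2_penalty(weights, lam):
    """L2 term added to the loss: lam * sum(w^2); keeps weights small."""
    return lam * sum(w * w for w in weights)

def early_stopping_epoch(val_losses, patience=3):
    """Return (best_epoch, stop_epoch): training halts once the validation loss
    has not improved for `patience` consecutive epochs."""
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1

# Hypothetical validation losses: steady improvement, then overfitting sets in
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.53, 0.56, 0.60]
print(early_stopping_epoch(val_losses))   # (3, 6)
print(l2_penalty([0.5, -1.0, 2.0], lam=0.01))
```

After stopping, the weights from `best_epoch` are usually restored, since later epochs had already started to overfit.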

Advanced Optimization Algorithms

While SGD and Adam are standard, researchers have explored:

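- AdaMax: A variant of Adam that scales updates by the infinity norm of past gradients instead of the L2 norm, which can make it more stable on some problems.

- Nadam: Combines Adam with Nesterov accelerated gradients.

- Lookahead Optimizer: Keeps two sets of weights. “Fast weights” are updated for k steps by an inner optimizer (such as SGD or Adam), then the “slow weights” are moved part of the way toward them. This is reported to make training more stable and can improve generalization.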

In real-world deployments, optimizer choice can drastically influence convergence speed and stability.
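The Lookahead update rule is short enough to sketch. This minimal version assumes plain gradient descent as the inner ("fast") optimizer and a made-up one-parameter loss (theta - 3)^2; k = 5 and alpha = 0.5 are illustrative values.

```python
def grad(theta):
    """Gradient of the toy loss L(theta) = (theta - 3)^2."""
    return 2.0 * (theta - 3.0)

def lookahead(theta, inner_lr=0.1, k=5, alpha=0.5, outer_steps=50):
    slow = theta
    for _ in range(outer_steps):
        fast = slow                       # fast weights start from the slow weights
        for _ in range(k):                # k steps of the inner optimizer
            fast -= inner_lr * grad(fast)
        slow += alpha * (fast - slow)     # move slow weights part of the way toward fast weights
    return slow

print(lookahead(0.0))
```

Because the slow weights only move a fraction alpha toward where the inner optimizer ended up, noisy or oscillating inner steps are smoothed out.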

Interpretability of Feed Forward Neural Networks

One of the criticisms of neural networks is the “black-box” problem. With FFNNs, several interpretability methods exist:

- Feature Importance via Input Perturbation: Changing one input feature while holding others constant to measure sensitivity.

- SHAP (Shapley Additive Explanations): Assigns importance values to each feature, based on cooperative game theory.

- LIME (Local Interpretable Model-Agnostic Explanations): Fits a simple, interpretable surrogate model (such as a sparse linear model) around an individual prediction to explain it locally.

These methods are crucial in finance, healthcare, and law, where transparency is as important as accuracy.
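Input perturbation is the simplest of these to implement. The sketch below nudges one feature at a time by a small amount and measures how much the output changes (a finite-difference sensitivity). `toy_model` is a hypothetical stand-in for a trained network; SHAP and LIME are more involved and are normally used through their own Python packages (`shap`, `lime`).

```python
def perturbation_importance(predict, x, delta=1e-3):
    """Estimate each feature's local influence by nudging it while holding the others fixed."""
    base = predict(x)
    scores = []
    for i in range(len(x)):
        x_perturbed = list(x)
        x_perturbed[i] += delta
        scores.append((predict(x_perturbed) - base) / delta)
    return scores

# Hypothetical stand-in for a trained model: the output depends strongly on
# feature 0, weakly on feature 1, and not at all on feature 2
def toy_model(x):
    return max(0.0, 3.0 * x[0] + 0.5 * x[1] + 0.0 * x[2] - 1.0)

print(perturbation_importance(toy_model, [1.0, 2.0, 5.0]))
```

These scores are local: they describe sensitivity around one input, and a nonlinear network can rank features differently elsewhere in the input space.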

Hardware Considerations and Efficiency

Training and deploying large FFNNs efficiently depends on hardware and model-compression choices:

- GPU vs. CPU: GPUs accelerate matrix multiplications significantly, crucial for FFNN training.

- TPUs (Tensor Processing Units): Google's accelerators designed for large matrix operations; they can speed up training further and are usable from TensorFlow/Keras as well as JAX.

- Quantization: Reducing precision (e.g., FP32 → INT8) allows faster inference on edge devices.

- Pruning: Removing unnecessary weights/neurons to shrink network size without significant accuracy loss.
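The core arithmetic of quantization and pruning is sketched below on a made-up list of weights. This shows only symmetric per-tensor INT8 quantization and unstructured magnitude pruning; real toolchains (for example TensorFlow Lite) add calibration, zero points, per-channel scales, and fine-tuning after pruning.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of float weights to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map integers back to approximate float weights."""
    return [v * scale for v in q]

def magnitude_prune(weights, fraction):
    """Zero out the given fraction of weights with the smallest absolute values."""
    n_prune = int(len(weights) * fraction)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned

# Hypothetical weights standing in for one layer
w = [0.82, -0.03, 0.41, -1.27, 0.004, 0.66]
q, scale = quantize_int8(w)
print(q, scale)
print(dequantize(q, scale))
print(magnitude_prune(w, 0.5))
```

Each dequantized weight is within half a quantization step (scale / 2) of the original, which is why INT8 inference usually loses little accuracy.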

Hybrid Models with Feed Forward Neural Networks

Modern AI often combines FFNNs with other architectures:

- FFNN + CNN: CNN extracts features from images, FFNN performs classification. Example: Detecting diabetic retinopathy from eye scans.

- FFNN + RNN/LSTM: RNNs capture sequential dependencies, FFNNs make final predictions. Example: Stock price forecasting combining sequential signals with structured company features.

- FFNN in Transformers: Every transformer block includes a position-wise feed forward sublayer after its self-attention sublayer. This demonstrates how FFNNs remain foundational even in cutting-edge models like GPT and BERT.
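For reference, the original Transformer paper ("Attention Is All You Need") defines this position-wise feed forward sublayer as two linear transformations with a ReLU in between, applied to each token position $x$ separately:

```latex
\mathrm{FFN}(x) = \max(0,\ x W_1 + b_1)\, W_2 + b_2
```

In that paper's base model the inner layer is four times wider than the model dimension (2048 vs. 512); many later models, including BERT and GPT, replace ReLU with GELU.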

Advanced Case Studies

a) Healthcare: Predicting Sepsis

Researchers have used FFNNs on patient vital signs and lab data to predict sepsis hours before onset. Compared to logistic regression, FFNNs capture nonlinear relationships, improving predictive power.

b) Banking: Credit Scoring

Feed forward networks can outperform traditional scoring models by capturing complex interactions between features like income, repayment history, and credit utilization.

c) Climate Modeling

FFNNs have been applied to forecast temperature and precipitation trends by combining satellite data with structured meteorological features.

Training Challenges and Solutions

- Vanishing/Exploding Gradients: Particularly in deep FFNNs, gradients may shrink or blow up.

- Solution: ReLU/Leaky ReLU activations, proper initialization (Xavier, He).

- Hyperparameter Tuning: Learning rate, batch size, and number of layers drastically affect outcomes.

- Solution: Automated tools like Optuna, Hyperopt, or Bayesian optimization.

- Imbalanced Data: FFNNs trained on imbalanced data tend to favor the majority class and perform poorly on minority classes.

- Solution: Weighted loss functions, oversampling techniques.
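Two of these solutions fit in a few lines each. The sketch below shows Xavier (Glorot) uniform and He initialization and a class-weighted binary cross-entropy. The He variant here draws from a plain normal distribution (some libraries use a truncated normal instead), and `pos_weight` is a hypothetical parameter name; setting it to the ratio of negative to positive examples is one common heuristic.

```python
import math
import random

def xavier_uniform(fan_in, fan_out, rng):
    """Glorot/Xavier uniform init: U(-limit, limit), limit = sqrt(6 / (fan_in + fan_out)); suits tanh/sigmoid."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_in)] for _ in range(fan_out)]

def he_normal(fan_in, fan_out, rng):
    """He init (plain normal variant): N(0, 2 / fan_in); suits ReLU layers."""
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]

def weighted_bce(y_true, y_pred, pos_weight, eps=1e-12):
    """Binary cross-entropy where errors on the positive (minority) class count pos_weight times more."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1 - eps)   # clip to avoid log(0)
        total += -(pos_weight * t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)

rng = random.Random(0)
W = he_normal(fan_in=256, fan_out=128, rng=rng)
flat = [w for row in W for w in row]
print(sum(w * w for w in flat) / len(flat))   # should be close to 2 / 256 ≈ 0.0078
```

Scaling the initial variance by the layer's fan-in keeps activations and gradients at a similar magnitude from layer to layer, which is what counters vanishing and exploding gradients at the start of training.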

Advanced Mathematical Insight

Consider an FFNN with two hidden layers:

$$h_1 = f(W_1 x + b_1)$$

$$h_2 = f(W_2 h_1 + b_2)$$

$$y = g(W_3 h_2 + b_3)$$

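Where f is the nonlinear activation used in the hidden layers and g is the output activation chosen for the task (e.g., sigmoid or softmax for classification).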

- The choice of activation function determines representational power.

- Using linear activations across all layers collapses the network into a single linear transformation, removing nonlinearity.

Thus, the true power of FFNNs lies in nonlinear activations.
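The collapse of linear layers can be checked numerically. In this sketch (pure Python, with arbitrary illustrative weights), two layers with identity activations give exactly the same output as a single layer with W = W2 W1 and b = W2 b1 + b2; inserting a ReLU between them breaks that equivalence.

```python
def matmul(A, B):
    """Multiply matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def add(u, v):
    return [a + b for a, b in zip(u, v)]

# Arbitrary illustrative weights for two layers
W1, b1 = [[1.0, 2.0], [0.0, -1.0]], [0.5, 1.0]
W2, b2 = [[3.0, -1.0]], [2.0]

def two_linear_layers(x):
    h = add(matvec(W1, x), b1)           # h = W1 x + b1 (identity activation)
    return add(matvec(W2, h), b2)        # y = W2 h + b2

def two_relu_layers(x):
    h = [max(0.0, v) for v in add(matvec(W1, x), b1)]   # ReLU between the layers
    return add(matvec(W2, h), b2)

# Collapsed single layer: W = W2 W1, b = W2 b1 + b2
W = matmul(W2, W1)
b = add(matvec(W2, b1), b2)

x = [0.7, -1.3]
print(two_linear_layers(x), add(matvec(W, x), b))
print(two_relu_layers(x))
```

With the ReLU in place, the mapping becomes piecewise linear, so the collapsed (W, b) no longer reproduces it.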

Future Trends

- Neural Architecture Search (NAS): Automates design of FFNNs by finding the best number of layers and neurons.

- Explainable AI (XAI): Expect greater focus on interpretable FFNNs, particularly for regulated industries.

- Green AI: Research into energy-efficient FFNN training to reduce carbon footprint.

- Edge Deployment: Lightweight FFNNs deployed on IoT devices for predictive maintenance and smart city applications.

Limitations and Challenges

- Requires large datasets.

- Computationally expensive.

- Prone to overfitting without regularization.

- Limited in handling sequential or spatial data compared to CNNs/RNNs.

Feed Forward Neural Network vs. Other Neural Networks

- CNNs: Better for images due to convolution operations.

- RNNs: Better for sequence data like text or time series.

- FFNNs: Well suited to structured tabular data, and simpler to build and analyze than CNNs or RNNs.

Real-Time Applications of Feed Forward Neural Networks

- Finance: Fraud detection, credit scoring.

- Healthcare: Disease diagnosis, patient risk prediction.

- E-commerce: Recommendation engines.

- Manufacturing: Predictive maintenance.

Case Studies: Using Feed Forward Neural Networks in Industry

- Netflix: Recommendation systems use FFNN principles for personalized viewing.

- Healthcare AI startups: Predict patient readmission risks using structured EHR data.

- Retail: Demand forecasting and pricing strategies.

Implementation with Python and TensorFlow/Keras

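```python
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Input

# Simple Feed Forward Neural Network for binary classification
model = Sequential([
    Input(shape=(10,)),              # 10 input features per example
    Dense(64, activation='relu'),    # first hidden layer
    Dense(32, activation='relu'),    # second hidden layer
    Dense(1, activation='sigmoid')   # output: probability of the positive class
])

# Adam optimizer with binary cross-entropy, the usual loss for a single sigmoid output
model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])
```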

This simple example demonstrates building a binary classification model with a feed forward neural network.

Best Practices for Building Feed Forward Neural Networks

- Normalize data before training.

- Use dropout layers to prevent overfitting.

- Perform hyperparameter tuning.

- Apply cross-validation.
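Normalization and cross-validation interact: the scaling statistics should be computed on the training fold only, so no information leaks from the validation fold. A minimal stdlib sketch follows; the feature values are made up, folds are contiguous rather than shuffled, and in practice scikit-learn's `StandardScaler` and `KFold` cover the same ground.

```python
import statistics

def fit_standardizer(column):
    """Learn mean and standard deviation from training data only."""
    mean = statistics.fmean(column)
    std = statistics.pstdev(column) or 1.0      # guard against constant columns
    return mean, std

def standardize(column, mean, std):
    return [(v - mean) / std for v in column]

def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation with contiguous folds."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size

# Hypothetical single feature (e.g., monthly charges)
feature = [20.0, 35.0, 50.0, 65.0, 80.0, 95.0, 110.0]
for train_idx, val_idx in k_fold_indices(len(feature), k=3):
    mean, std = fit_standardizer([feature[i] for i in train_idx])   # fit on the training fold only
    val_scaled = standardize([feature[i] for i in val_idx], mean, std)
    print(val_idx, [round(v, 2) for v in val_scaled])
```

Shuffling the data before splitting (or stratifying by class for classification) is usually advisable when rows are ordered, for example by date or by label.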

Future of Feed Forward Neural Networks in AI

While advanced architectures dominate AI research, feed forward neural networks remain fundamental:

- As baseline models for new datasets.

- In hybrid architectures combined with other deep learning models.

- In explainable AI due to their simplicity.

Final Thoughts

The feed forward neural network is the foundation of deep learning. By mastering its architecture, training process, and applications, you set yourself up for success in advanced fields like computer vision and natural language processing.

Whether you are a student, data scientist, or AI researcher, feed forward neural networks remain a vital tool in your machine learning toolkit.

FAQs

What is a feed forward neural network?

A Feed Forward Neural Network (FFNN) is a type of artificial neural network where data moves in one direction, from input to output, without looping back. It is one of the simplest neural network architectures and a basic building block of deep learning models.

What is the concept of feed forward?

The concept of feed forward refers to passing information in one direction only—from input nodes, through hidden layers, to the output layer—without any feedback loops, ensuring a straightforward flow of data.

What is FNN used for?

A Feed Forward Neural Network (FFNN) is used for tasks like classification, regression, and pattern recognition, where the goal is to map input data directly to output predictions.

Why is it called a feed forward neural network?

It is called a feed forward neural network because information flows in only one direction, from input to hidden layers to output, without looping back, unlike recurrent networks.

What is the FFN layer?

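In deep learning, and especially in Transformer models, the FFN (Feed Forward Network) layer is a small block of fully connected layers: typically a linear transformation, a non-linear activation, and a second linear transformation, applied to each position independently. It allows the model to learn complex patterns from its input features.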