Machine Learning (ML) has become a cornerstone of modern innovation—driving applications from fraud detection and healthcare diagnostics to autonomous driving and cybersecurity. However, as ML systems gain prominence, they also become prime targets for malicious actors.

Unlike traditional software, ML models learn from data, making them vulnerable to manipulation in ways that legacy systems were not. A single poisoned dataset or adversarial input can lead to catastrophic decisions, whether it’s misclassifying a fraudulent transaction as legitimate or causing a self-driving car to ignore a stop sign.

This is why machine learning security is not just a technical afterthought—it’s a core requirement for ensuring trust, reliability, and safety in AI-driven ecosystems.

Understanding the Landscape of Machine Learning Threats

Machine learning models face unique and evolving threats. Below are the most common ones:

Data Poisoning

Attackers inject malicious data during the training phase. For example, adding spam emails that are labeled as legitimate to a training set can teach a spam filter to classify similar spam as safe.

Adversarial Attacks

These involve small, often imperceptible changes to inputs that cause ML models to misclassify. Example: Altering a few pixels in a stop sign image to trick an autonomous vehicle into reading it as a speed limit sign.
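To make the idea concrete, here is a minimal sketch of a gradient-sign perturbation (in the style of the Fast Gradient Sign Method) against a tiny logistic-regression model. The weights, input, and `epsilon` are hypothetical values chosen for illustration, and the step size is far larger than what would count as imperceptible on a real image.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(value):
    return (value > 0) - (value < 0)

def fgsm_perturb(weights, bias, x, true_label, epsilon):
    """Shift each feature by epsilon in the direction that increases the loss."""
    p = predict(weights, bias, x)
    # For cross-entropy loss, the gradient w.r.t. the input is (p - y) * w.
    grad = [(p - true_label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical trained parameters and a clean input whose true label is 1.
weights, bias = [2.0, -1.5, 0.5], 0.1
x = [0.4, 0.2, 0.3]
x_adv = fgsm_perturb(weights, bias, x, true_label=1, epsilon=0.3)

print("clean score:      ", predict(weights, bias, x))
print("adversarial score:", predict(weights, bias, x_adv))
```

With these assumed numbers the score drops from above 0.5 to below it, so the predicted class flips even though no feature moved by more than `epsilon`.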

Model Inversion

Hackers attempt to reconstruct sensitive training data (such as medical records) by exploiting model outputs.

Model Extraction

Attackers query a black-box ML model extensively and build a replica of it, stealing intellectual property.
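A deliberately simplified sketch shows why query access alone can be enough. It assumes a hypothetical black-box model that approves any score above a secret threshold; the attacker recovers that threshold by bisection. Real extraction attacks usually train a surrogate model on many query/response pairs, but the principle is the same.

```python
def make_black_box(secret_threshold):
    """Return a query-only classifier; the threshold is hidden from the caller."""
    def classify(score):
        return 1 if score >= secret_threshold else 0
    return classify

def extract_threshold(classify, low, high, queries):
    """Recover the decision boundary by bisecting on the model's answers.

    Assumes classify(low) == 0 and classify(high) == 1.
    """
    for _ in range(queries):
        mid = (low + high) / 2
        if classify(mid) == 1:
            high = mid      # boundary is at or below mid
        else:
            low = mid       # boundary is above mid
    return (low + high) / 2

victim = make_black_box(secret_threshold=0.6372)   # hypothetical secret
stolen = extract_threshold(victim, low=0.0, high=1.0, queries=20)
replica = make_black_box(stolen)                   # attacker's copy

print("recovered threshold:", stolen)
```

Each query halves the uncertainty, which is one reason per-client query budgets and monitoring matter for deployed models.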

Evasion Attacks

Malicious inputs are crafted to slip past ML models at inference time. Fraud detection systems are a frequent target.

The Role of Cybersecurity in Machine Learning Systems

Machine learning security is an extension of cybersecurity—but with unique complexities. Traditional firewalls and antivirus software aren’t enough to defend against ML-specific risks.

Instead, cybersecurity strategies must evolve to include:

- Secure ML pipelines to ensure data integrity

- Threat modeling specific to AI/ML

- Continuous monitoring for adversarial attacks

- Incident response tailored for model exploitation

Common Vulnerabilities in ML Models

Training Data Vulnerabilities

“Garbage in, garbage out.” If training data is manipulated, the model’s predictions become unreliable.

Model Deployment Risks

Deploying models through APIs opens them up to extraction attacks.

API Security Concerns

Unprotected APIs can leak sensitive predictions or allow attackers to flood the system with adversarial queries.

Bias and Fairness Issues

Security also involves ethical risks—a biased model can be manipulated to discriminate against specific groups.

Real-World Examples of Machine Learning Security Breaches

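- Tesla Autopilot (2019): Researchers at Tencent's Keen Security Lab showed that small stickers placed on the road could mislead Autopilot's lane detection. Separate academic work published in 2018 showed that stickers on stop signs could cause image classifiers to misread them.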

- Microsoft Tay Chatbot (2016): Manipulated by adversarial tweets, Tay started producing offensive outputs within 24 hours of deployment.

- Healthcare ML Systems: Adversarial images of X-rays were shown to mislead cancer detection systems, raising serious concerns about patient safety.

Strategies for Securing Machine Learning Pipelines

- Data Integrity and Validation – Use anomaly detection to filter poisoned data.

- Secure Model Training – Encrypt training datasets, apply differential privacy.

- Robust Deployment Practices – Secure APIs with authentication and rate limits.

- Monitoring and Logging – Detect unusual query patterns that may signal an attack.
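As one illustration of the rate limits mentioned above, the following sketch implements a token bucket per API key. The class name, the `check_request` helper, and the default rate and capacity are assumptions made for this example, not part of any particular framework.

```python
import time

class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

buckets = {}

def check_request(api_key, rate=5.0, capacity=10):
    """Hypothetical gate to call before serving a prediction for `api_key`."""
    if api_key not in buckets:
        buckets[api_key] = TokenBucket(rate, capacity)
    return buckets[api_key].allow()
```

Rejected requests are also worth logging, since a client that repeatedly hits its limit may be probing the model.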

Advanced Defense Mechanisms

Adversarial Training

Models are trained with adversarial examples to build robustness.

Differential Privacy

Limits how much any single record can influence a model's output, which protects individuals' sensitive information.

Federated Learning

Distributes training across devices without centralizing data, reducing exposure.

Homomorphic Encryption

Enables computations on encrypted data, ensuring confidentiality.

Secure Multiparty Computation

Allows collaborative ML training across organizations without exposing private data.

Regulatory and Compliance Considerations

Governments are now stepping in to regulate AI security:

- GDPR: Requires organizations to ensure AI systems protect personal data.

- HIPAA: In healthcare, ML systems must meet strict data privacy requirements.

- NIST AI Risk Management Framework (2023): Provides guidelines for secure AI adoption.

- ISO/IEC Standards: Emerging global standards for ML system robustness.

The Role of Explainable AI in Security

Black-box ML models are difficult to secure because we don’t know how they make decisions. Explainable AI (XAI) enhances security by:

- Identifying when an adversarial input caused an anomaly

- Increasing trust among stakeholders

- Helping compliance teams meet regulatory requirements

Tools and Frameworks for Machine Learning Security

- IBM Adversarial Robustness Toolbox (ART)

- Microsoft Counterfit

- TensorFlow Privacy

- CleverHans

- PySyft (for secure federated learning)

Industry Applications of Machine Learning Security

Finance

Banks use ML to detect fraud—but adversarial attacks can undermine these systems. ML security ensures resilient fraud prevention.

Healthcare

Patient data is sensitive; ML security protects against model inversion attacks.

Autonomous Vehicles

Robust ML models ensure vehicles interpret road signs correctly, preventing accidents.

Cybersecurity

ML is used to detect malware; securing these models prevents adversarial bypassing.

Government & Defense

AI security is critical in national defense systems against cyberwarfare.

Emerging Attack Vectors in ML Security

- Trojan Attacks (Backdoors in Models)

Attackers embed hidden triggers in a model during training. For example, an image classifier may behave normally but misclassify whenever a specific pixel pattern (the “trigger”) appears. These are hard to detect and extremely dangerous.

- Supply Chain Attacks on ML Models

With the popularity of open-source ML models (Hugging Face, TensorFlow Hub), attackers inject malicious pre-trained models into repositories. When businesses reuse them, vulnerabilities spread widely.

- Membership Inference Attacks

Attackers determine whether a particular record was part of the training dataset, compromising privacy (critical for healthcare/finance ML).

Security Risks in Large Language Models (LLMs)

As enterprises adopt LLMs (like GPT, Claude, Gemini), new security challenges emerge:

- Prompt Injection Attacks – Malicious prompts that trick LLMs into ignoring safety rules.

- Data Exfiltration – Attackers extract confidential information from fine-tuned models.

- Hallucination Exploits – Incorrect outputs are manipulated to spread misinformation.

- Model Bias Exploitation – Attackers intentionally exploit bias to harm decision-making systems.

Red Teaming for ML Security

Organizations now adopt AI Red Teaming—specialized teams simulate adversarial attacks on ML systems.

- Test robustness of deployed ML models.

- Identify hidden vulnerabilities before real attackers do.

- Red teaming is now becoming a regulatory requirement for high-risk AI applications in the EU and US.

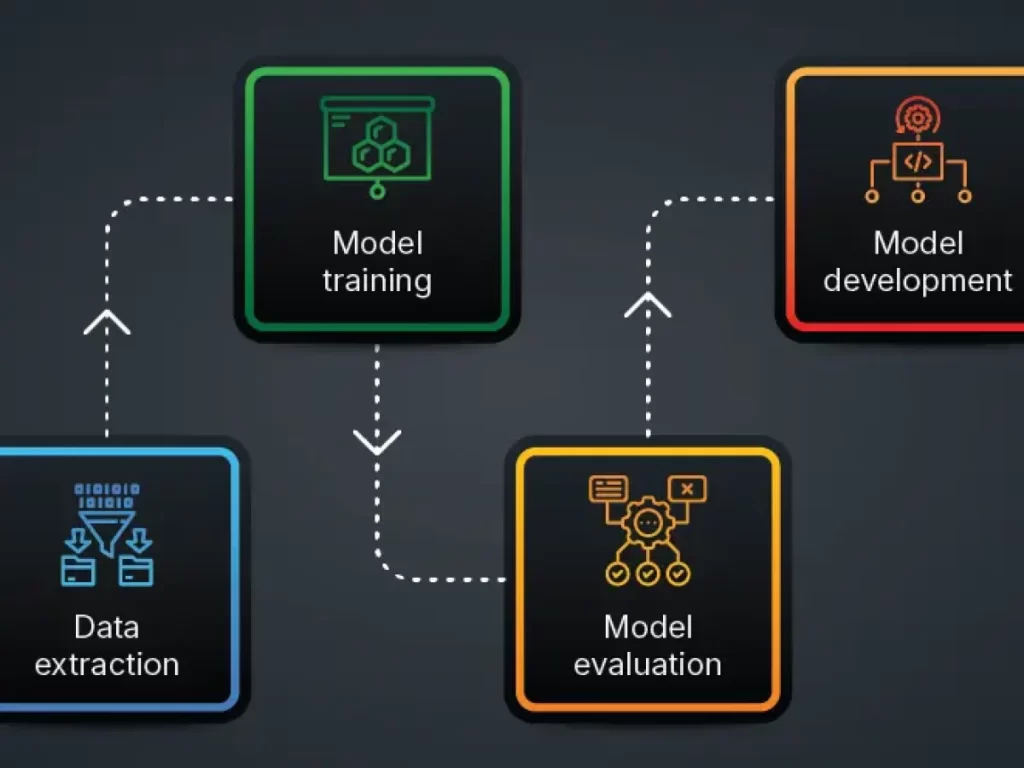

ML Security Across the Lifecycle

Security is not a one-time fix. It must cover the entire ML lifecycle:

- Data Collection – Validate provenance, watermark datasets.

- Model Training – Use secure enclaves (e.g., Intel SGX) to prevent tampering.

- Model Deployment – Secure containers and encrypted model files.

- Monitoring – Continuous anomaly detection for adversarial queries.

- Decommissioning – Securely destroy outdated models to avoid leaks.

Explainability as a Security Shield

- SHAP and LIME can be used not only for interpretability but also for attack detection.

- Example: If a model suddenly gives weight to irrelevant features, it could signal an ongoing adversarial attack.

Hardware-Level ML Security

- Trusted Execution Environments (TEE) – Isolate ML computations to prevent tampering.

- Edge Device Security – IoT devices running ML are vulnerable; secure firmware and lightweight encryption are crucial.

- GPU Vulnerabilities – Researchers found that GPUs can leak memory contents, exposing model parameters.

Blockchain for Machine Learning Security

- A blockchain ledger can support data provenance by recording dataset origins in a tamper-evident way.

- Smart contracts can enforce agreed rules for ML model sharing.

- Decentralized training coordinated through a blockchain reduces reliance on a single point of failure.

Metrics for Measuring ML Security

Traditional accuracy isn’t enough—organizations need security-specific metrics:

- Robust Accuracy: Model accuracy under adversarial conditions.

- Attack Success Rate (ASR): Percentage of adversarial inputs that fool the model.

- Certified Robustness Bounds: Mathematical guarantees that no perturbation within a specified size can change the model's prediction.
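The first two metrics are simple to compute once you have clean inputs and their adversarial counterparts. The sketch below uses a hypothetical stand-in classifier and made-up data. It also assumes one common convention for ASR, counting only inputs the model got right before the attack; other definitions exist.

```python
def robust_accuracy(model, adversarial_inputs, labels):
    """Fraction of adversarial inputs the model still classifies correctly."""
    correct = sum(model(x) == y for x, y in zip(adversarial_inputs, labels))
    return correct / len(labels)

def attack_success_rate(model, clean_inputs, adversarial_inputs, labels):
    """Among inputs classified correctly when clean, the fraction the attack flips."""
    attempted = succeeded = 0
    for x, x_adv, y in zip(clean_inputs, adversarial_inputs, labels):
        if model(x) != y:
            continue            # already wrong, so not counted as an attack target
        attempted += 1
        succeeded += model(x_adv) != y
    return succeeded / attempted if attempted else 0.0

def model(x):
    """Hypothetical stand-in classifier: threshold at 0.5."""
    return int(x >= 0.5)

clean = [0.9, 0.8, 0.1, 0.2, 0.6]
adversarial = [0.4, 0.7, 0.3, 0.6, 0.45]     # hypothetical perturbed copies
labels = [1, 1, 0, 0, 1]

print("robust accuracy:    ", robust_accuracy(model, adversarial, labels))
print("attack success rate:", attack_success_rate(model, clean, adversarial, labels))
```

Reporting both numbers alongside clean accuracy makes it easier to see what a defense costs and what it buys.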

Security in Federated Learning

While federated learning protects data privacy, it also introduces risks:

- Model Poisoning – Malicious participants corrupt shared updates.

- Gradient Leakage – Attackers infer training data from gradients.

Solutions include:

- Secure Aggregation

- Differential Privacy

- Byzantine-resilient algorithms
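One well-known Byzantine-resilient rule is the coordinate-wise median, sketched below next to a plain average for comparison. The update vectors are hypothetical, and production systems typically combine a robust aggregator with the other defenses listed above.

```python
from statistics import median

def mean_aggregate(client_updates):
    """Plain averaging: a single extreme update can drag the result arbitrarily far."""
    n = len(client_updates)
    return [sum(coord) / n for coord in zip(*client_updates)]

def median_aggregate(client_updates):
    """Coordinate-wise median: stays bounded as long as most clients are honest."""
    return [median(coord) for coord in zip(*client_updates)]

honest = [[0.10, -0.20], [0.12, -0.18], [0.09, -0.22], [0.11, -0.21]]
poisoned = [[50.0, 50.0]]                  # one malicious participant
updates = honest + poisoned

print("mean:  ", mean_aggregate(updates))
print("median:", median_aggregate(updates))
```

The averaged update is dominated by the poisoned vector, while the median stays within the range of the honest updates.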

Industry-Specific Advanced Cases

- Healthcare: ML models used in diagnostic imaging are being tested with adversarial images that mimic tumors, risking false diagnosis.

- Finance: Credit scoring models are targets of model inversion to extract user profiles.

- Defense: Military drone ML vision systems are vulnerable to adversarial camouflage.

The Rise of ML Security-as-a-Service (MLSaaS)

Companies like HiddenLayer and Robust Intelligence now offer ML security platforms. These provide:

- Continuous model scanning

- Adversarial simulation environments

- Compliance automation for AI laws

Zero-Trust Architectures for ML Systems

- Zero-trust is moving into AI deployments, meaning no entity (user, device, or process) is automatically trusted.

- Applied to ML: every data request, model query, or pipeline action is authenticated and validated.

- Helps prevent insider attacks and unauthorized API access to ML models.
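A small sketch of what "every model query is authenticated" can look like in practice: each request carries an HMAC tag computed with a per-client secret, and the server rejects anything that fails verification. The key, payload fields, and function names are hypothetical. A real deployment would also add timestamps or nonces to stop replayed requests.

```python
import hashlib
import hmac
import json

def sign_request(secret_key: bytes, payload: dict) -> str:
    """Compute an HMAC-SHA256 tag over a canonical JSON encoding of the payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(secret_key, body, hashlib.sha256).hexdigest()

def verify_request(secret_key: bytes, payload: dict, signature: str) -> bool:
    """Accept the query only if the tag matches; compare in constant time."""
    expected = sign_request(secret_key, payload)
    return hmac.compare_digest(expected, signature)

key = b"hypothetical-per-client-secret"
query = {"client": "scoring-service", "features": [0.2, 0.7]}
tag = sign_request(key, query)

print("valid request accepted:", verify_request(key, query, tag))
tampered = {"client": "scoring-service", "features": [0.9, 0.7]}
print("tampered request accepted:", verify_request(key, tampered, tag))
```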

Secure Multi-Party Computation (SMPC) in ML

- SMPC allows multiple parties to train ML models collaboratively without exposing raw data.

- Example: Banks can jointly train fraud detection models while keeping customer data private.

- Security benefit: raw data never leaves its owner, so compromising one participant does not expose the other parties' datasets.
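Additive secret sharing is one of the basic building blocks behind SMPC. The sketch below is a toy illustration, not a training protocol: three hypothetical banks compute a joint total without any party seeing another's number. The modulus and the counts are illustrative assumptions.

```python
import secrets

PRIME = 2**61 - 1   # field modulus; an illustrative choice

def share(value, n_parties):
    """Split `value` into n random shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares

def reconstruct(shares):
    return sum(shares) % PRIME

# Three banks each hold a private fraud count (hypothetical numbers).
private_counts = [120, 75, 310]
all_shares = [share(v, 3) for v in private_counts]

# Party j receives the j-th share from every bank and adds them locally.
partial_sums = [sum(s[j] for s in all_shares) % PRIME for j in range(3)]
total = reconstruct(partial_sums)

print("joint total:", total)
```

Any single share is a uniformly random number, so it reveals nothing about the underlying value on its own. Only the combined partial sums reveal the total.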

Homomorphic Encryption for ML Security

- Allows computations on encrypted data without decryption.

- Critical in healthcare and finance, where exposing raw data creates legal liability.

- Example: Hospitals can use encrypted X-ray images for ML training without revealing patient identities.
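To show what "computing on encrypted data" means, here is a toy version of the Paillier cryptosystem, which supports addition on ciphertexts. The primes are tiny and chosen only for illustration, so this sketch is not secure. Schemes used for ML workloads are considerably more involved.

```python
import math
import secrets

def keygen(p, q):
    """Build a Paillier key pair from two primes (generator fixed to n + 1)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)   # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)                                # modular inverse (Python 3.8+)
    return n, (lam, mu)

def encrypt(n, message):
    """Encrypt an integer 0 <= message < n under public key n."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (1 + message * n) % n2 * pow(r, n, n2) % n2

def decrypt(n, private_key, ciphertext):
    lam, mu = private_key
    return (pow(ciphertext, lam, n * n) - 1) // n * mu % n

def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts."""
    return c1 * c2 % (n * n)

n, private_key = keygen(1009, 1013)        # toy primes, far too small for real use
encrypted_sum = add_encrypted(n, encrypt(n, 20), encrypt(n, 22))

print("decrypted sum:", decrypt(n, private_key, encrypted_sum))
```

The party performing the addition never sees 20 or 22. Only the key holder can decrypt the result.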

Watermarking & Fingerprinting ML Models

- Companies now watermark models to prove ownership and detect theft.

- Passive fingerprinting can identify if a model was stolen by analyzing its predictions on special queries.

- Protects against model extraction attacks where adversaries replicate proprietary ML models.
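The fingerprinting idea can be sketched as follows: the owner keeps a secret set of trigger queries with unusual expected outputs, then measures how often a suspect model reproduces them. Every name, input, and label here is hypothetical, and the two "models" are toy functions standing in for real classifiers.

```python
def fingerprint_match(suspect_model, trigger_inputs, expected_outputs):
    """Fraction of secret trigger queries on which the suspect matches the owner."""
    hits = sum(suspect_model(x) == y for x, y in zip(trigger_inputs, expected_outputs))
    return hits / len(trigger_inputs)

# Secret trigger set with deliberately unusual outputs (hypothetical).
trigger_inputs = [(3, 7), (11, 2), (5, 5), (8, 1)]
owner_outputs = ["cat", "truck", "cat", "ship"]
_memorized = dict(zip(trigger_inputs, owner_outputs))

def stolen_copy(x):
    """Toy model that inherited the owner's behavior on the trigger set."""
    return _memorized.get(x, "dog")

def independent_model(x):
    """Toy model trained elsewhere; it has no reason to agree on the triggers."""
    return "dog" if sum(x) % 2 == 0 else "cat"

print("stolen copy match:      ", fingerprint_match(stolen_copy, trigger_inputs, owner_outputs))
print("independent model match:", fingerprint_match(independent_model, trigger_inputs, owner_outputs))
```

A match rate far above what chance would produce is evidence of copying, not proof, so the decision threshold should be chosen with false accusations in mind.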

ML Supply Chain Security Framework

- Just like DevSecOps, MLOps needs SecMLOps:

- Vetting third-party datasets

- Validating pre-trained models

- Monitoring pipeline dependencies

- Example: A poisoned open-source dataset could introduce systemic vulnerabilities into global ML systems.
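One basic control for validating pre-trained models and third-party datasets is to pin a SHA-256 digest when an artifact is vetted and to check it again before every load. The function names below are hypothetical. A digest check detects tampering after vetting, but it says nothing about whether the original artifact was safe.

```python
import hashlib
import hmac

def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large model artifacts need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_artifact(path, pinned_digest):
    """Return True only if the file matches the digest recorded when it was vetted."""
    return hmac.compare_digest(sha256_of_file(path), pinned_digest.lower())
```

In a pipeline, a failed `verify_artifact` check would typically stop the job before the model or dataset is loaded.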

Continuous Verification with AI Observability

- ML security isn’t static—models drift, data evolves, and attackers adapt.

- AI Observability platforms (e.g., Arize AI, Fiddler AI) now add security lenses:

- Detect adversarial query spikes

- Monitor model fairness & bias drift

- Alert on anomalous decision patterns

Regulatory Compliance & AI Security

- With AI regulations and policy frameworks (EU AI Act, US Blueprint for an AI Bill of Rights), security is becoming a compliance concern.

- Categories of obligations:

- High-Risk AI (finance, healthcare, defense) → mandatory adversarial testing

- Transparency requirements → logs for model access & retraining events

- Non-compliance could mean hefty fines and reputational damage.

Differential Privacy in ML Security

- Protects individual-level data contributions in a dataset.

- Example: A hospital applying differential privacy ensures ML models can’t reveal if a specific patient’s record was used.

- Key in preventing membership inference attacks.
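The core mechanism can be illustrated on a single statistic. The sketch below releases a count with Laplace noise calibrated to a privacy budget `epsilon`. The records and the budget are hypothetical, and this is an illustration of the principle only. Private model training uses related but more elaborate techniques, and production libraries take extra care with floating-point details.

```python
import random

def laplace_noise(scale, rng):
    """Laplace(0, scale) noise, drawn as the difference of two exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)

def private_count(records, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy.

    Adding or removing one record changes a count by at most 1,
    so the sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical patient records: (age, has_condition).
records = [(34, True), (51, False), (47, True), (29, False), (62, True)]
rng = random.Random()
noisy = private_count(records, lambda r: r[1], epsilon=1.0, rng=rng)

print("noisy count of patients with the condition:", noisy)
```

A smaller `epsilon` adds more noise and gives stronger privacy, at the cost of a less accurate answer.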

Security Testing Tools for ML

- Adversarial Robustness Toolbox (ART) – from IBM, simulates attacks/defenses.

- CleverHans – popular Python library for adversarial examples.

- SecML – adversarial ML research toolkit.

Human-in-the-Loop for Secure ML

- While automation is powerful, humans are essential in spotting anomalies that models may ignore.

- Hybrid setups use:

- ML for fast detection of adversarial queries

- Human review for edge cases & attack confirmation

- Especially valuable in fraud detection, cybersecurity, and healthcare AI.

Incident Response for ML Systems

- Traditional cybersecurity has playbooks for breaches—ML now needs the same.

- Incident response in ML security should include:

- Attack detection and classification (e.g., poisoning vs. evasion).

- Rollback to safe model checkpoints.

- Forensic analysis of compromised datasets or adversarial queries.

- Disaster recovery plans for ML pipelines are becoming mandatory.

AI Red-Teaming Meets Bug Bounty Programs

- Just like software, ML models are now included in bug bounty platforms.

- Example: Microsoft and Google reward researchers who expose adversarial vulnerabilities.

- Crowdsourcing security = more resilient ML deployments.

Human-Centric Risks: Social Engineering Meets ML

- Attackers don’t always hack the model—they hack the people around it.

- Example: Engineers can be tricked into deploying poisoned datasets or ignoring security patches.

- Training ML teams in security awareness is as critical as model hardening.

Future: Convergence of ML Security & Cybersecurity

- By 2030, ML Security is expected to merge with mainstream cybersecurity frameworks (NIST, ISO 27001).

- Enterprises will treat ML models as core digital assets requiring:

- Firewalls for ML APIs

- Intrusion detection for model queries

- Cryptographic audits of ML training pipelines

Challenges in Implementing Machine Learning Security

- Lack of standardization across industries

- High computational costs of adversarial training

- Difficulty in balancing security with performance

- Shortage of skilled AI security professionals

Future of Machine Learning Security: Trends to Watch

- Quantum-Safe ML Security: Preparing models for the quantum computing era

- Zero-Trust ML Architectures

- AI-powered Security for AI (AI vs AI attacks)

- Blockchain for AI Integrity Verification

Conclusion

Machine learning is transforming industries, but with great power comes great responsibility. As AI adoption accelerates, so does the need for machine learning security. Organizations that fail to secure their models risk not only financial losses but also reputational damage and compliance penalties. Investing in robust ML security is no longer optional; it is a necessity for building trustworthy AI systems in the digital future.

FAQs

What is machine learning security?

Machine learning security is the practice of protecting AI and ML systems from threats, attacks, and vulnerabilities, ensuring models remain reliable, accurate, and resistant to data manipulation or adversarial inputs.

What is the security framework for AI?

A security framework for AI is a structured approach that defines policies, practices, and safeguards—such as data protection, model robustness, transparency, and monitoring—to secure AI systems against cyber threats and adversarial attacks.

What is the security AI tool?

A security AI tool is software that uses artificial intelligence to detect, prevent, and respond to cyber threats—examples include Darktrace, IBM QRadar, CrowdStrike Falcon, and Palo Alto Cortex XDR, which leverage ML for real-time threat intelligence and defense.

What is AI ML security?

AI/ML security refers to the protection of artificial intelligence and machine learning models, data, and systems from attacks, manipulation, or misuse, ensuring their integrity, confidentiality, and trustworthy performance.

Can machine learning be secure?

Yes, machine learning can be made secure by implementing robust data validation, adversarial defense techniques, model monitoring, and security frameworks, which help protect against manipulation, bias, and cyberattacks.