When you want a model stronger than a single decision tree but more flexible than a simple linear model, Gradient Boosting Decision Tree (GBDT) shines. It builds an ensemble of weak learners (usually shallow trees) in a sequential way — each new tree is trained to correct the mistakes of the ensemble so far. Over the years, GBDT has become one of the most reliable and powerful tools for tabular data modeling.

In this guide, we explore how GBDT works under the hood, how to tune it, and where it performs well in practice. We also cover advanced topics such as privacy-preserving GBDT, multi-task variants, and recent research.

What Is a Gradient Boosting Decision Tree?

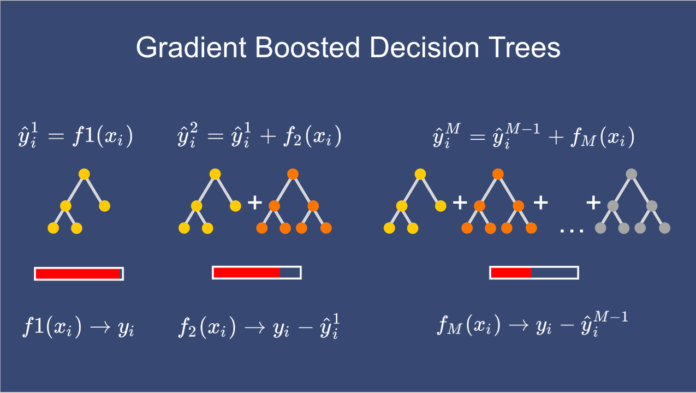

A Gradient Boosting Decision Tree is an ensemble method where the base learners are decision trees (weak models), and they are combined in a boosting manner. The term “gradient boosting” arises because the algorithm uses gradients of a loss function to guide the training of each new tree.

Informally, one can see it as:

- Start with an initial simple model (often constant prediction).

- Iteratively add trees, each aiming to reduce the residual errors (or pseudo‐residuals) of the current ensemble.

- Each tree’s contribution is scaled (via learning rate) and added to the model.

Modern GBDT implementations also allow custom differentiable loss functions (not just squared error / logistic) to tailor for regression, classification, ranking, or other tasks.

Why Use Gradient Boosting over Single Decision Trees

Single decision trees are interpretable and fast, but they tend to overfit and can suffer from high variance. Boosting addresses this by building a sequence of weak learners, each focusing on the remaining errors.

Compared to bagging (e.g. random forest), which reduces variance by averaging many independently trained models, boosting mainly reduces bias through sequential error correction. Its variance is kept in check by shrinkage, subsampling, and shallow trees.

Because of its ability to gradually refine residuals, GBDT often yields higher predictive accuracy on structured / tabular data, which is why it is widely used in industry and competition settings.

How GBDT Works — Step by Step

Loss Functions & Pseudo-Residuals

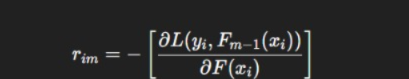

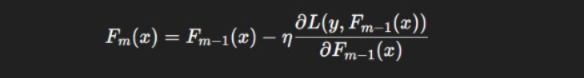

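The core concept is to minimize a loss function $L(y, F(x))$. At stage $m$, the algorithm computes pseudo-residuals, the negative gradient of the loss with respect to the current prediction for each sample $i$:

$$r_{im} = -\left[\frac{\partial L(y_i, F(x_i))}{\partial F(x_i)}\right]_{F = F_{m-1}}$$

These residuals represent the direction and magnitude of error for each sample. A new tree $h_m(x)$ is trained to predict $r_{im}$.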
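For two common losses the pseudo-residuals have simple closed forms. The sketch below assumes squared error written as 0.5 * (y - F)^2 and log loss on a raw log-odds score with labels in {0, 1}; the function names are illustrative and not taken from any library.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def pseudo_residuals_squared(y, f):
    """Negative gradient of 0.5 * (y - F)^2 with respect to F: the plain residual."""
    return [yi - fi for yi, fi in zip(y, f)]

def pseudo_residuals_logistic(y, f):
    """Negative gradient of log loss with respect to the raw score F (log-odds)."""
    return [yi - sigmoid(fi) for yi, fi in zip(y, f)]

# Regression: targets and current ensemble predictions.
y_reg = [3.0, -1.0, 2.0]
f_reg = [2.5, 0.0, 2.0]
res_reg = pseudo_residuals_squared(y_reg, f_reg)
print(res_reg)

# Binary classification: labels and current raw scores.
y_clf = [1, 0, 1]
f_clf = [0.0, 0.0, 2.0]
res_clf = pseudo_residuals_logistic(y_clf, f_clf)
print(res_clf)
```

In both cases the next tree would be fitted to these values rather than to the original targets.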

Stage-Wise Additive Modeling

The model is updated stage by stage:

$$F_m(x) = F_{m-1}(x) + \eta \cdot h_m(x)$$

Here η is the learning rate (also called shrinkage). Each tree contributes a scaled prediction to the ensemble.
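The stage-wise update can be sketched as a small boosting loop. This is a minimal illustration, assuming squared-error loss, a single numeric feature, and depth-1 trees (stumps) as weak learners; `fit_stump` and `fit_gbdt` are hypothetical helper names, not a library API.

```python
def fit_stump(x, r):
    """Depth-1 regression tree on one feature: pick the threshold with the lowest squared error."""
    best = None
    xs = sorted(set(x))
    for lo, hi in zip(xs, xs[1:]):
        t = (lo + hi) / 2.0
        left = [ri for xi, ri in zip(x, r) if xi <= t]
        right = [ri for xi, ri in zip(x, r) if xi > t]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((ri - left_mean) ** 2 for ri in left)
               + sum((ri - right_mean) ** 2 for ri in right))
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    _, t, left_mean, right_mean = best
    return lambda v: left_mean if v <= t else right_mean

def fit_gbdt(x, y, n_trees=50, eta=0.1):
    f0 = sum(y) / len(y)                      # initial constant model
    pred = [f0] * len(y)
    trees = []
    for _ in range(n_trees):
        resid = [yi - pi for yi, pi in zip(y, pred)]   # pseudo-residuals for squared error
        h = fit_stump(x, resid)
        trees.append(h)
        # Stage-wise update: new prediction = old prediction + eta * tree output.
        pred = [pi + eta * h(xi) for pi, xi in zip(pred, x)]
    return lambda v: f0 + eta * sum(h(v) for h in trees)

def mse(model, x, y):
    return sum((model(xi) - yi) ** 2 for xi, yi in zip(x, y)) / len(y)

x = [float(i) for i in range(10)]
y = [0.1 * xi * xi for xi in x]

errors = []
for n_trees in (0, 5, 50):
    model = fit_gbdt(x, y, n_trees=n_trees, eta=0.1)
    errors.append(mse(model, x, y))
    print(n_trees, round(errors[-1], 4))
```

Training error falls as trees are added; whether that carries over to unseen data is what the learning rate and the other regularization settings control.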

In more advanced versions, each leaf of the tree may have its own multiplier (line search) to minimize loss in that region.

Line Search / Shrinkage

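After fitting the new tree to the residuals, a line search may be used to find the multiplier γ that minimizes the loss along the direction of the new tree:

$$\gamma_m = \arg\min_{\gamma} \sum_{i=1}^{n} L\big(y_i, F_{m-1}(x_i) + \gamma\, h_m(x_i)\big)$$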

Shrinkage helps mitigate overfitting by reducing the step size of each tree. Smaller η requires more trees for the same performance but often leads to better generalization.
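A line search followed by shrinkage might look like the sketch below. It assumes the total loss is convex in the multiplier and uses a simple ternary search over a fixed interval; the tree outputs in `h` are made-up numbers, and for squared error the result is compared against the closed-form multiplier.

```python
def line_search(loss, y, f_prev, h, lo=0.0, hi=10.0, iters=100):
    """Ternary search for the gamma that minimizes sum_i loss(y_i, f_prev_i + gamma * h_i)."""
    def total(gamma):
        return sum(loss(yi, fi + gamma * hi) for yi, fi, hi in zip(y, f_prev, h))
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if total(m1) < total(m2):
            hi = m2
        else:
            lo = m1
    return (lo + hi) / 2.0

def squared_loss(y, f):
    return 0.5 * (y - f) ** 2

y = [3.0, 1.0, 4.0, 1.0]
f_prev = [2.0, 2.0, 2.0, 2.0]        # current ensemble predictions
h = [0.5, -0.4, 1.1, -0.6]           # hypothetical outputs of the new tree

gamma = line_search(squared_loss, y, f_prev, h)

# For squared error the optimum is available in closed form.
closed_form = (sum((yi - fi) * hi for yi, fi, hi in zip(y, f_prev, h))
               / sum(hi * hi for hi in h))

eta = 0.1                             # shrinkage applied on top of the line-search step
f_new = [fi + eta * gamma * hi for fi, hi in zip(f_prev, h)]
print(round(gamma, 4), round(closed_form, 4))
```

The line search finds the best full step, and shrinkage then deliberately takes only a fraction of it.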

Key Hyperparameters & Their Effects

| Hyperparameter | What It Controls | Effect / Tradeoff |
| --- | --- | --- |
| n_estimators | Number of boosting stages / trees | More trees → better fit (if not overfitting) |
| learning_rate | The shrinkage applied to each tree | Smaller rate → more cautious learning but needs more trees |
| max_depth | Maximum depth of each tree | Controls complexity; deeper → risk of overfitting |
| min_samples_split / min_samples_leaf | Minimum samples required to split / leaf | Prevents overfitting by enforcing node size |
| subsample | Fraction of samples used in each tree (stochastic boosting) | Helps reduce variance by adding randomness |
| colsample_bytree / colsample_bylevel | Fraction of features used per tree or per level | Adds diversity among trees |
| regularization (λ, α) | L2 / L1 regularization on weights or leaf outputs | Penalizes model complexity (in implementations like XGBoost) |

Proper tuning of these parameters is vital. For example, a small learning rate with a large number of trees often generalizes better than a large learning rate with few trees, at the cost of longer training.
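To make the two sampling hyperparameters concrete, here is a rough sketch of what `subsample` and `colsample_bytree` do before each tree is built. The helper name and the rounding rule are assumptions for illustration; real libraries differ in the details.

```python
import random

def sample_rows_and_columns(n_rows, n_cols, subsample, colsample_bytree, rng):
    """Pick the row and column indices that a single tree is allowed to see."""
    n_r = max(1, int(round(subsample * n_rows)))
    n_c = max(1, int(round(colsample_bytree * n_cols)))
    rows = sorted(rng.sample(range(n_rows), n_r))   # sampling without replacement
    cols = sorted(rng.sample(range(n_cols), n_c))
    return rows, cols

rng = random.Random(0)
for tree_idx in range(3):
    rows, cols = sample_rows_and_columns(
        n_rows=10, n_cols=4, subsample=0.8, colsample_bytree=0.5, rng=rng
    )
    print(tree_idx, rows, cols)
```

Each tree sees a different 80% of the rows and half of the features, which is the source of the extra randomness mentioned in the table.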

Variants & Advanced Implementations

GBDT as a concept has spawned many optimized implementations and variants that improve scalability, speed, and handle specialized tasks.

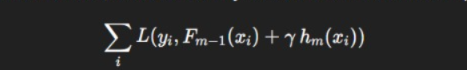

XGBoost

XGBoost is one of the most popular gradient boosting frameworks. It introduces regularization, tree pruning, parallelization, and efficient handling of missing values and sparse data.

It uses second-order (Hessian) information (approximate Newton boosting) to refine the update step.

LightGBM

LightGBM, developed by Microsoft, optimizes for speed and scalability:

- Leaf-wise tree growth (choose leaf with max loss reduction)

- Gradient-based One-Side Sampling (GOSS) and Exclusive Feature Bundling (EFB) for efficiency

- Better for large datasets and high-dimensional data
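The idea behind GOSS can be sketched in a few lines: keep every row with a large gradient, randomly sample from the rest, and up-weight the sampled rows so gradient sums stay roughly unbiased. The function below is a simplified illustration with parameter names chosen for readability, not LightGBM's internal code.

```python
import random

def goss_sample(gradients, top_rate=0.2, other_rate=0.1, seed=0):
    """Return {row_index: weight} for the rows kept by a GOSS-style sampler."""
    n = len(gradients)
    order = sorted(range(n), key=lambda i: abs(gradients[i]), reverse=True)
    n_top = int(top_rate * n)
    n_other = int(other_rate * n)
    top, rest = order[:n_top], order[n_top:]
    rng = random.Random(seed)
    sampled = rng.sample(rest, n_other)
    # Small-gradient rows are amplified to compensate for the rows that were dropped.
    amplify = (1.0 - top_rate) / other_rate
    weights = {i: 1.0 for i in top}
    weights.update({i: amplify for i in sampled})
    return weights

grads = [((-1) ** i) * i / 20.0 for i in range(20)]   # made-up gradients
weights = goss_sample(grads)
print(sorted(weights.items()))
```

With 20 rows, the 4 largest gradients are always kept and 2 of the remaining 16 are sampled with weight 8, so the tree is built from 6 rows instead of 20.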

CatBoost

CatBoost (by Yandex) is designed to handle categorical features smoothly, using internal encodings and careful handling to reduce overfitting.

Other Variants & Research

- SecureBoost / Federated GBDT: A privacy-preserving GBDT for federated learning settings.

- Privacy-Preserving GBDT with differential privacy techniques to add noise while preserving model quality.

- MT-GBM (Multi-Task Gradient Boosting Machine) shares trees across tasks.

- Tree-structured boosting combining features of CART and boosting.

These variants allow GBDT to adapt to collaborative, privacy-sensitive, or multi-output settings.

Use Cases & Real-World Examples

GBDT excels across industries due to its flexibility and performance.

- Finance / Credit Risk: Predicting default probability using highly non-linear interactions.

- Insurance / Claim Modeling: Estimate claim severity, risk scoring.

- Healthcare: Disease prediction, patient risk stratification.

- Retail / Ecommerce: Churn prediction, recommendation systems, demand forecasting.

- Advertising / Ranking: Click-through rate prediction, ranking ads.

- Competitions: Kaggle winners often use XGBoost / LightGBM as backbone models.

Example: A retailer uses GBDT to model customer lifetime value by combining purchase history, demographics, web behavior, and promotional exposure.

Strengths, Limitations & Tradeoffs

Strengths

- High accuracy and robustness

- Handles non-linear interactions well

- Flexible with loss functions

- Works on mixed data types (categorical + numeric)

- Good out-of-box performance with default parameters

Limitations

- Training time can be large, especially for many trees and deep models

- Less interpretable than a single decision tree

- Sensitive to hyperparameters

- Prone to overfitting if not regularized

- Memory-intensive with large datasets

The tradeoff is often between expressive power and interpretability / compute resources.

Interpretability & Feature Importance

Although GBDT is more complex than a single tree, you can still interpret aspects:

- Feature Importance: Many libraries provide importance by gain, split count, or permutation.

- SHAP Values: Explain per-sample contributions of each feature to the prediction.

- Partial Dependence Plots: Show marginal effect of features.

- Surrogate Models: Train a simple interpretable model to approximate GBDT predictions.

These tools help bridge the gap between accuracy and transparency.
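Permutation importance is simple enough to sketch without a library: shuffle one feature column, re-score the model, and record how much the error grows. The `predict` function below is a hypothetical stand-in for a trained GBDT that happens to ignore its second feature.

```python
import random

def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Average increase in MSE when each feature column is shuffled."""
    rng = random.Random(seed)

    def mse(rows):
        return sum((predict(r) - t) ** 2 for r, t in zip(rows, y)) / len(y)

    base = mse(X)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            col = [r[j] for r in X]
            rng.shuffle(col)
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(X, col)]
            increases.append(mse(shuffled) - base)
        importances.append(sum(increases) / n_repeats)
    return importances

def predict(row):
    # Stand-in model: depends on feature 0 only.
    return 3.0 * row[0] + 0.0 * row[1]

X = [[float(i), float((i * 7) % 10)] for i in range(10)]
y = [predict(r) for r in X]

imp = permutation_importance(predict, X, y)
print(imp)
```

The unused feature gets an importance of zero, while shuffling the feature the model relies on raises the error.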

Advanced Theoretical Foundation of Gradient Boosting Decision Tree

Functional Gradient Descent Viewpoint

The gradient boosting process can be viewed as functional gradient descent in function space rather than parameter space.

Unlike traditional optimization (where you update weights θ), GBDT updates the model function F(x) itself.

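At each iteration $m$, we move in the direction opposite to the gradient of the loss functional:

$$F_m(x) = F_{m-1}(x) - \eta \left[\frac{\partial L(y, F(x))}{\partial F(x)}\right]_{F = F_{m-1}}$$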

However, since F(x) is approximated as an ensemble of trees, this derivative is approximated by fitting a regression tree hm(x) to the negative gradient values (pseudo-residuals).

Thus, GBDT can be viewed as performing stage-wise additive modeling to approximate the functional optimum.

This interpretation connects boosting to general gradient-based optimization, enabling theoretical insights into convergence and regularization.
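A small numeric sketch can make the function-space view tangible. It assumes squared-error loss and an idealized weak learner that reproduces the negative gradient exactly at every training point, so the "function" is just the list of predictions at those points.

```python
def functional_gradient_step(y, f, eta):
    """One step of F <- F - eta * dL/dF for L = 0.5 * (y - F)^2 at the training points."""
    grad = [fi - yi for yi, fi in zip(y, f)]          # dL/dF for squared error
    return [fi - eta * gi for fi, gi in zip(f, grad)]

y = [2.0, -1.0, 0.5]
f = [0.0, 0.0, 0.0]        # initial model: predict zero everywhere
eta = 0.5
n_steps = 5

for m in range(1, n_steps + 1):
    f = functional_gradient_step(y, f, eta)
    residuals = [yi - fi for yi, fi in zip(y, f)]
    print(m, residuals)
```

Under these assumptions every residual shrinks by a factor of (1 - eta) per step. A real tree only approximates the negative gradient, so actual convergence is slower and less regular.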

Connection to Additive Models and Regularization Paths

GBDT builds additive models where each weak learner adds complexity gradually.

Mathematically, this creates a regularization path, analogous to how LASSO (L1 regularization) or Ridge (L2) controls model capacity by shrinking coefficients.

In GBDT, the learning rate (shrinkage parameter) acts like a step-size regularizer.

Small learning rates take smaller steps along this path, so the complexity of the ensemble grows more gradually. Combined with early stopping, this often generalizes better, loosely analogous to gradient descent that is stopped early.

Thus, hyperparameters like:

- learning_rate

- n_estimators

- max_depth

jointly control the bias–variance tradeoff along this regularization path.

Mathematical Optimization in Gradient Boosting

Taylor Expansion in Advanced Implementations

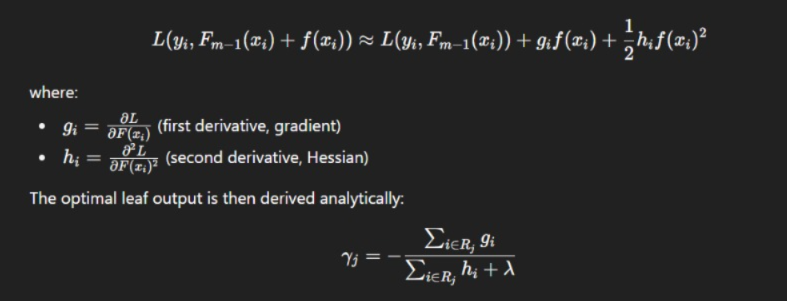

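Modern GBDT libraries like XGBoost and LightGBM extend classic boosting by applying a second-order Taylor expansion to approximate the loss function. With $g_i$ and $H_i$ denoting the first and second derivatives of the loss with respect to the current prediction for sample $i$, the objective for the new tree $h_m$ is approximated as:

$$\mathcal{L}^{(m)} \approx \sum_{i=1}^{n} \left[ g_i\, h_m(x_i) + \tfrac{1}{2} H_i\, h_m(x_i)^2 \right] + \Omega(h_m)$$

Minimizing this expression gives the optimal output for each leaf:

$$w_j^{*} = -\frac{\sum_{i \in R_j} g_i}{\sum_{i \in R_j} H_i + \lambda}$$

where $R_j$ represents samples in leaf $j$, $\Omega(h_m)$ is the regularization term, and λ is the L2 regularization parameter.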

This approach yields faster convergence and allows regularization directly within the optimization, giving rise to Newton boosting or second-order gradient boosting.
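The Newton-style leaf output and the gain of a candidate split can be computed directly from gradient and Hessian sums. The sketch below assumes log loss on raw scores and follows the formulas published for XGBoost; the function names and the `complexity_penalty` argument (the per-leaf penalty, usually written γ) are illustrative.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def grad_hess_logistic(y, f):
    """First and second derivatives of log loss with respect to the raw score."""
    p = [sigmoid(fi) for fi in f]
    g = [pi - yi for pi, yi in zip(p, y)]
    h = [pi * (1.0 - pi) for pi in p]
    return g, h

def leaf_weight(g, h, lam):
    """Optimal leaf output: -sum(g) / (sum(h) + lambda)."""
    return -sum(g) / (sum(h) + lam)

def split_gain(g_left, h_left, g_right, h_right, lam, complexity_penalty=0.0):
    """Loss reduction from splitting one leaf into two."""
    def score(g, h):
        return sum(g) ** 2 / (sum(h) + lam)
    parent = score(g_left + g_right, h_left + h_right)
    return 0.5 * (score(g_left, h_left) + score(g_right, h_right) - parent) - complexity_penalty

y = [1, 1, 0, 0]
f = [0.0, 0.0, 0.0, 0.0]            # current raw scores
g, h = grad_hess_logistic(y, f)

w_left = leaf_weight(g[:2], h[:2], lam=1.0)
w_right = leaf_weight(g[2:], h[2:], lam=1.0)
gain = split_gain(g[:2], h[:2], g[2:], h[2:], lam=1.0)
print(round(w_left, 4), round(w_right, 4), round(gain, 4))
```

A larger λ pulls both leaf outputs toward zero and lowers the gain, which is how the L2 term discourages splits that are supported by little evidence.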

Regularization Beyond Tree Depth

Traditional decision trees are regularized by limiting depth, but in GBDT, advanced forms include:

- L1 (Lasso) and L2 (Ridge) regularization: Controls leaf output magnitude.

- Tree pruning: Removes splits with negligible gain post-construction (used in XGBoost).

- Dropout Boosting (DART): Randomly drops trees during training to prevent overfitting.

- Early stopping: Cease training when validation loss stops improving after n rounds.

These strategies ensure GBDT models remain compact and robust, even with thousands of trees.
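Of these strategies, early stopping is the easiest to sketch. The helper below assumes one validation loss per boosting round and a patience counted in rounds without improvement; `best_iteration` is a hypothetical name, and the loss values are invented.

```python
def best_iteration(val_losses, patience):
    """Return (best_round, stop_round) for patience-based early stopping."""
    best_idx, best_loss = 0, float("inf")
    for i, loss in enumerate(val_losses):
        if loss < best_loss:
            best_idx, best_loss = i, loss
        elif i - best_idx >= patience:
            return best_idx, i        # no improvement for `patience` rounds
    return best_idx, len(val_losses) - 1

val_losses = [0.90, 0.70, 0.60, 0.55, 0.56, 0.57, 0.58, 0.54]
result = best_iteration(val_losses, patience=3)
print(result)
```

Training stops at round 6 and keeps the model from round 3. The slightly better loss at round 7 is never reached, which illustrates the tradeoff involved in choosing the patience.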

Scalability and Efficiency: From CPU to Distributed Systems

Parallelization Strategies

Boosting is inherently sequential across trees, because each tree depends on the residuals left by the previous ones. Modern frameworks therefore speed up the work within each tree and across the data:

- Histogram-based splitting (LightGBM): Continuous features are bucketed into discrete bins to reduce computation.

- Feature and data parallelism: Parallelize split-finding and gradient computation.

- Out-of-core computation: Handles datasets larger than memory via data streaming (XGBoost).

- GPU acceleration: CUDA-based gradient computation and histogram updates.

These optimizations make GBDT feasible for datasets with hundreds of millions of samples.
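Histogram-based split finding can be illustrated on a single feature. The sketch below assumes equal-width bins and a squared-error style gain on gradient sums; production libraries choose bin boundaries differently and also track Hessians, so treat this as a simplified picture.

```python
def histogram_split(x, g, n_bins=4):
    """Bucket one feature into bins, then scan bin edges for the best split."""
    lo, hi = min(x), max(x)
    width = (hi - lo) / n_bins

    def bin_of(v):
        return min(int((v - lo) / width), n_bins - 1)

    sum_g = [0.0] * n_bins
    count = [0] * n_bins
    for xi, gi in zip(x, g):            # one pass over the data builds the histogram
        b = bin_of(xi)
        sum_g[b] += gi
        count[b] += 1

    total_g, total_n = sum(sum_g), sum(count)
    best_gain, best_edge = 0.0, None
    g_left, n_left = 0.0, 0
    for b in range(n_bins - 1):         # only n_bins - 1 candidate splits are checked
        g_left += sum_g[b]
        n_left += count[b]
        g_right, n_right = total_g - g_left, total_n - n_left
        if n_left == 0 or n_right == 0:
            continue
        gain = g_left ** 2 / n_left + g_right ** 2 / n_right - total_g ** 2 / total_n
        if gain > best_gain:
            best_gain, best_edge = gain, lo + (b + 1) * width
    return best_edge, best_gain

x = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
g = [-1.0, -1.0, -1.0, -1.0, 1.0, 1.0, 1.0, 1.0, 1.0]   # made-up gradients

edge, gain = histogram_split(x, g)
print(edge, round(gain, 4))
```

The scan evaluates 3 candidate thresholds instead of 8, and the saving grows with the number of rows because the cost of the scan depends on the bin count, not the sample count.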

Distributed GBDT

Enterprise-scale frameworks like XGBoost on Spark, LightGBM on Dask, and CatBoost Distributed enable horizontal scaling across clusters.

Distributed versions use all-reduce operations to aggregate gradient and histogram information across workers efficiently.

For cloud-native environments, services like:

- Google Vertex AI Decision Forests

- Azure Machine Learning LightGBM

- AWS SageMaker XGBoost

offer managed, distributed gradient boosting pipelines that integrate seamlessly with cloud storage and monitoring systems.

Advanced Interpretability: Making GBDT Explainable

SHAP (SHapley Additive exPlanations)

SHAP is one of the most widely used frameworks for interpreting GBDT models.

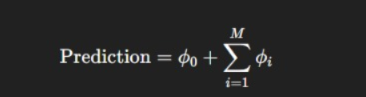

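It decomposes each prediction into feature-level contributions that satisfy local accuracy, missingness, and consistency. The additive form is:

$$f(x) = \phi_0 + \sum_{i=1}^{M} \phi_i$$

where $\phi_0$ is the baseline prediction, $M$ is the number of features, and $\phi_i$ is the SHAP value for feature $i$.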

Using SHAP summary plots, decision makers can visualize how each feature influences predictions across datasets, aiding model transparency.
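For a model with only a few features, Shapley values can be computed by brute force, which makes the additive property easy to check. The sketch below assumes a single reference row as the baseline and a hypothetical `predict` function standing in for a trained GBDT; tree-specific SHAP algorithms reach the same kind of attribution far more efficiently.

```python
from itertools import permutations

def shapley_values(predict, x, baseline):
    """Exact Shapley values: average marginal contribution over all feature orderings.

    Features that have not been revealed yet keep their baseline value.
    """
    n = len(x)
    phi = [0.0] * n
    orders = list(permutations(range(n)))
    for order in orders:
        current = list(baseline)
        prev = predict(current)
        for j in order:
            current[j] = x[j]
            new = predict(current)
            phi[j] += new - prev
            prev = new
    return [p / len(orders) for p in phi]

def predict(row):
    # Stand-in model with one additive term and one interaction term.
    return 2.0 * row[0] + row[1] * row[2]

x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]

phi = shapley_values(predict, x, baseline)
print(phi)
print(predict(baseline) + sum(phi), predict(x))
```

The additive feature receives its full effect, the interaction is split evenly between the two features involved, and the baseline plus all contributions reproduces the prediction.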

Surrogate Models and Rule Extraction

To simplify GBDT interpretation:

- Surrogate decision trees approximate GBDT outputs using a single interpretable tree.

- RuleFit algorithms extract linear combinations of decision rules derived from GBDT trees, blending interpretability with accuracy.

- Partial Dependence Plots (PDP) and Individual Conditional Expectation (ICE) plots visualize feature effect trends.

Such explainability tools are critical in regulated sectors like finance, healthcare, and insurance.
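A partial dependence curve is straightforward to compute by hand: overwrite one feature with a grid value in every row and average the predictions. The sketch below uses a hypothetical `predict` function in place of a trained GBDT and a tiny made-up dataset.

```python
def partial_dependence(predict, X, feature, grid):
    """Average prediction when `feature` is forced to each grid value in turn."""
    curve = []
    for v in grid:
        preds = [predict(r[:feature] + [v] + r[feature + 1:]) for r in X]
        curve.append(sum(preds) / len(preds))
    return curve

def predict(row):
    # Stand-in model: quadratic in feature 0, linear in feature 1.
    return row[0] ** 2 + 0.5 * row[1]

X = [[0.0, 2.0], [1.0, 4.0], [2.0, 6.0]]
curve = partial_dependence(predict, X, feature=0, grid=[0.0, 1.0, 2.0])
print(curve)
```

The per-row values inside `preds` are what an ICE plot would draw; the partial dependence curve is their average.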

Hybrid and Neural-Integrated GBDT Models

Neural-Boosted Models

Recent research explores combining GBDT with neural networks:

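- DeepGBM: Pairs a neural component for sparse categorical features with a second neural component distilled from GBDT trees for dense numerical features, aiming to keep GBDT-like accuracy while supporting online updates.

- NGBoost (Natural Gradient Boosting): Applies gradient boosting with natural gradients to predict full probability distributions rather than point estimates. Its base learners are typically shallow decision trees, not neural networks.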

This hybrid architecture allows the strengths of both paradigms — GBDT’s structured data power and deep learning’s representation capacity — to coexist.

Embedding Features for GBDT

Categorical variables can be encoded as learned embeddings (via autoencoders or word2vec-style encodings).

LightGBM and CatBoost both handle categorical features natively. CatBoost uses ordered target statistics together with ordered boosting, which are designed to reduce target leakage, while LightGBM searches for splits directly over groups of categories.
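The leakage-avoiding idea behind ordered target statistics can be sketched as follows: each row is encoded using only the targets of rows that came before it. This simplified version treats the given row order as the random permutation and uses a fixed, hypothetical prior of 0.5; it is an illustration of the principle rather than CatBoost's exact procedure.

```python
def ordered_target_stats(categories, targets, prior, prior_weight=1.0):
    """Encode each row from the targets of earlier rows that share its category."""
    sums, counts = {}, {}
    encoded = []
    for c, t in zip(categories, targets):
        s = sums.get(c, 0.0)
        n = counts.get(c, 0)
        # The row's own target is not used for its own encoding.
        encoded.append((s + prior_weight * prior) / (n + prior_weight))
        sums[c] = s + t
        counts[c] = n + 1
    return encoded

cats = ["a", "b", "a", "a", "b"]
ys = [1, 0, 0, 1, 1]

enc = ordered_target_stats(cats, ys, prior=0.5)
print(enc)
```

Rows with the same category receive different encodings depending on their position, which is what keeps a row's own label out of its feature value.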

Privacy, Security & Federated Learning in GBDT

Differential Privacy in Boosting

Differentially private gradient boosting limits how much any single training sample can influence the trained model, which makes it harder to infer or reconstruct individual records from it.

Techniques include:

- Adding calibrated noise to gradient updates.

- Gradient clipping to bound sensitivity.

- Secure aggregation protocols.

Frameworks like DP-GBDT and privacy-preserving XGBoost variants aim for formal privacy guarantees. The accuracy cost depends on the privacy budget and tends to grow as the guarantee is tightened.
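The first two techniques, clipping and calibrated noise, can be combined in a short sketch. It assumes the Laplace mechanism applied to one gradient sum, with neighbouring datasets that differ by adding or removing a single row. A complete differentially private GBDT also has to account for the privacy budget spent across all splits, leaves, and trees, which is not shown here.

```python
import random

def clip(values, bound):
    """Bound each gradient to [-bound, bound] so one row has limited influence."""
    return [max(-bound, min(bound, v)) for v in values]

def laplace_noise(scale, rng):
    """Laplace(0, scale) sample: the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def noisy_gradient_sum(gradients, bound, epsilon, rng):
    clipped = clip(gradients, bound)
    sensitivity = bound    # adding or removing one row changes the sum by at most `bound`
    return sum(clipped) + laplace_noise(sensitivity / epsilon, rng)

gradients = [-3.0, 0.2, 5.0, -0.4]    # made-up per-sample gradients
exact = sum(clip(gradients, 1.0))

for epsilon in (0.1, 1.0, 10.0):
    noisy = noisy_gradient_sum(gradients, bound=1.0, epsilon=epsilon, rng=random.Random(0))
    print(epsilon, round(exact, 3), round(noisy, 3))
```

Smaller values of epsilon mean a stronger guarantee and a wider noise distribution, which is where the accuracy cost comes from.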

Federated Gradient Boosting

Federated GBDT (FGBDT) allows multiple organizations to collaboratively train models without sharing raw data.

For example, in a banking consortium, different banks can jointly train a credit risk model without exposing customer data.

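SecureBoost, which was proposed by researchers at WeBank and is implemented in the open-source FATE framework, uses homomorphic encryption to exchange gradient statistics between parties. Related approaches rely on secure multi-party computation (SMPC) to aggregate gradients without revealing individual contributions.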
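To give a feel for secure aggregation, the sketch below uses additive secret sharing, one of the simplest SMPC building blocks. This is a swapped-in technique chosen for brevity and is not the homomorphic-encryption protocol used by SecureBoost; the party values are hypothetical gradient sums already converted to integers.

```python
import random

PRIME = 2 ** 61 - 1    # all arithmetic is done modulo this prime

def make_shares(secret, n_parties, rng):
    """Split `secret` into n random-looking shares that sum to it modulo PRIME."""
    shares = [rng.randrange(PRIME) for _ in range(n_parties - 1)]
    last = (secret - sum(shares)) % PRIME
    return shares + [last]

def secure_sum(private_values, rng):
    n = len(private_values)
    # shares[i][j] is the share that party i sends to party j.
    shares = [make_shares(v % PRIME, n, rng) for v in private_values]
    # Each party adds up the shares it received and publishes only that partial sum.
    partial = [sum(shares[i][j] for i in range(n)) % PRIME for j in range(n)]
    total = sum(partial) % PRIME
    return total if total <= PRIME // 2 else total - PRIME    # map back to a signed value

bank_gradient_sums = [1250, -340, 87]    # hypothetical per-bank values
total = secure_sum(bank_gradient_sums, random.Random(0))
print(total)
```

No party ever sees another party's raw value, yet the published partial sums add up to the true total. A real deployment would use a cryptographically secure random source rather than `random.Random`.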

This direction is becoming crucial as AI governance, privacy laws (like GDPR), and multi-institutional collaboration grow.

Evaluation and Model Monitoring in Production

Model Drift and Recalibration

Once deployed, GBDT models must be monitored for concept drift — when data distributions change over time.

Techniques include:

- Periodic retraining

- Drift detection using KS tests or population stability index

- Adaptive learning rate scheduling based on new data streams
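The population stability index compares how a feature or score is distributed across bins at training time and in production. The sketch below assumes fixed bin edges chosen in advance and a small floor to avoid taking the logarithm of zero; the data is invented.

```python
import math

def psi(expected, actual, edges, eps=1e-6):
    """Population stability index between two samples over shared bins."""
    def fractions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            b = sum(1 for e in edges if v >= e)    # index of the bin containing v
            counts[b] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))

train_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
live_scores = [v + 0.3 for v in train_scores]     # simulated upward shift
edges = [0.25, 0.5, 0.75]

psi_same = psi(train_scores, train_scores, edges)
psi_shifted = psi(train_scores, live_scores, edges)
print(psi_same, round(psi_shifted, 3))
```

Identical distributions give a value of zero, and the index grows as the production distribution moves away from the training one.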

Model Compression and Deployment

For edge or real-time systems, large GBDT models can be compressed via:

- Tree pruning

- Quantization of leaf weights

- Knowledge distillation into smaller models

Tools like Treelite, or ONNX exporters such as onnxmltools, convert GBDT models into portable formats for deployment in C++, Java, or embedded environments with low latency.
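Quantization of leaf weights can be sketched independently of any deployment library. The example assumes symmetric linear quantization to 8-bit integers with a single shared scale; the leaf values are made up.

```python
def quantize_leaves(leaf_values, n_bits=8):
    """Store leaf outputs as small integers plus one floating-point scale."""
    max_int = 2 ** (n_bits - 1) - 1
    max_abs = max(abs(v) for v in leaf_values)
    scale = max_abs / max_int if max_abs > 0 else 1.0
    quantized = [int(round(v / scale)) for v in leaf_values]
    return quantized, scale

def dequantize(quantized, scale):
    return [q * scale for q in quantized]

leaves = [0.42, -0.13, 0.07, -0.51, 0.0]
q, scale = quantize_leaves(leaves)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(leaves, restored))
print(q, round(max_err, 5))
```

Each leaf now needs one byte instead of a full float, and the rounding error per leaf is at most half the scale. Whether that error is acceptable depends on how many trees are summed and on the task.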

Future Trends in Gradient Boosting Decision Trees

- GBDT + LLM Integration: Using language model embeddings as features in GBDT to blend textual and tabular intelligence.

- Explainable AutoML Pipelines: AutoML frameworks (e.g., H2O.ai, AutoGluon) that auto-tune GBDT while maintaining transparency.

- Quantum and GPU-accelerated Boosting: Research explores using quantum annealing and advanced GPUs to accelerate large-scale gradient boosting.

- Fairness-aware Boosting: Ensuring GBDT models meet fairness metrics (equalized odds, demographic parity) through constrained optimization.

- Streaming Boosting: Online GBDT variants that incrementally update trees as new data streams in, enabling real-time adaptability.

Tips for Training & Avoiding Overfitting

- Use early stopping (monitor validation performance)

- Start with a small learning rate and more trees

- Use subsample and colsample to add randomness

- Use regularization (L1, L2) if supported

- Limit tree depth and increase min_samples_leaf

- Use cross-validation for robust evaluation

- Monitor training vs validation losses for divergence

Summary & Best Practices

- Gradient Boosting Decision Tree is a powerful ensemble method combining multiple weak trees in a gradient descent framework.

- Choose loss function, learning rate, number of trees, and tree complexity carefully.

- Use modern implementations like XGBoost, LightGBM, and CatBoost for performance and flexibility.

- Always guard against overfitting using early stopping, subsampling, and validation.

- Leverage interpretability tools to explain models in high-stakes settings.

- Explore privacy-preserving and federated variants when data security is critical.

GBDT remains a cornerstone algorithm in the machine learning toolkit, especially for structured data tasks, due to its balance of flexibility, accuracy, and extensibility.

FAQs

What are gradient boosting decision trees?

Gradient Boosting Decision Trees (GBDT) are an ensemble machine learning technique that builds multiple decision trees sequentially, where each tree corrects the errors of the previous one to improve overall model accuracy and performance.

How many trees for gradient boosting?

Typical starting points range from 100 to 500 trees, but the right number depends on the dataset and the learning rate. A smaller learning rate usually needs more trees, and choosing the count with early stopping on a validation set helps avoid overfitting.

What is GBM used for?

GBM (Gradient Boosting Machine) is used for predictive modeling tasks such as classification, regression, and ranking, where it combines multiple weak learners (usually decision trees) to build a highly accurate and robust predictive model.

What is an XGBoost decision tree?

XGBoost is an optimized implementation of gradient boosting. An XGBoost decision tree is one of the regression trees it builds sequentially, each fitted to correct the errors of the trees before it. The library adds regularization, second-order optimization, and efficient handling of large and sparse datasets.

Is random forest bagging or boosting?

Random Forest is a bagging (Bootstrap Aggregating) algorithm. It builds many independent decision trees on bootstrap samples of the data, considers a random subset of features at each split, and averages their predictions (or takes a majority vote) to reduce variance and overfitting.