Running Python code in the cloud without worrying about local setup is a game-changer. With the rise of collaborative and remote data science, Google Colab, often referred to as Google Notebook, has emerged as a top tool for coders, data scientists, and researchers.

Google Colab offers a powerful browser-based notebook interface, making it easy to write and execute Python code from any device, anywhere. Backed by Google’s cloud infrastructure and integrated with Google Drive, it empowers you to develop and share notebooks seamlessly.

What Is Google Colab?

Google Colab (short for Colaboratory) is a free Jupyter notebook environment that runs in the cloud and requires no setup. It supports Python and offers access to GPUs and TPUs, making it a great platform for AI, data analysis, and education.

Unlike traditional Jupyter Notebooks, which require local setup via Anaconda or pip, Google Notebook is ready to use directly in the browser and comes with major Python libraries such as NumPy, Pandas, TensorFlow, Keras, and OpenCV pre-installed.

Why Use Google Notebook for Your Projects

Using Google Colab over local environments offers several advantages:

- No installation or configuration required

- Free access to NVIDIA GPUs and TPUs

- Easily shareable via links or GitHub

- Compatible with Google Drive

- Ideal for data science, machine learning, deep learning, and education

- Supports real-time collaboration, similar to Google Docs

Key Features of Google Colab

Here are some standout features of Google Notebook:

- Free GPU and TPU Support

- Notebook Sharing & Comments

- Rich Text + Code Integration

- Real-Time Collaboration

- Direct Import from Google Drive/GitHub

- Support for Interactive Visualizations (Plotly, Matplotlib)

- Markdown, LaTeX, HTML Rendering

- Auto-save to Drive

- Pre-installed Python Libraries

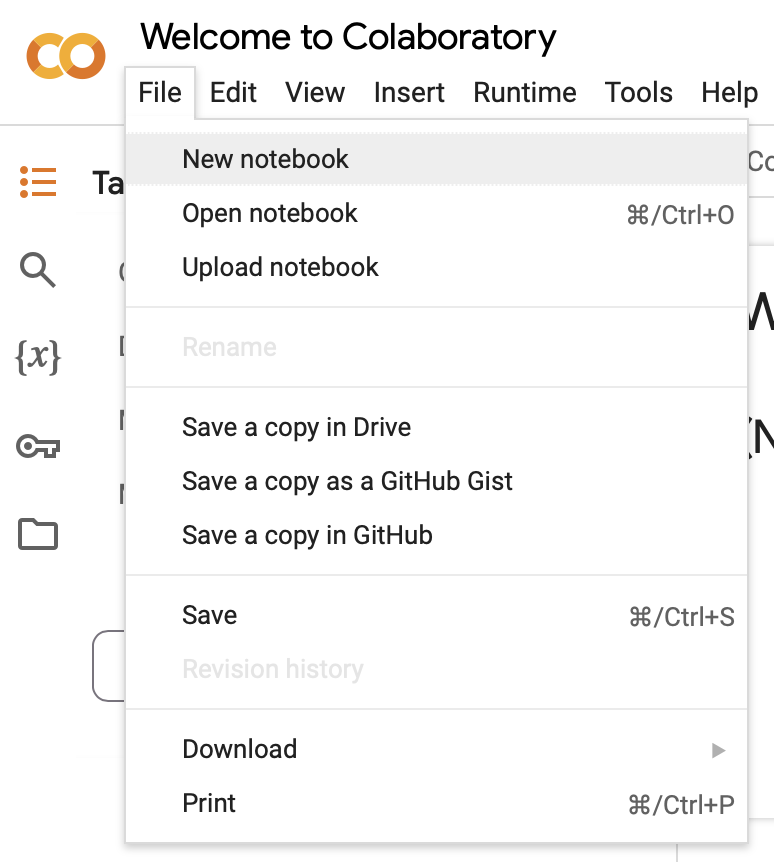

Getting Started with Google Colab

Step 1: Open Google Colab

Go to https://colab.research.google.com

Step 2: Sign in with your Google Account

You’ll need a Google account to access the service.

Step 3: Create or Upload a Notebook

You can start a new notebook or upload one from your system or Google Drive.

Understanding the Google Colab Interface

The Google Colab UI resembles a standard Jupyter Notebook with added cloud features.

- Code Cells: Run Python code

- Text Cells: Add documentation using Markdown or HTML

- Toolbar: Save, Upload, Insert Cells, Runtime Settings

- Runtime Menu: Select hardware (GPU/TPU/CPU)

How Google Colab Compares with Jupyter Notebook

| Feature | Google Colab | Jupyter Notebook |
| --- | --- | --- |
| Setup Required | No | Yes |
| Free GPU/TPU Access | Yes | No |
| Cloud Storage | Google Drive | Local File System |
| Collaboration | Real-Time Sharing | Manual Sharing |
| Cost | Free (with limits) | Free (self-hosted) |
| External Hosting | Yes (GitHub, Drive) | Yes (Manual Upload) |

Colab is best for quick experimentation and sharing, while Jupyter excels in offline or large data workflows.

Installing and Importing Python Libraries in Google Colab

Colab comes with most essential libraries, but if you need something custom:

!pip install seaborn

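You can use ! to run shell commands, for example to list the installed packages and their versions (this works regardless of which Python version the runtime ships with):

!pip list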

Or import standard libraries like:

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt
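To avoid reinstalling a package every time a notebook restarts, it can help to check whether a module is importable before calling pip. The following is a minimal sketch using only the standard library; the helper names `is_installed` and `ensure_installed` are illustrative and not part of Colab.

```python
import importlib.util
import subprocess
import sys
from typing import Optional


def is_installed(module_name: str) -> bool:
    """Return True if a top-level module can be imported in this runtime."""
    return importlib.util.find_spec(module_name) is not None


def ensure_installed(module_name: str, pip_name: Optional[str] = None) -> bool:
    """Install a package only when its module is missing.

    Returns True if pip was run, False if the module was already available.
    pip_name covers packages whose install name differs from the import name.
    """
    if is_installed(module_name):
        return False
    subprocess.check_call(
        [sys.executable, "-m", "pip", "install", "-q", pip_name or module_name]
    )
    return True


# The standard-library json module is always present, so nothing is installed here.
print(is_installed("json"))
print(ensure_installed("json"))
```

In a notebook, a call such as `ensure_installed("seaborn")` would run pip only on a fresh runtime where the package is absent.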

Real-Time Examples Using Google Notebook

Example 1: Data Cleaning with Pandas

import pandas as pd

df = pd.read_csv('https://raw.githubusercontent.com/datasciencedojo/datasets/master/titanic.csv')

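df = df.dropna(subset=['Age'])  # drop only rows missing Age; a bare dropna() would also discard the many rows with no Cabin value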

df['Age'] = df['Age'].astype(int)

df.head()

Example 2: Visualization with Seaborn

import seaborn as sns

sns.histplot(data=df, x='Age', hue='Survived')

These examples demonstrate how Colab supports data handling, cleaning, and visualization — all within your browser.
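When cleaning data, it is worth knowing how much a blanket `dropna()` removes compared with restricting it to the columns you need. The sketch below uses a tiny made-up frame (the values are illustrative, not taken from the Titanic file) to show the difference.

```python
import pandas as pd

# Tiny made-up frame standing in for a real dataset (values are illustrative).
toy = pd.DataFrame({
    "Age": [22.0, None, 35.0, 54.0],
    "Fare": [7.25, 8.05, 53.10, 51.86],
    "Cabin": [None, None, "C123", None],
})

# Drops a row if ANY column has a missing value.
all_columns = toy.dropna()

# Drops a row only if Age is missing; rows without a Cabin value are kept.
age_only = toy.dropna(subset=["Age"])

# With no missing ages left, the column can be converted to integers safely.
ages = age_only["Age"].astype(int)

print(len(toy), len(all_columns), len(age_only))
```

Restricting the check to the columns a model actually uses usually preserves far more rows.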

Using Google Colab for Machine Learning

With libraries like Scikit-learn and TensorFlow pre-installed, you can build and train models right away.

Example: Logistic Regression with Scikit-learn

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import train_test_split

X = df[['Age', 'Fare']]

y = df['Survived']

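X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)  # fixed seed for a reproducible split

model = LogisticRegression()

model.fit(X_train, y_train)

print(model.score(X_test, y_test))  # accuracy on the held-out 20%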

GPU/TPU Acceleration

To enable GPU/TPU:

- Click Runtime > Change Runtime Type > Hardware Accelerator

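Once a GPU is selected, frameworks such as TensorFlow generally pick it up automatically, while PyTorch code must move models and tensors to the device explicitly (for example with .to('cuda')). TPUs need additional framework-specific setup.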
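Before starting a long training run, it can be useful to confirm which hardware the runtime actually exposes. The sketch below is one possible check, assuming PyTorch or TensorFlow may or may not be installed; the function name `detect_accelerator` is illustrative.

```python
import importlib.util


def detect_accelerator() -> str:
    """Return 'gpu' if an installed framework can see a GPU, otherwise 'cpu'."""
    # Import each framework only if it is installed, so the check runs anywhere.
    if importlib.util.find_spec("torch") is not None:
        import torch
        if torch.cuda.is_available():
            return "gpu"
    if importlib.util.find_spec("tensorflow") is not None:
        import tensorflow as tf
        # list_physical_devices returns an empty list when no GPU is visible.
        if tf.config.list_physical_devices("GPU"):
            return "gpu"
    return "cpu"


device = detect_accelerator()
print(f"Accelerator visible to this runtime: {device}")
```

If this prints `cpu` after a GPU runtime was selected, the runtime type may not have been applied, or no GPU was available for the session.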

Integration with Google Drive and GitHub

Mounting Google Drive

from google.colab import drive

drive.mount('/content/drive')

This allows seamless access to files stored in Google Drive.
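A notebook that is sometimes run outside Colab can guard the Drive mount so the same code works in both places. The following is a sketch under that assumption; `get_data_dir` and the local `data` folder are hypothetical names, and `/content/drive/MyDrive` is the usual location of "My Drive" after mounting.

```python
import sys
from pathlib import Path


def get_data_dir(local_fallback: str = "data") -> Path:
    """Return a dataset folder: Google Drive inside Colab, a local folder elsewhere."""
    if "google.colab" in sys.modules:
        # Only importable inside a Colab runtime; mounting asks for authorization.
        from google.colab import drive
        drive.mount("/content/drive")
        return Path("/content/drive/MyDrive")
    # Outside Colab, fall back to a local folder and create it if needed.
    path = Path(local_fallback)
    path.mkdir(parents=True, exist_ok=True)
    return path


data_dir = get_data_dir()
print(data_dir)
```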

Loading Notebooks from GitHub

Go to File > Open Notebook > GitHub tab and paste a repository URL.

Optimizing Performance with GPUs and TPUs

While most users know how to enable GPU/TPU from the Runtime > Change Runtime Type menu, few realize the importance of optimizing resource usage:

- Use torch.cuda.is_available() or tf.config.list_physical_devices('GPU') to confirm hardware access (the older tf.test.is_gpu_available() is deprecated).

- Batch size tuning: Larger batch sizes can improve training speeds on GPUs but may exhaust memory; experimenting with the right size is critical.

- Mixed Precision Training: Use tf.keras.mixed_precision or PyTorch’s autocast for faster training with reduced memory overhead.

- TPU vs. GPU: TPUs are excellent for large-scale deep learning models, while GPUs offer more flexibility for traditional ML tasks.
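Batch size tuning from the list above can be automated with a simple back-off loop: try a large batch and halve it whenever memory runs out. The sketch below is framework-agnostic and uses Python's built-in `MemoryError` as a stand-in; a real PyTorch or TensorFlow step raises its own out-of-memory exception, which you would catch instead. `largest_fitting_batch` and `fake_training_step` are hypothetical names.

```python
from typing import Callable


def largest_fitting_batch(
    try_batch: Callable[[int], None], start: int = 512, floor: int = 1
) -> int:
    """Halve the batch size until try_batch(size) stops raising MemoryError."""
    size = start
    while size >= floor:
        try:
            try_batch(size)
            return size
        except MemoryError:
            size //= 2
    raise RuntimeError("even the smallest batch size did not fit in memory")


def fake_training_step(batch_size: int) -> None:
    """Stand-in for one training step: pretend batches above 96 exhaust memory."""
    if batch_size > 96:
        raise MemoryError(f"batch of {batch_size} does not fit")


# Tries 512, 256, 128 (all fail) and then 64, which fits.
print(largest_fitting_batch(fake_training_step))
```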

Using Colab Pro and Colab Pro+

Free Colab sessions are capped at roughly 12 hours and run on shared GPUs whose availability is not guaranteed. Colab Pro and Pro+ offer:

- Priority access to faster GPUs such as V100 or A100, depending on availability.

- Longer runtimes (up to 24 hours).

- More memory for handling larger datasets.

For professionals working with deep learning, large models, or frequent experiments, Colab Pro can save significant time.

Advanced Data Handling

Colab isn’t just about mounting Google Drive. You can:

- Stream data directly from APIs using requests or gdown for Google Drive file downloads.

- Use BigQuery integration by authenticating first:

from google.colab import auth

auth.authenticate_user()

This allows direct queries on massive datasets without downloading them locally.

- Connect with Google Cloud Storage (GCS) buckets for scalable workflows.
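For large files, streaming the download to disk in chunks avoids holding the whole response in memory. The sketch below uses the standard library's `urllib` rather than `requests` so it has no extra dependencies; `stream_to_file` is an illustrative name and the URL in the comment is a placeholder.

```python
import shutil
import urllib.request
from pathlib import Path


def stream_to_file(url: str, destination: Path, chunk_size: int = 1 << 20) -> int:
    """Copy a response body to disk in chunks and return the bytes written."""
    with urllib.request.urlopen(url) as response, open(destination, "wb") as out:
        # copyfileobj reads and writes chunk_size bytes at a time.
        shutil.copyfileobj(response, out, length=chunk_size)
    return destination.stat().st_size


# Example call (placeholder URL, not executed here):
# stream_to_file("https://example.com/dataset.csv", Path("dataset.csv"))
```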

Interactive Widgets and Dashboards

Colab supports ipywidgets and Plotly dashboards, enabling interactive experiments:

- Sliders, dropdowns, and toggles to adjust hyperparameters on the fly.

- Real-time model training visualization with tqdm or TensorBoard (%load_ext tensorboard).

- Build lightweight dashboards directly in Colab for demos and presentations.

Version Control and GitHub Integration

Colab integrates smoothly with GitHub, making collaboration easier:

- Save notebooks directly to repositories.

- Open .ipynb files via https://colab.research.google.com/github/.

- Use !git clone inside Colab to bring entire repositories for experimentation.

- Pair this with DVC (Data Version Control) for reproducible ML pipelines.
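The Colab GitHub URL mentioned above follows a simple pattern: the path of the notebook on github.com is appended to the Colab prefix. The sketch below builds such a link; `colab_url_from_github` is an illustrative helper and the repository in the example is a placeholder.

```python
from urllib.parse import urlparse

COLAB_GITHUB_PREFIX = "https://colab.research.google.com/github"


def colab_url_from_github(github_url: str) -> str:
    """Turn a github.com notebook URL into a link that opens it in Colab."""
    parsed = urlparse(github_url)
    if parsed.netloc != "github.com" or not parsed.path.endswith(".ipynb"):
        raise ValueError("expected a github.com URL ending in .ipynb")
    # parsed.path already starts with '/', e.g. /user/repo/blob/main/demo.ipynb
    return f"{COLAB_GITHUB_PREFIX}{parsed.path}"


print(colab_url_from_github(
    "https://github.com/example-user/example-repo/blob/main/demo.ipynb"
))
```

Links built this way are convenient for an "Open in Colab" entry in a repository README.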

Secrets Management and APIs

Advanced workflows often need secure access to APIs and databases.

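- Use Colab’s Secrets panel (read in code through google.colab.userdata) or environment variables (os.environ) instead of hardcoding credentials.

- Keep long-lived keys in a dedicated store such as GCP Secret Manager rather than in plain files on Google Drive, where anyone with access to the folder can read them.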

- Automate workflows like training models, saving them to Drive, and deploying them with Flask or FastAPI on cloud servers.
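One way to keep credentials out of notebook cells is a small lookup helper that reads from Colab's secrets when available and from environment variables otherwise. This is a sketch, not an official pattern: `get_secret` and `DEMO_API_KEY` are hypothetical names, and the broad `except` is a simplification because the exact exceptions raised by `userdata.get` are not assumed here.

```python
import os
import sys


def get_secret(name: str) -> str:
    """Look up a credential without hardcoding it in the notebook."""
    if "google.colab" in sys.modules:
        # Inside Colab, try the Secrets panel first.
        from google.colab import userdata
        try:
            return userdata.get(name)
        except Exception:
            pass  # secret missing or access not granted; fall back to the environment
    value = os.environ.get(name)
    if value is None:
        raise KeyError(f"secret {name!r} is not configured")
    return value


# Demo only: a placeholder value so the example runs; real keys are set outside the notebook.
os.environ.setdefault("DEMO_API_KEY", "placeholder-value")
api_key = get_secret("DEMO_API_KEY")
print("key loaded:", bool(api_key))
```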

Scaling Beyond Colab

For projects too large for Colab’s limits:

- Transition from Colab notebooks to Vertex AI Notebooks (Google Cloud) for enterprise workflows.

- Use Colab for prototyping and scale up on GCP, AWS, or Azure when moving to production.

- Export trained models (.h5 or .pkl) directly to cloud buckets or ML pipelines.

Custom Environments with Conda and Docker-Like Isolation

By default, Colab uses a pre-built Python environment, but advanced users often need custom dependencies. You can:

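- Install Conda inside Colab:

!pip install -q condacolab

import condacolab

condacolab.install()

- This makes the conda command available in the notebook, similar to a local Anaconda setup. The kernel restarts once the installation finishes, so run these lines in the first cell.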

- Emulate container workflows: build “Docker-like” reproducible environments by saving requirements.txt or environment.yml files in Drive/GitHub.

This allows reproducibility across teams and avoids dependency conflicts.
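A requirements file is only reproducible if versions are pinned. The sketch below records the versions installed in the current runtime for a chosen list of packages, using the standard library's `importlib.metadata`; `pin_requirements` is an illustrative name, and running `pip freeze` is a common alternative that captures every installed package.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Iterable, List, Tuple


def pin_requirements(packages: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Return (pinned requirement lines, names that are not installed)."""
    pinned, missing = [], []
    for name in packages:
        try:
            pinned.append(f"{name}=={version(name)}")
        except PackageNotFoundError:
            missing.append(name)
    return pinned, missing


# The last name is deliberately fake to show how missing packages are reported.
pinned, missing = pin_requirements(["numpy", "pandas", "not-a-real-package-xyz"])
print("\n".join(pinned))
print("not installed:", missing)
```

Writing the pinned lines to a requirements.txt file in Drive or GitHub lets teammates recreate the same versions with pip.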

Parallelism and Distributed Training

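Colab can also be used to experiment with parallel training code, although a standard runtime typically exposes a single GPU, so multi-GPU wrappers mostly serve as a rehearsal for larger machines:

- Data Parallelism with PyTorch:

import torch.nn as nn

model = nn.Linear(10, 2)  # placeholder; substitute your own nn.Module

model = nn.DataParallel(model)  # splits each input batch across the visible GPUs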

- Horovod or TensorFlow’s tf.distribute.MirroredStrategy can be used to experiment with distributed training, even inside Colab.

- TPUs: While trickier than GPUs, TPUs can dramatically accelerate large model training (e.g., BERT, GPT-like models).

Extending Runtime with Smart Workarounds

The 12-hour runtime cap is one of Colab’s main limitations. Some workarounds include:

- Checkpoints: Save model weights periodically to Drive (model.save() or torch.save()).

- Session Keep-Alive: Background JavaScript snippets are sometimes used to prevent idle disconnections, but they may conflict with Colab’s usage policies, so check the terms of service before relying on them.

- Colab + External VM: Trigger background tasks on GCP or AWS while monitoring logs in Colab.

This hybrid setup gives Colab a “control panel” role while heavy lifting happens in the cloud.
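The checkpointing idea above can be sketched without any ML framework: save the training state periodically, and on restart resume from the last saved step. In the example below the "training step" is a stand-in, the function names are illustrative, and a temporary folder takes the place of a Drive path.

```python
import json
import os
import tempfile
from pathlib import Path


def save_checkpoint(path: Path, state: dict) -> None:
    """Write to a temp file first, then rename, so a crash never leaves a partial file."""
    tmp = path.with_suffix(".tmp")
    tmp.write_text(json.dumps(state))
    os.replace(tmp, path)


def load_checkpoint(path: Path) -> dict:
    """Return the saved state, or a fresh state if no checkpoint exists yet."""
    if path.exists():
        return json.loads(path.read_text())
    return {"step": 0, "loss": None}


def train(path: Path, total_steps: int, save_every: int = 2) -> dict:
    """Resume from the last checkpoint and run up to total_steps."""
    state = load_checkpoint(path)
    for step in range(state["step"] + 1, total_steps + 1):
        state = {"step": step, "loss": 1.0 / step}  # stand-in for a real training step
        if step % save_every == 0 or step == total_steps:
            save_checkpoint(path, state)
    return state


# In Colab this path would point into the mounted Drive folder instead.
checkpoint_path = Path(tempfile.mkdtemp()) / "checkpoint.json"
first_run = train(checkpoint_path, total_steps=4)    # session ends after step 4
second_run = train(checkpoint_path, total_steps=10)  # a new session resumes at step 5
print(first_run["step"], second_run["step"])
```

With a real model, the JSON file would be replaced by the framework's own save call, such as model.save() or torch.save().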

Advanced Visualization and Reporting

Colab can double as a data storytelling platform:

- Use Plotly, Altair, or Bokeh for interactive plots directly inside the notebook.

- Generate Dash apps or Streamlit prototypes in Colab and share them instantly with ngrok tunnels.

- Export polished reports as PDF/HTML directly via nbconvert.

This makes Colab useful for both experimentation and executive-ready reporting.

Integrating with MLOps Tools

Colab fits neatly into the MLOps ecosystem:

- Weights & Biases (W&B) or MLflow for experiment tracking.

- DVC (Data Version Control) for dataset management.

- CI/CD Pipelines: Automate notebook execution via GitHub Actions or GitLab CI to keep experiments reproducible.

With these, Colab notebooks evolve into collaborative research pipelines.

Hybrid Cloud + Colab Workflows

Advanced users often blend Colab with enterprise cloud platforms:

- Train in Colab with free GPUs, then export models to Vertex AI (Google Cloud), SageMaker (AWS), or Azure ML for deployment.

- Use APIs to connect Colab-trained models with production systems. Example: Serve a TensorFlow model via Flask and call it from Colab.

- Automate data ingestion from Google Cloud Storage (GCS), BigQuery, or AWS S3 into Colab for preprocessing.

This bridges experimentation and production without losing agility.

Security and Compliance for Enterprises

For corporate or sensitive data, Colab needs careful handling:

- IAM Policies: Restrict access to Drive files when mounting inside Colab.

- OAuth2 with Service Accounts: Authenticate securely with APIs (instead of storing raw keys).

- Data Masking & Encryption: Always anonymize datasets before uploading to Drive.

These practices support, but do not by themselves guarantee, compliance with data privacy rules like GDPR and HIPAA.

Scaling Beyond Colab’s Limits

While Colab is excellent for prototyping, researchers often need scale:

- For big-data projects, combine Colab with Apache Spark via Dataproc or connect with Snowflake/BigQuery for massive data processing.

- Move from Colab → Colab Pro → Vertex AI Notebooks for seamless scaling.

- Export notebooks to .py scripts or Docker containers for long-term training.

Limitations of Google Colab

While powerful, Colab has its constraints:

- 12-hour max runtime (can disconnect unexpectedly)

- Limited GPU availability (usage quota)

- No internet access for third-party APIs (in some modes)

- Cannot run without internet

- Idle timeout: the runtime disconnects after a period of inactivity

For continuous training or large datasets, paid platforms or Colab Pro may be more appropriate.

Best Practices for Google Notebook Users

- Regularly download and back up notebooks

- Use smaller batch sizes to stay within GPU memory limits

- Split code across multiple cells for clarity

- Comment your code and use Markdown for documentation

- Save checkpoints in Drive after major changes

- Avoid hardcoding sensitive credentials

Security and Privacy in Google Colab

While Google Colab uses secure cloud infrastructure, follow these tips:

- Don’t share notebooks with sensitive data

- Avoid running untrusted code from GitHub

- Use OAuth2 or service accounts for authenticated APIs

- Disconnect Drive after usage

- Turn off sharing links when not in use

Conclusion

Google Colab, or Google Notebook, is more than just a cloud-based Python IDE. It is a gateway to powerful, scalable, and collaborative computing. Whether you’re a student running your first Python script or a machine learning engineer training deep neural networks, Google Notebook delivers the flexibility and speed you need — all for free.

By understanding how to install libraries, handle data, run ML models, and collaborate in real time, you can unlock the full potential of Python programming without ever leaving your browser.

If you’re looking for a free, GPU-backed, shareable Python environment, Google Colab is your go-to platform. Bookmark this guide, share it with your network, and start experimenting.

FAQs

Can I use Google Colab for machine learning?

Yes, you can use Google Colab for machine learning, as it provides a cloud-based Python environment with free GPU/TPU support, pre-installed libraries, and seamless integration with popular ML frameworks like TensorFlow and PyTorch.

Is Google Colab totally free?

Google Colab is mostly free, offering access to GPUs and TPUs, but it has usage limits and restrictions. For higher performance, longer runtimes, and more resources, users can opt for Colab Pro or Pro+ subscriptions.

Is Google Colab an AI tool?

Yes, Google Colab can be considered an AI tool, as it provides a cloud-based Python environment for developing, training, and running AI and machine learning models using frameworks like TensorFlow and PyTorch.

Can Colab run Python code?

Yes, Google Colab can run Python code directly in the browser, allowing users to write, execute, and share Python scripts, notebooks, and machine learning experiments without any local setup.

Is Google Colab good for beginners?

Yes, Google Colab is excellent for beginners because it requires no installation, provides free access to GPUs/TPUs, supports Python and popular ML libraries, and offers an interactive notebook environment that makes learning coding and machine learning easier.