Machine learning models rely heavily on the quality and diversity of training data. In real-world scenarios, collecting massive datasets is expensive, time-consuming, and sometimes impossible. This limitation often leads to models that perform well on training data but fail when exposed to unseen inputs.

To address this challenge, researchers and practitioners adopt techniques that enhance dataset diversity without collecting new data. One such approach has become fundamental to modern artificial intelligence workflows.

Understanding the Concept of Data Augmentation

Data augmentation refers to the process of artificially expanding a dataset by applying transformations to existing data while preserving its original meaning.

Instead of gathering new samples, this approach modifies existing ones to create realistic variations. These variations help machine learning models learn invariant features and improve their ability to generalize.

The core idea behind data augmentation is simple:

- Increase dataset diversity

- Reduce overfitting

- Improve model robustness

Why Data Augmentation Matters in Modern AI Systems

In many domains, data scarcity is a critical problem. Medical imaging, autonomous driving, and natural language processing all suffer from limited labeled datasets.

Key benefits include:

- Improved generalization on unseen data

- Reduced model bias

- Better performance on edge cases

- Lower dependency on large labeled datasets

In deep learning, where models contain millions of parameters, this technique is often essential rather than optional.

Types of Data Augmentation Techniques

Different data types require different augmentation strategies. There is no universal method that works for all datasets.

Broad categories include:

- Image augmentation

- Text augmentation

- Audio augmentation

- Time-series augmentation

- Synthetic data generation

Each category has domain-specific rules to ensure that the transformed data remains meaningful.

Image-Based Data Augmentation Explained

Image datasets are among the most common use cases for data augmentation. Small transformations can significantly improve model performance.

Common image transformations:

- Rotation and flipping

- Cropping and scaling

- Brightness and contrast adjustment

- Noise injection

- Color space transformations

These operations help models become invariant to orientation, lighting, and scale changes.
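The following Python sketch implements three of these transformations: horizontal flipping, brightness adjustment, and noise injection. It assumes a grayscale image stored as a list of rows of integer pixel values from 0 to 255. The function names are illustrative. Real pipelines would usually rely on NumPy arrays and a library such as torchvision or Albumentations.

```python
import random

def horizontal_flip(image):
    """Mirror each row left-to-right."""
    return [list(reversed(row)) for row in image]

def adjust_brightness(image, delta):
    """Add a constant offset to every pixel, clipping to the valid 0-255 range."""
    return [[min(255, max(0, p + delta)) for p in row] for row in image]

def add_gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise, then round and clip back to 0-255 integers."""
    return [[min(255, max(0, round(p + rng.gauss(0, sigma)))) for p in row] for row in image]

def augment_image(image, rng):
    """Randomly flip, then apply a random brightness shift and light noise."""
    if rng.random() < 0.5:
        image = horizontal_flip(image)
    image = adjust_brightness(image, rng.randint(-30, 30))
    return add_gaussian_noise(image, sigma=5.0, rng=rng)

rng = random.Random(0)
img = [[10, 20, 30], [40, 50, 60]]
augmented = augment_image(img, rng)
```

None of these operations changes what the image depicts, so the original label can be reused unchanged. Passing a seeded random.Random instance keeps the augmentation reproducible.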

Text Data Augmentation Methods

Text data presents unique challenges because language structure and meaning must be preserved.

Popular techniques include:

- Synonym replacement

- Random insertion or deletion

- Sentence paraphrasing

- Back translation using multilingual models

For example, sentiment analysis models benefit greatly when trained on linguistically diverse text variations.
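Here is a minimal sketch of synonym replacement and random deletion on a tokenized sentence. The SYNONYMS table is a hypothetical toy; a real system might draw synonyms from WordNet or a library such as nlpaug. For sentiment data, the table maps each word only to alternatives with the same polarity. Swapping "good" for "bad" would silently corrupt the label.

```python
import random

# Hypothetical toy synonym table; each entry keeps the sentiment polarity of the original word.
SYNONYMS = {
    "good": ["great", "fine"],
    "movie": ["film"],
    "bad": ["poor", "awful"],
}

def synonym_replacement(tokens, n, rng):
    """Replace up to n tokens that have an entry in SYNONYMS."""
    tokens = list(tokens)
    candidates = [i for i, t in enumerate(tokens) if t in SYNONYMS]
    rng.shuffle(candidates)
    for i in candidates[:n]:
        tokens[i] = rng.choice(SYNONYMS[tokens[i]])
    return tokens

def random_deletion(tokens, p, rng):
    """Drop each token with probability p, but never return an empty sentence."""
    kept = [t for t in tokens if rng.random() >= p]
    return kept if kept else [rng.choice(tokens)]

rng = random.Random(42)
sentence = "this movie was good".split()
replaced = synonym_replacement(sentence, n=1, rng=rng)
shortened = random_deletion(sentence, p=0.2, rng=rng)
```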

Audio and Time-Series Data Augmentation

Audio data is widely used in speech recognition, music analysis, and healthcare monitoring systems.

Common techniques:

- Time stretching

- Pitch shifting

- Adding background noise

- Temporal shifting

Time-series data augmentation is used in finance, IoT, and sensor-based systems to improve prediction accuracy under varying conditions.
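The sketch below shows two of these techniques on a raw waveform stored as a list of floats. The first mixes in background noise at a chosen signal-to-noise ratio (SNR, where SNR in decibels is 10·log10 of signal power over noise power). The second applies temporal shifting with zero padding. It assumes the noise clip has the same length as the signal. The synthetic tone and Gaussian "background" stand in for real recordings.

```python
import math
import random

def add_noise_at_snr(signal, noise, snr_db):
    """Mix `noise` into `signal` so the result has the requested SNR in decibels.

    Assumes both lists have the same length and the noise is not all zeros.
    """
    signal_power = sum(x * x for x in signal) / len(signal)
    noise_power = sum(n * n for n in noise) / len(noise)
    # Choose scale so that signal_power / (scale**2 * noise_power) == 10 ** (snr_db / 10).
    scale = math.sqrt(signal_power / (noise_power * 10 ** (snr_db / 10)))
    return [x + scale * n for x, n in zip(signal, noise)]

def time_shift(signal, shift):
    """Shift right by `shift` samples (left if negative), zero-padding the vacated end."""
    n = len(signal)
    shift = max(-n, min(n, shift))
    if shift >= 0:
        return [0.0] * shift + signal[:n - shift]
    return signal[-shift:] + [0.0] * (-shift)

rng = random.Random(0)
clean = [math.sin(2 * math.pi * 5 * t / 100) for t in range(100)]  # toy 5-cycle tone
background = [rng.gauss(0, 1) for _ in clean]  # stand-in for recorded background noise
noisy = add_noise_at_snr(clean, background, snr_db=10)
shifted = time_shift(noisy, 7)
```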

Data Augmentation in Deep Learning Pipelines

In deep learning workflows, data augmentation is often applied dynamically during training rather than as a one-time preprocessing step.

Advantages of real-time augmentation:

- Reduced storage requirements

- A fresh random variation of each sample in every epoch

- No need to regenerate a stored dataset when augmentation settings change

Modern frameworks integrate augmentation directly into data-loading and training pipelines, so the same transformations are applied consistently in every training run.
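Here is a minimal sketch of real-time augmentation. It assumes samples are small lists of floats and uses an illustrative jitter function as the transformation. The generator augments each sample only when its batch is requested, so nothing extra is stored and every epoch sees different variations. Frameworks offer the same pattern through their data-loading APIs, for example a transform applied inside a PyTorch Dataset.

```python
import random

def jitter(sample, rng, sigma=0.1):
    """Toy augmentation: add small Gaussian noise to each feature."""
    return [x + rng.gauss(0, sigma) for x in sample]

def augmented_batches(samples, labels, batch_size, augment, rng):
    """Yield (batch_x, batch_y) for one epoch, augmenting each sample on the fly.

    Nothing augmented is stored; calling this again next epoch produces new variations.
    """
    order = list(range(len(samples)))
    rng.shuffle(order)
    for start in range(0, len(order), batch_size):
        idx = order[start:start + batch_size]
        yield [augment(samples[i], rng) for i in idx], [labels[i] for i in idx]

rng = random.Random(0)
X = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
y = [0, 1, 0]
for epoch in range(2):
    for batch_x, batch_y in augmented_batches(X, y, batch_size=2, augment=jitter, rng=rng):
        pass  # a training step would go here
```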

Data Augmentation Strategies for Complex Datasets

As machine learning applications expand into more complex environments, traditional augmentation techniques are sometimes insufficient. Advanced strategies focus on context-aware transformations that better represent real-world data distributions.

These approaches consider:

- Feature dependencies

- Domain-specific constraints

- Statistical consistency of generated samples

This level of augmentation is especially useful in enterprise-grade AI systems where data variability is high.

Synthetic Data Generation vs Traditional Data Augmentation

Although often used interchangeably, synthetic data generation and traditional augmentation are conceptually different.

Key differences include:

- Traditional augmentation modifies existing data samples

- Synthetic data generation creates entirely new samples using probabilistic or generative models

Synthetic data techniques rely on:

- Generative Adversarial Networks

- Variational Autoencoders

- Diffusion models

These methods are particularly effective when real data is scarce or sensitive, such as in healthcare or finance.

Data Augmentation for Imbalanced Datasets

Class imbalance is a major challenge in classification problems. Data augmentation provides a practical solution by increasing the representation of minority classes.

Common techniques include:

- Targeted augmentation of underrepresented classes

- Controlled noise injection

- Class-aware transformation pipelines

By focusing augmentation efforts on minority classes, models become more stable and less biased toward majority labels.
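The sketch below combines targeted augmentation with controlled noise injection. It generates jittered copies of minority-class samples until every class matches the majority count. The feature vectors, labels, and noise level are illustrative assumptions. Balancing should happen after the train/validation split, so that augmented copies of a sample never leak into the evaluation data.

```python
import random
from collections import Counter

def balance_by_augmentation(samples, labels, augment, rng):
    """Augment minority-class samples until every class matches the majority count.

    The original samples are kept unchanged; only the added copies are augmented.
    """
    counts = Counter(labels)
    target = max(counts.values())
    by_class = {}
    for x, y in zip(samples, labels):
        by_class.setdefault(y, []).append(x)
    out_x, out_y = list(samples), list(labels)
    for cls, members in by_class.items():
        for _ in range(target - counts[cls]):
            out_x.append(augment(rng.choice(members), rng))
            out_y.append(cls)
    return out_x, out_y

def jitter(sample, rng, sigma=0.05):
    """Controlled noise injection: small Gaussian perturbation of each feature."""
    return [v + rng.gauss(0, sigma) for v in sample]

X = [[0.0, 0.0], [0.1, 0.2], [0.2, 0.1], [0.0, 0.3], [1.0, 1.0]]
y = ["normal", "normal", "normal", "normal", "fraud"]
bx, by = balance_by_augmentation(X, y, jitter, random.Random(0))
```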

Augmentation Policies and Automated Optimization

Manual selection of augmentation techniques can be inefficient. Automated augmentation methods search for effective transformation policies or shrink the set of choices that must be tuned by hand.

Popular approaches include:

- AutoAugment

- RandAugment

- Population-Based Augmentation

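These methods reduce manual tuning in different ways. AutoAugment and Population-Based Augmentation search for policies that maximize validation performance. RandAugment replaces the search with two global hyperparameters: the number of operations and their magnitude.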
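Here is a simplified sketch of the RandAugment idea. Instead of using a learned policy, it samples N operations uniformly at random and applies each one at a shared magnitude M on a 0-10 scale. The four operations and their magnitude mappings are simplified assumptions, not the published operation set. torchvision ships a full implementation as transforms.RandAugment.

```python
import random

def clip(value):
    """Round and clamp a pixel value to the valid 0-255 range."""
    return min(255, max(0, round(value)))

def identity(img, m):
    return [row[:] for row in img]

def brightness(img, m):
    """Brighten by up to 100 gray levels as m goes from 0 to 10."""
    return [[clip(p + m * 10) for p in row] for row in img]

def contrast(img, m):
    """Stretch each pixel's distance from the image mean by a factor of 1 + m / 10."""
    flat = [p for row in img for p in row]
    mean = sum(flat) / len(flat)
    factor = 1 + m / 10
    return [[clip(mean + (p - mean) * factor) for p in row] for row in img]

def solarize(img, m):
    """Invert pixels at or above a threshold that falls from 256 (no-op) to 0 as m grows."""
    threshold = 256 * (1 - m / 10)
    return [[255 - p if p >= threshold else p for p in row] for row in img]

OPS = [identity, brightness, contrast, solarize]

def rand_augment(img, n, m, rng):
    """Apply n operations drawn uniformly with replacement, all at magnitude m (0-10)."""
    for op in rng.choices(OPS, k=n):
        img = op(img, m)
    return img

rng = random.Random(0)
img = [[10, 120], [200, 250]]
out = rand_augment(img, n=2, m=9, rng=rng)
```

Only N and M need tuning, so a small grid search over these two values can stand in for a full policy search.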

Data Augmentation in Transfer Learning Workflows

Transfer learning models often rely on pretrained weights trained on generic datasets. Augmentation helps adapt these models to new domains.

Benefits include:

- Faster convergence

- Improved domain adaptation

- Reduced need for fine-tuning layers

In practical applications, augmentation bridges the gap between source and target domains without retraining from scratch.

Measuring the Effectiveness of Data Augmentation

Not all augmentation improves performance. Proper evaluation is essential.

Evaluation techniques include:

- Cross-validation analysis

- Learning curve comparisons

- Robustness testing under noise and distortions

Effective augmentation should produce consistent improvements across multiple validation folds or datasets, not just on a single split.
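One evaluation detail is easy to get wrong: augmentation must be applied only to the training folds, never to the held-out fold. Otherwise the scores stop reflecting unseen data. The sketch below runs k-fold cross-validation with and without augmentation on the same folds. majority_baseline is a hypothetical placeholder for a real model, and the toy data is synthetic.

```python
import random
from collections import Counter

def k_fold_indices(n, k, rng):
    """Shuffle indices 0..n-1 and split them into k roughly equal folds."""
    idx = list(range(n))
    rng.shuffle(idx)
    return [idx[i::k] for i in range(k)]

def cross_validate(samples, labels, k, train_and_score, rng, augment=None):
    """Return one score per fold; augmentation, if given, touches only training data."""
    scores = []
    for fold in k_fold_indices(len(samples), k, rng):
        held_out = set(fold)
        train = [i for i in range(len(samples)) if i not in held_out]
        train_x = [samples[i] for i in train]
        train_y = [labels[i] for i in train]
        if augment is not None:
            # Augmented copies are derived from training samples only.
            train_x += [augment(samples[i], rng) for i in train]
            train_y += [labels[i] for i in train]
        val_x = [samples[i] for i in fold]
        val_y = [labels[i] for i in fold]
        scores.append(train_and_score(train_x, train_y, val_x, val_y))
    return scores

def majority_baseline(train_x, train_y, val_x, val_y):
    """Hypothetical stand-in model: always predict the most common training label."""
    guess = Counter(train_y).most_common(1)[0][0]
    return sum(y == guess for y in val_y) / len(val_y)

def jitter(sample, rng, sigma=0.1):
    return [v + rng.gauss(0, sigma) for v in sample]

data_rng = random.Random(0)
X = [[data_rng.random(), data_rng.random()] for _ in range(20)]
y = [int(a + b > 1) for a, b in X]
# The same seed yields identical folds, so the two runs can be compared fold by fold.
baseline = cross_validate(X, y, 5, majority_baseline, random.Random(1))
augmented = cross_validate(X, y, 5, majority_baseline, random.Random(1), augment=jitter)
```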

Augmentation for Edge and Real-Time AI Systems

Edge devices often operate in unpredictable environments. Data augmentation helps simulate real-world conditions during training.

Examples include:

- Low-light image augmentation for surveillance cameras

- Signal distortion simulation for IoT sensors

- Acoustic noise injection for voice assistants

This preparation improves reliability in deployment scenarios with limited computational resources.

Data Augmentation in Multimodal AI Systems

Modern AI systems increasingly rely on multiple data types such as text, images, and audio.

Multimodal augmentation focuses on:

- Synchronizing transformations across data types

- Preserving semantic alignment

- Improving cross-modal learning

For example, augmenting an image-text dataset requires ensuring captions remain consistent with visual transformations.
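Here is a minimal sketch of synchronized augmentation for an image-caption pair, assuming the image is a list of pixel rows. A horizontal flip mirrors the scene, so the spatial words in the caption must be swapped to keep the pair consistent. The SWAP table covers only "left" and "right" and is illustrative, not exhaustive.

```python
import re

# Spatial words whose meaning reverses under a horizontal flip (illustrative, not exhaustive).
SWAP = {"left": "right", "right": "left"}

def _swap_word(match):
    """Swap left/right while keeping an initial capital letter."""
    word = match.group(0)
    swapped = SWAP[word.lower()]
    return swapped.capitalize() if word[0].isupper() else swapped

def flip_image_caption_pair(image, caption):
    """Horizontally flip the image and rewrite the caption so both stay aligned."""
    flipped = [list(reversed(row)) for row in image]
    # Word boundaries keep words such as "leftover" untouched.
    new_caption = re.sub(r"\b(left|right)\b", _swap_word, caption, flags=re.IGNORECASE)
    return flipped, new_caption

image = [[0, 1, 2], [3, 4, 5]]
flipped, caption = flip_image_caption_pair(image, "A dog on the left, a cat on the right")
```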

Data Augmentation and Regulatory Compliance

In regulated industries, transparency in data handling is critical.

Organizations must:

- Document augmentation pipelines

- Validate synthetic data integrity

- Ensure compliance with data protection laws

Proper governance frameworks ensure that augmented datasets remain compliant with industry standards.

Role of Data Augmentation in Responsible AI

Responsible AI initiatives emphasize fairness, transparency, and accountability.

Data augmentation supports responsible AI by:

- Reducing dataset bias

- Improving fairness across demographic groups

- Enhancing robustness against adversarial inputs

However, augmentation must be carefully designed to avoid amplifying existing biases.

Enterprise Adoption of Data Augmentation

Large organizations integrate augmentation into MLOps pipelines to ensure scalability and consistency.

Enterprise adoption typically includes:

- Automated augmentation pipelines

- Version-controlled datasets

- Continuous performance monitoring

This integration ensures long-term model reliability across evolving data landscapes.

Data Augmentation Across Different Data Domains

Data augmentation is not limited to images or text. Its effectiveness spans multiple data domains, each requiring specialized techniques.

Structured Data Augmentation

Structured data presents unique challenges because arbitrary transformations can break logical relationships.

Common techniques include:

- Feature scaling with controlled variance

- Probabilistic value replacement

- Synthetic row generation using statistical sampling

These methods are widely used in fraud detection and financial forecasting.
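As a sketch of synthetic row generation by statistical sampling, the code below fits a normal distribution to each numeric column. For each categorical column it keeps the empirical value frequencies. It then samples new rows, with a variance_scale parameter providing the controlled variance. The column names and data are hypothetical. Each column is sampled independently, which deliberately ignores the feature dependencies discussed above. A production generator would need to model those relationships to avoid logically inconsistent rows.

```python
import random
import statistics

def fit_column_models(rows, numeric_cols, categorical_cols):
    """Fit a normal distribution per numeric column; keep observed values per categorical column."""
    models = {}
    for col in numeric_cols:
        values = [row[col] for row in rows]
        models[col] = ("numeric", statistics.mean(values), statistics.stdev(values))
    for col in categorical_cols:
        # Sampling uniformly from the observed list reproduces the empirical frequencies.
        models[col] = ("categorical", [row[col] for row in rows])
    return models

def sample_rows(models, n, rng, variance_scale=1.0):
    """Draw n synthetic rows; variance_scale < 1 shrinks numeric variance toward the mean."""
    synthetic = []
    for _ in range(n):
        row = {}
        for col, model in models.items():
            if model[0] == "numeric":
                _, mu, sigma = model
                row[col] = rng.gauss(mu, sigma * variance_scale ** 0.5)
            else:
                row[col] = rng.choice(model[1])
        synthetic.append(row)
    return synthetic

transactions = [
    {"amount": 12.0, "channel": "web"},
    {"amount": 30.5, "channel": "store"},
    {"amount": 18.2, "channel": "web"},
    {"amount": 25.1, "channel": "app"},
]
models = fit_column_models(transactions, ["amount"], ["channel"])
synthetic = sample_rows(models, n=100, rng=random.Random(0), variance_scale=0.5)
```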

Domain-Specific Data Augmentation Use Cases

Healthcare and Medical Imaging

In healthcare, data scarcity is common due to privacy concerns.

Augmentation helps by:

- Simulating anatomical variations

- Enhancing rare disease representation

- Improving diagnostic model reliability

These techniques must be validated carefully to avoid clinical misinterpretation.

Autonomous Systems and Robotics

Robotic systems rely on sensor data from multiple sources.

Augmentation simulates:

- Environmental changes

- Sensor noise

- Lighting and weather conditions

This improves model robustness before real-world deployment.

Augmentation Pipelines in MLOps

Modern machine learning workflows integrate augmentation into automated pipelines.

Key components include:

- Dataset versioning

- Reproducible transformation logic

- Continuous evaluation checkpoints

MLOps-driven augmentation ensures consistency across training, testing, and production environments.
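Reproducible transformation logic can be sketched by deriving each sample's random seed from a base seed, the epoch, and the sample index. The parameters actually applied are then recorded as a lineage entry. The scale-and-shift operation and the record format below are hypothetical. The point is that any augmented sample can be regenerated exactly and audited later.

```python
import hashlib
import json
import random

def sample_rng(base_seed, epoch, index):
    """Derive an independent, reproducible RNG for one sample in one epoch.

    hashlib gives the same seed in every process, unlike the salted built-in hash() of strings.
    """
    key = f"{base_seed}:{epoch}:{index}".encode()
    seed = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return random.Random(seed)

def augment_with_lineage(sample, base_seed, epoch, index):
    """Apply a scale-and-shift augmentation and return a lineage record describing it."""
    rng = sample_rng(base_seed, epoch, index)
    params = {"scale": rng.uniform(0.9, 1.1), "shift": rng.uniform(-0.05, 0.05)}
    augmented = [x * params["scale"] + params["shift"] for x in sample]
    lineage = {"index": index, "epoch": epoch, "seed": base_seed,
               "ops": [{"name": "scale_shift", **params}]}
    return augmented, lineage

out1, log1 = augment_with_lineage([1.0, 2.0], base_seed=7, epoch=0, index=3)
out2, log2 = augment_with_lineage([1.0, 2.0], base_seed=7, epoch=0, index=3)
record = json.dumps(log1)  # e.g. appended to a lineage log stored next to the dataset version
```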

Data Augmentation for Small and Medium Datasets

When data availability is limited, augmentation becomes a critical enabler.

Advantages include:

- Reduced overfitting

- Better generalization

- Faster experimentation cycles

Small datasets benefit most when augmentation is applied conservatively and systematically.

Data Augmentation and Model Interpretability

Interpretability is often overlooked in augmented datasets.

Key considerations:

- Track transformation lineage

- Analyze feature importance shifts

- Monitor decision boundary stability

Transparent augmentation practices help maintain trust in AI-driven decisions.

Augmentation in Natural Language Processing

Text-based augmentation requires preserving semantic meaning.

Popular techniques include:

- Synonym substitution

- Sentence paraphrasing

- Back translation

These approaches improve NLP model performance without distorting original intent.

Advanced Image Augmentation Techniques

Beyond basic transformations, advanced image augmentation focuses on realism.

Techniques include:

- Style transfer

- Random erasing

- MixUp and CutMix

These methods improve generalization for deep learning models in computer vision.
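Here is a minimal sketch of MixUp and CutMix, assuming feature vectors or images stored as Python lists and labels given as one-hot lists. MixUp blends two samples and their labels with a weight drawn from a Beta(alpha, alpha) distribution. CutMix pastes a random rectangle from one image into another and mixes the labels in proportion to the pasted area.

```python
import random

def mixup(x1, y1, x2, y2, alpha, rng):
    """MixUp: convex combination of two samples and their one-hot labels, lam ~ Beta(alpha, alpha)."""
    lam = rng.betavariate(alpha, alpha)
    x = [lam * a + (1 - lam) * b for a, b in zip(x1, x2)]
    y = [lam * a + (1 - lam) * b for a, b in zip(y1, y2)]
    return x, y

def cutmix(img1, y1, img2, y2, alpha, rng):
    """CutMix: paste a random box from img2 into img1 and mix labels by the pasted area."""
    h, w = len(img1), len(img1[0])
    lam = rng.betavariate(alpha, alpha)
    # Box side lengths chosen so the box covers roughly (1 - lam) of the image.
    cut_h, cut_w = int(h * (1 - lam) ** 0.5), int(w * (1 - lam) ** 0.5)
    cy, cx = rng.randrange(h), rng.randrange(w)
    top, bottom = max(0, cy - cut_h // 2), min(h, cy + cut_h // 2)
    left, right = max(0, cx - cut_w // 2), min(w, cx + cut_w // 2)
    mixed = [row[:] for row in img1]
    for r in range(top, bottom):
        mixed[r][left:right] = img2[r][left:right]
    # Recompute lam from the box actually pasted, since clipping at the border can shrink it.
    lam = 1 - (bottom - top) * (right - left) / (h * w)
    y = [lam * a + (1 - lam) * b for a, b in zip(y1, y2)]
    return mixed, y

rng = random.Random(0)
x_mix, y_mix = mixup([0.2, 0.4], [1, 0], [0.8, 0.6], [0, 1], alpha=0.2, rng=rng)
zeros = [[0] * 8 for _ in range(8)]
ones = [[1] * 8 for _ in range(8)]
img_mix, y_cut = cutmix(zeros, [1, 0], ones, [0, 1], alpha=1.0, rng=rng)
```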

Time-Series Data Augmentation

Time-series data requires continuity preservation.

Effective techniques include:

- Window slicing

- Temporal warping

- Noise injection with trend preservation

Applications include financial forecasting and predictive maintenance.
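The sketch below implements three techniques for a series stored as a list of floats. It covers window slicing, zero-mean jitter, and a simple uniform time stretch by linear interpolation, a basic form of temporal warping. The function names and parameters are illustrative.

```python
import random

def window_slice(series, ratio, rng):
    """Keep a random contiguous window covering `ratio` of the series (0 < ratio <= 1)."""
    size = max(1, int(len(series) * ratio))
    start = rng.randrange(len(series) - size + 1)
    return series[start:start + size]

def jitter(series, sigma, rng):
    """Add zero-mean Gaussian noise; the underlying trend is unchanged in expectation."""
    return [x + rng.gauss(0, sigma) for x in series]

def resample(series, new_len):
    """Linearly interpolate the series onto new_len evenly spaced points (uniform time stretch)."""
    if new_len == 1 or len(series) == 1:
        return [series[0]] * new_len
    out = []
    for i in range(new_len):
        pos = i * (len(series) - 1) / (new_len - 1)
        lo = int(pos)
        hi = min(lo + 1, len(series) - 1)
        frac = pos - lo
        out.append(series[lo] * (1 - frac) + series[hi] * frac)
    return out

rng = random.Random(0)
x = [float(t) for t in range(10)]
# Crop a window and stretch it back to the original length, then add light noise.
warped = jitter(resample(window_slice(x, 0.8, rng), len(x)), sigma=0.05, rng=rng)
```

Cropping a window and stretching it back to the original length, as in the last line, combines slicing with warping while keeping the series continuous.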

Data Augmentation in Reinforcement Learning

Reinforcement learning environments benefit from simulated diversity.

Augmentation strategies include:

- State perturbation

- Reward shaping

- Environment randomization

These approaches reduce overfitting to specific scenarios.
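Environment randomization and state perturbation can be sketched as follows. The parameter names and ranges are hypothetical. The environment constructor is left as a comment because it depends entirely on the simulator in use.

```python
import random

# Hypothetical parameter ranges for a simulated environment.
PARAM_RANGES = {
    "friction": (0.5, 1.5),
    "mass": (0.8, 1.2),
    "sensor_delay_steps": (0, 3),
}

def sample_environment(rng):
    """Environment randomization: draw fresh physics parameters for each episode."""
    params = {}
    for name, (low, high) in PARAM_RANGES.items():
        if isinstance(low, int) and isinstance(high, int):
            params[name] = rng.randint(low, high)  # integer-valued setting
        else:
            params[name] = rng.uniform(low, high)
    return params

def perturb_state(state, sigma, rng):
    """State perturbation: add small Gaussian noise to the observation the agent sees."""
    return [s + rng.gauss(0, sigma) for s in state]

rng = random.Random(0)
for episode in range(3):
    params = sample_environment(rng)
    # env = make_env(**params)  # hypothetical simulator constructor
    observation = perturb_state([0.0, 1.0, 0.5], sigma=0.01, rng=rng)
```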

Ethical Considerations in Data Augmentation

Augmentation must be applied ethically to avoid misleading outcomes.

Ethical guidelines include:

- Avoiding demographic distortion

- Preventing unrealistic scenario creation

- Ensuring auditability

Ethical augmentation contributes to responsible AI development.

Data Augmentation Performance Trade-Offs

While augmentation improves generalization, it can introduce trade-offs.

Trade-offs include:

- Increased training time

- Computational overhead

- Complex pipeline management

Understanding these trade-offs helps teams design balanced systems.

Data Augmentation in Industry-Scale AI Systems

Large-scale AI systems rely on augmentation to handle data drift.

Benefits include:

- Improved robustness to distribution shifts

- Faster adaptation to new data patterns

- Enhanced model longevity

Industry-scale adoption often includes real-time augmentation strategies.

Augmentation Strategy Selection Framework

Selecting the right augmentation strategy requires systematic evaluation.

Key factors:

- Dataset size

- Domain sensitivity

- Model architecture

- Deployment constraints

A structured framework prevents over-augmentation and maintains data integrity.

Real-World Use Cases Across Industries

Healthcare

Medical imaging models use augmented scans to detect diseases more accurately, even with limited patient data.

Autonomous Vehicles

Self-driving systems rely on augmented road images to handle weather, lighting, and traffic variations.

Retail and E-commerce

Product recognition and recommendation systems improve accuracy by training on augmented visual and textual data.

Finance

Fraud detection systems simulate rare transaction patterns to strengthen model reliability.

Popular Libraries and Tools for Data Augmentation

Several open-source tools simplify implementation.

Commonly used libraries:

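- TensorFlow/Keras ImageDataGenerator (not recommended for new code in current TensorFlow releases, which favor Keras preprocessing layers)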

- PyTorch torchvision transforms

- Albumentations

- nlpaug for text augmentation

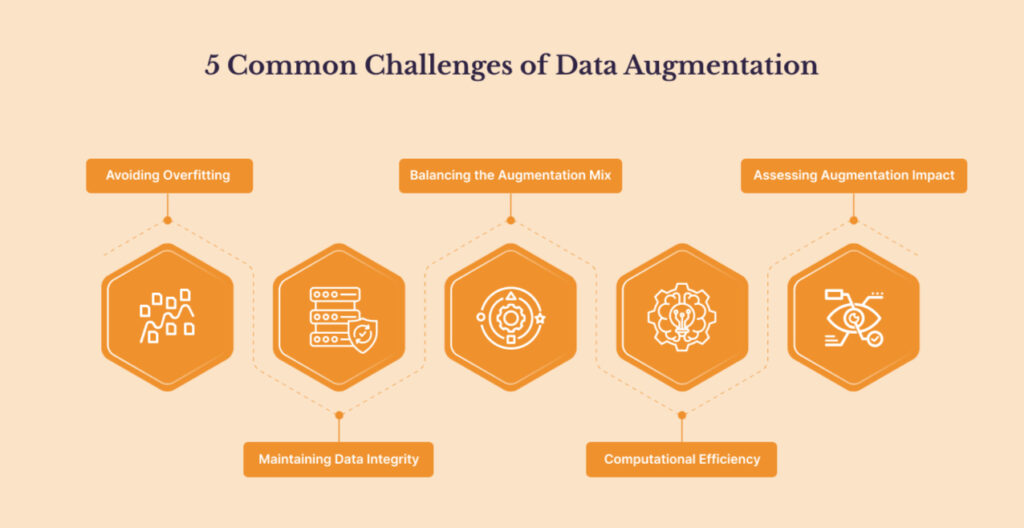

Challenges and Limitations of Data Augmentation

Despite its advantages, data augmentation is not without limitations.

Challenges include:

- Increased training time

- Risk of unrealistic samples

- Domain-specific complexity

- Difficulty in evaluating augmentation quality

Understanding these limitations helps practitioners apply augmentation strategically rather than blindly.

Best Practices and Common Pitfalls

While data augmentation is powerful, incorrect use can degrade performance.

Best practices:

- Maintain label integrity

- Apply domain-relevant transformations

- Avoid excessive distortion

- Validate augmented data quality

Common mistakes include applying transformations that alter semantic meaning or introduce unrealistic patterns.

Performance Impact and Evaluation

Evaluating augmentation effectiveness requires careful experimentation.

Metrics to monitor:

- Validation accuracy

- Precision and recall

- Model robustness to noise

- Performance on unseen datasets

Comparing models trained with and without augmentation under otherwise identical settings (an A/B comparison) is a reliable way to measure its impact.

Ethical and Practical Considerations

Artificially generated data must be used responsibly.

Key considerations:

- Bias amplification

- Data representativeness

- Transparency in AI pipelines

In regulated industries, documentation of augmentation techniques is often required.

Future Trends in Data Augmentation

As AI evolves, augmentation techniques are becoming more intelligent.

Emerging trends include:

- GAN-based synthetic data generation

- Automated augmentation policy learning

- Domain-adaptive augmentation

- Multimodal data augmentation

These advancements will further reduce dependence on large labeled datasets.

Conclusion

Modern machine learning systems demand robust, generalizable models capable of handling real-world variability. Data augmentation plays a crucial role in achieving this goal by enhancing dataset diversity without increasing data collection costs.

When applied thoughtfully, it improves model accuracy, reduces overfitting, and strengthens reliability across industries. As tools and techniques continue to evolve, data augmentation will remain a cornerstone of effective AI development.

FAQs

How does data augmentation help in training machine learning models?

Data augmentation improves model performance by artificially increasing dataset size and diversity, reducing overfitting and helping models generalize better to unseen data.

How can the robustness of machine learning models be improved?

Model robustness can be improved through data augmentation, high-quality and diverse datasets, regularization techniques, cross-validation, hyperparameter tuning, and continuous evaluation on unseen data.

What is the best data augmentation strategy?

The best data augmentation strategy depends on the data type, but generally involves applying realistic transformations (such as rotation, scaling, noise addition, or text paraphrasing) that preserve labels while increasing data diversity and generalization.

What is an example of data augmentation?

An example of data augmentation is rotating, flipping, or zooming images in an image classification dataset to create new training samples without collecting additional data.

What are the benefits of augmentation?

Augmentation increases dataset size and diversity without new data collection. This reduces overfitting, improves generalization to unseen data, and increases robustness to variations such as noise, lighting, or phrasing. It also helps balance underrepresented classes.