In today’s digital-first world, organizations are generating data at an unprecedented scale. From eCommerce transactions to healthcare systems, social media interactions, IoT devices, and financial markets, data is flowing at lightning speed. But raw data by itself has no value unless it is structured, processed, and analyzed for insights.

This is where the role of a Big Data Engineer comes in. Big data engineers are the architects who design, build, and manage the systems that enable companies to process massive volumes of data efficiently. Without them, advanced data analytics, machine learning, and AI applications would not be possible.

Who is a Big Data Engineer?

A Big Data Engineer is a specialized IT professional responsible for developing, maintaining, testing, and optimizing big data systems. Their job revolves around building scalable pipelines that collect, store, and process large data sets so that analysts and data scientists can use them for decision-making.

Think of a big data engineer as the data plumber — ensuring that data flows smoothly from one source to another, cleaned, transformed, and ready for use.

Why Big Data Engineers Are in High Demand

The global big data market is projected to reach $650 billion by 2029 (Statista). Every industry — finance, healthcare, retail, logistics, and entertainment — depends on data-driven insights.

Reasons for rising demand:

- Explosion of IoT and connected devices.

- Increasing adoption of cloud computing.

- AI and machine learning relying heavily on big data.

- Companies needing real-time analytics for competitive advantage.

Without big data engineers, companies would be overwhelmed with unstructured information and unable to act quickly in dynamic markets.

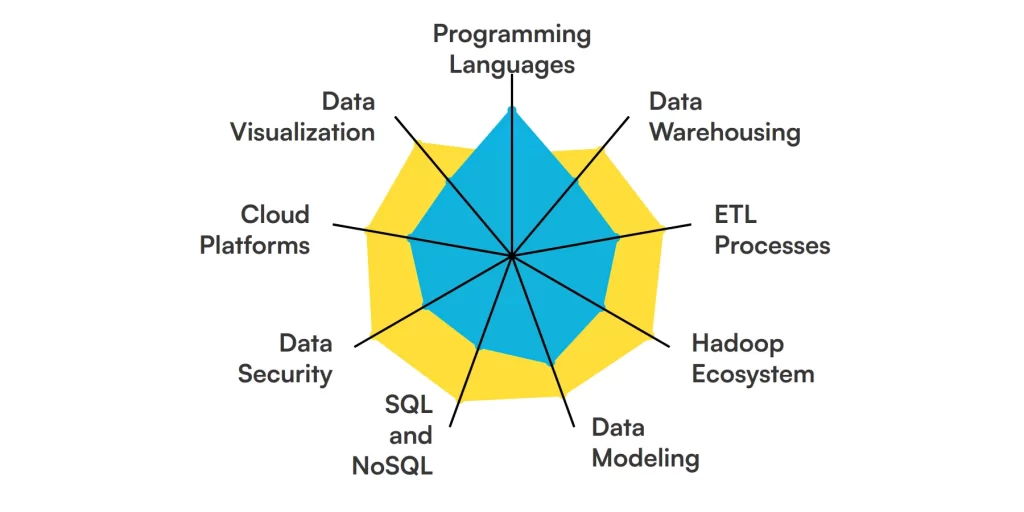

Skills Required for a Big Data Engineer

To excel as a big data engineer, one must develop both technical and non-technical skills.

1. Programming Languages

- Python: Widely used for scripting, data wrangling, and analytics.

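- Java/Scala: Hadoop is written in Java and Spark in Scala, so both are valuable for working closely with those frameworks (Spark also offers a Python API, PySpark).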

- SQL: For managing and querying relational databases.

2. Big Data Tools and Frameworks

- Apache Hadoop

- Apache Spark

- Kafka for data streaming

- Flink for real-time processing

3. Database Management Skills

- Relational Databases (MySQL, PostgreSQL)

- NoSQL Databases (MongoDB, Cassandra, HBase)

4. Cloud Computing Knowledge

- AWS (Amazon EMR, Redshift)

- Google Cloud (BigQuery, Dataflow)

- Microsoft Azure (HDInsight, Synapse)

5. Data Warehousing

Understanding data pipelines, ETL processes, and warehouse solutions like Snowflake and Redshift.

6. Soft Skills

- Problem-solving

- Communication

- Collaboration with data scientists & analysts

Responsibilities of a Big Data Engineer

Big data engineers wear multiple hats in organizations. Their core responsibilities include:

- Designing scalable data pipelines.

- Collecting and integrating data from multiple sources.

- Cleaning and transforming raw data.

- Optimizing performance of data infrastructure.

- Collaborating with analysts and scientists.

- Ensuring data security and compliance.
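
To make the collect, clean, and transform responsibilities above concrete, here is a minimal extract-transform-load sketch using only the Python standard library. The sample CSV, the column names, and the `orders` table are hypothetical, and an in-memory SQLite database stands in for a real warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export: stray whitespace, mixed casing, and one bad row.
RAW_CSV = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,carol,not_a_number
"""

def extract(text):
    """Read CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Normalize customer names and drop rows whose amount is not numeric."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # a real pipeline would usually route this to a reject table
        clean.append((int(row["order_id"]), row["customer"].strip().lower(), amount))
    return clean

def load(rows, conn):
    """Write cleaned rows into a SQLite table standing in for a warehouse."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute("SELECT customer, amount FROM orders ORDER BY order_id").fetchall()
print(loaded)
```

Production pipelines replace each step with a distributed equivalent, but the extract, transform, load shape usually stays the same.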

Big Data Engineer vs Data Scientist: Key Differences

| Feature | Big Data Engineer | Data Scientist |
| --- | --- | --- |
| Focus | Infrastructure & pipelines | Analysis & modeling |
| Tools | Hadoop, Spark, Kafka | Python, R, TensorFlow |
| Goal | Data availability & scalability | Insight extraction & predictions |

While both roles complement each other, big data engineers act as the backbone, making sure the data is usable for advanced analytics.

Real-Time Applications of Big Data Engineering

- Healthcare: Patient data integration for early disease detection.

- Retail: Recommendation engines for eCommerce platforms like Amazon.

- Finance: Fraud detection using real-time data processing.

- Transport: Optimizing routes for ride-sharing services like Uber.

- Entertainment: Streaming platforms (Netflix, Spotify) using big data for personalization.

Tools and Technologies Every Big Data Engineer Must Know

- Apache Hadoop & Spark

- Kafka & Flink

- Hive & Pig

- NoSQL databases like MongoDB, Cassandra

- Cloud-based big data solutions (AWS, GCP, Azure)

Roadmap to Becoming a Big Data Engineer

- Learn programming (Python, Java, SQL).

- Gain knowledge of databases (SQL + NoSQL).

- Master big data frameworks (Hadoop, Spark).

- Practice cloud platforms.

- Work on real projects (ETL pipelines, streaming data).

- Get certified and build a strong portfolio.

Certifications to Boost Your Big Data Engineering Career

- Google Professional Data Engineer

- AWS Certified Data Engineer - Associate (successor to the retired Big Data and Data Analytics Specialty exams)

- Cloudera Certified Data Engineer

- Microsoft Certified: Azure Data Engineer Associate

Advanced Architectures in Big Data Engineering

1. Lambda Architecture

- Combines batch processing and real-time streaming.

- Batch layer handles large-scale historical data, while the speed layer deals with real-time events.

Example: Fraud detection in banking, where transactions are checked instantly while long-term risk profiles are updated in batch.
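
The split between the batch layer and the speed layer can be sketched in a few lines of plain Python. This is an illustrative toy, not a production design: the account names, the amounts, and the `SpeedLayer` class are all hypothetical, and in practice each layer would be a separate distributed system.

```python
from collections import defaultdict

def build_batch_view(historical_events):
    """Batch layer: recompute per-account totals from the full history."""
    view = defaultdict(int)
    for account, amount in historical_events:
        view[account] += amount
    return dict(view)

class SpeedLayer:
    """Speed layer: incrementally tracks events not yet covered by a batch run."""

    def __init__(self):
        self.realtime_view = defaultdict(int)

    def ingest(self, account, amount):
        self.realtime_view[account] += amount

    def reset(self):
        # Called after a new batch view has absorbed these events.
        self.realtime_view.clear()

def query(account, batch_view, speed_layer):
    """Serving layer: merge the batch view with the real-time view."""
    return batch_view.get(account, 0) + speed_layer.realtime_view.get(account, 0)

history = [("acct-1", 100), ("acct-2", 40), ("acct-1", 25)]
batch_view = build_batch_view(history)

speed = SpeedLayer()
speed.ingest("acct-1", 10)  # a transaction that arrived after the last batch run

total_acct_1 = query("acct-1", batch_view, speed)
print(total_acct_1)
```

The cost of this design is that the same aggregation logic lives in two places, which is the main thing Kappa tries to avoid.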

2. Kappa Architecture

- A simplified alternative to Lambda.

- Relies solely on real-time streaming systems (e.g., Apache Kafka, Flink).

Example: Social media monitoring where streams are continuous, and batch is not needed.
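
A rough way to picture Kappa: there is one append-only log and one processing code path, and reprocessing means replaying the log from the start. In the sketch below, the in-memory `EventLog` class is a hypothetical stand-in for a Kafka topic, and the sample posts are made up.

```python
class EventLog:
    """Append-only log standing in for a streaming topic (in-memory, hypothetical)."""

    def __init__(self):
        self._events = []

    def append(self, event):
        self._events.append(event)

    def read_from(self, offset=0):
        """Yield events starting at the given offset, oldest first."""
        return iter(self._events[offset:])

def count_mentions(events, keyword):
    """The single stream-processing code path: count posts per user that mention a keyword."""
    counts = {}
    for user, text in events:
        if keyword in text.lower():
            counts[user] = counts.get(user, 0) + 1
    return counts

log = EventLog()
for event in [
    ("ana", "Loving the new Spark release"),
    ("raj", "kafka outage?"),
    ("ana", "spark tips thread"),
]:
    log.append(event)

live_counts = count_mentions(log.read_from(0), "spark")

# When the logic changes, replay the same log through the new version
# instead of maintaining a separate batch job.
replayed_counts = count_mentions(log.read_from(0), "kafka")
print(live_counts, replayed_counts)
```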

3. Microservices for Data Pipelines

- Breaking down large ETL pipelines into containerized microservices for scalability.

- Often containerized with Docker and orchestrated with Kubernetes.

Big Data Engineering in AI and Machine Learning

Big data engineers are no longer only infrastructure experts — they now empower machine learning engineers and AI scientists.

- Building feature stores for ML models.

- Creating real-time streaming datasets for recommendation engines.

- Supporting large-scale training of generative AI (e.g., diffusion models, LLMs).

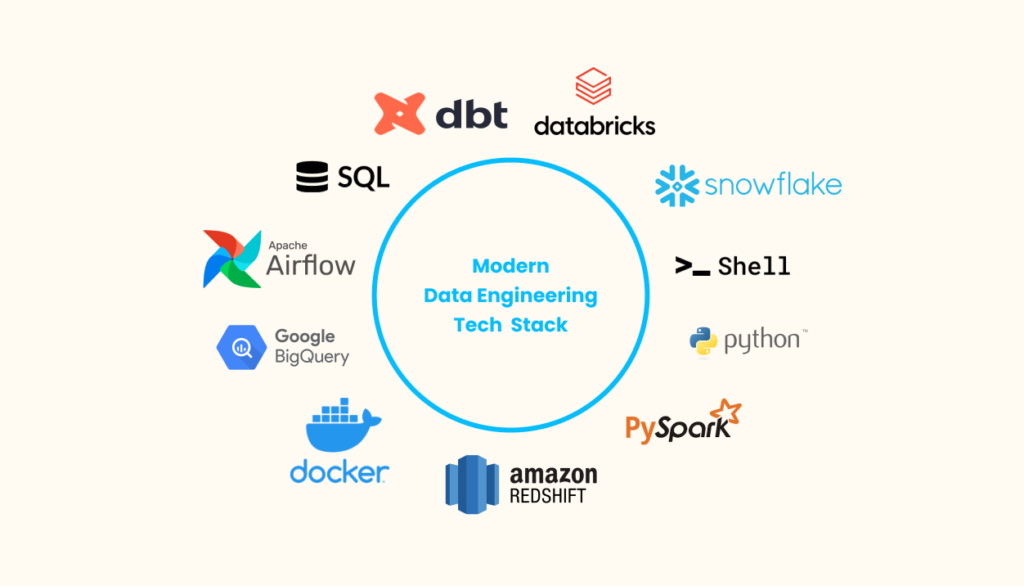

Advanced Data Engineering Tools and Ecosystem

Beyond Hadoop and Spark, modern big data engineers use advanced tools:

- Airflow – Workflow orchestration for scheduling data pipelines.

- dbt (Data Build Tool) – SQL-based transformation tool for analytics.

- Delta Lake – Ensures ACID transactions on data lakes.

- Apache Iceberg – High-performance data table format for big data.

- Snowflake & Databricks – Cloud-native data platforms merging data engineering and analytics.
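
Workflow orchestrators such as Airflow model a pipeline as a directed acyclic graph (DAG) of tasks. The sketch below shows only that core idea with Python's standard `graphlib` module (Python 3.9+). It is not Airflow's API, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks that must finish before it can start.
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join_tables": {"extract_orders", "extract_customers"},
    "quality_checks": {"join_tables"},
    "publish_report": {"quality_checks"},
}

def run_pipeline(deps, tasks):
    """Run every task in an order that respects its dependencies."""
    order = []
    for name in TopologicalSorter(deps).static_order():
        tasks[name]()
        order.append(name)
    return order

executed = []
# Placeholder task bodies that just record that they ran.
tasks = {name: (lambda n=name: executed.append(n)) for name in dependencies}

run_order = run_pipeline(dependencies, tasks)
print(run_order)
```

A real orchestrator adds scheduling, retries, and monitoring on top of this ordering step.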

Security and Compliance in Big Data Engineering

Data engineers must ensure that massive pipelines comply with global regulations:

- GDPR (Europe) – User data privacy.

- HIPAA (US Healthcare) – Protection of medical records.

- CCPA (California) – Consumer privacy.

Techniques used:

- Data anonymization & tokenization.

- Encryption at rest & in transit.

- Role-based access control (RBAC).
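
As a small illustration of tokenization, the sketch below replaces an identifier with a keyed hash (HMAC-SHA256) and masks an email address. The key, field names, and record are hypothetical. Keyed hashing of this kind is pseudonymization rather than full anonymization, so whether it satisfies a given regulation still has to be assessed case by case.

```python
import hashlib
import hmac

# Hypothetical key for the demo; a real key would come from a secrets manager.
SECRET_KEY = b"demo-key-do-not-use-in-production"

def tokenize(value: str, key: bytes = SECRET_KEY) -> str:
    """Replace a sensitive value with a stable, keyed token."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]

def mask_email(email: str) -> str:
    """Keep the first character and the domain, hide the rest."""
    local, _, domain = email.partition("@")
    return local[:1] + "***@" + domain

record = {"patient_id": "P-10293", "email": "jane.doe@example.com", "diagnosis_code": "E11"}

safe_record = {
    "patient_token": tokenize(record["patient_id"]),
    "email": mask_email(record["email"]),
    "diagnosis_code": record["diagnosis_code"],
}
print(safe_record)
```

Because the same input always maps to the same token, tokenized tables can still be joined on the token without exposing the original identifier.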

Performance Optimization Techniques

Big data systems deal with petabytes of data, so performance is critical. Techniques include:

- Partitioning and bucketing large datasets.

- Caching frequently reused datasets in memory (for example, Spark's cached RDDs and DataFrames).

- Parallel processing across distributed clusters.

- Storing data in columnar file formats such as Parquet or ORC, which support compression and reading only the columns a query needs.
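
Partitioning and bucketing are easier to see with a toy example. In this sketch, records are grouped by a partition column (the date) and then hashed into a fixed number of buckets by user ID. The field names and the bucket count are assumptions for illustration, and lists in a dictionary stand in for files on disk.

```python
import zlib
from collections import defaultdict

def bucket_for(user_id, num_buckets):
    """Stable hash bucket; crc32 is consistent across runs, unlike built-in hash() on str."""
    return zlib.crc32(user_id.encode("utf-8")) % num_buckets

def layout(records, num_buckets=4):
    """Group records into (partition, bucket) 'files'."""
    files = defaultdict(list)
    for record in records:
        key = (record["event_date"], bucket_for(record["user_id"], num_buckets))
        files[key].append(record)
    return files

def scan(files, event_date):
    """Partition pruning: read only the files for the requested date."""
    return [
        record
        for (date, _bucket), rows in files.items()
        if date == event_date
        for record in rows
    ]

events = [
    {"event_date": "2024-01-01", "user_id": "u1", "clicks": 3},
    {"event_date": "2024-01-01", "user_id": "u2", "clicks": 1},
    {"event_date": "2024-01-02", "user_id": "u1", "clicks": 7},
]
files = layout(events)
jan_first = scan(files, "2024-01-01")
print(len(jan_first))
```

Queries that filter on the partition column skip whole directories, and rows for the same user always land in the same bucket number, which helps joins on that key.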

Industry-Wise Use Cases of Big Data Engineering

1. Healthcare

- Building patient record lakes for predictive analytics.

- Genomic data storage and processing.

2. Retail & eCommerce

- Personalized product recommendations.

- Demand forecasting using sales + weather + social media data.

3. Finance

- High-frequency trading systems relying on real-time big data pipelines.

- AML (Anti-Money Laundering) detection via anomaly detection.

4. Smart Cities & IoT

- Traffic flow optimization using sensor data.

- Energy consumption forecasting for smart grids.

Research and Academic Importance of Big Data Engineering

- Universities offering Master’s in Data Engineering (Carnegie Mellon, ETH Zurich, IITs).

- Research areas: Scalable storage models, distributed algorithms, and federated data systems.

- Academic collaborations with industries (e.g., Google Cloud + MIT for big data research).

Big Data Engineering Market Growth (2024–2025)

- According to Statista, the global big data market size is projected to hit $447 billion by 2025.

- A LinkedIn Jobs Report (2024) shows “Big Data Engineer” consistently ranks among the top 10 emerging tech roles worldwide.

- Industries with the highest demand for big data engineers:

  - Finance & Banking → Fraud detection, credit risk scoring.

  - Healthcare → Predictive analytics for patient care.

  - Retail/eCommerce → Personalized recommendations and dynamic pricing.

  - Telecom → Optimizing bandwidth and reducing churn.

Rise of Real-Time Data Processing

Batch systems like Hadoop are declining, while real-time frameworks are booming.

- Apache Kafka + Flink + Spark Structured Streaming dominate real-time use cases.

- Example: Uber uses real-time big data pipelines to calculate surge pricing and ETA predictions instantly.

- Cloud-native services like AWS Kinesis, Google Pub/Sub, and Azure Stream Analytics are replacing traditional batch ETL systems.
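
A common building block in real-time processing is the tumbling window, which groups events into fixed, non-overlapping time slices. The sketch below counts ride requests per zone per minute in plain Python. The timestamps and zone names are made up, and real engines such as Flink or Spark Structured Streaming also deal with late and out-of-order events, which this toy ignores.

```python
from collections import defaultdict

def tumbling_window_counts(events, window_seconds):
    """Count events per (window start, key) using fixed, non-overlapping windows."""
    counts = defaultdict(int)
    for timestamp, key in events:
        window_start = (timestamp // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)

# (seconds since start, pickup zone); hypothetical data
ride_requests = [
    (0, "downtown"),
    (12, "downtown"),
    (59, "airport"),
    (61, "downtown"),
    (118, "downtown"),
]

per_minute = tumbling_window_counts(ride_requests, 60)
print(per_minute)
```

A pricing or ETA service could read these per-window counts as a rough demand signal.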

Big Data and Generative AI Integration

- Big Data Engineers now prepare training pipelines for LLMs (Large Language Models) and Generative AI.

Example: OpenAI, Anthropic, and Google DeepMind rely on massive engineered datasets for training GPT, Claude, and Gemini.

- Engineers must now manage:

  - Synthetic data generation for AI models.

  - Scaling distributed training across clusters of thousands of GPUs.

  - Data bias detection in AI training pipelines.

Cloud-Native Data Engineering

Most modern data engineering is moving entirely to the cloud.

- Snowflake, Databricks, and BigQuery dominate the modern data stack.

- Data Lakehouse (merging lakes and warehouses) is the hottest trend.

Example: Netflix migrated to a Lakehouse architecture on AWS + Databricks for real-time personalization.

DataOps and MLOps Convergence

- Big Data Engineering is no longer isolated: it is merging with DataOps (automation of data pipelines) and MLOps (deployment of ML models).

- Tools like Airflow, MLflow, and dbt help streamline end-to-end AI pipelines.

- Example: Spotify’s DataOps framework processes millions of real-time streams while feeding ML models for music recommendations.

Latest Tools in the Big Data Engineer’s Toolbox

- Apache Iceberg & Delta Lake → Future-proof storage formats.

- DuckDB → Lightweight SQL engine for analytics on local machines.

- dbt (Data Build Tool) → For SQL-based data transformations.

- Great Expectations → Open-source tool for data quality checks.

- Ray + Dask → For distributed machine learning and data processing.
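
To show what data quality checks look like in practice, here is a minimal hand-rolled version in plain Python. It does not use the Great Expectations API. The check names, columns, and sample rows are hypothetical and only illustrate the kind of rule such tools let you declare.

```python
def check_not_null(rows, column):
    """Every row has a non-null value in the column."""
    return all(row.get(column) is not None for row in rows)

def check_unique(rows, column):
    """No value appears more than once in the column."""
    values = [row[column] for row in rows]
    return len(values) == len(set(values))

def check_between(rows, column, low, high):
    """Every value in the column falls within [low, high]."""
    return all(low <= row[column] <= high for row in rows)

def run_checks(rows):
    """Run all checks and return a name -> passed mapping."""
    return {
        "order_id not null": check_not_null(rows, "order_id"),
        "order_id unique": check_unique(rows, "order_id"),
        "amount in [0, 10000]": check_between(rows, "amount", 0, 10_000),
    }

orders = [
    {"order_id": 1, "amount": 19.99},
    {"order_id": 2, "amount": 5.0},
    {"order_id": 2, "amount": -3.0},  # duplicate id and negative amount
]

report = run_checks(orders)
failed = [name for name, passed in report.items() if not passed]
print(failed)
```

A pipeline would typically stop or raise an alert when `failed` is not empty, rather than loading bad data downstream.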

Security and Responsible Data Engineering

- With increasing global data regulations, big data engineers must integrate privacy-preserving techniques.

- Trends in secure big data pipelines:

  - Homomorphic encryption (process data without decrypting).

  - Federated learning (train ML models without moving raw data).

  - Zero-trust architecture for data pipelines.

Example: Apple’s Federated Learning collects insights from millions of iPhones without centralizing private data.
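
The core idea of federated learning, training locally and sharing only model updates, can be sketched with a one-parameter model. The code below is a simplified federated-averaging loop written for illustration, not any vendor's implementation. The device data, learning rate, and round counts are assumptions.

```python
def local_train(w, samples, lr=0.01, epochs=20):
    """Fit y = w * x on one device's private samples with gradient descent."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in samples) / len(samples)
        w -= lr * grad
    return w

def federated_average(updates):
    """Combine (weight, sample_count) pairs into one sample-weighted average."""
    total = sum(count for _, count in updates)
    return sum(weight * count for weight, count in updates) / total

# Each inner list is data that never leaves its device (true relation: y = 2x).
devices = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
    [(1.0, 2.0), (4.0, 8.0), (2.0, 4.0)],
]

global_w = 0.0
for _ in range(10):
    # Only the trained weight and a sample count are sent to the server.
    updates = [(local_train(global_w, data), len(data)) for data in devices]
    global_w = federated_average(updates)

print(round(global_w, 3))
```

The server only ever sees weights and counts, which is the property that makes the approach attractive for private data.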

Industry Case Studies (Latest Examples)

- Tesla → Uses big data pipelines to process autonomous driving sensor data in real time.

- Airbnb → Relies on data engineering + ML for pricing optimization and fraud prevention.

- NASA → Big data pipelines analyze space mission data, including petabyte-scale satellite imagery.

Challenges in Big Data Engineering

- Handling unstructured data at scale.

- Ensuring security and compliance (GDPR, HIPAA).

- Integrating data from multiple sources.

- Managing cost on cloud platforms.

- Keeping up with evolving technologies.

Future of Big Data Engineering

- Data Mesh Architecture

  - Decentralized approach: Data treated as a product, owned by domain teams.

  - Example: Large enterprises like Netflix and Spotify adopting data mesh.

- AI-Powered Data Engineering

  - Automated ETL using AI (self-healing pipelines).

  - AI-based anomaly detection for data quality.

- Streaming-First Infrastructure

  - Batch processing is declining; real-time is becoming the norm.

- Quantum Data Engineering

  - Future possibility where quantum processors will handle unstructured data analysis at unimaginable speeds.

- Edge Computing

  - Processing closer to data sources.

- Real-Time Analytics

  - Increasing demand for instant insights.

- DataOps & MLOps

  - Bridging data engineering and machine learning.
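
Anomaly detection for data quality, mentioned in the list above, often starts from a simple statistical baseline before any learned model is involved. The sketch below flags days whose row count deviates sharply from the median, using the modified z-score based on the median absolute deviation. The row counts and the 3.5 threshold are illustrative assumptions.

```python
import statistics

def find_anomalies(daily_row_counts, threshold=3.5):
    """Return indices of values whose modified z-score exceeds the threshold."""
    median = statistics.median(daily_row_counts)
    deviations = [abs(x - median) for x in daily_row_counts]
    mad = statistics.median(deviations)
    if mad == 0:
        return []  # no spread at all, so nothing can be scored
    return [
        i
        for i, x in enumerate(daily_row_counts)
        if abs(0.6745 * (x - median) / mad) > threshold
    ]

# Hypothetical rows loaded per day; day 4 looks like a partial load.
row_counts = [1000, 1020, 990, 1010, 120, 1005, 995]

anomalous_days = find_anomalies(row_counts)
print(anomalous_days)
```

A self-healing pipeline might respond to a flagged day by re-running the load or holding back downstream jobs.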

Case Studies and Real-World Examples

- Netflix: Uses big data engineering for personalized recommendations, which the company has estimated saves it about $1 billion a year through improved customer retention.

- Uber: Real-time big data pipelines optimize driver routes and surge pricing.

- Airbnb: Data pipelines built with Hadoop and Spark manage millions of listings globally.

Conclusion

A Big Data Engineer plays a mission-critical role in the modern data ecosystem. They are the enablers of innovation, ensuring data is collected, processed, and made accessible for analytics and decision-making.

With the growing reliance on AI, cloud, and real-time analytics, the demand for skilled big data engineers will only increase. By mastering the right skills, tools, and certifications, you can position yourself for one of the most rewarding careers in the data industry.

FAQs

What does a big data engineer do?

A big data engineer designs, builds, and maintains systems that collect, process, and analyze large-scale datasets, ensuring data is accessible, reliable, and optimized for analytics, machine learning, and business decision-making.

Is big data engineering a coding job?

Yes, a big data engineer role involves significant coding, as they use programming languages like Python, Java, Scala, or SQL to build data pipelines, process large datasets, and optimize systems for analytics and machine learning.

Is Python useful for big data engineering?

Yes, Python is highly useful for big data engineering because of its rich ecosystem of libraries and frameworks like PySpark, Pandas, Dask, and Airflow, which simplify data processing, pipeline automation, and integration with big data platforms.

Do data engineers use AI?

Yes, data engineers support AI by building and managing the data pipelines and infrastructure that feed machine learning models, though they typically focus on preparing and delivering high-quality data rather than developing AI algorithms directly.

Which programming language is best for big data?

The best programming languages for big data are Python (for flexibility and libraries), Java (for Hadoop ecosystem), Scala (for Apache Spark), and SQL (for querying and managing data), with the choice depending on the platform and use case.