The digital age marked the birth of cybercrime. Since then, there has been a massive uptick in cyberattacks, especially those launched by state-sponsored groups, third-party vendors and insider threats. Organizations are finding it increasingly difficult to anticipate and defend against these attacks.

As the world enters the era of artificial intelligence, the problem becomes more acute. If attackers target a training dataset, they could exfiltrate personally identifiable information, proprietary data or medical records. Fortunately for AI engineers and users, federated AI can ease many of these worries. Its privacy-preserving qualities address the core issue.

What Is Federated AI?

Federated AI, also referred to as a federated model, is the product of the federated learning process. This collaborative, distributed training framework involves multiple clients that independently train local models on their own datasets.

This innovative technique reverses the traditional approach, moving the training to the dataset instead of vice versa. It enables multiple devices or entities to build a joint algorithm without transferring or sharing raw information. Because nothing has to be pooled in one place, parties can collaborate even if they are hundreds of miles apart.

Performance-wise, standard and federated models are often comparable. Both can be machine learning, predictive, deep learning or large language models (LLMs). Their primary difference lies in their training process.

During federated training, a central server aggregates model updates, not the information itself, from each client into a single global model. Therefore, raw data never leaves its original location. This differs substantially from the traditional method, which involves pooling a large amount of data and resources in a centralized location.
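The round-based process described above can be sketched in a few lines. This is a minimal illustration, assuming a simple weighted-averaging scheme (commonly called federated averaging) and a toy one-variable linear model. The function names, client datasets and hyperparameters are hypothetical and do not come from any specific framework.

```python
import random

def local_update(weights, data, lr=0.05, epochs=20):
    """Train a toy model y = w*x + b on one client's private data."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return (w, b)  # only the weights leave the client, never `data`

def federated_average(client_weights, client_sizes):
    """Average client models, weighting each by its local dataset size."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)

def make_client_data(n, rng):
    """Hypothetical private dataset drawn from y = 2x + 1 plus small noise."""
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 2 * x + 1 + rng.gauss(0, 0.01)) for x in xs]

rng = random.Random(0)
clients = [make_client_data(n, rng) for n in (30, 50, 20)]

global_weights = (0.0, 0.0)
for round_num in range(10):
    # Each client starts from the current global model and trains locally
    updates = [local_update(global_weights, data) for data in clients]
    # The server sees only the updated weights and combines them
    global_weights = federated_average(updates, [len(d) for d in clients])

print(global_weights)
```

After a few rounds, the global weights should settle near the slope and intercept used to generate the toy data, even though the server never touches a single data point.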

The Rise of Federated AI

The rise of AI is self-explanatory. For the first time in history, tech companies developed a technology that can mimic human speech, behavior and cognitive processes. Everything from training technique to parameter count is fully customizable, enabling them to deploy the resulting model virtually anywhere.

As a result, industrial, commercial and institutional sectors are broadly adopting AI. Experts estimate the AI market will grow at a 36.2% compound annual growth rate (CAGR) through 2027, reaching a value of $407 billion. Private businesses are quickly incorporating this once-in-a-generation technology into everything from customer service to assembly line quality control.

Federated AI is not as prominent because it is relatively new, and it still accounts for only a small portion of the overall AI market. Even so, it is growing steadily. Market research projects it will go from $138.6 million in 2024 to $297.5 million in 2030, achieving a 14.4% CAGR in the growth period.

Since regular and federated models can be the same kinds of models, why is the latter becoming more popular? The answer is simple: its unique, decentralized approach to training preserves data privacy. In industries like banking and health care, strict privacy laws restrict information sharing and enforce hefty financial penalties for noncompliance.

The Role Privacy Plays

Since federated AI aggregates model updates instead of pooling raw data in a centralized location, it is much easier to prevent unauthorized access and tampering during training. Even if a hacker were to access the global model, it would be difficult to trace the updates back to the clients and exfiltrate their information.

As the AI adoption rate has risen, industries have not seen a correlated rise in cyberattacks targeting models. However, data poisoning and unauthorized access techniques are evolving. Novice hackers can even use prompt engineering — a no-code method that takes advantage of the user interface — to trick LLMs into revealing sensitive or proprietary information.

Simultaneously, model size is increasing exponentially, making it more challenging to determine whether hackers have tampered with training datasets. Businesses are seeking a way to protect themselves and their information, which is why they are turning to federated AI.

In one survey, around 50% of respondents reported they have deployed AI or plan to do so by 2026. They also said their adoption rate would be higher if concerns about security and low-quality data did not hold them back. Federated learning can help address both of these pain points, which could increase adoption of AI tools overall.

Federated AI’s Privacy-Preserving Benefits

The federated training process minimizes data exposure, reduces breach risk and improves privacy while scaling. You can reap these benefits whether you are in the finance, retail, education, manufacturing, energy or government sector.

Minimizes Data Exposure

This decentralized approach is particularly beneficial for industries like health care, finance and insurance because your client devices never share raw data. Whether you have multiple locations or vendors, you can collaboratively train a single central model without sharing sensitive information, thus minimizing the potential for exposure.

Reduces Data Breach Risk

Training happens locally on client devices, and the global model receives model updates, not raw data. This combination of factors makes large information transfers unnecessary, thereby reducing your risk of in-transit breaches.

Even if your database is relatively secure, few defenses can deter the most determined cybercriminals. Removing the need to transfer raw information addresses this pain point directly. While no method is foolproof, it is a strong way to protect your digital assets.

Scalability Preserves Privacy

Scaling machine learning to billions of parameters typically requires a distributed training framework, and federated learning is one such framework. While scalability does not directly improve your privacy, it enables you to continue using this method without switching to a less secure alternative as your needs evolve.

Even if you need a large amount of data, you can still use this method instead of pooling datasets. You can continue relying on local computational resources and workloads distributed across client devices.

Features Worth Considering

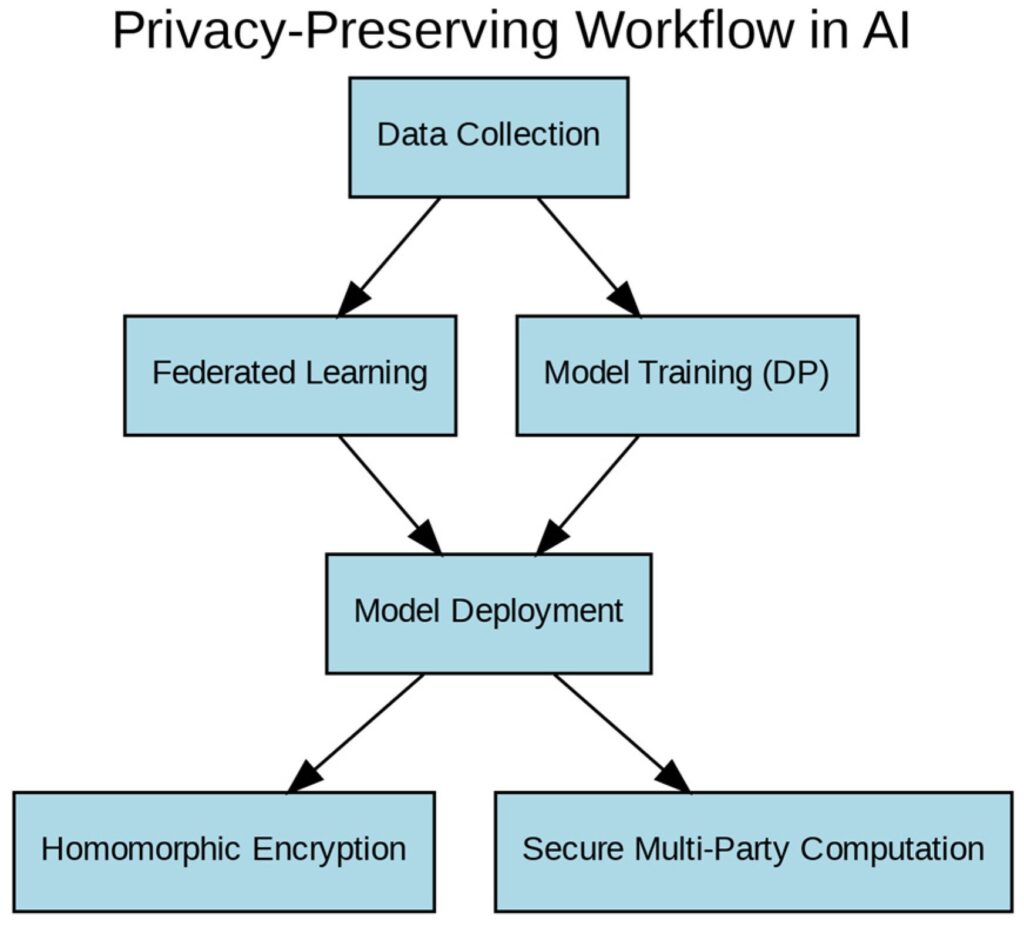

This machine learning technique is made even more secure by select privacy-preserving features, such as secure aggregation, differential privacy and homomorphic encryption. They can address issues like data leakage, which have kept some from adopting this framework.

Secure aggregation is used to compile model updates, which clients send to an aggregator rather than to each other. It involves computing the sum of the updates collected from multiple sources without disclosing any individual contribution. This way, an attacker who compromises the central aggregator sees only the combined result, not what each connected device submitted.
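One well-known way to compute such a sum is pairwise masking: every pair of clients agrees on a random mask, one adds it and the other subtracts it, so the masks cancel once everything is summed. The sketch below is a simplified illustration under several assumptions. The masks are handed out by a hypothetical helper rather than negotiated through key agreement, no client drops out, and the names `MODULUS`, `SCALE` and all functions are made up for this example.

```python
import secrets

MODULUS = 2**32   # all arithmetic is done modulo this value (assumed size)
SCALE = 10**4     # fixed-point scale for encoding floats as integers

def encode(value):
    return int(round(value * SCALE)) % MODULUS

def decode(value):
    # Map back to a signed integer, then undo the fixed-point scaling
    if value >= MODULUS // 2:
        value -= MODULUS
    return value / SCALE

def pairwise_masks(num_clients, length):
    """masks[(i, j)] is the random vector shared by clients i and j (i < j)."""
    return {
        (i, j): [secrets.randbelow(MODULUS) for _ in range(length)]
        for i in range(num_clients)
        for j in range(i + 1, num_clients)
    }

def mask_update(client_id, update, masks, num_clients):
    """Hide one client's update behind the masks it shares with its peers."""
    masked = [encode(v) for v in update]
    for other in range(num_clients):
        if other == client_id:
            continue
        pair = (min(client_id, other), max(client_id, other))
        sign = 1 if client_id < other else -1  # lower id adds, higher subtracts
        masked = [(m + sign * s) % MODULUS for m, s in zip(masked, masks[pair])]
    return masked

def aggregate(masked_updates):
    """Sum the masked vectors; the pairwise masks cancel in the total."""
    summed = [sum(column) % MODULUS for column in zip(*masked_updates)]
    return [decode(v) for v in summed]

updates = [[0.5, -1.25], [0.1, 0.2], [-0.3, 2.0]]
masks = pairwise_masks(len(updates), length=2)
masked = [mask_update(i, u, masks, len(updates)) for i, u in enumerate(updates)]
total = aggregate(masked)
print(total)
```

The aggregator only ever handles the `masked` vectors, each of which looks random on its own, yet the decoded total matches the element-wise sum of the original updates.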

Traditional secure aggregation methods for federated learning face high computation loads and numerous communication rounds. In 2021, researchers developed a modern version that only requires two rounds of communication per training iteration. It aggregates 500 models with more than 300,000 parameters in less than 0.5 seconds.

Differential privacy is a privacy approach that relies on a mathematical framework. It adds a controlled amount of random noise to the output, such as a model update, to preserve the privacy of the individual records behind it. This way, multiple parties can train a model collaboratively with less worry about third-party data breaches.
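A common way to apply this idea to a model update is to cap the update's size and then add Gaussian noise scaled to that cap. The sketch below assumes that clip-then-noise recipe. The function names and the default `max_norm` and `noise_multiplier` values are illustrative choices, and the code does not compute the resulting privacy budget.

```python
import math
import random

def clip_update(update, max_norm):
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in update]

def privatize(update, max_norm=1.0, noise_multiplier=1.1, rng=random):
    """Clip the update, then add Gaussian noise proportional to the clip bound."""
    clipped = clip_update(update, max_norm)
    sigma = noise_multiplier * max_norm  # illustrative noise level, not tuned
    return [v + rng.gauss(0.0, sigma) for v in clipped]

raw_update = [3.0, 4.0]                 # hypothetical client update, norm 5
clipped = clip_update(raw_update, 1.0)  # rescaled to norm 1
noisy = privatize(raw_update, rng=random.Random(7))
print(clipped, noisy)
```

Clipping bounds how much any single client can influence the result, and the noise masks what remains. More noise means stronger privacy but a less accurate update, so the multiplier is a trade-off rather than a fixed constant.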

Homomorphic encryption also helps prevent third-party data breaches. This cryptographic technique allows parties to perform computations on ciphertext without first decrypting it. Although it may be resource-intensive, it is effective. Even if a breach is successful, hackers can do little with encrypted information unless they also obtain the decryption key.
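To make "computing on ciphertext" concrete, the sketch below uses a toy version of the Paillier scheme, which supports addition of encrypted integers. It is an illustration only: the primes are far too small to be secure, the updates are assumed to be small non-negative integers, and the function names are made up for this example rather than taken from a library.

```python
import math
import secrets

def generate_keys(p=1009, q=1013):
    """Toy key generation. Real keys use primes of 1024 bits or more."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # modular inverse, valid when the generator is n + 1
    return n, (lam, mu)   # public key, private key

def encrypt(n, message):
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random value in [1, n-1]
        if math.gcd(r, n) == 1:
            break
    # (n + 1)^message mod n^2 simplifies to 1 + message * n
    return ((1 + message * n) * pow(r, n, n_sq)) % n_sq

def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts."""
    return (c1 * c2) % (n * n)

def decrypt(n, private_key, ciphertext):
    lam, mu = private_key
    x = pow(ciphertext, lam, n * n)
    return ((x - 1) // n * mu) % n

n, private_key = generate_keys()
client_values = [12, 30, 7]  # hypothetical integer-encoded updates
ciphertexts = [encrypt(n, v) for v in client_values]

# The aggregator combines ciphertexts without ever seeing a plaintext
encrypted_total = ciphertexts[0]
for c in ciphertexts[1:]:
    encrypted_total = add_encrypted(n, encrypted_total, c)

decrypted_total = decrypt(n, private_key, encrypted_total)
print(decrypted_total)
```

Only the holder of the private key can read the total, and the party doing the addition learns nothing about the individual values. The large-number exponentiations involved hint at why the technique can be resource-intensive at realistic key sizes.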

The Future of Federated AI

While federated AI is unlikely to surpass general-purpose models in popularity for some time, its steady growth in market value signals a significant paradigm shift. Organizations that rushed to adopt the latest AI technology are realizing the risks of breaches and data poisoning can outweigh the benefits of implementation.

No solution is a silver bullet. However, academics, AI engineers and data science professionals are already improving upon this machine learning framework’s features, as the research team that developed a novel secure aggregation method has shown. With these improvements, federated learning could become one of the premier methods for model training.