Artificial Intelligence (AI) has advanced rapidly in recent years, and one notable development is Ollama, an open-source tool for running large language models (LLMs) on your own hardware. "Ollama models" are the open-weight models you download and run through it. Ollama is designed to simplify how developers and organizations run and deploy LLMs locally and securely.

Unlike traditional cloud-based systems that require heavy infrastructure, Ollama offers a lightweight, developer-friendly solution that empowers teams to experiment with AI models directly on their machines.

With the increasing demand for data privacy, efficiency, and flexibility, Ollama models have become a popular choice for startups, researchers, and businesses.

Why Ollama is Transforming AI Development

The AI ecosystem is often dominated by large-scale cloud providers. While cloud AI platforms are powerful, they come with high costs, security concerns, and limited control for developers.

Ollama models change this by enabling local deployment of LLMs. Instead of relying on heavy cloud infrastructure, developers can download, customize, and experiment with models right on their laptops or servers.

This approach changes AI development in three major ways:

- Cost-Effective: No expensive cloud compute required.

- Private & Secure: Data stays on your machine.

- Developer-Friendly: Easy setup and integration with workflows.

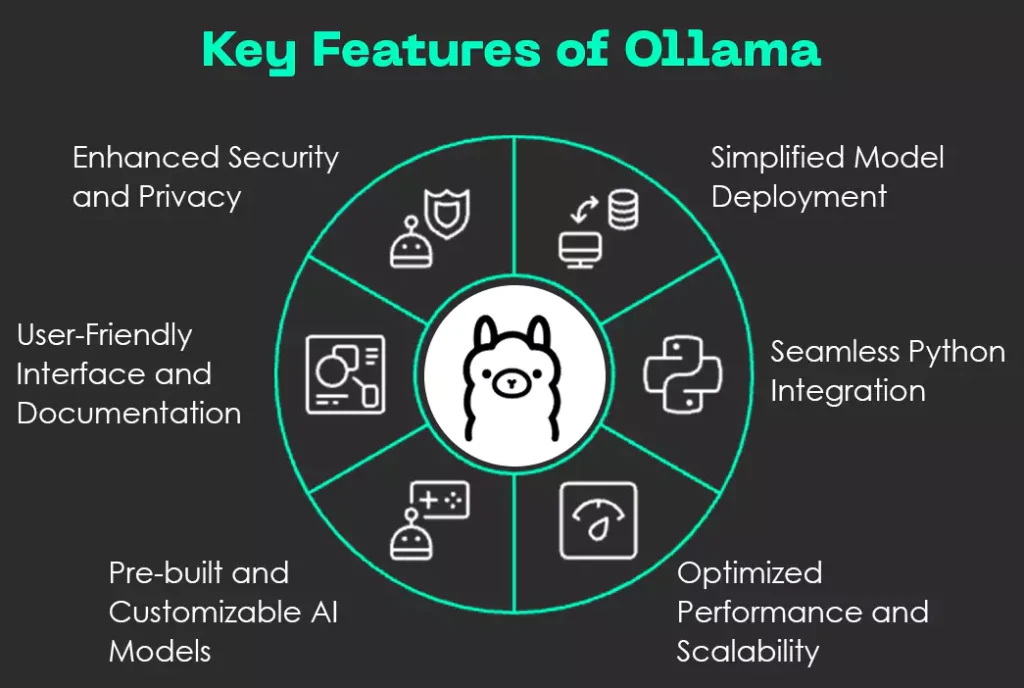

Core Features of Ollama Models

Here are some standout features that make Ollama models unique:

- Local Deployment – Run models directly on your system without needing cloud GPUs.

- Pre-Trained Models – Ollama supports a wide library of pre-trained LLMs that can be downloaded and used with a single command.

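- Customization – Developers can tailor models for specialized tasks with a Modelfile (system prompt, default parameters) or by importing weights and LoRA adapters fine-tuned with other tools; Ollama itself runs models rather than training them.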

- Lightweight and Flexible – Designed for developers who want agility and control.

- Cross-Platform Compatibility – Works seamlessly on macOS, Linux, and Windows environments.

- Model Variety – Supports many open-weight models, such as Llama, Mistral, and Gemma.

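- Self-Contained Model Packages – Each model is bundled with its weights, prompt template, and default parameters, so there is no Python dependency stack to manage. Ollama itself can also run inside a Docker container for extra isolation.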

- Rapid Switching Between Models – Developers can switch between different models without heavy reconfiguration.

- Low-Latency Inference – Local inference avoids network round trips to a remote API; actual response speed depends on your CPU, GPU, and model size.

- Custom Prompts and Templates – Users can design prompt structures tailored to specific business workflows.

- Resource Efficiency – Quantized model variants (for example, 4-bit) cut memory use, so smaller models can run on modest hardware.

- Community-Driven Model Library – Access to a constantly growing repository of open-source and fine-tuned models.

- Offline Model Management – Download models once and use them without internet dependency.

- Easy Updates and Versioning – Re-run `ollama pull` to fetch the latest version of a model, or pin a specific tag (such as a particular size or quantization) to keep a known version.

- Integration with Dev Tools – A local HTTP API plus official Python and JavaScript libraries make it easy to use Ollama from editors such as VS Code, from Jupyter Notebooks, and from automation frameworks.

- Embeddings Support – Generate embeddings locally for search, recommendation systems, and semantic analysis.

- Fine-Grained Control – Adjust runtime options such as temperature, top_p, and context length (num_ctx) to balance speed, memory use, and output quality.

- Lightweight Installation – A one-line install script on Linux and standard installers on macOS and Windows, with no complex configuration.

- Ecosystem Integrations – A large community of third-party tools, chat interfaces, and libraries builds on Ollama's API to extend what you can do with local models.
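
To make the Embeddings Support feature concrete, here is a minimal Python sketch of local semantic search. It assumes a running Ollama server at its default address (`http://localhost:11434`), the `/api/embed` endpoint of recent Ollama versions, and an embedding model such as `nomic-embed-text` already pulled. The helper names (`embed`, `cosine_similarity`, `rank`) are illustrative, not part of Ollama.

```python
import json
import math
import urllib.error
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default address of a local Ollama server (assumed)


def embed(texts, model="nomic-embed-text"):
    """Ask the local Ollama server for one embedding vector per input text."""
    body = json.dumps({"model": model, "input": texts}).encode("utf-8")
    req = urllib.request.Request(
        f"{OLLAMA_URL}/api/embed",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.load(resp)["embeddings"]


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank(query_vec, doc_vecs):
    """Return document indices ordered from most to least similar to the query."""
    scores = [cosine_similarity(query_vec, d) for d in doc_vecs]
    return sorted(range(len(doc_vecs)), key=lambda i: scores[i], reverse=True)


if __name__ == "__main__":
    docs = [
        "Reset your password from the account settings page.",
        "Our office is closed on public holidays.",
    ]
    query = "How do I change my password?"
    try:
        vectors = embed([query] + docs)  # first vector belongs to the query
    except urllib.error.URLError as exc:
        print("Could not reach Ollama (is it running?):", exc)
    else:
        best = rank(vectors[0], vectors[1:])[0]
        print("Best match:", docs[best])
```

Cosine similarity compares the direction of embedding vectors rather than their length, which is why it is a common choice for semantic search; for large document collections, a vector database would replace this linear scan.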

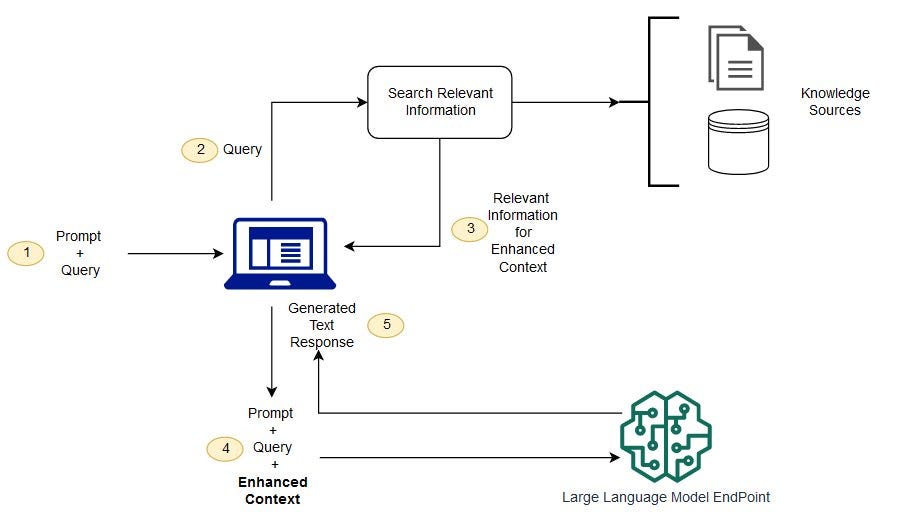

How Ollama Models Work

Understanding how Ollama models work makes it easier to use them effectively.

- Installation: Developers install Ollama like any other package, making setup extremely simple.

- Model Loading: Models are downloaded once (for example, with `ollama pull`) and run locally, usually in quantized formats that reduce memory use.

- Command-Line & API Support: Ollama provides both a command-line interface and a local REST API (served on port 11434 by default), making it easy to connect with other applications.

- Customization: Developers can adapt Ollama models for specific industries (healthcare, finance, education, etc.) with custom system prompts and parameters, or by importing models fine-tuned elsewhere.
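
The CLI and API paths described above can be sketched briefly. From a terminal, `ollama pull llama3.2` downloads a model and `ollama run llama3.2` starts an interactive session. For programmatic access, the sketch below calls the local REST API using only Python's standard library; it assumes the server is running on the default port and that `llama3.2` (used here only as an example) has been pulled. The `options` field shows the kind of runtime tuning listed under Fine-Grained Control, and the helper names are illustrative.

```python
import json
import urllib.error
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default address of `ollama serve` (assumed)


def build_generate_payload(model, prompt, temperature=0.7, num_ctx=4096):
    """Build the JSON body for Ollama's /api/generate endpoint.

    stream=False requests one complete JSON reply instead of a token stream;
    "options" tunes sampling (temperature) and context length (num_ctx).
    """
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,
        "options": {"temperature": temperature, "num_ctx": num_ctx},
    }


def generate(model, prompt, **tuning):
    """Send a prompt to a local model and return the generated text."""
    body = json.dumps(build_generate_payload(model, prompt, **tuning)).encode("utf-8")
    req = urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.load(resp)["response"]


if __name__ == "__main__":
    try:
        print(generate("llama3.2", "Explain what an embedding is in one sentence.",
                       temperature=0.2))
    except urllib.error.URLError as exc:
        print("Could not reach Ollama (is `ollama serve` running?):", exc)
```

Setting `stream` to `false` keeps the example short; interactive apps usually leave streaming on and read the newline-delimited JSON chunks as they arrive.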

Advantages of Using Ollama in AI Projects

Using Ollama models offers numerous benefits:

- Enhanced Privacy: Data never leaves your machine.

- Lower Costs: Avoid expensive cloud AI usage bills.

- Faster Prototyping: Quickly experiment with models.

- Customizability: Tailor models to industry-specific needs.

- Scalability: From local testing to larger enterprise deployments.

Ollama Models vs Traditional AI Models

| Feature | Ollama Models | Traditional AI Models (Cloud-Based) |
| --- | --- | --- |
| Deployment | Local (laptop/server) | Cloud (AWS, GCP, Azure) |
| Data Privacy | High: data stays local | Moderate: data is sent to provider servers |
| Cost | Low (after hardware costs) | High (ongoing usage fees) |
| Flexibility | Very flexible | Limited |
| Offline Use | Yes | No |

Popular Use Cases of Ollama Models

Some real-world scenarios where Ollama models shine include:

- Chatbots & Virtual Assistants – Build intelligent conversational bots locally.

- Healthcare Research – Process sensitive patient data on local hardware, reducing privacy risk because it never has to be sent to a third-party service.

- Financial Analysis – Analyze market and transaction data securely.

- Education – Develop AI-powered tutoring systems that work offline.

- Content Creation – Use Ollama models for drafting blog posts, summarization, and translation.
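
For the chatbot use case, the main piece of application logic is conversation memory: the model keeps no state between requests, so the app resends recent messages each time. The minimal sketch below assumes Ollama's `/api/chat` endpoint on the default local port and an example model name; the `ChatSession` class and its `max_turns` limit are hypothetical helpers, not an Ollama API.

```python
import json
import urllib.error
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default address of a local Ollama server (assumed)


class ChatSession:
    """Hypothetical helper that keeps a bounded history for Ollama's /api/chat endpoint."""

    def __init__(self, model, system_prompt, max_turns=10):
        self.model = model
        self.system = {"role": "system", "content": system_prompt}
        self.max_turns = max_turns  # number of user/assistant pairs to keep
        self.history = []

    def add(self, role, content):
        """Append a message, dropping the oldest user/assistant pairs when over the limit."""
        self.history.append({"role": role, "content": content})
        while len(self.history) > 2 * self.max_turns:
            del self.history[:2]

    def payload(self):
        """JSON body for /api/chat: the system prompt first, then the recent history."""
        return {
            "model": self.model,
            "messages": [self.system] + self.history,
            "stream": False,
        }

    def ask(self, user_text):
        """Send a user message, record the assistant's reply, and return its text."""
        self.add("user", user_text)
        req = urllib.request.Request(
            f"{OLLAMA_URL}/api/chat",
            data=json.dumps(self.payload()).encode("utf-8"),
            headers={"Content-Type": "application/json"},
        )
        with urllib.request.urlopen(req, timeout=300) as resp:
            reply = json.load(resp)["message"]["content"]
        self.add("assistant", reply)
        return reply


if __name__ == "__main__":
    bot = ChatSession("llama3.2", "You are a friendly support assistant. Answer briefly.")
    try:
        print(bot.ask("What can you help me with?"))
    except urllib.error.URLError as exc:
        print("Could not reach Ollama (is it running?):", exc)
```

Trimming whole user/assistant pairs keeps the history starting with a user message, and the cap keeps prompts within the model's context window.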

Real-World Applications and Examples

- Startup Innovation: A small AI startup in Europe used Ollama models to build a customer support chatbot without paying large cloud bills.

- Medical Research: Hospitals are adopting Ollama models to analyze patient records without sending them to external servers.

- Personal Productivity: Freelancers use Ollama models for note-taking, summarization, and brainstorming offline.

Integrating Ollama Models into Your Workflow

Here’s how you can integrate Ollama models:

- Step 1: Install Ollama on your system.

- Step 2: Choose a model from the Ollama library (such as Llama or Mistral) and download it with `ollama pull`.

- Step 3: Run it interactively with `ollama run` or call it from code through the REST API.

- Step 4: Customize it for your needs with a Modelfile, or import a model fine-tuned with other tools.

- Step 5: Integrate with apps (Slack bots, Notion tools, automation scripts, etc.).
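
Customization in Step 4 is usually done with a Modelfile rather than by training inside Ollama. A Modelfile names a base model (`FROM`), sets default parameters (`PARAMETER`), adds a system prompt (`SYSTEM`), and can attach a LoRA adapter fine-tuned with other tools (`ADAPTER`). The Python function below is a hypothetical convenience helper that only produces that text; the base model, prompt, and parameter values are illustrative.

```python
def build_modelfile(base_model, system_prompt, parameters=None, adapter=None):
    """Return the text of an Ollama Modelfile (hypothetical helper).

    FROM names the base model, each PARAMETER line sets a default runtime option,
    ADAPTER (optional) points at LoRA weights fine-tuned with other tools, and
    SYSTEM sets the system prompt baked into the custom model.
    """
    lines = [f"FROM {base_model}"]
    for name, value in (parameters or {}).items():
        lines.append(f"PARAMETER {name} {value}")
    if adapter:
        lines.append(f"ADAPTER {adapter}")
    lines.append(f'SYSTEM """{system_prompt}"""')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_modelfile(
        "llama3.2",
        "You summarize customer support tickets in three short bullet points.",
        parameters={"temperature": 0.2, "num_ctx": 8192},
    ), end="")
```

Save the printed text as a file named `Modelfile`, then build and run the custom model with `ollama create ticket-summarizer -f Modelfile` and `ollama run ticket-summarizer` (`ticket-summarizer` is an arbitrary example name).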

Challenges and Limitations of Ollama Models

While powerful, Ollama models also come with challenges:

- Hardware Requirements: Running LLMs locally needs enough RAM or GPU memory; larger models effectively require a capable GPU.

- Model Limitations: Open models small enough to run locally generally trail the largest cloud-hosted models in capability.

- Scaling: Large-scale enterprise workloads may still need cloud infrastructure.
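
A rough rule of thumb helps gauge the hardware requirement: model weights need about (number of parameters × bits per weight ÷ 8) bytes. The sketch below applies it; it ignores the KV cache (which grows with context length) and runtime overhead, so treat the result as a lower bound. The function name is illustrative.

```python
def estimate_weight_memory_gb(num_params, bits_per_weight):
    """Rough memory needed just to hold the weights, in gigabytes (10**9 bytes).

    Excludes the KV cache, which grows with context length, and runtime overhead,
    so the real footprint is higher.
    """
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    for bits in (16, 8, 4):
        gb = estimate_weight_memory_gb(7e9, bits)
        print(f"7B parameters at {bits}-bit: about {gb:.1f} GB for the weights")
```

For a 7-billion-parameter model this gives about 14 GB at 16-bit precision versus about 3.5 GB at 4-bit, which is why quantized models are what make local inference practical on ordinary laptops.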

The Future of Ollama Models in AI

The future of Ollama models looks bright as organizations prioritize data privacy, cost-efficiency, and customization. With the rise of edge AI and on-device intelligence, Ollama could become a standard tool in AI development.

Likely areas of expansion for Ollama models include cybersecurity, IoT, and personalized AI companions.

Conclusion

In today’s AI-driven world, Ollama models give developers, researchers, and businesses a practical way to deploy large language models locally, securely, and cost-effectively.

By combining flexibility, privacy, and accessibility, Ollama has paved the way for next-generation AI development that doesn’t depend on costly cloud services.

If you’re a data scientist, developer, or AI enthusiast, now is a good time to explore Ollama models and put them to work in real-world projects.