Why Privacy in AI Matters

AI is everywhere, from the smartphone you touch every day to the cloud systems you will never physically see. Cloud platforms handle massive amounts of data, from personal information to financial records and business operations, so data needs protection not only at rest and in transit but also in use, for example during AI training and inference. Regulations such as GDPR, HIPAA, and CCPA all demand strict protection practices. Safeguarding data is a legal as well as an ethical obligation.

AI training and inference rely on large datasets, and these datasets often contain sensitive information. Without methods to protect that data, organizations face a higher risk of leakage, misuse, and regulatory non-compliance.

Finding the sweet spot between data utility and privacy remains a major barrier to deploying AI in sectors such as healthcare, finance, and defense.

This is where privacy-preserving AI techniques come into the picture. These methods allow AI models to train and perform inference without revealing sensitive information. In cloud-based AI systems, such methods help keep the systems both effective and compliant.

Let’s take a look at key privacy-preserving AI techniques that can be applied to AI systems that handle sensitive data, including but not limited to cloud-based AI systems.

Comparing Privacy-Preserving AI Techniques

Privacy-preserving AI methods come in many shapes and forms, each with its own strengths and quirks. Some work really well for specific scenarios, while others have trade-offs that might make you pause and think. I’ve laid out seven important techniques to guide anyone choosing the approach that fits their AI project.

| Technique | How It Works | Best Use Case | Challenges | Real-World Example |
| --- | --- | --- | --- | --- |
| Differential Privacy (DP) | Adds calibrated statistical noise to datasets or model updates so that individual data points stay private. DP offers a measurable privacy guarantee while aiming to keep the data useful for analysis [1]. | Public data sharing, healthcare analytics, and recommendation systems, where you want insights without exposing personal details. | The privacy-utility trade-off: add too much noise and the AI’s accuracy takes a hit. | Apple uses this approach in iOS to gather usage data for features such as QuickType and emoji suggestions, getting the insights it needs without learning exactly what a person types [2]. |
| Homomorphic Encryption (HE) | Encrypts the data first, then lets AI models compute directly on the encrypted data without ever seeing the raw information [3]. | Cloud-based AI tasks, such as analyzing encrypted medical records to predict diagnoses without revealing patient information. | Computationally heavy. Running it on complex deep learning models is challenging with limited resources or a small budget. | Microsoft’s SEAL library lets developers perform computations on encrypted data and has been used for secure data analysis where privacy is important [3]. |
| Secure Multi-Party Computation (MPC) | Allows multiple parties, such as companies or organizations, to compute a result together without sharing their private data. The idea goes back to theoretical work by Goldreich, Micali, and Wigderson [4]; practical versions have since emerged [5], [6]. | Fraud detection and collaborative medical research, where different groups pool insights without spilling secrets. | Requires multiple participating parties, and coordinating them adds complexity. It is also not light on computing resources. | ABN AMRO and Rabobank tested MPC to spot fraud patterns across institutions, using an encrypted approach similar to a secure version of PageRank to identify risks without sharing customer details [5], [6]. |
| Federated Learning (FL) + Secure Aggregation | Trains AI models on decentralized devices, such as phones or IoT gadgets, without transmitting the raw data to a central server [7]. Adding secure aggregation or Differential Privacy helps prevent leaks through model updates. | Mobile apps or IoT setups where data must stay local, such as improving features on your smartphone. | Model updates can still leak private information, although techniques such as DP help. Coordinating many devices is also difficult. | Google’s Gboard uses this method to improve predictive text. Training happens on your phone, so your typing habits do not leave your device [8]. |
| Trusted Execution Environments (TEE) | Creates secure areas within hardware in which AI tasks can run without any access from outsiders [9]. These areas act like a vault for your computations. | Confidential cloud computing, where sensitive data must be processed securely. | You are tied to specific hardware and must trust the hardware provider. If that is a concern, TEEs may not fit your needs. | Microsoft Azure uses Intel SGX to offer confidential computing environments that keep data safe during processing [9], [10]. |
| Zero-Knowledge Proofs (ZKP) | Lets you prove that something is true without revealing the underlying details [11]. | Blockchain-based AI or secure identity management, where verification must happen without exposing private information. | The math behind ZKPs is complex, and the computational overhead slows operations. For fast, large-scale tasks, ZKPs are often impractical. | Zcash uses zk-SNARKs to verify private cryptocurrency transactions without showing who sends what to whom [11], [12]. |
| Synthetic Data | Uses generative AI, such as GANs or VAEs, to produce artificial data that resembles real data [13]. | Privacy-sensitive fields such as healthcare or finance, where synthetic data can be used for testing or analysis with a reduced risk of leaks. | Synthetic data must be realistic, avoid bias, and still protect privacy. If its quality is poor, the AI is likely to learn the wrong patterns. | Researchers have used PATE-GAN to generate synthetic healthcare data, which lets them study trends while limiting the risk to patient privacy [13], [14]. |

The comparison above should give you a clearer idea of which techniques fit your needs. Each method has its strengths and trade-offs, and the correct choice depends on your requirements.
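
To make one row of the comparison concrete, here is a minimal Python sketch of additive secret sharing, a common building block in MPC protocols. It is an illustration under stated assumptions, not the protocol used in the bank pilot cited above: the modulus, the function names (`share`, `reconstruct`, `secure_sum`), and the example counts are all hypothetical, and a single process simulates every party.

```python
import secrets

# Assumption: all arithmetic is done modulo a public prime chosen for this sketch.
MODULUS = 2**61 - 1


def share(secret: int, n_parties: int) -> list[int]:
    """Split `secret` into n additive shares that sum to it modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    last = (secret - sum(shares)) % MODULUS
    return shares + [last]


def reconstruct(shares: list[int]) -> int:
    """Recombine all shares; any proper subset on its own looks uniformly random."""
    return sum(shares) % MODULUS


def secure_sum(private_inputs: list[int]) -> int:
    """Each party shares its input; parties add the shares they hold locally."""
    n = len(private_inputs)
    # distributed[i][j] is the share of party i's input that is sent to party j.
    distributed = [share(x, n) for x in private_inputs]
    # Party j only ever sees column j, never another party's raw input.
    partial_sums = [sum(row[j] for row in distributed) % MODULUS for j in range(n)]
    # Only the partial sums are published and combined into the final total.
    return reconstruct(partial_sums)


# Hypothetical example: three banks each hold a private count of flagged transactions.
flagged_counts = [12, 7, 30]
total = secure_sum(flagged_counts)
print(total)
```

Each party only handles shares that are individually random, yet the recombined total is exact. Real deployments add authenticated channels and protection against parties that deviate from the protocol, which this sketch leaves out.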

Use Cases: Picking the Right Tool for the Job

Choosing the right privacy-preserving technique comes down to the following three factors:

I. Data Sensitivity,

II. Data Distribution, and

III. Computational Power.

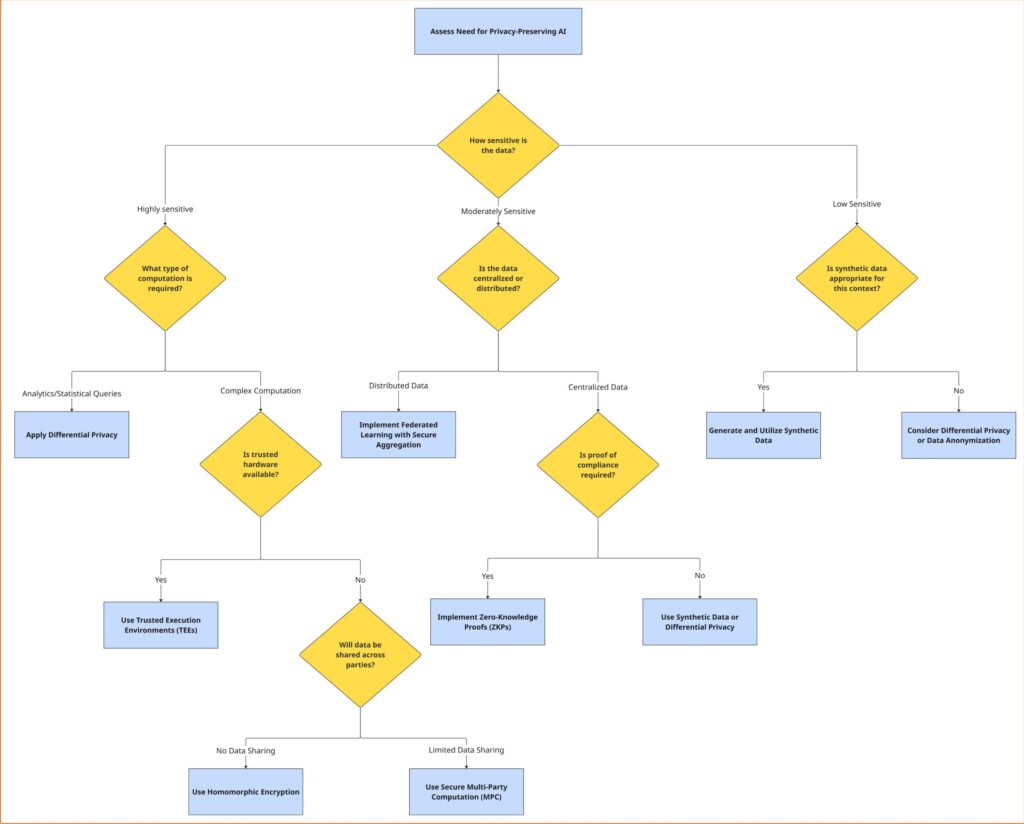

I’ve put together a flowchart to help practitioners move through the decision-making process and match real-world needs to the right techniques. After all, a bank that processes customer transactions needs different protections than a research lab that analyzes anonymized survey data.

Mixing and Matching for Better Security

Sometimes, a single technique isn’t enough. We can try to find a sweet spot between privacy, security, and performance by combining approaches.

For example:

I. Combining Federated Learning with Differential Privacy allows AI models to train across decentralized devices, such as smartphones or hospital servers, while protecting the privacy of the data [1], [7].

II. Using Homomorphic Encryption with Secure Multi-Party Computation (MPC) enables encrypted data to be processed securely across multiple parties that don’t need to trust each other with the data [3], [4].

III. Combining Trusted Execution Environments (TEEs) with Zero-Knowledge Proofs (ZKPs) lets AI computations (training or inference) run inside secure enclaves while the results are verified without exposing sensitive details [9], [11].

These hybrid strategies can be tailored to specific needs, but they’re not one-size-fits-all.
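
As a rough illustration of the first combination, the sketch below simulates federated averaging with clipped client updates and Gaussian noise added on the server. Everything named here is an assumption made for illustration: the toy "model" is just a running mean of 2-D points, the client data is made up, and `noise_std` is picked by hand rather than calibrated to a formal privacy budget.

```python
import math
import random


def local_update(model: list[float], local_data: list[list[float]]) -> list[float]:
    """Runs on the client: raw data is read here and only an update is returned."""
    dim = len(model)
    local_mean = [sum(row[i] for row in local_data) / len(local_data) for i in range(dim)]
    return [local_mean[i] - model[i] for i in range(dim)]


def clip(update: list[float], max_norm: float) -> list[float]:
    """Scale an update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    factor = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [v * factor for v in update]


def private_federated_average(client_updates, max_norm, noise_std, rng):
    """Sum clipped updates, add Gaussian noise to the sum, then average."""
    clipped = [clip(u, max_norm) for u in client_updates]
    n = len(clipped)
    dim = len(clipped[0])
    noisy_sum = [
        sum(u[i] for u in clipped) + rng.gauss(0.0, noise_std) for i in range(dim)
    ]
    return [s / n for s in noisy_sum]


rng = random.Random(7)  # fixed seed only so the sketch is repeatable
model = [0.0, 0.0]
# Hypothetical private datasets held by three clients (phones, hospitals, ...).
clients = [
    [[1.0, 2.0], [1.2, 1.8]],
    [[0.8, 2.2]],
    [[1.1, 2.1], [0.9, 1.9], [1.0, 2.0]],
]
for _ in range(5):
    updates = [local_update(model, data) for data in clients]
    step = private_federated_average(updates, max_norm=5.0, noise_std=0.05, rng=rng)
    model = [m + s for m, s in zip(model, step)]
print(model)
```

Clipping bounds how much any single client can influence the result, and the noise then masks what remains of that influence. Choosing the clipping norm and noise level for a specific privacy target is the hard part in practice and is deliberately out of scope here.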

Challenges and Where Things Are Headed

Privacy-preserving AI has its own challenges. A major one is performance. Techniques such as Homomorphic Encryption and Secure Multi-Party Computation are computationally expensive, which slows processing and increases costs, especially with very large datasets or complex models. They may work for smaller AI projects, but applying them to large models with billions of parameters quickly causes scalability problems. Even small delays matter in time-sensitive settings such as real-time fraud detection. Researchers are also exploring quantum-resistant techniques to prepare for the day when quantum computers may crack today’s encryption; privacy-preserving AI will need to be ready before that happens [15].
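
To see where the cost of computing on encrypted data comes from, here is a toy version of the Paillier cryptosystem, an additively homomorphic scheme. It is a sketch for intuition only and a swapped-in technique: it is not how Microsoft SEAL [3] works internally, the fixed primes are far too small to be secure, and the function names are my own.

```python
import math
import secrets

# Toy parameters: two well-known Mersenne primes. Real keys use much larger
# random primes, which is a large part of why the operations are expensive.
P = 2**31 - 1
Q = 2**61 - 1
N = P * Q
N_SQ = N * N
LAMBDA = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(P - 1, Q - 1)
MU = pow(LAMBDA, -1, N)  # valid because the generator is fixed to g = N + 1


def encrypt(m: int) -> int:
    """Encrypt 0 <= m < N as (N + 1)^m * r^N mod N^2 with fresh randomness r."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(N + 1, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(c: int) -> int:
    """Recover the plaintext using the private values LAMBDA and MU."""
    x = pow(c, LAMBDA, N_SQ)
    return ((x - 1) // N * MU) % N


def add_encrypted(c1: int, c2: int) -> int:
    """Multiplying two ciphertexts adds the underlying plaintexts."""
    return (c1 * c2) % N_SQ


def scale_encrypted(c: int, k: int) -> int:
    """Raising a ciphertext to the power k multiplies the plaintext by k."""
    return pow(c, k, N_SQ)


# A server computes 3 * a + b without ever seeing a or b in the clear.
enc_a, enc_b = encrypt(20), encrypt(22)
enc_result = add_encrypted(scale_encrypted(enc_a, 3), enc_b)
print(decrypt(enc_result))
```

Every addition on encrypted values becomes a multiplication modulo N², and every encryption or scalar multiplication becomes a modular exponentiation. With realistically sized keys and the millions of operations a neural network needs, that overhead adds up quickly.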

The privacy-utility trade-off is another issue. For example, Differential Privacy (DP) adds statistical noise to protect individual data points. This improves privacy, but it can also reduce the accuracy of the results. Finding a good balance between privacy and accuracy is difficult, and researchers are still working out the best ways to optimize it [1].
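
The trade-off can be made concrete with the Laplace mechanism, a standard way to release a numeric query result with ε-differential privacy. The sketch below is a simulation under assumptions of my own: a counting query with sensitivity 1, a made-up true count of 1000, and hypothetical function names. It uses Python’s `random` module and floating-point noise, which is fine for illustration but not for production-grade DP.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """The difference of two i.i.d. exponential draws is Laplace(0, scale)."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with Laplace noise; a counting query has sensitivity 1."""
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


def mean_abs_error(true_count: int, epsilon: float, trials: int, rng: random.Random) -> float:
    """Average distance between the noisy answer and the true answer."""
    errors = [
        abs(private_count(true_count, epsilon, rng) - true_count)
        for _ in range(trials)
    ]
    return sum(errors) / trials


rng = random.Random(42)  # fixed seed only so the sketch is repeatable
for epsilon in (0.1, 1.0, 10.0):
    print(epsilon, round(mean_abs_error(1000, epsilon, 5000, rng), 2))
```

The expected absolute error of Laplace noise equals its scale, here 1/ε, so the printed error should shrink roughly tenfold each time ε grows tenfold. Smaller ε means stronger privacy and noisier answers.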

New lightweight cryptographic algorithms and more efficient implementations of techniques such as Homomorphic Encryption and Differential Privacy are making these methods faster and less resource-intensive. As a result, AI systems can increasingly train and run on sensitive data efficiently without compromising security, which makes these methods more practical for small companies and resource-constrained environments.

AI governance is also gaining a lot of attention. As these methods become more complex, regulators and organizations are pushing for standard rules for ethical and legal use. A global framework in which industries and countries agree on how to handle AI privacy could simplify compliance and build trust with users, but reaching that agreement is a hard task.

Wrapping Up

As artificial intelligence grows within cloud-based systems, privacy-preserving methods for protecting personal information are no longer optional; they are vital. Techniques such as training AI across many devices or locations without moving all the data into one place [7], protecting individual contributions [1], and proving results without revealing details [11] give companies ways to deal with tricky risks and regulations. Often, combining several methods offers the best chance of achieving good security at acceptable speed [3], [5], [9].

Designing AI with privacy as a core feature simplifies compliance with rules and regulations, and it also helps people feel comfortable sharing information. I know from experience that trust is really important. A while back, a friend quit a fitness app because they felt their personal health information could be compromised. Businesses that protect people’s information well will probably be trusted more, not only for following the law but also for using artificial intelligence responsibly. The future presents challenges, yet with continued research, privacy-preserving AI methods could become essential for creating and deploying technology.

References

[1] C. Dwork, “Differential Privacy,” in Automata, Languages and Programming, ICALP 2006, pp. 1–12, 2006. doi:10.1007/11787006_1. Available: https://doi.org/10.1007/11787006_1

[2] Apple Machine Learning Journal, “Learning with Privacy at Scale,” 2017. Available: https://machinelearning.apple.com/2017/12/06/learning-with-privacy-at-scale.html

[3] Microsoft SEAL: Homomorphic Encryption Library. Microsoft Research. GitHub. Available: https://github.com/microsoft/SEAL

[4] O. Goldreich, S. Micali, and A. Wigderson, “How to Play Any Mental Game,” in STOC 1987, pp. 218–229, 1987. doi:10.1145/28395.28420. Available: https://doi.org/10.1145/28395.28420

[5] TNO, ABN AMRO, and Rabobank, “Secure Collaborative Money Laundering Detection using Multi-Party Computation,” 2023. [Online]. Available: https://appl.ai/projects/money-laundering-detection

[6] Algemetric, “Secure Multi-Party Computation in BFSI: Unlocking Collaborative Analytics for Risk & Compliance,” White Paper, May 2025. [Online]. Available: https://www.algemetric.com/wp-content/uploads/2025/05/MPC-White-Paper.pdf

[7] J. Konečný et al., “Federated Learning: Strategies for Improving Communication Efficiency,” arXiv preprint arXiv:1610.05492, 2016. Available: https://arxiv.org/abs/1610.05492

[8] Google Research, “Federated Learning: Collaborative Machine Learning without Centralized Training Data,” 2017. Available: https://research.google/pubs/pub46480/

[9] Intel Corporation, “Intel® Software Guard Extensions (Intel® SGX),” 2024. Available: https://www.intel.com/content/www/us/en/architecture-and-technology/software-guard-extensions.html

[10] Microsoft Azure Confidential Computing. Available: https://learn.microsoft.com/en-us/azure/confidential-computing/

[11] E. Ben-Sasson, A. Chiesa, D. Genkin, E. Tromer, and M. Virza, “SNARKs for C: Verifying Program Executions Succinctly,” Advances in Cryptology – CRYPTO 2013, LNCS 8043, pp. 90–108, 2013. doi:10.1007/978-3-642-40084-1_6.

[12] E. Ben-Sasson, A. Chiesa, C. Garman, M. Green, I. Miers, E. Tromer, and M. Virza, “Zerocash: Decentralized Anonymous Payments from Bitcoin,” Proceedings of the IEEE Symposium on Security and Privacy (S&P), pp. 459–474, 2014. doi:10.1109/SP.2014.36. Available: https://doi.org/10.1109/SP.2014.36

[13] X. Guo and Y. Chen, “Generative AI for Synthetic Data Generation: Methods, Challenges and the Future,” arXiv:2303.08945, 2023. Available: https://arxiv.org/abs/2303.08945

[14] J. Jordon, J. Yoon, and M. van der Schaar, “PATE-GAN: Generating Synthetic Data with Differential Privacy Guarantees,” International Conference on Learning Representations (ICLR), 2019 (OpenReview preprint posted Dec 2018). Available: https://openreview.net/forum?id=S1zk9iRqF7

[15] H. Mittal and B. Jain, “Post-Quantum Cryptography: A Comprehensive Review of Past Technologies and Current Advances,” in Proceedings of the First Global Conference on AI Research and Emerging Developments (G-CARED), pp. 360–366, May 2025. doi:10.63169/gcared2025.p52.

Authored by Abinandaraj Rajendran

Bio: Abinandaraj Rajendran, Senior Software Engineer specializing in AI/ML, focused on operationalizing cutting-edge innovations into scalable, production-ready solutions.