In statistical data analysis, assumptions shape how we test hypotheses. Parametric tests such as the t-test assume that the data are approximately normally distributed, but the real world doesn’t always cooperate. In many cases, data is skewed, ordinal, or distorted by outliers. That is where the Non Parametric T Test comes in.

This powerful family of statistical tools provides flexibility when assumptions about data normality or variance equality are violated. Understanding this method can help analysts, researchers, and data scientists derive accurate conclusions even when traditional tests fail.

What is a Non Parametric T Test?

A Non Parametric T Test is a statistical method used to compare two or more groups when the underlying data does not meet the assumptions of normality or equal variances required by parametric t-tests. Unlike parametric tests, these methods do not estimate distribution parameters such as the mean or standard deviation; they work with the ranks of the observations and are usually interpreted in terms of medians.

In essence, a non parametric test is a distribution-free test: it does not assume that the data follow a particular distribution such as the Gaussian (normal) curve. It still assumes that the observations within each group are independent of one another.

Understanding the Need for Non Parametric Tests

Data in the real world often deviates from ideal conditions. Here are common scenarios where non parametric methods become essential:

- The data is ordinal (rank-based) instead of interval or ratio scale.

- The sample size is small, making it difficult to verify normal distribution.

- Outliers are present, heavily influencing mean values.

- The distribution is skewed or non-symmetric.

By applying non parametric techniques, analysts maintain the integrity of statistical conclusions without making unrealistic assumptions about data behavior.

Key Differences Between Parametric and Non Parametric Tests

| Feature | Parametric Tests | Non Parametric Tests |
| --- | --- | --- |
| Data Type | Interval/Ratio | Ordinal/Ranked |
| Distribution | Requires Normal Distribution | No Distribution Requirement |
| Central Tendency | Mean | Median |
| Robustness | Sensitive to Outliers | Robust to Outliers |
| Examples | T-test, ANOVA | Mann–Whitney, Kruskal–Wallis, Wilcoxon |

This comparison highlights how non parametric t test alternatives allow broader applications, especially for irregular datasets.

When to Use a Non Parametric T Test

Non parametric tests are best suited for:

- Small sample sizes (n < 30 is a common rule of thumb)

- Ordinal or ranked data (e.g., satisfaction levels: poor, average, good)

- Non-normal data distributions

- Presence of extreme values or outliers

- Comparing medians instead of means

These tests are especially valuable in medical research, market research, and behavioral sciences, where data often fails to follow ideal statistical distributions.

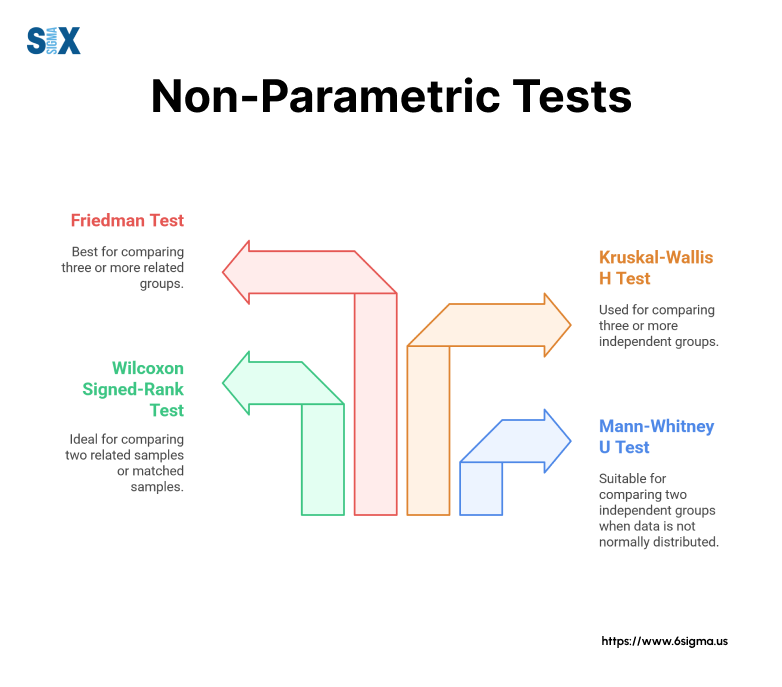

Common Types of Non Parametric Tests

- Mann–Whitney U Test – Compares two independent groups (alternative to independent t-test).

- Wilcoxon Signed-Rank Test – Compares paired or related samples (alternative to paired t-test).

- Kruskal–Wallis H Test – Compares more than two independent groups (alternative to ANOVA).

- Friedman Test – Compares repeated measures across multiple treatments (alternative to repeated-measures ANOVA).

The Mann–Whitney U Test

Concept

The Mann–Whitney U Test is the non parametric counterpart of the independent-samples t-test. It tests whether two independent samples come from the same distribution, or whether values in one group tend to be larger than values in the other.

Example

Suppose a company wants to test whether two different ad campaigns result in different levels of customer engagement. Since engagement scores are skewed, a Mann–Whitney U test can determine if one campaign statistically outperforms the other.

Formula

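U = n₁n₂ + n₁(n₁+1)/2 − R₁

Where n₁ and n₂ are the two sample sizes and R₁ is the sum of the ranks of sample 1 after both samples are ranked together. The corresponding value for sample 2 is n₁n₂ − U, and printed tables conventionally use the smaller of the two.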
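The formula can be checked numerically. The sketch below ranks the pooled observations with `scipy.stats.rankdata`, applies the formula above, and compares the result with SciPy's `mannwhitneyu`. The sample values are the ones used in the Python section of this article; the helper name `u_from_ranks` is made up for illustration. SciPy reports the statistic for the first sample, R₁ − n₁(n₁+1)/2, so the two numbers are expected to sum to n₁n₂ rather than to match.

```python
import numpy as np
from scipy.stats import mannwhitneyu, rankdata


def u_from_ranks(sample1, sample2):
    """U = n1*n2 + n1*(n1+1)/2 - R1, with R1 the rank sum of sample1."""
    n1, n2 = len(sample1), len(sample2)
    # Rank all observations together; ties would receive average ranks.
    ranks = rankdata(np.concatenate([sample1, sample2]))
    r1 = ranks[:n1].sum()
    return n1 * n2 + n1 * (n1 + 1) / 2 - r1


group1 = [23, 45, 56, 34, 29]
group2 = [21, 44, 52, 33, 31]

u_formula = u_from_ranks(group1, group2)
u_scipy, p_value = mannwhitneyu(group1, group2, alternative="two-sided")

print("U from the formula:", u_formula)   # 11.0
print("U reported by SciPy:", u_scipy)    # 14.0
print("Sum:", u_formula + u_scipy)        # 25.0, i.e. n1 * n2
print("p-value:", p_value)
```

Either value of U leads to the same two-sided p-value; only the direction of the comparison changes.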

The Wilcoxon Signed-Rank Test

Concept

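Used when comparing two related samples, the Wilcoxon Signed-Rank Test ranks the absolute paired differences and checks whether the positive and negative differences balance out, that is, whether the differences are centered on zero.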

Real-World Example

A fitness app tests user satisfaction before and after launching a new interface. Since satisfaction scores are ordinal and not normally distributed, Wilcoxon’s test can reveal if the new design improved satisfaction.
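A before-and-after comparison of this kind might be run as follows. This is a minimal sketch with invented scores for eight users (the 0-100 scale and every value are assumptions, not data from a real app). It computes the positive and negative rank sums by hand and then calls `scipy.stats.wilcoxon`, whose two-sided statistic is the smaller of the two sums.

```python
import numpy as np
from scipy.stats import rankdata, wilcoxon

# Hypothetical satisfaction scores (0-100) for the same eight users.
before = np.array([62, 70, 55, 48, 81, 66, 59, 73])
after = np.array([68, 74, 53, 57, 84, 73, 60, 81])

diff = after - before
ranks = rankdata(np.abs(diff))       # rank the absolute differences
w_plus = ranks[diff > 0].sum()       # rank sum of the improvements
w_minus = ranks[diff < 0].sum()      # rank sum of the declines

stat, p_value = wilcoxon(after, before, alternative="two-sided")

print("W+ =", w_plus, "W- =", w_minus)   # W+ = 34.0 W- = 2.0
print("SciPy statistic:", stat)          # 2.0, the smaller rank sum
print("p-value:", p_value)
```

Pairs with a zero difference are dropped by SciPy's default `zero_method`, so heavily tied ordinal scales leave fewer usable pairs than the raw sample size suggests.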

The Kruskal–Wallis Test

When comparing more than two independent groups, the Kruskal–Wallis Test acts as a non parametric substitute for one-way ANOVA.

Example

Imagine analyzing customer ratings across three regional stores. If data does not meet ANOVA assumptions, Kruskal–Wallis identifies significant differences between regions.

Step-by-Step Example Using Real Data

Let’s say a hospital evaluates patient recovery times under three treatment methods (A, B, and C).

- Collect sample recovery times.

- Rank all data points together.

- Sum ranks for each treatment.

- Compute the Kruskal–Wallis H statistic.

- Compare H value against the chi-square critical value.

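If H exceeds the critical value (chi-square with k − 1 degrees of freedom, where k is the number of groups), at least one treatment group differs significantly from the others. The test does not say which one; that requires pairwise follow-up comparisons.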
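The steps above can be sketched in a few lines. The recovery times below are invented for illustration and chosen without ties, so the uncorrected H formula applies; `scipy.stats.kruskal` is called at the end only as a cross-check.

```python
import numpy as np
from scipy.stats import chi2, kruskal, rankdata

# Hypothetical recovery times in days (illustrative, not real patient data).
groups = {
    "A": [12, 15, 11, 14, 13],
    "B": [18, 20, 17, 19, 16],
    "C": [25, 22, 24, 21, 23],
}

# Rank all data points together.
pooled = np.concatenate(list(groups.values()))
ranks = rankdata(pooled)
n_total = len(pooled)

# Sum the ranks for each treatment and accumulate R_i^2 / n_i.
weighted_sum, start = 0.0, 0
for values in groups.values():
    n_i = len(values)
    rank_sum = ranks[start:start + n_i].sum()
    weighted_sum += rank_sum ** 2 / n_i
    start += n_i

# Kruskal-Wallis H statistic (valid as written when there are no ties).
h_stat = 12 / (n_total * (n_total + 1)) * weighted_sum - 3 * (n_total + 1)

# Compare H against the chi-square critical value with k - 1 degrees of freedom.
critical = chi2.ppf(0.95, df=len(groups) - 1)
print(f"H = {h_stat:.2f}, critical value = {critical:.2f}")  # H = 12.50, critical value = 5.99
print("Significant at the 5% level:", h_stat > critical)

# Cross-check against SciPy's implementation.
h_scipy, p_value = kruskal(*groups.values())
print(f"SciPy: H = {h_scipy:.2f}, p = {p_value:.4f}")
```

With tied values, SciPy applies a tie correction, so a hand calculation without that correction would come out slightly smaller.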

Beyond the Basics — Why Non Parametric Tests Matter in Modern Analytics

In today’s data-driven era, the assumption of normality is often hard to justify. With the explosion of unstructured and heterogeneous datasets, such as textual data, clickstream logs, or skewed financial returns, statisticians and data scientists are turning to non parametric testing as a robust foundation for inference that needs far fewer assumptions.

Unlike parametric methods that rely on a fixed distribution (usually normal), non parametric t tests draw their power from data ranking and resampling. This enables analysts to detect significant differences even in noisy, irregular, or limited data environments.

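The method is particularly valuable in predictive modeling pipelines, where preprocessing steps, like data transformation, outlier trimming, and normalization, can alter the true nature of the data. Rank-based tests reduce these risks by focusing on order rather than magnitude: for the Mann–Whitney and Kruskal–Wallis tests, a transformation that preserves the ordering of the values, such as a logarithm, leaves the ranks and the result unchanged.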

Mathematical Foundation: Rank-Based Inference

At the heart of the non parametric t test lies rank transformation. When data is converted into ranks (from lowest to highest), the test compares median tendencies rather than means. This approach effectively suppresses the influence of outliers.

Let’s define two independent samples:

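X₁, X₂, …, Xₙ and Y₁, Y₂, …, Yₘ.

Ranking the two samples together yields a test statistic whose distribution under the null hypothesis is known exactly, because every assignment of ranks to the two groups is then equally likely. This enables practitioners to derive p-values and confidence intervals without assuming a Gaussian shape.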
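One common way to write the rank-based comparison of the two samples counts the pairs in which an X value exceeds a Y value. It is given here as a standard textbook formulation, not as this article's own derivation. Under the null hypothesis of identical continuous distributions (so no ties), the count has a known mean and variance:

```latex
U = \sum_{i=1}^{n} \sum_{j=1}^{m} \mathbf{1}\{X_i > Y_j\},
\qquad
\mathbb{E}[U] = \frac{nm}{2},
\qquad
\operatorname{Var}(U) = \frac{nm(n+m+1)}{12},
\qquad
z = \frac{U - nm/2}{\sqrt{nm(n+m+1)/12}} .
```

For moderate n and m, z is approximately standard normal; for small samples the exact distribution of U over all rank assignments is used instead.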

Bootstrapping and Monte Carlo Integration

An advanced concept in non parametric inference is bootstrapping, which resamples the data with replacement to approximate the sampling distribution of a statistic. Bootstrapping allows analysts to estimate standard errors, confidence intervals, and test statistics without relying on distributional assumptions.

When combined with Monte Carlo simulations, bootstrapping enhances the robustness of non parametric testing, particularly for small or irregular samples. The resulting p-values are empirically derived, increasing reliability in real-world, messy datasets.

For instance, when comparing two marketing campaigns with only 20 responses each, a resampling-based Mann–Whitney analysis can approximate the significance level by simulating thousands of random resamples, although with so few observations the estimate is only as good as the sample itself.
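As a minimal sketch of the resampling idea, the code below builds a percentile bootstrap confidence interval for the difference in medians between two campaigns. The engagement scores are synthetic (drawn from exponential distributions purely to get skewed data), and the 5,000 resamples and the percentile method are illustrative choices, not recommendations from this article.

```python
import numpy as np

rng = np.random.default_rng(seed=42)

# Synthetic, skewed engagement scores: 20 responses per campaign.
campaign_a = rng.exponential(scale=10.0, size=20)
campaign_b = rng.exponential(scale=14.0, size=20)

observed = np.median(campaign_b) - np.median(campaign_a)

n_resamples = 5000
boot_diffs = np.empty(n_resamples)
for i in range(n_resamples):
    # Resample each group with replacement, keeping the group sizes.
    resample_a = rng.choice(campaign_a, size=campaign_a.size, replace=True)
    resample_b = rng.choice(campaign_b, size=campaign_b.size, replace=True)
    boot_diffs[i] = np.median(resample_b) - np.median(resample_a)

# Percentile bootstrap: the middle 95% of the resampled differences.
lower, upper = np.percentile(boot_diffs, [2.5, 97.5])
print(f"Observed median difference: {observed:.2f}")
print(f"95% bootstrap CI: [{lower:.2f}, {upper:.2f}]")
```

An interval that excludes zero points toward a real difference in medians. For a formal p-value, a permutation test that shuffles the group labels is the more usual resampling tool.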

Integrating Non Parametric Tests into Machine Learning Workflows

Non parametric tests are not confined to classical statistics—they play an essential role in machine learning validation and model comparison.

Applications include:

- Feature Selection: Evaluating whether a particular feature significantly influences target distribution using rank-sum testing.

- Model Comparison: Using Wilcoxon signed-rank tests to assess if two predictive models (e.g., Random Forest vs. XGBoost) perform significantly differently across multiple datasets.

- Hyperparameter Optimization: Testing if model accuracy distributions vary across tuning configurations.

For example, in k-fold cross-validation, when comparing mean accuracies across folds, non parametric tests provide a robust alternative to ANOVA—particularly when accuracy metrics are non-normally distributed or contain outliers.
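When every model is scored on the same folds, the measurements are repeated rather than independent, so the Friedman test is one reasonable choice here (an assumption of this sketch; the text above does not name a specific test). The accuracies are hypothetical.

```python
from scipy.stats import friedmanchisquare

# Hypothetical accuracies on the same six cross-validation folds.
model_a = [0.81, 0.79, 0.83, 0.80, 0.78, 0.82]
model_b = [0.84, 0.82, 0.85, 0.83, 0.81, 0.86]
model_c = [0.88, 0.85, 0.89, 0.86, 0.84, 0.90]

# Each fold is a block; the models are ranked within each fold.
stat, p_value = friedmanchisquare(model_a, model_b, model_c)
print(f"Friedman chi-square = {stat:.2f}, p = {p_value:.4f}")  # 12.00, p = 0.0025
```

Scores from overlapping training folds are not fully independent, so a p-value like this is better read as a rough guide than as an exact error rate.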

Effect Size Measurement in Non Parametric Analysis

Traditional significance testing provides a binary decision—significant or not significant. However, advanced analysts focus on effect size, which quantifies the magnitude of difference between groups.

In non parametric contexts, the rank-biserial correlation (r) is often used:

r = 1 − 2U / (n₁n₂)

This statistic ranges from -1 to 1 and gives a more intuitive understanding of how strongly one distribution dominates another. It is especially useful in behavioral sciences and user experience (UX) studies, where practical significance matters more than just statistical significance.
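A short sketch of the calculation, using the U formula from the Mann–Whitney section and the two samples from the Python section of this article. The function name `rank_biserial` is hypothetical.

```python
import numpy as np
from scipy.stats import rankdata


def rank_biserial(sample1, sample2):
    """r = 1 - 2U / (n1*n2), with U = n1*n2 + n1*(n1+1)/2 - R1."""
    n1, n2 = len(sample1), len(sample2)
    ranks = rankdata(np.concatenate([sample1, sample2]))
    r1 = ranks[:n1].sum()                      # rank sum of sample1
    u = n1 * n2 + n1 * (n1 + 1) / 2 - r1
    return 1 - 2 * u / (n1 * n2)


group1 = [23, 45, 56, 34, 29]
group2 = [21, 44, 52, 33, 31]

r = rank_biserial(group1, group2)
print(f"rank-biserial r = {r:.2f}")   # 0.12
```

With this choice of U, a positive r means values in the first sample tend to be larger. Software that reports the other U flips the sign, so the direction should be stated alongside the number.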

Bayesian Non Parametric Approaches

An emerging frontier is Bayesian non parametric inference, where flexibility is achieved by modeling distributions using infinite-dimensional parameters.

For instance, Dirichlet Process Mixtures (DPMs) allow analysts to perform inference without specifying a fixed number of parameters or groups in advance.

While classical non parametric t tests rank and compare samples, Bayesian non parametrics infer latent structures — automatically adapting model complexity to the data. This approach is increasingly being used in A/B testing, time series segmentation, and genomic data analysis.

Real-World Industry Applications

Healthcare Analytics:

When patient recovery times, pain scores, or biomarker levels deviate from normality, non parametric tests like the Wilcoxon Signed-Rank or Mann–Whitney ensure valid clinical comparisons.

Example: Comparing pre- and post-treatment glucose levels among diabetic patients using the Wilcoxon test.

Finance:

Financial returns are often skewed and heavy-tailed. Analysts use the Mann–Whitney test to compare investment returns across asset classes when normality assumptions fail.

Marketing and UX Research:

Non parametric analysis plays a key role in A/B testing, customer preference ranking, and usability studies where data is ordinal.

Example: Comparing satisfaction scores between two mobile app interfaces using a Wilcoxon test.

Environmental Science:

To compare pollution levels across different regions, non parametric tests help account for irregular data patterns influenced by seasonality or measurement errors.

Advanced Interpretation: Beyond p-values

While the p-value measures how surprising the observed data would be if there were no real difference, advanced interpretation involves examining distribution overlaps, median shifts, and visual diagnostics.

Analysts often pair non parametric results with:

- Boxplots to visualize median shifts

- Violin plots to observe distribution shape differences

- Rank histograms to see how each group's ranks are spread across the pooled ordering

This combination of visual and statistical evidence provides a richer, more nuanced understanding of group differences—essential in high-stakes analytics like healthcare or financial modeling.

Practical Guidelines for Analysts

- Always visualize data distributions before deciding between parametric and non parametric approaches.

- Use Shapiro–Wilk or Kolmogorov–Smirnov tests to check normality.

- For small samples (n < 30) or skewed data, prefer non parametric t test alternatives.

- Combine with bootstrapping for increased confidence.

- Report effect sizes alongside p-values for comprehensive reporting.
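The first guidelines can be combined into a small helper. This is a sketch under assumptions: the function name `compare_groups`, the 0.05 cut-off, and the use of Welch's t-test as the parametric branch are illustrative choices, and the samples are invented. Pre-testing for normality is a heuristic, since with small samples the Shapiro–Wilk test can easily miss real departures from normality.

```python
from scipy.stats import mannwhitneyu, shapiro, ttest_ind


def compare_groups(x, y, alpha=0.05):
    """Pick Welch's t-test or Mann-Whitney U based on a Shapiro-Wilk check."""
    # Shapiro-Wilk: a small p-value is evidence against normality.
    looks_normal = all(shapiro(sample).pvalue > alpha for sample in (x, y))
    if looks_normal:
        result = ttest_ind(x, y, equal_var=False)   # Welch's t-test
        return "welch t-test", result.pvalue
    result = mannwhitneyu(x, y, alternative="two-sided")
    return "mann-whitney u", result.pvalue


# Hypothetical samples; the second contains one extreme outlier.
x = [5.1, 4.8, 5.5, 5.0, 4.6, 5.3, 4.9, 5.2, 5.4, 4.7]
y = [1.0, 1.2, 1.1, 1.3, 1.5, 1.4, 1.6, 1.8, 2.0, 40.0]

test_name, p_value = compare_groups(x, y)
print(test_name, p_value)
```

Here the outlier in `y` fails the normality check, so the helper falls back to the Mann–Whitney U test.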

Advantages of Non Parametric Tests

- No assumption of data normality

- Works with small datasets

- Resistant to outliers

- Can handle ordinal or ranked data

- Easy to interpret results through ranks and medians

Limitations and Challenges

- Less powerful when data is normally distributed

- Provides fewer insights into parameters like variance

- Ranking can result in information loss

- Not ideal for highly precise interval data

Applications in Real-Time Scenarios

Non parametric t test methods are widely applied in:

- Healthcare – Comparing treatment outcomes or patient recovery levels.

- Market Research – Analyzing customer satisfaction surveys.

- Education – Measuring student performance improvements.

- Finance – Comparing investment returns under non-normal conditions.

Comparison Table: Parametric vs Non Parametric Tests

| Criteria | Parametric | Non Parametric |
| --- | --- | --- |
| Normal Distribution | Required | Not required |
| Outlier Sensitivity | High | Low |
| Common Tests | T-test, ANOVA | Mann–Whitney, Wilcoxon |
| Data Type | Continuous | Ordinal/Ranked |

How to Perform Non Parametric Tests in Python and R

In Python

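Using the scipy.stats library:

from scipy.stats import mannwhitneyu

# Two independent samples

group1 = [23, 45, 56, 34, 29]

group2 = [21, 44, 52, 33, 31]

# State the alternative explicitly; older SciPy releases used a different default

stat, p = mannwhitneyu(group1, group2, alternative="two-sided")

print("U-statistic:", stat)

print("p-value:", p)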

In R

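group1 <- c(23, 45, 56, 34, 29)

group2 <- c(21, 44, 52, 33, 31)

# With two unpaired vectors, wilcox.test runs the Wilcoxon rank-sum test,

# which is equivalent to the Mann–Whitney U test

wilcox.test(group1, group2)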

Interpretation of Results

- If p < 0.05 → Reject the null hypothesis.

- If p ≥ 0.05 → Fail to reject the null hypothesis.

This indicates whether differences between groups are statistically significant.

Real-Life Case Study: Market Research Example

A beverage company launches two packaging designs and collects customer preference rankings.

| Design | Rank |

| A | 1, 3, 2, 4, 5 |

| B | 2, 1, 3, 2, 4 |

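Using the Wilcoxon test, analysts found p = 0.02, indicating a significant preference for Design B. The five rankings per design shown in the table are only an illustrative excerpt: they overlap too much to give p = 0.02 on their own, so the reported p-value presumably comes from a larger set of responses.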

This real-world case demonstrates how non parametric testing provides insights even when data doesn’t meet normality assumptions.

Conclusion

The Non Parametric T Test is a crucial tool for real-world data analysis. By removing restrictive assumptions about data distribution, it allows researchers to test hypotheses reliably across diverse datasets. Whether it’s comparing treatments in healthcare or customer feedback in marketing, these methods maintain statistical rigor in uncertain conditions.

Mastering non parametric approaches enhances your ability to handle complex, messy, and real-world datasets with confidence and precision.

FAQs

What is the power of the t test?

The power of a t-test refers to the probability of correctly rejecting a false null hypothesis, indicating the test’s ability to detect a real difference or effect when one truly exists.

What is non-parametric for t-test?

A non-parametric alternative to the t-test is used when data does not follow a normal distribution; examples include the Mann–Whitney U test and the Wilcoxon signed-rank test, which work with ranks and are usually interpreted as comparing medians instead of means.

What is the importance of non-parametric tests?

Non-parametric tests are important because they don’t rely on assumptions about data distribution, making them ideal for analyzing ordinal, skewed, or small-sample data where traditional parametric tests may not be valid.

What is the power of a test formula?

The power of a test is calculated as Power = 1 – β, where β (beta) is the probability of making a Type II error — failing to reject a false null hypothesis. A higher power means a greater chance of detecting true effects.
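Power can also be estimated by simulation: generate many datasets in which the effect is real and count how often the test rejects. The sketch below does this for the Mann–Whitney U test. The data model (two normal samples that differ only by a shift), the sample size, and the number of simulations are all assumptions chosen for illustration.

```python
import numpy as np
from scipy.stats import mannwhitneyu


def estimate_power(shift, n=20, alpha=0.05, n_sims=500, seed=0):
    """Monte Carlo estimate of Mann-Whitney power for a location shift."""
    rng = np.random.default_rng(seed)
    rejections = 0
    for _ in range(n_sims):
        # Assumed data model: two normal samples differing only by `shift`.
        x = rng.normal(loc=0.0, scale=1.0, size=n)
        y = rng.normal(loc=shift, scale=1.0, size=n)
        if mannwhitneyu(x, y, alternative="two-sided").pvalue < alpha:
            rejections += 1
    return rejections / n_sims   # share of simulations that reject H0


power = estimate_power(shift=1.5)
print(f"Estimated power: {power:.2f}")
print(f"Estimated beta:  {1 - power:.2f}")
```

Calling `estimate_power(shift=0.0)` instead estimates the false-positive rate, which should land near the chosen alpha.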

Why is the t-test used?

The t-test is used to compare the means of two groups and determine whether the difference between them is statistically significant, especially when sample sizes are small and population variance is unknown.