Artificial intelligence has moved far beyond simple automation tools, transitioning from rule-based logic systems to probabilistic deep learning architectures capable of reasoning, generating, and adapting. Among the most influential developments in this transformation is GPT AI, a class of large language models that is redefining intelligent automation across industries.

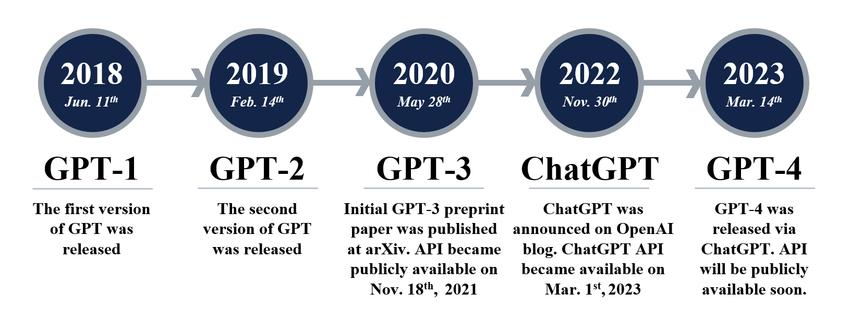

Enterprises, researchers, and developers now rely on transformer-based architectures to accelerate productivity, automate workflows, and enhance customer engagement. The progression from GPT-3 to OpenAI's GPT-4 research systems and to APIs such as the GPT-5-mini API illustrates how rapidly generative AI is evolving.

Understanding GPT AI Architecture

GPT AI is built on the transformer neural network architecture, introduced in the 2017 paper “Attention Is All You Need” by Vaswani et al.

Core architectural elements include:

- Token embeddings

- Multi-head self-attention

- Layer normalization

- Feed-forward networks

- Probabilistic token prediction

These models are pre-trained on massive datasets and fine-tuned for specific tasks such as reasoning, summarization, coding, and multimodal understanding.
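
To make the self-attention element listed above more concrete, here is a minimal sketch of single-head scaled dot-product attention in plain Python. It is an illustration under simplifying assumptions, not the implementation used in any GPT model: it omits the learned query/key/value projections, multiple heads, and the causal mask that production transformers use, and the input numbers are made up.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Single-head scaled dot-product attention over lists of vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dim).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs

# Toy input: three tokens with 2-dimensional embeddings (made-up numbers).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = self_attention(tokens, tokens, tokens)
```

Each row of `attended` is a blend of all three token vectors, weighted by how similar the tokens are to one another. That mixing is what lets a transformer relate every position in a sequence to every other position.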

The Evolution from GPT-3

GPT-3, sometimes written as GPT 3, marked a pivotal moment in AI research. It introduced:

- 175 billion parameters

- Strong few-shot learning

- Natural language generation at scale

Real-world example:

A SaaS company uses GPT 3 to auto-generate onboarding emails for thousands of customers. This reduces manual workload and improves personalization.

While GPT 3 focused primarily on language fluency, subsequent models improved reasoning depth, safety alignment, and multimodal input processing.
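
Few-shot learning, mentioned above, means showing the model a handful of worked examples inside the prompt instead of retraining it. The sketch below assembles such a prompt for the onboarding-email scenario. The function name, the `Customer:`/`Email:` labels, and the sample data are hypothetical choices for illustration, and the resulting string would still need to be sent to whichever model API is in use.

```python
def build_few_shot_prompt(instruction, examples, new_customer):
    """Assemble a prompt with worked examples followed by the new case."""
    lines = [instruction, ""]
    for customer, email in examples:
        lines.append(f"Customer: {customer}")
        lines.append(f"Email: {email}")
        lines.append("")
    # Leave the final "Email:" open so the model completes it.
    lines.append(f"Customer: {new_customer}")
    lines.append("Email:")
    return "\n".join(lines)

# Hypothetical examples; a real system would draw these from past emails.
examples = [
    ("Ana, design team lead", "Hi Ana, welcome aboard! Here is how to invite your designers."),
    ("Raj, solo developer", "Hi Raj, welcome! Here is a quick-start guide for your first project."),
]
prompt = build_few_shot_prompt(
    "Write a short onboarding email for each customer.",
    examples,
    "Mei, finance manager",
)
```

Adding or swapping examples changes the model's behavior without any training run, which is why few-shot prompting suits fast-changing tasks.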

OpenAI's GPT-4 Research and Breakthrough Capabilities

OpenAI's GPT-4 research introduced more advanced reasoning, improved factual reliability, and enhanced alignment systems.

Notable improvements include:

- Better multi-step reasoning

- Improved mathematical problem solving

- Reduced hallucination rates

- Enhanced contextual awareness

OpenAI's GPT-4 research also expanded capabilities beyond text, setting the stage for multimodal systems.

GPT-4o and Multimodal Intelligence

GPT-4o introduced multimodal intelligence, meaning the model can process:

- Text

- Images

- Audio inputs

This advancement allows applications such as:

- Visual question answering

- Image-based report generation

- Real-time conversational AI

Real-world example:

A healthcare platform integrates GPT-4o to analyze patient-uploaded medical images alongside textual reports, generating preliminary summaries for clinicians.

GPT-4V and Vision Capabilities

GPT-4V extends vision-language integration. It can:

- Interpret diagrams

- Extract information from charts

- Analyze scanned documents

- Provide accessibility assistance

Enterprise use case:

An insurance company processes damage claim photos using GPT-4V to generate structured summaries before human review.

GPT-4o LoRA and Model Adaptation

LoRA (Low-Rank Adaptation) enables efficient fine-tuning of large models like GPT-4o without retraining all parameters: the original weights stay frozen, and only small low-rank update matrices are trained.

Benefits include:

- Reduced computational cost

- Domain-specific specialization

- Faster deployment cycles

For example:

A legal-tech firm fine-tunes GPT-4o using LoRA to specialize in contract clause analysis.
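
The cost savings come from the shape of the update. Instead of learning a full weight matrix, LoRA learns two thin matrices, `B` and `A`, and adds their scaled product to the frozen weights. The sketch below shows this arithmetic on plain Python lists. It illustrates the general technique only. It does not describe how any particular hosted model is fine-tuned, and the matrix sizes are assumptions chosen for illustration.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]

def lora_effective_weight(w, a, b, alpha):
    """Return W + (alpha / r) * B @ A, where r is the shared inner rank."""
    rank = len(a)  # A has shape (r x d_in), B has shape (d_out x r)
    scale = alpha / rank
    delta = matmul(b, a)
    return [
        [w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
        for i in range(len(w))
    ]

def trainable_params(d_out, d_in, rank):
    """Compare parameter counts for full fine-tuning vs. a LoRA update."""
    full = d_out * d_in
    lora = rank * (d_out + d_in)
    return full, lora

full, lora = trainable_params(4096, 4096, 8)
```

For a 4096 x 4096 layer at rank 8, `full` is 16,777,216 while `lora` is 65,536, roughly 0.4% of the original parameter count for that layer.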

GPT-4o-2024-11-20 Versioning Significance

Version identifiers such as GPT-4o-2024-11-20 mark dated snapshots of a model, each reflecting iterative improvements in performance, safety alignment, and efficiency.

Versioning ensures:

- Traceability

- Reproducibility

- Stable API deployment

- Enterprise-grade reliability
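
For traceability, it can help to record the snapshot date alongside every logged request. The helper below is a small sketch that splits an identifier into a model family and a date. It assumes identifiers end in a `YYYY-MM-DD` suffix, as in the example above; the function name and regular expression are illustrative and not part of any official SDK.

```python
import re
from datetime import date

# Assumed format: "<family>-YYYY-MM-DD". Other naming schemes fall through.
SNAPSHOT_PATTERN = re.compile(
    r"^(?P<family>.+)-(?P<year>\d{4})-(?P<month>\d{2})-(?P<day>\d{2})$"
)

def parse_snapshot(model_id):
    """Split a dated model identifier into (family, snapshot date)."""
    match = SNAPSHOT_PATTERN.match(model_id)
    if match is None:
        # No dated suffix: treat the identifier as a floating alias.
        return model_id, None
    snapshot = date(int(match["year"]), int(match["month"]), int(match["day"]))
    return match["family"], snapshot

family, snapshot = parse_snapshot("GPT-4o-2024-11-20")
```

Pinning a dated identifier in configuration, rather than a floating alias, keeps results reproducible when the provider later updates the default model.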

GPT-5-Mini API and Scalable Deployment

The GPT-5-mini API is designed for:

- Lightweight deployments

- Cost-efficient scaling

- Real-time inference

- High-volume applications

Use case:

An e-commerce chatbot handles millions of daily customer interactions using GPT-5-mini API for optimized latency and cost management.

GPT-5-Pro and Advanced Enterprise Reasoning

GPT-5-Pro focuses on:

- Advanced reasoning

- Structured problem solving

- Enterprise-level decision assistance

- Complex data interpretation

Example:

A financial analytics platform uses GPT-5-Pro to analyze market reports and generate risk summaries for institutional investors.

GPT o1 and Structured Reasoning

GPT o1 emphasizes a reasoning-first architecture.

Capabilities include:

- Multi-step logical analysis

- Improved chain-of-thought processing

- Mathematical reasoning accuracy

Organizations leveraging GPT o1 can automate advanced technical documentation review processes.

Architectural Evolution of GPT AI

The transition from GPT-3 to modern GPT AI systems represents more than scaling parameters. It reflects architectural refinement, alignment improvements, multimodal capability, and inference optimization.

Transformer Architecture Refinements

While GPT AI models are based on transformer architecture, improvements include:

- More efficient attention mechanisms

- Better tokenization strategies

- Memory optimization layers

- Parallel processing acceleration

- Sparse attention adaptations for large context windows

These changes reduce latency while improving reasoning depth.

Scaling Laws and Model Efficiency

Research behind GPT AI demonstrates that performance scales predictably with:

- Parameter count

- Training data diversity

- Compute budget

However, modern advancements emphasize efficiency scaling, not just size scaling.

Instead of simply increasing parameters, new versions focus on:

- Optimized data sampling

- Synthetic data augmentation

- Reinforcement fine-tuning

- Curriculum-based training

This results in better performance without linear cost increases.
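
The idea of predictable scaling can be sketched with a parametric form common in the scaling-law literature, in which loss falls as a power law in both parameter count and training tokens. The constants below are illustrative placeholders, not fitted values from any published study, and the function name is hypothetical.

```python
def predicted_loss(params, tokens, irreducible=1.7, a=400.0, b=400.0,
                   alpha=0.34, beta=0.28):
    """Loss = irreducible + a / params**alpha + b / tokens**beta.

    All constants are placeholders chosen for illustration only.
    """
    return irreducible + a / params**alpha + b / tokens**beta

# Growing the model 10x at a fixed data budget lowers the predicted loss,
# but the data term and the irreducible term set a floor.
small = predicted_loss(params=1e9, tokens=1e11)
large = predicted_loss(params=1e10, tokens=1e11)
```

Because each term shrinks more slowly as its input grows, adding parameters alone gives diminishing returns. This is one way to see why newer training recipes focus on data quality and training methods rather than size alone.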

Training Methodologies Behind GPT AI

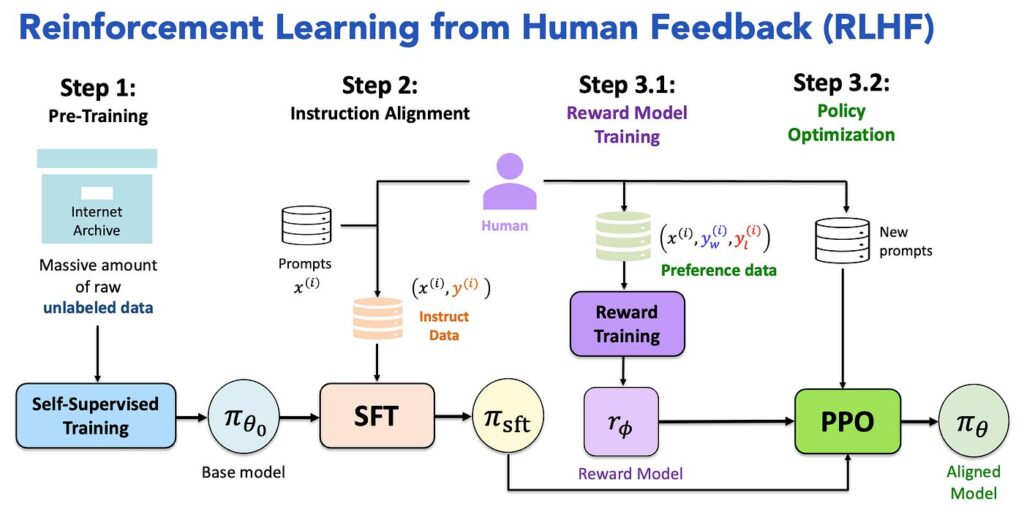

Modern GPT AI systems are not simply larger versions of earlier models. They incorporate multiple training phases that significantly improve alignment, reasoning, and reliability.

1. Pretraining at Scale

Large transformer models are pretrained on diverse corpora including:

- Licensed datasets

- Publicly available data

- Human-created and synthetic data

- Multilingual text sources

Scaling laws demonstrate that performance improves predictably with increases in:

- Model parameters

- Training data size

- Compute power

This explains why the progression from GPT-3 to OpenAI's GPT-4 research models resulted in major capability jumps.

2. Reinforcement Learning from Human Feedback (RLHF)

RLHF improves alignment by:

- Collecting human preference data

- Ranking model responses

- Optimizing outputs for safety and usefulness

This significantly reduces harmful or misleading outputs and enhances instruction-following accuracy.
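
The ranking step is often turned into a training signal through a pairwise preference loss on a reward model: the loss is small when the response humans preferred scores higher than the rejected one. The sketch below shows that loss in its common Bradley-Terry form. It is a simplified illustration with made-up reward values, not a description of any specific production pipeline.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss: -log(sigmoid(reward_chosen - reward_rejected))."""
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) equals log(1 + exp(-m)).
    return math.log1p(math.exp(-margin))

# Made-up reward scores for two candidate responses to the same prompt.
agrees_with_humans = preference_loss(reward_chosen=2.0, reward_rejected=0.0)
disagrees_with_humans = preference_loss(reward_chosen=0.0, reward_rejected=2.0)
```

Minimizing this loss over many ranked pairs pushes the reward model to score preferred responses higher. The language model is then optimized against that learned reward.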

Real-world example:

A fintech company fine-tunes GPT AI to follow regulatory compliance guidelines by training it on curated legal feedback data.

3. Constitutional and Alignment-Based Training

Recent research directions focus on:

- Self-evaluation loops

- Constitutional AI principles

- Automated critique systems

- Adversarial robustness testing

These mechanisms help GPT AI maintain safer outputs even under ambiguous or complex prompts.

Expanded Multimodal Capabilities

With GPT-4o and GPT-4V, multimodal processing has expanded beyond simple text and image interpretation.

Emerging multimodal functions include:

- Audio transcription and summarization

- Visual diagram reasoning

- Handwritten document interpretation

- Cross-modal reasoning (image + text + structured data)

Enterprise scenario:

A logistics company uploads shipment photos, delivery notes, and operational logs. GPT-4o synthesizes them into structured compliance reports.

Context Window Expansion and Long-Form Reasoning

One major innovation in advanced GPT AI systems is increased context window size.

This enables:

- Processing of entire research papers

- Large codebase analysis

- Multi-document legal review

- Long enterprise meeting transcripts

Example:

An AI-powered legal assistant uses GPT-5-Pro to analyze 200-page contracts and generate structured clause summaries with risk annotations.
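
Even with large context windows, some inputs exceed the limit and have to be split. The helper below sketches one simple approach: fixed-size chunks with optional overlap, so clauses that straddle a boundary appear in both neighbors. It counts whitespace-separated words as a rough stand-in for tokens, which is an assumption. Real tokenizers split text differently, so budgets should be set conservatively.

```python
def chunk_by_token_budget(text, max_tokens, overlap=0):
    """Split text into chunks of at most max_tokens words, with overlap."""
    if max_tokens <= 0 or not 0 <= overlap < max_tokens:
        raise ValueError("need max_tokens > 0 and 0 <= overlap < max_tokens")
    words = text.split()  # crude token approximation
    step = max_tokens - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_tokens]))
        if start + max_tokens >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks

chunks = chunk_by_token_budget("a b c d e", max_tokens=3, overlap=1)
```

Each chunk can then be summarized separately and the partial summaries combined in a final pass.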

API-Based Deployment Architectures

Modern GPT AI systems are primarily deployed through APIs rather than local installations.

Common Integration Pattern:

Client Application

→ Authentication Layer

→ Model API Endpoint

→ Response Processing Layer

→ Logging and Monitoring
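
The integration pattern above can be sketched as a chain of small functions. Everything here is a stand-in: `call_model` is a stub that returns a canned response instead of calling a real endpoint, and the token set, logger name, and response shape are hypothetical choices made so the example runs without network access.

```python
import logging

logger = logging.getLogger("gpt_gateway")  # hypothetical logger name

VALID_TOKENS = {"demo-token"}  # placeholder; real systems use a secrets store

def authenticate(token):
    """Authentication layer: accept only known tokens."""
    return token in VALID_TOKENS

def call_model(prompt):
    """Model API endpoint (stubbed). A real client call would go here."""
    return {"text": f"  [stubbed completion for: {prompt}]  "}

def process_response(raw):
    """Response processing layer: extract and clean the text."""
    return raw["text"].strip()

def handle_request(token, prompt):
    """Run one request through every layer of the pattern."""
    if not authenticate(token):
        logger.warning("rejected request: invalid token")
        raise PermissionError("invalid API token")
    text = process_response(call_model(prompt))
    # Logging and monitoring: record sizes rather than raw content.
    logger.info("served request: %d prompt chars, %d response chars",
                len(prompt), len(text))
    return text

reply = handle_request("demo-token", "Summarize our refund policy.")
```

Keeping each layer as its own function makes it straightforward to swap the stub for a real client or to add rate limiting without touching the rest of the chain.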

Benefits:

- Scalable cloud infrastructure

- Enterprise-grade compliance

- Secure token-based access

- Version control (e.g., GPT-4o-2024-11-20)

Fine-Tuning and Domain Adaptation

Beyond LoRA, GPT AI customization can include:

- Prompt engineering frameworks

- Retrieval-Augmented Generation (RAG)

- Embedding-based knowledge injection

- Private dataset alignment

Retrieval-Augmented Generation (RAG)

RAG allows GPT AI to:

- Retrieve real-time company documents

- Inject structured knowledge

- Reduce hallucinations

- Provide verifiable outputs

Example:

A healthcare analytics platform integrates GPT AI with hospital databases to generate patient summaries based on verified medical records.
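
A minimal sketch of the retrieve-then-generate flow is shown below. To stay self-contained, it uses word-count vectors and cosine similarity in place of learned embeddings and a vector database. Those substitutions, the function names, and the sample documents are all assumptions made for illustration.

```python
import math
from collections import Counter

def embed(text):
    """Toy embedding: word counts. Real systems use learned embeddings."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def retrieve(query, documents, top_k=2):
    """Return the top_k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:top_k]

def build_prompt(query, documents, top_k=2):
    """Inject the retrieved passages into the prompt as grounding context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, top_k))
    return (f"Answer using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}")

docs = [
    "refund policy allows returns within 30 days",
    "shipping takes five business days",
    "office hours are nine to five",
]
prompt = build_prompt("what is the refund policy", docs, top_k=1)
```

Because the answer is constrained to retrieved passages, the output can be checked against its sources, which is how RAG supports the verifiable outputs listed above.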

Comparison Across GPT Model Generations

The main model generations compare as follows:

GPT 3

- Strong generative text

- Few-shot learning

- Limited multimodal capability

GPT-4 (OpenAI research)

- Improved reasoning

- Higher factual accuracy

- Better safety alignment

GPT-4o

- Multimodal (text + image + audio)

- Faster inference

- Lower latency

GPT-4V

- Advanced visual reasoning

- Chart and document interpretation

GPT-5-Mini API

- Lightweight, scalable deployment

- Optimized for high-volume use

GPT-5-Pro

- Deep analytical reasoning

- Enterprise-level decision support

- Advanced structured outputs

Performance Optimization Techniques

Organizations deploying GPT AI at scale focus on:

- Prompt optimization

- Token usage management

- Latency monitoring

- Caching frequent queries

- Cost forecasting

Real-world example:

An edtech platform reduces API costs by caching repeated FAQ responses generated via GPT-5-mini API.
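
The caching idea in this example can be sketched as a thin wrapper around whatever function calls the model. The class below is an in-memory illustration with hypothetical names. A production system would more likely use a shared store with expiry, and would need to decide which prompts are safe to treat as equivalent.

```python
import hashlib

class ResponseCache:
    """Cache model responses keyed by a hash of the normalized prompt."""

    def __init__(self, generate):
        self._generate = generate  # function that actually calls the model
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt):
        # Normalize case and whitespace so trivial variants share an entry.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get(self, prompt):
        key = self._key(prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        self._store[key] = self._generate(prompt)
        return self._store[key]

# Stub generator that records how many paid calls would have been made.
calls = []
def fake_generate(prompt):
    calls.append(prompt)
    return f"answer to: {prompt}"

cache = ResponseCache(fake_generate)
first = cache.get("How do I reset my password?")
second = cache.get("  how do I reset my   password? ")
```

Here the second request is served from the cache, so only one model call is made for the two lookups.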

Governance and Ethical AI Frameworks

Responsible deployment of GPT AI includes:

- Data privacy compliance (GDPR, HIPAA where applicable)

- Bias monitoring systems

- Human oversight layers

- Transparent AI documentation

- Risk mitigation protocols

Many large enterprises create internal AI governance boards to evaluate GPT-based systems before deployment.

GPT AI in Research and Scientific Discovery

GPT AI systems are increasingly used in:

- Drug discovery

- Materials science research

- Climate modeling

- Academic literature summarization

Example:

Researchers use GPT AI to synthesize hundreds of academic papers into structured research insights, accelerating hypothesis generation.

Edge Computing and GPT AI

Emerging deployment models explore:

- Hybrid cloud-edge AI systems

- Lightweight model inference

- On-device inference for privacy-sensitive applications

Although large models require significant compute resources, optimized mini versions allow broader deployment flexibility.

Economic Impact of GPT AI

The economic implications include:

- Workforce augmentation

- Productivity acceleration

- Automation of repetitive tasks

- Creation of AI-driven job roles

- Reskilling initiatives

Industries most impacted:

- Customer support

- Software engineering

- Financial analysis

- Digital marketing

- Healthcare documentation

Emerging Research Trends

Future GPT AI advancements may include:

- Self-improving model architectures

- Improved reasoning transparency

- Real-time multimodal synthesis

- Lower carbon training footprints

- AI-human collaborative workflows

These trends suggest that GPT AI will evolve beyond language generation into a comprehensive intelligent automation framework.

Advanced Use Case Scenarios

Autonomous Workflow Assistance

GPT AI integrated with workflow systems can:

- Trigger task automation

- Generate reports automatically

- Provide decision recommendations

- Draft structured documentation

Enterprise Knowledge Graph Integration

Combining GPT AI with structured knowledge graphs enhances:

- Contextual reasoning

- Entity resolution

- Structured response generation

Technical Challenges Still Being Addressed

Even advanced models face:

- Energy consumption concerns

- Long-term reasoning reliability

- Interpretability issues

- Cross-domain generalization challenges

- Robustness under adversarial prompts

Continuous research addresses these issues.

Real-Time Business Applications

GPT AI enhances intelligent automation in:

Software Development

- Code generation

- Automated testing

- Documentation writing

Data Analytics

- Natural language to SQL

- Executive summary generation

- Dashboard interpretation

Customer Support

- AI chat systems

- Ticket routing

- Sentiment detection

Enterprise Knowledge Management

- Meeting summarization

- Policy analysis

- Workflow automation

Secure System Integration and Automation

While GPT AI enhances automation, it does not independently control operating systems. Instead, it integrates through:

- Secure APIs

- Cloud endpoints

- Access-controlled automation frameworks

- Human oversight layers

Enterprise architecture example:

User Interface → API Gateway → GPT Model → Validation Layer → Database → Audit Logs

This ensures compliance and security.
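
The validation layer in this chain is where model output gets checked before it reaches the database. The sketch below assumes the model was asked to return JSON with a `summary` and a `risk_level` field. That schema, the allowed values, and the list-based stand-ins for the database and audit log are all hypothetical.

```python
import json

REQUIRED_FIELDS = {"summary": str, "risk_level": str}  # assumed schema
ALLOWED_RISK_LEVELS = {"low", "medium", "high"}

def validate_model_output(raw_text):
    """Parse model output and reject anything outside the schema."""
    try:
        data = json.loads(raw_text)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model output is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("model output must be a JSON object")
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), expected_type):
            raise ValueError(f"missing or invalid field: {field}")
    if data["risk_level"] not in ALLOWED_RISK_LEVELS:
        raise ValueError(f"unexpected risk_level: {data['risk_level']}")
    return data

def store_with_audit(raw_text, database, audit_log):
    """Write validated output to the database and record an audit entry."""
    record = validate_model_output(raw_text)
    database.append(record)
    audit_log.append({"event": "stored", "fields": sorted(record)})
    return record

database, audit_log = [], []
store_with_audit('{"summary": "Q3 exposure is stable.", "risk_level": "low"}',
                 database, audit_log)
```

Rejecting malformed output at this boundary means a bad generation fails loudly instead of silently corrupting downstream records.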

Research Foundations Behind OpenAI Models

OpenAI research focuses on:

- Scaling laws

- Alignment training

- Reinforcement learning from human feedback

- Safety guardrails

Academic and industry research collaborations continue to shape next-generation AI systems.

Performance and Limitations

Despite advances, GPT AI systems have limitations:

- Context window constraints

- Potential hallucinations

- Data bias sensitivity

- Dependency on prompt quality

- Compute-intensive infrastructure

Responsible deployment requires monitoring, evaluation, and human validation.

The Future of GPT AI

Future advancements may include:

- Autonomous AI agents with guardrails

- Improved multimodal reasoning

- Real-time learning systems

- Domain-specialized AI models

- Energy-efficient large-scale training

The convergence of GPT AI with robotics, IoT, and enterprise cloud platforms will redefine digital infrastructure globally.

Conclusion

From GPT-3 to OpenAI's GPT-4 research systems, GPT-4o multimodal intelligence, GPT-4V vision processing, GPT-5-mini API scalability, and GPT-5-Pro enterprise reasoning, the evolution of GPT AI represents one of the most significant advancements in modern artificial intelligence.

Organizations that strategically adopt GPT AI technologies can:

- Improve operational efficiency

- Automate complex workflows

- Enhance decision-making accuracy

- Scale customer engagement systems

- Accelerate innovation cycles

As research progresses, GPT AI will remain central to intelligent automation and next-generation enterprise transformation.

FAQs

What is GPT in AI?

GPT (Generative Pre-trained Transformer) is a type of AI language model that uses the transformer architecture to understand and generate human-like text after being pre-trained on large amounts of text data.

How is GPT different from AI?

AI is a broad field focused on building intelligent systems, while GPT is a specific type of AI model designed for understanding and generating human-like text using transformer architecture.

What AI does GPT use?

GPT uses deep learning based on the Transformer architecture, a neural network model that leverages self-attention mechanisms to understand and generate human-like text.

Is GPT free to use?

Some versions of GPT are available for free with usage limits, while advanced or enhanced versions typically require a paid subscription or API access for higher limits and additional features.

What is a GPT system?

A GPT system is an AI-powered language system based on Generative Pre-trained Transformer models that can understand, generate, and respond to text using deep learning and transformer architecture.