Modern computational systems increasingly rely on optimization techniques that mimic nature. Long before machine learning models dominated the technology landscape, researchers explored biologically inspired approaches to solve complex problems. These approaches focus on adaptability, survival, and evolution. This foundation led to the development of genetic algorithms, a class of evolutionary computation methods capable of solving problems that traditional algorithms struggle with.

Optimization problems appear everywhere, from routing logistics and scheduling resources to tuning machine learning models. Traditional methods often fail when the search space is large, nonlinear, or poorly understood. Evolutionary intelligence offers an alternative by searching for good-enough solutions rather than perfect ones.

Understanding Genetic Algorithms

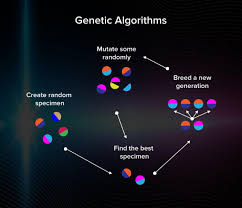

Genetic algorithms are search and optimization techniques inspired by natural selection and genetics. They operate on a population of potential solutions and iteratively improve them using principles derived from biological evolution.

Instead of exploring a single solution path, genetic algorithms maintain a diverse population. This population evolves over generations, gradually improving according to a defined fitness measure.

Key characteristics include:

- Population-based search

- Probabilistic transitions

- Fitness-driven evolution

- Adaptability to complex spaces

Biological Inspiration Behind Genetic Algorithms

Natural evolution is driven by survival of the fittest. Organisms with advantageous traits are more likely to reproduce, passing those traits to future generations. Over time, populations adapt to their environment.

Genetic algorithms replicate this idea computationally. Candidate solutions act as individuals, while the optimization goal serves as the environment. The better a solution performs, the higher its chance of being selected for reproduction.

This evolutionary process allows genetic algorithms to efficiently explore large and complex solution spaces.

Core Components of Genetic Algorithms

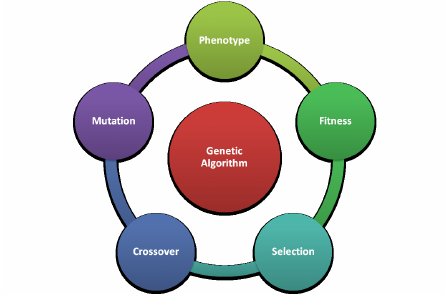

Every genetic algorithm is built upon a few fundamental components:

- Population: A set of candidate solutions

- Chromosomes: Encoded representations of solutions

- Genes: Individual parameters within a solution

- Fitness Function: Measures solution quality

- Operators: Selection, crossover, and mutation

Each component plays a critical role in guiding the evolutionary process toward optimal or near-optimal solutions.

How Genetic Algorithms Work Step by Step

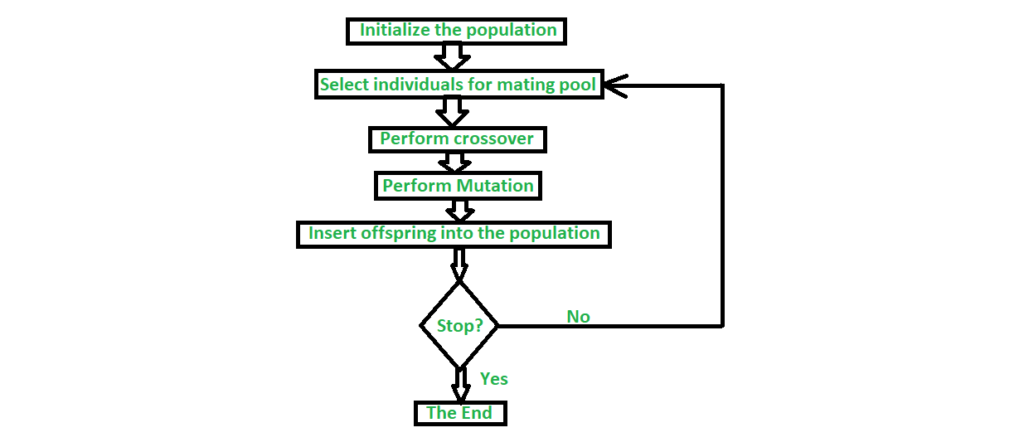

The typical workflow follows these stages:

- Initialize a random population

- Evaluate fitness of each individual

- Select parents based on fitness

- Apply crossover to generate offspring

- Apply mutation to introduce diversity

- Replace weak individuals with new ones

- Repeat until termination condition is met

This cycle continues until the algorithm converges or reaches a predefined stopping criterion.
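The workflow above can be sketched in a few dozen lines of Python. This is a minimal illustration, not a reference implementation. It assumes the toy OneMax problem (maximize the number of 1s in a bit string), binary tournament selection, single-point crossover, bit-flip mutation, and full generational replacement. The function names and parameter values (`run_ga`, `p_cx`, `p_mut`, and so on) are hypothetical choices made for the example.

```python
import random

def onemax(bits):
    """Toy fitness used for illustration: the number of 1s in a bit string."""
    return sum(bits)

def tournament(pop, scores, rng):
    """Binary tournament: return the fitter of two randomly chosen individuals."""
    a, b = rng.sample(range(len(pop)), 2)
    return pop[a] if scores[a] >= scores[b] else pop[b]

def run_ga(n_bits=20, pop_size=30, generations=100, p_cx=0.9, p_mut=0.05, seed=0):
    rng = random.Random(seed)
    # Step 1: initialize a random population of bit strings
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    best = max(pop, key=onemax)
    for _ in range(generations):
        # Step 2: evaluate the fitness of each individual
        scores = [onemax(ind) for ind in pop]
        # Step 7: stop early if the known optimum (all ones) has been reached
        if max(scores) == n_bits:
            break
        offspring = []
        while len(offspring) < pop_size:
            # Step 3: select two parents based on fitness
            p1, p2 = tournament(pop, scores, rng), tournament(pop, scores, rng)
            # Step 4: single-point crossover with probability p_cx
            if rng.random() < p_cx:
                cut = rng.randint(1, n_bits - 1)
                c1, c2 = p1[:cut] + p2[cut:], p2[:cut] + p1[cut:]
            else:
                c1, c2 = p1[:], p2[:]
            # Step 5: bit-flip mutation, gene by gene, with probability p_mut
            for child in (c1, c2):
                for i in range(n_bits):
                    if rng.random() < p_mut:
                        child[i] = 1 - child[i]
                offspring.append(child)
        # Step 6: generational replacement (offspring replace the parents)
        pop = offspring[:pop_size]
        best = max([best] + pop, key=onemax)
    return best
```

Calling `run_ga()` returns the best bit string found; with a fixed `seed` the run is reproducible. Swapping in a different fitness function and encoding is enough to adapt the loop to another problem.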

Representation and Encoding Techniques

Encoding determines how solutions are represented. Common approaches include:

- Binary encoding

- Integer encoding

- Real-valued encoding

- Permutation encoding

The choice of encoding significantly affects performance. For example, scheduling problems often use permutation encoding, while parameter optimization may rely on real-valued encoding.
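As a rough illustration, the snippet below builds one random chromosome of each encoding type for an assumed problem size of 8 genes, plus a helper that decodes a binary chromosome into a real number in a chosen range. The value ranges, the example meanings in the comments, and the `decode_binary` helper are illustrative assumptions.

```python
import random

rng = random.Random(42)
N_GENES = 8

binary_chrom = [rng.randint(0, 1) for _ in range(N_GENES)]        # e.g. include/exclude flags
integer_chrom = [rng.randint(0, 4) for _ in range(N_GENES)]       # e.g. machine index (0-4) per job
real_chrom = [rng.uniform(-1.0, 1.0) for _ in range(N_GENES)]     # e.g. continuous parameters
perm_chrom = rng.sample(range(N_GENES), N_GENES)                  # e.g. visiting order of 8 stops

def decode_binary(bits, low, high):
    """Map a bit string to a real value in [low, high] (all zeros -> low, all ones -> high)."""
    value = int("".join(str(b) for b in bits), 2)
    return low + (high - low) * value / (2 ** len(bits) - 1)
```

Note that a permutation chromosome must always contain each index exactly once, which is why permutation problems need special crossover and mutation operators.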

Fitness Functions and Selection Strategies

The fitness function defines what makes a solution good. It translates problem objectives into numerical values that guide evolution.

Common selection methods include:

- Roulette wheel selection

- Tournament selection

- Rank-based selection

Effective selection balances exploitation of good solutions with exploration of new possibilities.
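The three selection methods listed above might be written as follows. This sketch assumes a maximization problem, and roulette wheel selection additionally assumes non-negative fitness values. The function names and the default tournament size `k=3` are illustrative choices.

```python
import random

def roulette_select(pop, fitness, rng):
    """Fitness-proportional selection; assumes all fitness values are non-negative."""
    pick = rng.uniform(0, sum(fitness))
    running = 0.0
    for ind, f in zip(pop, fitness):
        running += f
        if running >= pick:
            return ind
    return pop[-1]  # guard against floating-point round-off

def tournament_select(pop, fitness, rng, k=3):
    """Pick k distinct individuals at random and return the fittest of them."""
    contenders = rng.sample(range(len(pop)), k)
    return pop[max(contenders, key=lambda i: fitness[i])]

def rank_select(pop, fitness, rng):
    """Linear rank selection: probability proportional to rank (worst = 1, best = N)."""
    order = sorted(range(len(pop)), key=lambda i: fitness[i])
    ranks = [0] * len(pop)
    for r, i in enumerate(order, start=1):
        ranks[i] = r
    return rng.choices(pop, weights=ranks, k=1)[0]
```

Tournament size is a convenient pressure knob: larger `k` favors the best individuals more strongly, while rank selection keeps pressure stable even when raw fitness values differ by orders of magnitude.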

Crossover and Mutation Operators

Crossover combines genetic material from two parents to produce offspring. Popular methods include:

- Single-point crossover

- Multi-point crossover

- Uniform crossover

Mutation introduces random changes to prevent premature convergence. Mutation rates must be carefully tuned to maintain diversity without disrupting progress.
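A minimal sketch of the three crossover methods and a bit-flip mutation operator for list-based chromosomes follows. The function names are hypothetical; `multi_point` defaults to two cut points, and `rate` is the per-gene mutation probability. The crossover functions work for any gene type, while bit-flip mutation assumes binary genes.

```python
import random

def single_point(p1, p2, rng):
    """Swap the tails of the two parents after one random cut point."""
    cut = rng.randint(1, len(p1) - 1)
    return p1[:cut] + p2[cut:], p2[:cut] + p1[cut:]

def multi_point(p1, p2, rng, n_points=2):
    """Swap every other segment between sorted, distinct cut points."""
    cuts = sorted(rng.sample(range(1, len(p1)), n_points))
    c1, c2 = list(p1), list(p2)
    swap, prev = False, 0
    for cut in cuts + [len(p1)]:
        if swap:
            c1[prev:cut], c2[prev:cut] = p2[prev:cut], p1[prev:cut]
        swap = not swap
        prev = cut
    return c1, c2

def uniform_crossover(p1, p2, rng):
    """Each gene position is swapped between the children with probability 0.5."""
    c1, c2 = [], []
    for a, b in zip(p1, p2):
        if rng.random() < 0.5:
            a, b = b, a
        c1.append(a)
        c2.append(b)
    return c1, c2

def bit_flip_mutation(bits, rate, rng):
    """Flip each bit independently with probability `rate`."""
    return [1 - b if rng.random() < rate else b for b in bits]
```

A common starting point for the mutation rate is about one flip per chromosome (`rate = 1 / len(bits)`), then tuning from there.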

Termination Criteria and Convergence

A genetic algorithm stops when one or more conditions are met:

- Maximum number of generations reached

- Fitness threshold achieved

- No improvement over several generations

Convergence does not guarantee the global optimum, but it often yields highly effective solutions.
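The three stopping conditions can be combined into one check that runs after every generation. This sketch rests on two assumptions: fitness is being maximized, and `history` holds the best fitness seen so far at each generation. The `patience` and `tol` parameters are hypothetical names for the stagnation window and the smallest improvement that counts.

```python
def should_stop(history, max_generations, target=None, patience=20, tol=1e-9):
    """Return (stop, reason) given the best-so-far fitness per generation (maximization)."""
    gen = len(history)
    if gen >= max_generations:
        return True, "maximum number of generations reached"
    if target is not None and history and history[-1] >= target:
        return True, "fitness threshold achieved"
    # Stagnation: best-so-far fitness has not improved over the last `patience` generations
    if gen > patience and history[-1] - history[-1 - patience] <= tol:
        return True, f"no improvement over {patience} generations"
    return False, ""
```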

Real-World Example: Route Optimization

Consider route optimization for delivery vehicles. Traditional algorithms struggle when constraints such as traffic, fuel costs, and delivery windows interact.

Genetic algorithms handle this by encoding routes as chromosomes and evolving them over generations. Fitness functions evaluate total distance, time, and cost. This approach is applied to vehicle routing problems in logistics.
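A simplified version of the delivery-route example can be sketched with permutation encoding. To keep it short, this sketch assumes the fitness is total Euclidean route length only (traffic, fuel costs, and delivery windows would enter as extra cost or penalty terms). It uses truncation selection that keeps the better half of each generation, order crossover (OX) so that children remain valid permutations, and swap mutation. The coordinates and all function names are hypothetical.

```python
import math
import random

def route_length(route, coords):
    """Total length of a closed tour that visits every stop once and returns to the start."""
    return sum(math.dist(coords[route[i]], coords[route[(i + 1) % len(route)]])
               for i in range(len(route)))

def order_crossover(p1, p2, rng):
    """OX: copy a slice from p1, then fill the other positions in p2's cyclic order."""
    n = len(p1)
    a, b = sorted(rng.sample(range(n), 2))
    child = [None] * n
    child[a:b + 1] = p1[a:b + 1]
    kept = set(child[a:b + 1])
    order = [(b + 1 + k) % n for k in range(n)]  # positions starting right after the slice
    fill = [p2[i] for i in order if p2[i] not in kept]
    slots = [i for i in order if child[i] is None]
    for i, gene in zip(slots, fill):
        child[i] = gene
    return child

def swap_mutation(route, rate, rng):
    """With probability `rate`, swap two randomly chosen stops."""
    route = route[:]
    if rng.random() < rate:
        i, j = rng.sample(range(len(route)), 2)
        route[i], route[j] = route[j], route[i]
    return route

def evolve_routes(coords, pop_size=50, generations=200, seed=1):
    rng = random.Random(seed)
    n = len(coords)
    pop = [rng.sample(range(n), n) for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=lambda r: route_length(r, coords))   # shorter routes are fitter
        parents = pop[: pop_size // 2]                    # truncation selection
        children = [swap_mutation(order_crossover(*rng.sample(parents, 2), rng), 0.2, rng)
                    for _ in range(pop_size - len(parents))]
        pop = parents + children
    return min(pop, key=lambda r: route_length(r, coords))
```

Because the better half of the population survives each generation unchanged, the best route found never gets worse, which is a simple form of elitism.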

Genetic Algorithms in Machine Learning

In machine learning, genetic algorithms are used for:

- Feature selection

- Hyperparameter optimization

- Neural network architecture search

They provide an alternative to grid search and random search, especially when parameter spaces are large and nonlinear.
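For feature selection, a chromosome can be a boolean mask with one gene per feature. In the sketch below, `evaluate` is an assumed user-supplied function that scores a mask (in practice, for example, the cross-validated accuracy of a model trained on the selected features). The `toy_score` function and the `RELEVANT` set are hypothetical stand-ins so the example runs without a dataset. Scores are cached because each real evaluation typically means training a model.

```python
import random

def select_features(n_features, evaluate, pop_size=20, generations=40, seed=0):
    """Evolve boolean feature masks; evaluate(mask) returns a score to maximize."""
    rng = random.Random(seed)
    p_mut = 1.0 / n_features          # about one flipped gene per child
    cache = {}

    def fit(mask):
        key = tuple(mask)
        if key not in cache:          # evaluations are usually expensive, so memoize
            cache[key] = evaluate(mask)
        return cache[key]

    pop = [[rng.random() < 0.5 for _ in range(n_features)] for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=fit, reverse=True)
        next_pop = pop[:2]            # keep the two best masks (elitism)
        while len(next_pop) < pop_size:
            # Tournament of 3 for each parent, then uniform crossover and bit-flip mutation
            p1, p2 = (max(rng.sample(pop, 3), key=fit) for _ in range(2))
            child = [a if rng.random() < 0.5 else b for a, b in zip(p1, p2)]
            next_pop.append([not g if rng.random() < p_mut else g for g in child])
        pop = next_pop
    return max(pop, key=fit)

# Hypothetical stand-in for a model-based score: features 0, 3 and 5 are "useful",
# and every selected feature costs 0.2 to discourage large subsets.
RELEVANT = {0, 3, 5}

def toy_score(mask):
    return sum(1.0 for i in RELEVANT if mask[i]) - 0.2 * sum(mask)

best_mask = select_features(10, toy_score)
selected = [i for i, keep in enumerate(best_mask) if keep]
```

The size penalty in the score is one way to prefer smaller feature sets; with a real model, cross-validation inside `evaluate` helps keep the search from overfitting a single split.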

Genetic Algorithms in Data Science

Data scientists apply genetic algorithms to clustering, anomaly detection, and rule discovery. Their flexibility allows adaptation to noisy and high-dimensional datasets.

Genetic Algorithms in Engineering Design

Engineering problems often involve conflicting objectives. Genetic algorithms support multi-objective optimization, enabling trade-offs between cost, performance, and durability.

Applications include:

- Structural design

- Aerodynamic optimization

- Manufacturing process tuning

Genetic Algorithms in Finance and Trading

Financial models use genetic algorithms to optimize portfolios, trading strategies, and risk management frameworks. Their adaptability makes them suitable for dynamic markets.

Variants of Genetic Algorithms

Traditional genetic algorithms work well for many optimization problems, but modern applications often demand faster convergence, better accuracy, and adaptability to complex constraints. To address these challenges, several advanced variants of genetic algorithms have been developed. These variants modify selection, crossover, mutation, or population strategies to improve performance across different problem domains.

1. Adaptive Genetic Algorithms

Adaptive genetic algorithms dynamically adjust parameters such as mutation rate, crossover probability, and population size during execution.

Why adaptation matters

Fixed parameters may work well initially but can cause:

- Premature convergence

- Loss of population diversity

- Slow exploration in later generations

Key characteristics

- Mutation rate increases when diversity decreases

- Crossover rate adapts based on fitness variance

- Search shifts from broad exploration in early generations toward fine-tuning in later ones
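One simple way to realize the first characteristic is to measure population diversity each generation and scale the mutation rate up or down in response. This sketch assumes binary chromosomes and uses mean pairwise Hamming distance as the diversity measure; the thresholds, scaling factor, and bounds are arbitrary illustrative values. Adapting crossover probability or population size would follow the same pattern but is not shown.

```python
def mean_hamming_diversity(pop):
    """Average pairwise Hamming distance, normalized to [0, 1]."""
    n, length = len(pop), len(pop[0])
    if n < 2:
        return 0.0
    total = sum(sum(a != b for a, b in zip(pop[i], pop[j]))
                for i in range(n) for j in range(i + 1, n))
    return total / (length * n * (n - 1) / 2)

def adapt_mutation_rate(rate, diversity, low=0.1, high=0.3, factor=1.5,
                        min_rate=0.001, max_rate=0.2):
    """Raise the rate when diversity is low, lower it when diversity is high."""
    if diversity < low:
        rate *= factor
    elif diversity > high:
        rate /= factor
    return min(max(rate, min_rate), max_rate)
```

Calling `adapt_mutation_rate` once per generation with the current diversity lets the rate drift within `[min_rate, max_rate]`; the pairwise diversity computation is quadratic in population size, so large populations may need a sampled estimate.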

Real-world example

In hyperparameter tuning for deep learning models, adaptive genetic algorithms help explore wide parameter spaces early and fine-tune configurations in later generations.

2. Multi-Objective Genetic Algorithms

Many real-world problems require optimizing multiple conflicting objectives simultaneously. Multi-objective genetic algorithms generate a set of optimal trade-off solutions rather than a single solution.

Popular techniques

- NSGA-II (Non-dominated Sorting Genetic Algorithm II)

- SPEA (Strength Pareto Evolutionary Algorithm)

Core idea

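Instead of one best solution, these algorithms approximate a Pareto front: a set of trade-off solutions in which no solution dominates another, meaning none is at least as good as another on every objective and strictly better on at least one.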
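The dominance test behind the Pareto front can be expressed directly. This sketch assumes every objective is minimized (for example, cost and error) and extracts only the first non-dominated front by brute-force comparison. NSGA-II builds on the same test but repeatedly peels off successive fronts and adds a crowding-distance measure, which is not shown here.

```python
def dominates(a, b):
    """True if a is no worse than b on every objective and strictly better on at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def pareto_front(points):
    """Return the points that no other point dominates (O(n^2) comparisons)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]

# Hypothetical (cost, error) pairs for six candidate designs
candidates = [(1, 9), (2, 7), (3, 8), (4, 3), (5, 5), (6, 1)]
front = pareto_front(candidates)
```

Here `(3, 8)` is dropped because `(2, 7)` is cheaper and more accurate, and `(5, 5)` is dropped because of `(4, 3)`; the remaining four designs are all valid trade-offs.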

Use cases

- Cost vs performance optimization

- Energy consumption vs accuracy in machine learning models

- Risk vs return in financial portfolios

3. Hybrid Genetic Algorithms (Memetic Algorithms)

Hybrid genetic algorithms combine the global search capability of genetic algorithms with local optimization techniques such as gradient descent or hill climbing.

How they work

- Genetic algorithm explores the search space

- Local search refines elite individuals

- Improved individuals re-enter the population
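The three-step loop above can be sketched for bit-string chromosomes. Here the local search is a simple first-improvement hill climber that flips single bits, applied only to the `n_elite` best individuals each generation. The parent pool (the better half of the population), the single-point crossover, and all names are illustrative assumptions rather than a standard memetic algorithm.

```python
import random

def hill_climb(bits, fitness, max_steps=50):
    """First-improvement local search: flip single bits while that improves fitness."""
    best, best_f = bits[:], fitness(bits)
    for _ in range(max_steps):
        improved = False
        for i in range(len(best)):
            cand = best[:]
            cand[i] = 1 - cand[i]
            f = fitness(cand)
            if f > best_f:
                best, best_f, improved = cand, f, True
                break
        if not improved:
            break
    return best

def memetic_step(pop, fitness, rng, n_elite=2, p_mut=0.05):
    """One generation: GA variation plus local refinement of the elite (maximization)."""
    pop = sorted(pop, key=fitness, reverse=True)
    # Local search refines the elite individuals
    refined = [hill_climb(ind, fitness) for ind in pop[:n_elite]]
    children = []
    while len(children) < len(pop) - n_elite:
        # The GA explores: parents drawn from the better half, single-point crossover, mutation
        p1, p2 = rng.sample(pop[: len(pop) // 2], 2)
        cut = rng.randint(1, len(p1) - 1)
        child = p1[:cut] + p2[cut:]
        children.append([1 - g if rng.random() < p_mut else g for g in child])
    # Refined individuals re-enter the population
    return refined + children
```

Local search is often the most expensive part of a memetic algorithm, which is why this sketch limits it to a few elite individuals per generation.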

Advantages

- Faster convergence

- Higher solution quality

- Reduced stagnation

Practical application

Used extensively in scheduling problems, vehicle routing, and neural network weight optimization.

4. Parallel Genetic Algorithms

Parallel genetic algorithms distribute populations across multiple processors or machines to improve computational efficiency.

Types of parallelism

- Master–slave model

- Island model

- Cellular model
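Of the three models, the island model is the easiest to show compactly. The sketch below simulates it sequentially in one process: each island evolves its own subpopulation, and every `migrate_every` generations the best individuals of each island replace the worst of the next island in a ring. The ring topology, migration settings, and per-island operators are assumptions chosen for illustration; a real deployment would run each island on a separate process or machine.

```python
import random

def island_model(fitness, n_bits, n_islands=4, island_size=15, generations=60,
                 migrate_every=10, n_migrants=2, p_mut=0.05, seed=0):
    rng = random.Random(seed)
    islands = [[[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(island_size)]
               for _ in range(n_islands)]

    def evolve(pop):
        # One generation on a single island: keep its best, then binary tournaments,
        # uniform crossover, and bit-flip mutation fill the rest
        new = [max(pop, key=fitness)]
        while len(new) < len(pop):
            p1 = max(rng.sample(pop, 2), key=fitness)
            p2 = max(rng.sample(pop, 2), key=fitness)
            child = [a if rng.random() < 0.5 else b for a, b in zip(p1, p2)]
            new.append([1 - g if rng.random() < p_mut else g for g in child])
        return new

    for gen in range(1, generations + 1):
        # Islands evolve independently (sequentially here, in parallel in practice)
        islands = [evolve(pop) for pop in islands]
        if gen % migrate_every == 0:
            # Ring migration: island i receives copies of island (i - 1)'s best individuals
            emigrants = [sorted(pop, key=fitness, reverse=True)[:n_migrants] for pop in islands]
            for i, pop in enumerate(islands):
                pop.sort(key=fitness)                    # worst individuals first
                pop[:n_migrants] = [ind[:] for ind in emigrants[i - 1]]
    return max((ind for pop in islands for ind in pop), key=fitness)
```

Infrequent migration lets islands drift toward different regions of the search space, which is where the extra diversity comes from; migrating too often makes the islands behave like one large population.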

Benefits

- Faster execution

- Increased population diversity

- Scalability for big data problems

Real-world scenario

Large-scale bioinformatics optimization and genome sequencing problems benefit significantly from parallel genetic algorithms.

5. Elitist Genetic Algorithms

Elitism ensures that the best individuals in each generation are preserved and passed directly to the next generation.
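Elitism is usually a small change to the replacement step. In this sketch, which assumes maximization and list-based chromosomes, the `n_elite` best parents are copied unchanged into the next generation and the remaining slots are filled with offspring. The function name and example data are hypothetical.

```python
def next_generation_with_elitism(parents, offspring, fitness, n_elite=2):
    """Copy the n_elite fittest parents unchanged, then fill the remaining slots with offspring."""
    elite = sorted(parents, key=fitness, reverse=True)[:n_elite]
    # Copies protect the elite from later in-place mutation of the new population
    return [ind[:] for ind in elite] + offspring[: len(parents) - n_elite]

parents = [[1, 1, 0], [0, 0, 0], [1, 1, 1]]
offspring = [[0, 1, 0], [0, 0, 1], [1, 0, 0]]
new_pop = next_generation_with_elitism(parents, offspring, fitness=sum, n_elite=1)
```

Keeping `n_elite` small relative to the population size (often one or two individuals) preserves the best solutions without letting them crowd out the rest.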

Why elitism helps

- Prevents loss of high-fitness solutions

- Improves convergence reliability

- Stabilizes evolutionary progress

Risk

Excessive elitism can reduce diversity and cause premature convergence, so balance is essential.

6. Constraint-Handling Genetic Algorithms

Many optimization problems include hard constraints, such as limited resources or strict design rules.

Common approaches

- Penalty functions

- Repair operators

- Feasibility-based selection
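The three approaches can be illustrated on an assumed 0/1 knapsack problem, where each gene says whether an item is packed and the total weight must not exceed a capacity. The penalty weight, the greedy value-to-weight repair rule, and the function names are illustrative assumptions. The comparison function follows common feasibility-first rules: a feasible solution beats an infeasible one, two feasible solutions are compared by objective value, and two infeasible ones by the size of their constraint violation.

```python
def total(items, mask):
    """Sum of the entries in `items` whose gene in `mask` is set."""
    return sum(x for x, take in zip(items, mask) if take)

def penalized_fitness(mask, values, weights, capacity, penalty=10.0):
    """Penalty function: value minus a cost proportional to the capacity overshoot."""
    excess = max(0, total(weights, mask) - capacity)
    return total(values, mask) - penalty * excess

def repair(mask, values, weights, capacity):
    """Repair operator: unpack items with the lowest value/weight ratio until feasible."""
    mask = list(mask)
    weight = total(weights, mask)
    packed = sorted((i for i, take in enumerate(mask) if take),
                    key=lambda i: values[i] / weights[i])
    for i in packed:
        if weight <= capacity:
            break
        mask[i] = 0
        weight -= weights[i]
    return mask

def feasibility_wins(value_a, violation_a, value_b, violation_b):
    """Feasibility-based tournament: True if candidate a should beat candidate b."""
    if violation_a == 0 and violation_b == 0:
        return value_a >= value_b          # both feasible: higher objective wins
    if violation_a == 0 or violation_b == 0:
        return violation_a == 0            # feasible beats infeasible
    return violation_a <= violation_b      # both infeasible: smaller violation wins
```

For example, with values `[10, 5, 15, 7]`, weights `[2, 3, 5, 7]`, and capacity 10, `repair([1, 1, 1, 1], ...)` unpacks only the last item (value/weight ratio 1.0) and returns `[1, 1, 1, 0]`.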

Example

In engineering design optimization, constraint-handling genetic algorithms ensure solutions meet safety, cost, and material constraints simultaneously.

7. Co-Evolutionary Genetic Algorithms

Co-evolutionary genetic algorithms evolve multiple populations simultaneously, where each population influences the evolution of others.

Two types

- Competitive co-evolution (predator–prey models)

- Cooperative co-evolution (sub-problem decomposition)

Applications

- Game strategy optimization

- Robotics and multi-agent systems

- Neural architecture search
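Cooperative co-evolution can be sketched by splitting a solution vector into parts and evolving a separate population for each part. In this minimal sketch, each population holds candidate values for one coordinate, and an individual is scored by combining it with the current best member (the representative) of every other population. The test objective, the truncation selection, and the Gaussian-mutation-only variation are simplifications chosen for brevity.

```python
import random

def cooperative_coevolution(f, n_parts=2, pop_size=20, generations=50, sigma=0.5, seed=0):
    """Minimize f(vector) by co-evolving one population per vector component."""
    rng = random.Random(seed)
    pops = [[rng.uniform(-10.0, 10.0) for _ in range(pop_size)] for _ in range(n_parts)]
    reps = [pop[0] for pop in pops]   # current representative (collaborator) of each population

    def score(k, value):
        # Evaluate a component by plugging it into the other populations' representatives
        trial = list(reps)
        trial[k] = value
        return f(trial)

    for _ in range(generations):
        for k in range(n_parts):
            ranked = sorted(pops[k], key=lambda v: score(k, v))
            reps[k] = ranked[0]
            parents = ranked[: pop_size // 2]          # truncation selection
            children = [rng.choice(parents) + rng.gauss(0.0, sigma)
                        for _ in range(pop_size - len(parents))]
            pops[k] = parents + children
    return reps

# Assumed separable test objective with its minimum at (3, -1)
def shifted_sphere(v):
    return (v[0] - 3.0) ** 2 + (v[1] + 1.0) ** 2

solution = cooperative_coevolution(shifted_sphere)
```

This decomposition works best when the sub-problems interact weakly; strongly coupled variables usually belong in the same population.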

8. Real-Coded Genetic Algorithms

Instead of binary encoding, real-coded genetic algorithms represent solutions using floating-point values, making them more suitable for continuous optimization.
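Real-coded genetic algorithms need operators that act on floating-point genes directly. The sketch below shows two common choices: BLX-α (blend) crossover, which samples each child gene from the parents' interval widened by α times its width on both sides, and Gaussian mutation. The default parameters (`alpha=0.5`, `sigma=0.1`, `rate=0.2`) and the optional bounds are illustrative assumptions.

```python
import random

def blx_alpha(p1, p2, rng, alpha=0.5, low=None, high=None):
    """BLX-alpha crossover: sample each gene from the parents' interval, extended by alpha."""
    child = []
    for a, b in zip(p1, p2):
        lo, hi = min(a, b), max(a, b)
        d = hi - lo
        g = rng.uniform(lo - alpha * d, hi + alpha * d)
        if low is not None:
            g = max(low, g)   # optional clipping to the search bounds
        if high is not None:
            g = min(high, g)
        child.append(g)
    return child

def gaussian_mutation(x, rng, sigma=0.1, rate=0.2):
    """Add N(0, sigma^2) noise to each gene with probability `rate`."""
    return [g + rng.gauss(0.0, sigma) if rng.random() < rate else g for g in x]
```

Because BLX-α can place children slightly outside the parents' range, it keeps some exploratory power even after the population has started to converge.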

Advantages

- Higher precision

- Faster convergence

- More natural representation

Use case

Common in control systems, signal processing, and financial modeling.

Mathematical Intuition Behind Genetic Algorithms

Genetic algorithms can be viewed as a probabilistic search over a fitness landscape. Selection biases the population toward high-fitness regions, while crossover and mutation maintain exploration.

Key concepts include:

- Fitness proportional selection

- Schema theorem

- Exploration vs exploitation trade-off
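The first two concepts have standard compact forms. Under fitness-proportional selection, individual i with fitness f_i in a population of size N is chosen with probability p_i. The second expression is Holland's schema theorem in a common form that assumes fitness-proportional selection, single-point crossover, and bit-flip mutation. Here m(H, t) is the number of individuals matching schema H at generation t, f(H, t) is their average fitness, f̄(t) is the population's average fitness, δ(H) and o(H) are the schema's defining length and order, ℓ is the chromosome length, and p_c and p_m are the crossover and per-bit mutation probabilities.

```latex
p_i = \frac{f_i}{\sum_{j=1}^{N} f_j}

\mathbb{E}\left[m(H, t+1)\right] \;\ge\; m(H, t)\,\frac{f(H, t)}{\bar{f}(t)}
\left[1 - p_c\,\frac{\delta(H)}{\ell - 1}\right]\left(1 - p_m\right)^{o(H)}
```

Informally, short, low-order schemata with above-average fitness tend to gain instances over time. The bound ignores schema instances created by crossover and mutation, so it is a lower bound rather than an exact prediction.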

Understanding these principles helps in designing more efficient algorithms.

Genetic Algorithms vs Other Optimization Techniques

| Technique | Strength | Weakness |
|---|---|---|
| Genetic Algorithms | Global search without gradient information | Computationally expensive |
| Gradient Descent | Fast convergence on smooth problems | Can get stuck in local minima |
| Simulated Annealing | Can escape local minima | Slow when the cooling schedule is gradual |
| Particle Swarm Optimization | Simple implementation | Prone to premature convergence |

Genetic algorithms are preferred when search spaces are complex, discontinuous, or non-differentiable.

Common Pitfalls and How to Avoid Them

- Premature convergence → Increase mutation or population diversity

- Overfitting → Use cross-validation in fitness evaluation

- High computation cost → Use parallel or hybrid approaches

Ethical and Practical Considerations

As genetic algorithms are increasingly used in finance, healthcare, and security, ethical considerations include:

- Bias amplification

- Transparency of optimization objectives

- Reproducibility of results

Responsible design and evaluation are essential.

Future Directions of Genetic Algorithms

Modern research explores:

- Genetic algorithms combined with deep learning

- AutoML and neural architecture search

- Quantum-inspired genetic algorithms

- Self-adaptive evolutionary systems

These advancements could make genetic algorithms an important component of next-generation AI systems.

Genetic Algorithms in Healthcare and Bioinformatics

In healthcare, genetic algorithms assist in:

- Gene sequence alignment

- Drug discovery

- Medical image analysis

Advantages of Genetic Algorithms

- Handles complex, nonlinear problems

- Works without gradient information

- Highly parallelizable

- Flexible and adaptable

Limitations and Challenges

Despite their strengths, genetic algorithms face challenges:

- Computational cost

- Parameter tuning complexity

- Risk of premature convergence

Understanding these limitations is essential for effective implementation.

Comparison with Traditional Optimization Methods

Traditional methods often require strict assumptions. Genetic algorithms are more flexible, though sometimes slower. The choice depends on problem complexity and constraints.

Hybrid Models and Advanced Variants

Modern systems combine genetic algorithms with other techniques such as neural networks and swarm intelligence to improve efficiency and accuracy.

Tools and Libraries for Implementation

Popular libraries include:

These tools simplify implementation and experimentation.

Best Practices for Practical Usage

- Define clear fitness functions

- Maintain population diversity

- Tune crossover and mutation rates

- Monitor convergence behavior

Future Scope of Evolutionary Algorithms

As computational power grows, genetic algorithms will continue to play a vital role in optimization, especially in autonomous systems and adaptive AI.

Conclusion

Genetic algorithms represent a powerful approach to solving complex optimization problems. By leveraging evolutionary principles, they provide flexible, robust, and scalable solutions across domains. As AI systems grow more sophisticated, evolutionary computation will remain a cornerstone of intelligent problem-solving.

FAQs

What is a genetic algorithm in AI?

A genetic algorithm is an optimization technique inspired by natural selection that evolves solutions through selection, crossover, and mutation to solve complex problems efficiently.

What are the main components of a genetic algorithm?

The main components of a genetic algorithm are population initialization, fitness function, selection, crossover, mutation, and termination criteria, which guide the evolution of optimal solutions.

What are four techniques used in genetic algorithms?

Four common techniques used in genetic algorithms are selection, crossover (recombination), mutation, and elitism, which help evolve and improve solutions over generations.

How are genetic algorithms used in optimization problems?

Genetic algorithms solve optimization problems by evolving a population of candidate solutions, using fitness-based selection, crossover, and mutation to gradually converge toward optimal or near-optimal solutions.

What is a genetic algorithm?

A genetic algorithm is a search and optimization technique inspired by natural evolution, where solutions evolve through selection, crossover, and mutation to find optimal or near-optimal results.