Machine learning systems are increasingly expected to extract structure from massive volumes of unlabeled data. In many real-world scenarios, manually engineered features are insufficient or impractical.

Deep learning addresses this challenge through automatic representation learning, where models discover meaningful patterns without explicit supervision. One of the most foundational architectures enabling this capability is the autoencoder.

Why Feature Learning Matters

Raw data is often noisy, high-dimensional, and redundant. Learning compact representations improves:

- Model efficiency

- Generalization capability

- Noise robustness

- Interpretability of hidden structure

Feature learning pushes a model to capture the structure of the data rather than memorize individual examples.

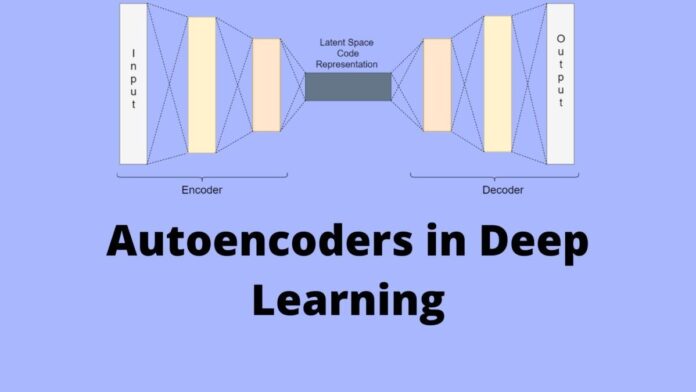

What Is an Autoencoder

An autoencoder is a neural network trained to reconstruct its own input. Instead of predicting an external label, it learns to compress and then reconstruct data.

This compression forces the model to capture the most important underlying patterns.

Unlike linear dimensionality reduction techniques such as PCA, autoencoders can model non-linear relationships.

Core Architecture of Autoencoders

An autoencoder consists of three main components:

- Encoder

- Latent representation

- Decoder

The encoder maps input data into a lower-dimensional space. The decoder reconstructs the original input from this compressed representation.
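As a rough illustration of these three components, the following NumPy sketch wires an encoder, a latent code, and a decoder together for a single forward pass. The layer sizes, the `tanh` activation, and the random (untrained) weights are assumptions made for the example, not choices taken from this article.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative sizes (assumed): 8 input features compressed to 3 latent units.
input_dim, latent_dim = 8, 3

# Randomly initialised weights; a real model would learn these by training.
W_enc = rng.normal(scale=0.1, size=(input_dim, latent_dim))
b_enc = np.zeros(latent_dim)
W_dec = rng.normal(scale=0.1, size=(latent_dim, input_dim))
b_dec = np.zeros(input_dim)


def encode(x):
    """Map inputs of shape (n, input_dim) to latent codes (n, latent_dim)."""
    return np.tanh(x @ W_enc + b_enc)


def decode(h):
    """Map latent codes back to the input space."""
    return h @ W_dec + b_dec


x = rng.normal(size=(5, input_dim))  # batch of 5 samples
h = encode(x)                        # latent representation
x_hat = decode(h)                    # reconstruction

print(h.shape, x_hat.shape)  # (5, 3) (5, 8)
```

The latent code is narrower than the input, which is what forces the compression described above.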

Encoding and Decoding Process

During encoding, the model removes redundancy and noise while preserving essential information. The latent space acts as a learned feature representation.

During decoding, the network attempts to rebuild the original data as accurately as possible.

This reconstruction constraint guides learning.

Autoencoder Training Objective

The training objective minimizes the difference between the original input and the reconstructed output.

Common optimization goals include:

- Mean squared error

- Binary cross-entropy

- Reconstruction likelihood

Combined with a constrained latent representation, the objective encourages meaningful compression rather than memorization.

Loss Functions Used in Autoencoders

Choice of loss depends on data type:

- Continuous values: mean squared error

- Binary inputs: cross-entropy

- Probabilistic outputs: likelihood-based loss

Proper loss selection improves convergence and representation quality.
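The first two choices can be sketched in a few lines of NumPy. The function names `mse_loss` and `bce_loss` are illustrative, and the small `eps` clip is an assumption added to keep the logarithm finite.

```python
import numpy as np


def mse_loss(x, x_hat):
    """Mean squared error, suited to continuous-valued inputs."""
    return float(np.mean((x - x_hat) ** 2))


def bce_loss(x, x_hat, eps=1e-7):
    """Binary cross-entropy, suited to inputs in [0, 1].

    x_hat is expected to hold probabilities (for example sigmoid outputs).
    """
    p = np.clip(x_hat, eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(x * np.log(p) + (1.0 - x) * np.log(1.0 - p)))


# Continuous example: one of four entries is off by 1.0.
x_cont = np.array([[0.5, -1.0], [2.0, 0.0]])
x_cont_hat = np.array([[0.5, -1.0], [1.0, 0.0]])
print(mse_loss(x_cont, x_cont_hat))  # 0.25

# Binary example: confident, correct predictions give a small loss.
x_bin = np.array([[1.0, 0.0]])
x_bin_hat = np.array([[0.9, 0.1]])
print(bce_loss(x_bin, x_bin_hat))
```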

Types of Autoencoders

Several variants exist to address different learning goals.

Basic Autoencoders

Learn compact representations with minimal constraints.

Denoising Autoencoders

Trained to reconstruct clean input from corrupted data.

Sparse Autoencoders

Encourage sparsity in latent representations.

Variational Autoencoders

Learn probabilistic latent distributions.

Denoising Autoencoders Explained

Denoising autoencoders improve robustness by reconstructing clean data from noisy input.

This makes them effective for:

- Signal restoration

- Image enhancement

- Feature robustness

Noise injection forces the model to focus on meaningful structure.
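A minimal sketch of the corruption step, assuming two common noise types (additive Gaussian noise and random masking) that the article does not name explicitly. The key point is that the corrupted array is the model input while the clean array stays the training target.

```python
import numpy as np


def add_gaussian_noise(x, std, rng):
    """Corrupt inputs with zero-mean Gaussian noise."""
    return x + rng.normal(scale=std, size=x.shape)


def add_masking_noise(x, drop_prob, rng):
    """Corrupt inputs by zeroing each entry with probability drop_prob."""
    keep = rng.random(x.shape) >= drop_prob
    return x * keep


rng = np.random.default_rng(0)
x_clean = rng.random((4, 6))  # stand-in data in [0, 1)

x_masked = add_masking_noise(x_clean, drop_prob=0.3, rng=rng)
x_gauss = add_gaussian_noise(x_clean, std=0.1, rng=rng)

# Training pair for a denoising autoencoder:
#   model input  -> x_masked (or x_gauss)
#   loss target  -> x_clean
```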

Sparse Autoencoders

Sparse autoencoders introduce constraints that limit neuron activation.

Benefits include:

- Improved interpretability

- Reduced redundancy

- Better feature disentanglement

They are commonly used in biological data analysis.
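One simple way to express such a constraint, assuming an L1 penalty on the latent activations (a KL-based sparsity target is another common option), is to add a weighted term to the reconstruction loss. The name `sparse_loss` and the default `l1_weight` are illustrative.

```python
import numpy as np


def sparse_loss(x, x_hat, h, l1_weight=1e-3):
    """Reconstruction error plus an L1 penalty on latent activations h."""
    reconstruction = np.mean((x - x_hat) ** 2)
    sparsity = np.mean(np.abs(h))  # small when most activations are near zero
    return float(reconstruction + l1_weight * sparsity)


# With identical (perfect) reconstructions, the sparser code scores lower.
x = np.zeros((2, 3))
dense_code = np.ones((2, 4))
sparse_code = np.array([[1.0, 0.0, 0.0, 0.0],
                        [0.0, 0.0, 0.0, 1.0]])

print(sparse_loss(x, x, dense_code, l1_weight=0.1))
print(sparse_loss(x, x, sparse_code, l1_weight=0.1))
```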

Variational Autoencoders

Variational autoencoders model latent space as a probability distribution.

This enables:

- Data generation

- Smooth interpolation

- Controlled sampling

They are widely used in generative modeling tasks.
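One common way to realise this, assuming a diagonal Gaussian latent distribution and a standard normal prior (assumptions the article does not state), is the reparameterization trick together with a KL penalty. In the sketch below `mu` and `log_var` stand for hypothetical encoder outputs.

```python
import numpy as np


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps


def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, I)), summed over latent dims, averaged over the batch."""
    kl_per_sample = -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=1)
    return float(np.mean(kl_per_sample))


rng = np.random.default_rng(0)
mu = np.ones((2, 3))        # hypothetical encoder means
log_var = np.zeros((2, 3))  # log-variance 0 means unit variance

z = reparameterize(mu, log_var, rng)
print(z.shape)                             # (2, 3)
print(kl_to_standard_normal(mu, log_var))  # 1.5
```

Sampling through `mu` and `log_var` rather than drawing `z` directly keeps the sampling step differentiable with respect to the encoder outputs in a framework with automatic differentiation.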

Convolutional Autoencoders

Designed for image data, convolutional autoencoders preserve spatial relationships.

Applications include:

- Image compression

- Medical imaging

- Visual anomaly detection

Real-World Applications of Autoencoders

Autoencoders are applied across industries.

Finance

Fraud detection and risk modeling.

Healthcare

Medical image reconstruction and anomaly detection.

Manufacturing

Predictive maintenance using sensor data.

Autoencoder in Image Compression

Instead of storing raw images, compressed latent representations reduce storage cost.

Benefits:

- Reduced file size

- Retained visual quality

- Efficient transmission

Autoencoder in Anomaly Detection

By learning normal patterns, autoencoders highlight deviations.

Common use cases include:

- Network intrusion detection

- Equipment failure prediction

- Fraud identification

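Because the model is trained mostly on normal data, it tends to reconstruct unfamiliar inputs poorly, so a high reconstruction error often signals an anomaly.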

Autoencoder in Recommendation Systems

Latent representations capture user preferences and item similarity.

They support:

- Personalized recommendations

- Cold-start problem mitigation

- Latent factor modeling

Autoencoder in Natural Language Processing

Autoencoders learn semantic embeddings for text data.

Applications include:

- Text compression

- Sentence similarity

- Document clustering

Understanding Autoregressive Models

Autoregressive models predict future values based on past observations.

They model sequential dependency explicitly and are widely used in:

- Time series forecasting

- Language modeling

- Signal processing
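To make "predict future values based on past observations" concrete, here is a minimal sketch of a classical linear AR(p) model fitted by least squares. The helper names `fit_ar` and `predict_next` and the toy series are assumptions for illustration; neural autoregressive models follow the same idea with a learned non-linear predictor.

```python
import numpy as np


def fit_ar(series, p):
    """Least-squares fit of x_t = c + a_1 * x_{t-1} + ... + a_p * x_{t-p}."""
    series = np.asarray(series, dtype=float)
    # The row for time t holds [1, x_{t-1}, ..., x_{t-p}].
    rows = [np.concatenate(([1.0], series[t - p:t][::-1]))
            for t in range(p, len(series))]
    design = np.array(rows)
    targets = series[p:]
    coef, *_ = np.linalg.lstsq(design, targets, rcond=None)
    return coef  # [c, a_1, ..., a_p]


def predict_next(series, coef):
    """One-step-ahead forecast from the last p observations (p >= 1)."""
    p = len(coef) - 1
    lags = np.asarray(series[-p:], dtype=float)[::-1]  # most recent first
    return float(coef[0] + lags @ coef[1:])


# Toy series generated by x_t = 1 + 0.5 * x_{t-1}, starting at 0.
series = [0.0]
for _ in range(19):
    series.append(1.0 + 0.5 * series[-1])

coef = fit_ar(series, p=1)
print(coef)                        # close to [1.0, 0.5]
print(predict_next(series, coef))
```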

Autoencoder vs Autoregressive Approaches

| Aspect | Autoencoder | Autoregressive |
| --- | --- | --- |
| Objective | Reconstruction | Prediction |
| Data flow | Parallel | Sequential |
| Generative control | Implicit | Explicit |
| Training speed | Faster | Slower |

Each approach serves different modeling needs.

When to Use Autoencoders

Autoencoders are effective when:

- Labels are unavailable

- Compression is needed

- Noise reduction is required

- Feature extraction is the goal

When Autoregressive Models Perform Better

Autoregressive models excel in:

- Sequential prediction

- Language generation

- Time-dependent forecasting

Choosing the correct approach depends on task objectives.

Autoencoders in Machine Learning Pipelines

Autoencoders are often used as preprocessing layers.

They enhance:

- Downstream classifier performance

- Feature quality

- Model robustness

Mathematical Intuition Behind Autoencoders

At a mathematical level, an autoencoder learns a function that maps input data to itself through a constrained intermediate representation. This constraint forces the network to discard irrelevant information while retaining meaningful structure.

The encoder learns a function:

f(x) = h

The decoder learns a function:

g(h) = x̂

Where:

- x is the original input

- h is the latent representation

- x̂ is the reconstructed output

The optimization objective minimizes the reconstruction error between x and x̂.

This process makes autoencoders powerful non-linear generalizations of classical dimensionality reduction techniques.
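Written out with the symbols defined above, the objective can be stated as follows. The dataset size N and the generic per-sample loss 𝓛 are notation introduced here for illustration; mean squared error is shown as one common choice.

```latex
\hat{x} = g\bigl(f(x)\bigr), \qquad
\min_{f,\,g} \; \frac{1}{N} \sum_{i=1}^{N} \mathcal{L}\bigl(x_i,\; g(f(x_i))\bigr), \qquad
\mathcal{L}_{\mathrm{MSE}}(x, \hat{x}) = \lVert x - \hat{x} \rVert_2^2
```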

Autoencoders vs Principal Component Analysis

Although both autoencoders and PCA aim to reduce dimensionality, their capabilities differ significantly.

Key differences include:

- PCA is linear, while autoencoders model non-linear relationships

- PCA has a closed-form solution, while autoencoders require iterative training

- Autoencoders scale better to complex data such as images and text

- PCA components are orthogonal, while autoencoder representations are not constrained to be

In practice, autoencoders often outperform PCA on high-dimensional, non-linear datasets.
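The closed-form, linear, and orthogonal character of PCA is easy to see in code. The sketch below computes PCA from a singular value decomposition and uses it as an encoder and decoder pair; the function names are illustrative. A linear autoencoder trained with mean squared error is known to recover the same subspace, though not necessarily the same orthogonal axes, which is why non-linear activations are what set autoencoders apart.

```python
import numpy as np


def pca_fit(x, k):
    """Closed-form PCA via SVD. Returns the data mean and k orthonormal components."""
    mean = x.mean(axis=0)
    _, _, vt = np.linalg.svd(x - mean, full_matrices=False)
    return mean, vt[:k]


def pca_encode(x, mean, components):
    """Project centred data onto the principal components."""
    return (x - mean) @ components.T


def pca_decode(h, mean, components):
    """Map low-dimensional codes back to the input space."""
    return h @ components + mean


rng = np.random.default_rng(0)
# Stand-in data: 50 points lying exactly on a 2-D plane inside a 5-D space.
x = rng.normal(size=(50, 2)) @ rng.normal(size=(2, 5))

mean, components = pca_fit(x, k=2)
codes = pca_encode(x, mean, components)
x_hat = pca_decode(codes, mean, components)

print(codes.shape)                 # (50, 2)
print(np.max(np.abs(x - x_hat)))   # near zero, since the data is truly 2-D and linear
```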

Undercomplete vs Overcomplete Autoencoders

Undercomplete autoencoders restrict the latent space to fewer dimensions than the input, enforcing compression.

Overcomplete autoencoders allow larger latent spaces but rely on regularization to prevent identity mapping.

Regularization techniques include:

- Sparsity constraints

- Weight decay

- Noise injection

- Contractive penalties

The choice depends on data complexity and learning goals.

Contractive Autoencoders Explained

Contractive autoencoders penalize sensitivity of the latent representation to input changes.

This encourages robustness and smoothness in representation learning.

Applications include:

- Robust feature extraction

- Stability under noisy inputs

- Improved generalization

They are especially useful when data is subject to measurement error.
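As a sketch of what "penalizing sensitivity" can look like, the function below computes the squared Frobenius norm of the encoder's Jacobian, assuming a single sigmoid encoder layer h = sigmoid(Wx + b). That architecture and the name `contractive_penalty` are assumptions for illustration. In training, this value would be added to the reconstruction loss with a weighting factor.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def contractive_penalty(x, W, b):
    """Mean over the batch of ||dh/dx||_F^2 for h = sigmoid(x @ W.T + b).

    x: (n, d) inputs, W: (k, d) encoder weights, b: (k,) biases.
    For a sigmoid layer, dh_j/dx_i = h_j * (1 - h_j) * W[j, i].
    """
    h = sigmoid(x @ W.T + b)            # (n, k)
    dh = h * (1.0 - h)                  # sigmoid derivative per unit, (n, k)
    row_norms = np.sum(W ** 2, axis=1)  # squared norm of each weight row, (k,)
    return float(np.mean((dh ** 2) @ row_norms))


# At x = 0 with zero bias, every unit sits at h = 0.5, so dh = 0.25.
x0 = np.zeros((1, 2))
W0 = np.eye(2)
b0 = np.zeros(2)
print(contractive_penalty(x0, W0, b0))  # 0.125
```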

Autoencoders for Dimensionality Reduction in Big Data

In big data environments, traditional methods struggle with scale and complexity.

Autoencoders provide:

- Scalable compression

- Distributed learning capability

- Online and incremental learning

- Reduced storage and transmission costs

Industries handling massive sensor or log data benefit significantly.

Autoencoders in Cybersecurity

Cybersecurity systems often rely on anomaly detection.

Autoencoders learn normal system behavior and flag deviations.

Use cases include:

- Network intrusion detection

- Malware behavior analysis

- Authentication anomaly detection

They are particularly effective in environments with limited labeled attack data.

Autoencoders in Healthcare and Bioinformatics

Medical data is high-dimensional and noisy.

Autoencoders assist in:

- Medical image reconstruction

- Gene expression analysis

- Disease subtype discovery

- Patient risk stratification

Latent representations often reveal clinically meaningful patterns.

Autoencoders in Speech and Audio Processing

Speech signals contain redundant and noisy components.

Autoencoders enable:

- Noise reduction

- Speech enhancement

- Feature extraction for recognition systems

- Audio compression

They improve robustness in real-world audio environments.

Latent Space Interpretation and Visualization

Understanding latent space behavior is critical.

Common techniques include:

- t-SNE or UMAP visualization

- Latent traversal analysis

- Clustering latent embeddings

Well-structured latent spaces indicate effective learning.

Autoencoder Regularization Techniques

Regularization prevents trivial identity mapping.

Popular approaches include:

- Dropout

- L1 and L2 penalties

- Kullback–Leibler divergence

- Noise-based regularization

Proper regularization balances compression and reconstruction quality.

Training Stability and Optimization Challenges

Autoencoder training can suffer from:

- Vanishing gradients

- Overfitting

- Posterior collapse in variational variants

- Poor convergence

Solutions include careful initialization, batch normalization, and learning rate scheduling.

Autoencoders in Semi-Supervised Learning

Autoencoders often serve as pretraining models.

Workflow:

- Train autoencoder on unlabeled data

- Use encoder outputs as features

- Train supervised model on labeled subset

This approach improves performance when labeled data is scarce.

Relationship Between Autoencoders and Self-Supervised Learning

Autoencoders are an early form of self-supervised learning.

They generate their own training signal through reconstruction.

Modern self-supervised techniques extend this idea using:

- Contrastive objectives

- Masked prediction

- Multi-view learning

Autoencoders laid the foundation for these methods.

Autoencoders and Representation Transfer

Learned representations can be transferred across tasks.

Benefits include:

- Faster convergence

- Reduced training cost

- Improved generalization

Transfer learning using autoencoders is common in industrial applications.

Performance Evaluation Metrics

Beyond reconstruction error, evaluation may include:

- Downstream task performance

- Latent space clustering quality

- Anomaly detection precision

- Compression ratio

Evaluation should align with deployment goals.

Autoencoders in Edge and Embedded Systems

Resource-constrained environments require efficient models.

Autoencoders enable:

- On-device anomaly detection

- Data compression before transmission

- Reduced bandwidth usage

Lightweight architectures are often deployed at the edge.

Ethical and Practical Considerations

While powerful, autoencoders can:

- Encode biases present in data

- Obscure decision logic

- Leak sensitive information through reconstruction

Responsible deployment requires careful data governance.

Autoencoders for Multimodal Learning

Modern applications increasingly rely on data from multiple modalities such as text, images, audio, and sensor streams. Autoencoders are well-suited for learning joint representations across different data types.

In multimodal autoencoders, separate encoders process each modality and merge representations into a shared latent space. The decoder reconstructs each modality from this unified representation.

Key benefits include:

- Cross-modal representation alignment

- Improved robustness to missing data

- Enhanced generalization across modalities

This approach is widely used in healthcare diagnostics, autonomous systems, and recommendation engines.
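The structure described above can be sketched with untrained NumPy weights. The modality names, the sizes, and the choice to merge codes by averaging are all assumptions for illustration; concatenation or learned fusion layers are equally plausible merge strategies.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical modalities and feature sizes.
dims = {"image": 16, "audio": 10}
latent_dim = 4

# One encoder and one decoder matrix per modality (random, untrained).
enc = {m: rng.normal(scale=0.1, size=(d, latent_dim)) for m, d in dims.items()}
dec = {m: rng.normal(scale=0.1, size=(latent_dim, d)) for m, d in dims.items()}


def encode_shared(inputs):
    """Average the per-modality codes of whichever modalities are present."""
    codes = [np.tanh(x @ enc[m]) for m, x in inputs.items()]
    return np.mean(codes, axis=0)


def decode_all(z):
    """Reconstruct every modality from the shared latent code."""
    return {m: z @ dec[m] for m in dims}


batch = {"image": rng.normal(size=(3, 16)), "audio": rng.normal(size=(3, 10))}
z = encode_shared(batch)
recon = decode_all(z)

# With a missing modality, the shared code is built from what is available.
z_image_only = encode_shared({"image": batch["image"]})
recon_from_image = decode_all(z_image_only)
print(recon_from_image["audio"].shape)  # (3, 10)
```

Averaging makes the shared code well defined for any subset of modalities, which is one way to get the robustness to missing data listed above.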

Cross-Domain Representation Learning

Autoencoders enable knowledge transfer across domains by learning domain-invariant features.

Examples include:

- Training on simulated data and deploying on real-world systems

- Transferring representations from one geographic region to another

- Adapting industrial models across different machines

Cross-domain autoencoders reduce data labeling costs and improve scalability.

Autoencoders in Industrial Internet of Things

Industrial environments generate continuous streams of sensor data. Autoencoders help manage this data efficiently.

Applications include:

- Predictive maintenance

- Fault detection in machinery

- Energy consumption optimization

- Process quality monitoring

Autoencoders can operate continuously and adapt to changing conditions.

Autoencoders for Feature Selection

High-dimensional datasets often contain irrelevant or redundant features.

Autoencoders do not pick a subset of the original columns; instead, they perform an implicit, learned form of feature selection by:

- Compressing input data

- Highlighting dominant patterns

- Reducing noise and redundancy

This improves downstream model performance and interpretability.

Autoencoders in Graph and Network Data

Graph autoencoders extend traditional architectures to relational data.

Use cases include:

- Social network analysis

- Link prediction

- Knowledge graph embedding

- Fraud detection in transaction networks

Graph-based autoencoders learn structure-aware embeddings.
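A minimal sketch of one well-known formulation, assuming a single graph-convolution layer as the encoder and an inner-product decoder that scores every node pair. The article does not commit to a specific design, so the function names and the toy graph are illustrative.

```python
import numpy as np


def normalize_adjacency(a):
    """Symmetrically normalise A + I, so each node also aggregates its own features."""
    a_self = a + np.eye(a.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a_self.sum(axis=1))
    return a_self * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def gae_forward(a, x, w):
    """Encode nodes with one graph-convolution layer, decode edges by inner product."""
    z = normalize_adjacency(a) @ x @ w         # node embeddings, shape (n, k)
    a_hat = 1.0 / (1.0 + np.exp(-(z @ z.T)))   # predicted edge probabilities, (n, n)
    return z, a_hat


# Toy graph: a path 0 - 1 - 2 - 3 with one-hot node features.
adjacency = np.array([[0, 1, 0, 0],
                      [1, 0, 1, 0],
                      [0, 1, 0, 1],
                      [0, 0, 1, 0]], dtype=float)
features = np.eye(4)
rng = np.random.default_rng(0)
weights = rng.normal(scale=0.5, size=(4, 2))  # untrained weights

z, a_hat = gae_forward(adjacency, features, weights)
print(z.shape, a_hat.shape)  # (4, 2) (4, 4)
```

Training would adjust `weights` so that `a_hat` matches the observed adjacency matrix, which is what makes the embeddings useful for link prediction.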

Autoencoders in Recommender Systems at Scale

Large-scale recommendation systems use autoencoders to model user-item interactions.

Benefits include:

- Capturing latent preferences

- Handling sparse interaction matrices

- Supporting collaborative filtering

Autoencoders scale effectively with distributed training.

Autoencoders for Data Imputation

Missing values are common in real-world datasets.

Autoencoders reconstruct missing features by learning correlations between variables.

Applications include:

- Healthcare records

- Financial transaction logs

- Sensor networks

When variables are strongly correlated, this approach can outperform simple techniques such as mean or median imputation.

Comparison of Autoencoder Variants

| Variant | Primary Purpose | Typical Use Case |
| --- | --- | --- |
| Denoising | Noise robustness | Signal restoration |
| Sparse | Feature disentanglement | Interpretability |
| Variational | Generative modeling | Data synthesis |
| Contractive | Stability | Robust representation |
| Convolutional | Spatial learning | Image analysis |

Understanding these variants helps in architectural selection.

Autoencoders and Contrastive Learning

Modern self-supervised learning techniques build upon autoencoder principles.

Contrastive learning focuses on learning representations by distinguishing similar and dissimilar samples rather than reconstructing inputs.

Key differences:

- Autoencoders reconstruct data

- Contrastive methods learn invariance

- Hybrid approaches combine both

Such hybrid models have reported strong results in representation learning.

Energy-Based Autoencoders

Energy-based models interpret reconstruction error as an energy function.

Low energy indicates familiar patterns, while high energy signals anomalies.

Applications include:

- Security monitoring

- System health diagnostics

- Quality control

Energy-based approaches offer interpretability advantages.

Autoencoders in Financial Risk Modeling

Financial institutions use autoencoders to analyze complex risk patterns.

Applications include:

- Credit risk assessment

- Market anomaly detection

- Fraudulent transaction identification

Latent representations reveal hidden financial behaviors.

Autoencoders and Model Explainability

Explainability remains a challenge.

Interpretability techniques include:

- Feature attribution analysis

- Latent neuron visualization

- Reconstruction sensitivity analysis

Understanding representations improves trust and governance.

Training Autoencoders at Scale

Large-scale training introduces challenges.

Best practices include:

- Distributed training frameworks

- Mixed-precision computation

- Efficient batching strategies

- Monitoring latent drift

Scalability is essential for enterprise deployment.

Autoencoders in Online Learning Systems

In dynamic environments, data evolves over time.

Online autoencoders adapt incrementally, supporting:

- Real-time anomaly detection

- Continuous learning

- Drift adaptation

They are essential for streaming data scenarios.

Autoencoders and Continual Learning

Continual learning aims to avoid catastrophic forgetting, where a model loses earlier knowledge as it trains on new data.

Autoencoders support this by:

- Preserving latent representations

- Regularizing parameter updates

- Supporting replay mechanisms

This is critical for long-lived systems.

Autoencoders in Robotics and Control

Robotic systems rely on sensory data processing.

Autoencoders help:

- Compress sensory inputs

- Detect abnormal behavior

- Support adaptive control

They enable efficient perception and decision-making.

Security Considerations in Autoencoder Deployment

Autoencoders can be vulnerable to adversarial manipulation.

Risks include:

- Adversarial reconstruction attacks

- Model inversion

- Latent space exploitation

Robust training and access control mitigate risks.

Evaluating Latent Space Quality

Beyond reconstruction accuracy, latent space evaluation includes:

- Cluster separation

- Semantic consistency

- Stability across runs

Good latent spaces improve downstream performance.

Hybrid Autoencoder-Autoregressive Architectures

Combining autoencoders with autoregressive models leverages strengths of both.

Examples include:

- Latent space encoding followed by sequential prediction

- Noise reduction before autoregressive forecasting

- Generative pipelines with latent conditioning

These hybrids are common in speech, video, and time series modeling.

Extended Industry Case Study

A manufacturing plant deployed autoencoders for equipment monitoring.

Results included:

- Reduced unplanned downtime

- Early fault detection

- Improved maintenance scheduling

This demonstrates real-world business impact.

Research Trends and Open Challenges

Current research explores:

- Disentangled latent spaces

- Multimodal generative modeling

- Better interpretability

- Reduced training complexity

These challenges define future directions.

Future Directions of Autoencoder Research

Ongoing research explores:

- Hybrid autoencoder-autoregressive models

- Disentangled representation learning

- Energy-based autoencoders

- Multimodal autoencoders

These directions aim to improve interpretability and control.

Extended Example: Autoencoder for Anomaly Detection

Workflow overview:

- Normalize input features

- Train autoencoder on normal data

- Compute reconstruction error

- Set anomaly threshold

- Monitor deviations

This approach is widely used in production monitoring systems.
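The five steps above can be sketched end to end on synthetic data. Everything specific here is an assumption made for illustration: the stand-in dataset, the linear autoencoder trained with plain gradient descent, the learning rate, and the 99th-percentile threshold. A production system would substitute its own data, architecture, and threshold policy.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in data: "normal" points lie near a 2-D plane in 6-D space.
mixing = rng.normal(size=(2, 6))
normal = rng.normal(size=(500, 2)) @ mixing + 0.05 * rng.normal(size=(500, 6))
anomalies = rng.normal(scale=5.0, size=(10, 6))  # points far off that plane

# 1. Normalize input features using statistics from normal data only.
mean, std = normal.mean(axis=0), normal.std(axis=0)


def normalize(x):
    return (x - mean) / std


x_train = normalize(normal)

# 2. Train a linear autoencoder (6 -> 2 -> 6) on normal data.
w_enc = rng.normal(scale=0.1, size=(6, 2))
w_dec = rng.normal(scale=0.1, size=(2, 6))
lr, n = 0.01, len(x_train)
for _ in range(500):
    h = x_train @ w_enc
    err = h @ w_dec - x_train                # reconstruction residual
    grad_dec = (2.0 / n) * (h.T @ err)
    grad_enc = (2.0 / n) * (x_train.T @ (err @ w_dec.T))
    w_dec -= lr * grad_dec
    w_enc -= lr * grad_enc


# 3. Compute the reconstruction error per sample.
def reconstruction_error(x):
    x_hat = x @ w_enc @ w_dec
    return np.mean((x - x_hat) ** 2, axis=1)


# 4. Set the anomaly threshold from the errors seen on normal data.
threshold = np.percentile(reconstruction_error(x_train), 99)

# 5. Monitor deviations: flag new points whose error exceeds the threshold.
flags = reconstruction_error(normalize(anomalies)) > threshold
print(int(flags.sum()), "of", len(flags), "synthetic anomalies flagged")
```

A percentile threshold fixes the false-alarm rate on normal data at roughly one percent by construction; the right trade-off depends on the cost of missed anomalies versus false alarms.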

How Autoencoders Complement Autoregressive Models

In practice, autoencoders and autoregressive models are often combined.

Examples include:

- Autoencoder-based feature extraction with autoregressive forecasting

- Latent space modeling followed by sequence prediction

- Noise reduction before autoregressive modeling

This hybrid approach improves robustness and performance.

Practical Implementation Overview

Typical workflow includes:

- Data normalization

- Architecture design

- Loss selection

- Model training

- Latent evaluation

Common Mistakes and Pitfalls

- Over-compression

- Insufficient regularization

- Poor loss selection

- Ignoring data distribution

Best Practices for Training Autoencoders

- Use validation reconstruction error

- Monitor latent space behavior

- Apply regularization techniques

- Scale input features properly

Final Takeaways

Autoencoder architectures have become foundational tools in modern deep learning. By enabling unsupervised representation learning, they unlock value from unlabeled data and support tasks ranging from compression to anomaly detection.

Understanding how autoencoder models differ from autoregressive approaches helps practitioners select the right tool for the right problem. Used appropriately, autoencoders can serve as a building block for scalable learning systems.

FAQs

Are autoencoders a form of representation learning?

Yes, autoencoders are a form of representation learning, as they automatically learn compact, meaningful features from data by encoding and reconstructing inputs.

Is an autoencoder better than PCA?

Autoencoders can outperform PCA when data relationships are nonlinear and complex, while PCA is simpler and more effective for linear patterns and smaller datasets.

What is the main purpose of an autoencoder?

The main purpose of an autoencoder is to learn efficient, compressed representations of data by encoding inputs and reconstructing them, enabling tasks like dimensionality reduction, denoising, and anomaly detection.

Are autoencoders AI?

Yes, autoencoders are part of artificial intelligence, specifically within machine learning and deep learning, as they learn patterns and representations from data automatically.

What are some real-world applications of autoencoders?

Autoencoders are used in image denoising, anomaly detection, data compression, recommendation systems, and feature extraction, helping systems learn efficient representations from complex data.