In today’s digital-first world, businesses rely heavily on data-driven decision-making. But raw data is rarely clean, consistent, or reliable. This is where data profiling steps in as a game-changer.

Data profiling is not just about checking what’s inside a dataset—it’s about understanding its structure, content, and quality to unlock hidden insights and improve trust in data. From business intelligence dashboards to AI-driven models, every system depends on high-quality data, and data profiling makes that possible.

In this blog, we’ll dive deep into what data profiling is, explore its importance, methodologies, and best practices, and look at real-world applications that show its transformative power.

Understanding the Concept of Data Profiling

At its core, data profiling is the process of analyzing, reviewing, and summarizing datasets to assess their quality, accuracy, and consistency.

It involves examining data at multiple levels—whether within a single column, across rows, or between multiple tables. Think of it as a data health check-up before the data is put into production systems like ETL pipelines, machine learning models, or reporting dashboards.

Example: A retail company runs data profiling on its customer database and discovers that 7% of entries have missing phone numbers and 3% contain invalid email formats. Identifying this early prevents poor customer engagement campaigns.
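A check like the one in this example can be sketched in a few lines of Python. The sample records, field names, and the simplified email pattern below are illustrative assumptions, not data from a real customer database.

```python
import re

# Hypothetical sample records; a real profile would read from the customer database.
customers = [
    {"id": 1, "phone": "555-0100", "email": "ana@example.com"},
    {"id": 2, "phone": None, "email": "bob@example"},
    {"id": 3, "phone": "", "email": "cy@example.org"},
    {"id": 4, "phone": "555-0103", "email": "dee@example.net"},
]

# Deliberately simple pattern (something@something.tld), not a full RFC 5322 validator.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def missing_ratio(rows, field):
    """Share of rows where the field is None or blank."""
    missing = sum(1 for r in rows if not (r.get(field) or "").strip())
    return missing / len(rows)


def invalid_email_ratio(rows, field="email"):
    """Share of non-empty emails that do not match the simple pattern."""
    values = [r[field] for r in rows if r.get(field)]
    invalid = sum(1 for v in values if not EMAIL_RE.match(v))
    return invalid / len(values) if values else 0.0


print(f"missing phone: {missing_ratio(customers, 'phone'):.0%}")
print(f"invalid email: {invalid_email_ratio(customers):.0%}")
```

Reporting ratios rather than raw counts makes the numbers comparable across datasets of different sizes.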

Why Data Profiling is Important in Modern Businesses

Businesses operate in a data-driven economy. If the data is incomplete, inconsistent, or inaccurate, the resulting decisions can cost millions.

Key reasons why data profiling matters

- Ensures high-quality input for analytics and AI models.

- Improves data governance and compliance with regulations like GDPR and HIPAA.

- Reduces ETL pipeline failures caused by unexpected data formats.

- Helps organizations build trust in their data assets.

Key Objectives of Data Profiling

The main goals include:

- Detecting anomalies like null values, duplicates, or outliers.

- Assessing completeness of data records.

- Verifying conformity with defined rules or business standards.

- Measuring accuracy against external trusted sources.

- Supporting metadata management for better data documentation.

Types of Data Profiling

- Column Profiling – Examines values in a single column to calculate distributions, min/max values, and frequency.

- Cross-Column Profiling – Checks dependencies across multiple columns (e.g., “if age < 18, employment status should not be full-time”).

- Cross-Table Profiling – Ensures consistency across related tables by validating foreign keys and joins.

- Rule-Based Profiling – Applies custom business rules to validate correctness (e.g., postal codes must follow a country’s format).

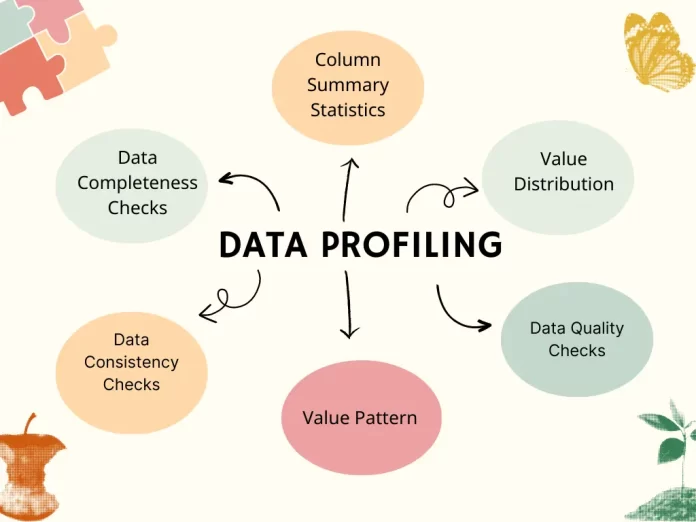
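To make the cross-column and cross-table types concrete, here is a minimal sketch. The two tables, their column names, and the helper functions are hypothetical and exist only for illustration.

```python
# Hypothetical tables represented as lists of dicts.
people = [
    {"person_id": 1, "age": 34, "employment": "full-time"},
    {"person_id": 2, "age": 16, "employment": "full-time"},  # violates the rule
    {"person_id": 3, "age": 17, "employment": "student"},
]
orders = [
    {"order_id": 10, "person_id": 1},
    {"order_id": 11, "person_id": 4},  # no matching person
]


def cross_column_violations(rows):
    """Rows where age < 18 but employment status is full-time."""
    return [r for r in rows if r["age"] < 18 and r["employment"] == "full-time"]


def orphan_foreign_keys(child_rows, parent_rows, key):
    """Child rows whose key value has no match in the parent table."""
    parent_keys = {r[key] for r in parent_rows}
    return [r for r in child_rows if r[key] not in parent_keys]


print(cross_column_violations(people))
print(orphan_foreign_keys(orders, people, "person_id"))
```

On real tables the same checks are usually expressed as SQL anti-joins, but the logic is the same.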

Core Techniques Used in Data Profiling

- Pattern recognition (e.g., email addresses should match a pattern like name@domain.com).

- Statistical summaries (mean, median, standard deviation).

- Outlier detection for unusual data points.

- Data type validation (ensuring dates, integers, or strings follow the right format).
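The statistical, outlier, and type-validation techniques above can be sketched with Python's standard library. The sample values are made up, and the interquartile-range (IQR) rule is just one common way to flag outliers, chosen here as an assumption.

```python
import statistics
from datetime import datetime


def summarize(values):
    """Basic statistical summary of a numeric column."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),
    }


def iqr_outliers(values, k=1.5):
    """Values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


def is_iso_date(text):
    """True if text is a valid calendar date in YYYY-MM-DD form."""
    try:
        datetime.strptime(text, "%Y-%m-%d")
        return True
    except ValueError:
        return False


order_totals = [20, 22, 19, 21, 23, 20, 250]  # hypothetical column
print(summarize(order_totals))
print(iqr_outliers(order_totals))
print(is_iso_date("2024-02-30"))
```

Note how the single extreme value pulls the mean far away from the median, which is itself a useful profiling signal.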

Data Profiling vs Data Quality

Though closely related, they’re not the same:

- Data Profiling → Discovery and assessment of data.

- Data Quality → The state of the data that profiling measures: how reliable, accurate, and complete it is.

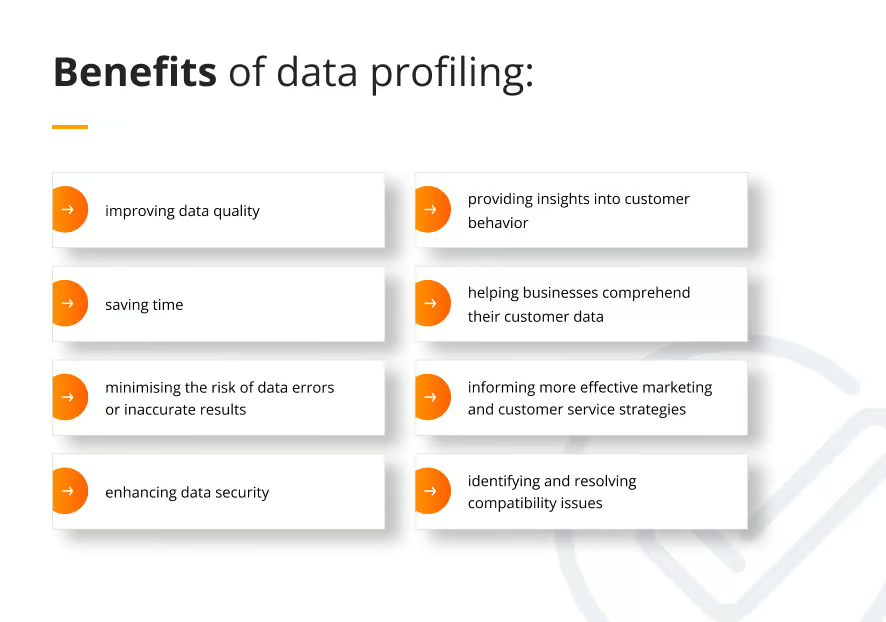

Benefits of Data Profiling

- Enhances decision-making accuracy.

- Identifies and fixes hidden data issues early.

- Reduces risks of compliance violations.

- Saves time in ETL pipeline development.

- Builds organizational trust in analytics and reporting.

Real-World Applications of Data Profiling

- Healthcare: Ensuring patient records are complete and accurate to avoid misdiagnoses.

- Finance: Detecting fraud by identifying anomalies in transaction records.

- E-commerce: Cleaning product catalogs for accurate search results and recommendations.

- Telecom: Validating customer records before network migrations.

- Retail: Cleansing product catalogs for better recommendations.

- Government: Ensuring census data integrity.

Challenges in Data Profiling

- Large-scale data volumes in big data pipelines.

- Unstructured data formats like images or text.

- Dynamic datasets that change in real time.

- Integration complexities across multiple platforms.

Best Practices for Implementing Data Profiling

- Start with business rules tied to outcomes.

- Automate profiling as part of ETL workflows.

- Use data visualization to interpret anomalies.

- Collaborate with both technical and business stakeholders.

Data Profiling in ETL and Data Warehousing

In ETL, data profiling helps:

- Define transformation rules.

- Prevent pipeline failures.

- Ensure data is ready for warehousing and analytics.

Tools and Technologies for Data Profiling

- Open-source tools: Talend Open Studio, Apache Griffin

- Enterprise tools: Informatica Data Quality, IBM InfoSphere

- Cloud-based tools: Google Cloud Dataprep, AWS Glue DataBrew

Role of Machine Learning and AI in Data Profiling

ML enhances profiling by:

- Automating anomaly detection.

- Predicting missing values intelligently.

- Learning data patterns to continuously improve validation rules.

Data Profiling and Regulatory Compliance

With regulations like GDPR, HIPAA, and CCPA, data profiling ensures organizations meet requirements for:

- Data accuracy.

- Consent-driven data usage.

- Audit trails and reporting.

Future of Data Profiling in Big Data and AI

As data grows exponentially, future profiling will involve:

- Real-time profiling with streaming data (Kafka, Spark).

- AI-driven automation for anomaly detection.

- Integration with data governance frameworks for enterprise trust.

Real-World Example

A global e-commerce platform uses data profiling to validate shipping addresses across millions of records. Profiling revealed that 12% of international addresses were incomplete, which caused shipping delays. After fixing the issue, delivery success rates improved by 18%.

Data Profiling in Data Mesh and Data Fabric Architectures

- With enterprises shifting to data mesh and data fabric, data profiling is no longer centralized—it’s domain-driven.

- Each business unit owns its data pipelines and must embed profiling within its own microservices.

- Profiling in data fabric ecosystems helps ensure semantic consistency across hybrid multi-cloud systems.

Integration of Data Observability with Profiling

- Modern profiling is merging into data observability platforms like Monte Carlo, Bigeye, and Acceldata.

- Profiling metrics (null ratios, drift, schema mismatches) become part of end-to-end observability dashboards.

- This allows proactive detection of issues like data drift and pipeline breakages before they hit downstream AI systems.

Augmented Data Profiling with Generative AI

- LLMs (Large Language Models) are being used to auto-generate profiling summaries for non-technical stakeholders.

- Example: Instead of reading 200-column profiling reports, business users can query:

“Which columns have the most missing data in the customer dataset?”

- AI then provides human-readable insights directly from profiling results.

Streaming Data Profiling for Real-Time Systems

- Traditional profiling worked on batch data, but IoT, fintech, and e-commerce require real-time profiling.

- Tools like Apache Flink + Debezium enable profiling on event streams to catch anomalies in milliseconds.

- Example: Fraud detection systems profile transaction flows in real-time to spot unusual payment behaviors.
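The core idea of profiling a stream is to keep running statistics instead of rescanning the data. Below is a minimal sketch in plain Python using Welford's online algorithm. It does not use Flink or Debezium, and the class name, thresholds, and payment values are assumptions for illustration.

```python
import math


class RunningProfile:
    """Keeps count, mean, and variance of a stream using Welford's algorithm."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, value):
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (value - self.mean)

    @property
    def stdev(self):
        return math.sqrt(self._m2 / (self.count - 1)) if self.count > 1 else 0.0

    def is_anomalous(self, value, z_threshold=3.0, warmup=5):
        """Flag a value far from the running mean; never flag during warm-up."""
        if self.count < warmup or self.stdev == 0:
            return False
        return abs(value - self.mean) / self.stdev > z_threshold


def flag_stream(values):
    """Check each event against the profile so far, then fold it into the profile."""
    profile = RunningProfile()
    flagged = []
    for v in values:
        if profile.is_anomalous(v):
            flagged.append(v)
        profile.update(v)
    return flagged


payments = [42.0, 39.5, 41.0, 40.2, 43.1, 38.9, 950.0, 41.7]  # hypothetical events
print(flag_stream(payments))
```

In this sketch a flagged value is still added to the running statistics. A production system might exclude it or use a sliding window so that one spike does not distort later checks.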

Semantic Data Profiling

- Goes beyond syntactic checks (format, type, nulls) → looks at semantic meaning.

- Example: A column labeled “date_of_birth” should not contain dates in the future, such as the year 2050.

- This ensures business rule consistency, not just technical validation.
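A semantic check on the date_of_birth example might look like the sketch below. The 120-year upper bound and the function name are assumptions; the right limits depend on the business context.

```python
from datetime import date


def semantic_dob_issues(values, today=None, max_age_years=120):
    """Return (value, reason) pairs for birth dates that are valid dates but implausible."""
    today = today or date.today()
    issues = []
    for v in values:
        if v > today:
            issues.append((v, "in the future"))
        elif today.year - v.year > max_age_years:
            issues.append((v, "implausibly old"))
    return issues


births = [date(1990, 5, 17), date(2050, 1, 1), date(1850, 3, 2)]  # hypothetical column
for value, reason in semantic_dob_issues(births, today=date(2025, 1, 1)):
    print(value, reason)
```

All three values pass a syntactic date check, which is exactly why the semantic layer is needed.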

Profiling in Privacy-Preserving Data Management

- With differential privacy and federated learning, profiling must avoid exposing sensitive patterns.

- Advanced profiling tools now use privacy-aware algorithms to summarize datasets without leaking PII.

- Example: Healthcare profiling tools anonymize patient-level data but still provide aggregate insights.

Data Profiling and Explainable AI (XAI)

- Profiling is being integrated into explainability workflows for AI models.

- If a model is biased, profiling helps identify data imbalance (e.g., 80% male / 20% female in training).

- Profiling reports can be used as model audit documentation for regulators.

Automated Data Contracts with Profiling

- Profiling now contributes to data contracts between producers and consumers.

- Example: A finance team guarantees that transaction_id will always be unique and numeric.

- Profiling continuously validates these contracts, triggering alerts when violations occur.
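The transaction_id guarantee above can be expressed as a small contract check. This is a sketch under the assumption that IDs arrive as strings; the function name and sample rows are hypothetical.

```python
def check_transaction_id_contract(rows, field="transaction_id"):
    """Return a list of violation messages; an empty list means the contract holds."""
    violations = []
    seen = set()
    for i, row in enumerate(rows):
        value = row.get(field)
        text = str(value)
        # Numeric here means ASCII digits only.
        if not (text.isascii() and text.isdigit()):
            violations.append(f"row {i}: {value!r} is not numeric")
        if value in seen:
            violations.append(f"row {i}: {value!r} is duplicated")
        seen.add(value)
    return violations


transactions = [
    {"transaction_id": "1001"},
    {"transaction_id": "1002"},
    {"transaction_id": "1001"},  # duplicate
    {"transaction_id": "TX-7"},  # not numeric
]
for message in check_transaction_id_contract(transactions):
    print("ALERT:", message)
```

Running a check like this on every new batch is what turns a written agreement into an enforced one.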

Profiling for Data Lakehouses (Databricks, Snowflake)

- As lakehouses unify structured + unstructured data, profiling must handle:

- JSON, Parquet, and Avro formats.

- Semi-structured attributes like nested schemas.

- Open-source data quality tools such as Great Expectations and Soda Core can run profiling and validation checks directly against lakehouse tables.

Advanced Metrics Beyond Nulls and Duplicates

Next-gen profiling measures include:

- Entropy and cardinality ratios for detecting skewed distributions.

- Drift detection between historical and current datasets.

- Referential integrity scores across multi-source data.

- Outlier clustering using ML-based density estimation.
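Two of these metrics, entropy and cardinality ratio, are simple enough to compute by hand. The sketch below uses Shannon entropy in bits; the sample columns are invented for illustration.

```python
import math
from collections import Counter


def shannon_entropy(values):
    """Entropy in bits; low values mean a few categories dominate the column."""
    counts = Counter(values)
    total = len(values)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def cardinality_ratio(values):
    """Distinct values divided by total values (1.0 means every value is unique)."""
    return len(set(values)) / len(values)


balanced = ["a", "b", "c", "d"]
skewed = ["a"] * 97 + ["b", "c", "d"]

print(shannon_entropy(balanced), cardinality_ratio(balanced))
print(shannon_entropy(skewed), cardinality_ratio(skewed))
```

Both columns contain the same four categories, but the skewed one has much lower entropy, which a plain distinct count would not reveal.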

Case Study: Netflix Data Profiling

- Netflix handles petabytes of user interaction data.

- Data profiling is automated using Apache Spark + custom ML rules.

- Profiling ensures:

- Accurate personalization (e.g., avoiding duplicate movie IDs).

- Real-time consistency checks for recommendation engines.

The Role of Blockchain in Data Profiling

- Blockchain-based metadata registries ensure tamper-proof profiling reports.

- Example: In financial audits, regulators can trust that profiling metrics weren’t manipulated by internal teams.

Cross-Domain Data Profiling for Multi-Cloud Environments

- Modern enterprises operate across AWS, Azure, and Google Cloud.

- Data profiling tools must now support federated queries across clouds without moving data.

- Example: A retail company running sales on AWS Redshift and supply chain on Google BigQuery needs unified profiling to maintain data quality across both.

Graph-Based Data Profiling

- Traditional profiling is tabular, but data today is also graph-based.

- Profiling on graph databases (Neo4j, TigerGraph) checks:

- Node connectivity ratios.

- Orphan nodes.

- Relationship anomalies.

- Example: In fraud detection graphs, profiling can reveal “unusually isolated nodes,” which may be fake accounts.
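The orphan-node and connectivity checks can be illustrated without a graph database. The sketch below uses plain Python sets rather than the Neo4j or TigerGraph APIs, and the account IDs are hypothetical.

```python
def orphan_nodes(nodes, edges):
    """Nodes that appear in no relationship at all."""
    connected = set()
    for source, target in edges:
        connected.add(source)
        connected.add(target)
    return sorted(n for n in nodes if n not in connected)


def connectivity_ratio(nodes, edges):
    """Share of nodes with at least one relationship."""
    return 1 - len(orphan_nodes(nodes, edges)) / len(nodes)


accounts = ["acct_1", "acct_2", "acct_3", "acct_4"]
transfers = [("acct_1", "acct_2"), ("acct_2", "acct_3")]

print(orphan_nodes(accounts, transfers))
print(connectivity_ratio(accounts, transfers))
```

In a real graph database the same questions would be asked in the database's query language, but the metrics themselves stay this simple.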

Automated Metadata Enrichment through Profiling

- Profiling results can feed into data catalogs (Collibra, Alation, DataHub).

- Example: Instead of manually tagging “customer_id” as a primary key, profiling auto-detects uniqueness and updates catalog metadata.
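Detecting primary-key candidates comes down to checking that a column is fully populated and unique. Here is a minimal sketch with made-up rows. Pushing the result into a catalog is left out because that step depends on the specific catalog's API.

```python
def candidate_keys(rows):
    """Columns that are fully populated and unique across all rows."""
    if not rows:
        return []
    keys = []
    for column in rows[0]:
        values = [r.get(column) for r in rows]
        if None not in values and len(set(values)) == len(values):
            keys.append(column)
    return keys


customers = [
    {"customer_id": 1, "country": "US", "email": "a@example.com"},
    {"customer_id": 2, "country": "US", "email": "b@example.com"},
    {"customer_id": 3, "country": "DE", "email": None},
]
print(candidate_keys(customers))
```

Uniqueness in a sample only suggests a key; it does not prove one, so the result is best treated as a tag proposal for a human to confirm.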

Integration with MLOps Pipelines

- Profiling is now a pre-deployment stage in MLOps workflows.

- Before training an ML model, profiling ensures:

- No label leakage.

- Balanced class distributions.

- Consistent schema across training & inference data.

- Example: An e-commerce ML model for predicting customer churn fails if profiling doesn’t catch missing subscription_end_date.
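Two of the pre-training checks above, class balance and schema consistency, can be sketched as follows. The column names echo the churn example, the helper names are hypothetical, and label leakage is not covered here because detecting it usually needs domain knowledge.

```python
from collections import Counter


def class_balance(labels):
    """Share of each label in the training set."""
    counts = Counter(labels)
    total = len(labels)
    return {label: n / total for label, n in counts.items()}


def schema_diff(train_row, inference_row):
    """Columns present on one side only, or whose Python types differ."""
    train_cols, inf_cols = set(train_row), set(inference_row)
    return {
        "missing_at_inference": sorted(train_cols - inf_cols),
        "unexpected_at_inference": sorted(inf_cols - train_cols),
        "type_mismatch": sorted(
            c for c in train_cols & inf_cols
            if type(train_row[c]) is not type(inference_row[c])
        ),
    }


labels = ["stay"] * 8 + ["churn"] * 2
train_row = {"tenure_months": 12, "subscription_end_date": "2024-06-30", "plan": "pro"}
inference_row = {"tenure_months": "12", "plan": "pro"}

print(class_balance(labels))
print(schema_diff(train_row, inference_row))
```

Here the diff surfaces both the missing subscription_end_date and a silent type change in tenure_months before either reaches the model.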

Data Profiling in Industry-Specific Regulations

- Healthcare (HIPAA): Profiling ensures no PHI (Protected Health Information) leaks into analytics environments.

- Banking (Basel III, AML): Profiling checks ensure consistent transaction categorization to prevent fraud.

- Retail (GDPR, CCPA): Profiling helps detect PII columns that need anonymization before analytics.

Temporal Data Profiling

- Goes beyond static profiling → focuses on time-evolution of datasets.

- Example: Profiling sales data across months to detect seasonality anomalies (e.g., sudden drops in December sales that should usually peak).

- Useful for time-series forecasting validation.
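One simple way to catch the December example is to compare each month with the same month in earlier years. The sketch below assumes a 30% tolerance and uses invented sales figures.

```python
def seasonal_anomalies(history, current, tolerance=0.3):
    """Months where the current value deviates from the historical average
    for that month by more than the tolerance (as a fraction)."""
    flagged = {}
    for month, value in current.items():
        past = history.get(month)
        if not past:
            continue  # no baseline for this month
        baseline = sum(past) / len(past)
        change = (value - baseline) / baseline
        if abs(change) > tolerance:
            flagged[month] = round(change, 2)
    return flagged


# Hypothetical monthly sales: previous years versus the current year.
history = {"Nov": [100, 110], "Dec": [180, 200]}
current = {"Nov": 108, "Dec": 95}

print(seasonal_anomalies(history, current))
```

Comparing like with like (December against past Decembers) avoids flagging normal seasonal swings as anomalies.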

Collaborative & Crowdsourced Data Profiling

- New tools allow teams to annotate profiling results collaboratively.

- Example: A marketing analyst marks “campaign_code” as invalid in one dataset → that feedback is shared across the entire data team.

- Reduces repeated effort in large enterprises.

Cognitive Data Profiling with NLP

- Uses Natural Language Processing (NLP) to automatically interpret column names & descriptions.

- Example: Column “dob” gets auto-tagged as date_of_birth and checked for valid age ranges.

- Helpful when working with unstructured legacy datasets.

Event-Driven Profiling with Data Quality Alerts

- Instead of scheduled jobs, profiling can be triggered by events.

- Example: If a new file lands in a data lake, profiling kicks in instantly → raises alerts if 20% of rows are blank.

- Used in real-time monitoring pipelines.
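The handler side of this pattern can be sketched as below. How the event is delivered (for example, a storage notification) is outside the sketch, and the function names, threshold, and sample file are assumptions.

```python
import csv
import io


def blank_row_ratio(csv_text):
    """Share of data rows (header excluded) in which every cell is empty."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    data = rows[1:]
    if not data:
        return 0.0
    blank = sum(1 for r in data if all(not cell.strip() for cell in r))
    return blank / len(data)


def on_file_landed(csv_text, threshold=0.2, alert=print):
    """Hypothetical event handler: profile the new file and alert on too many blanks."""
    ratio = blank_row_ratio(csv_text)
    if ratio >= threshold:
        alert(f"{ratio:.0%} of rows are blank (threshold {threshold:.0%})")
    return ratio


sample = "id,name,city\n1,Ana,Lima\n,,\n2,Bo,Oslo\n,,\n3,Cy,Rome\n"
on_file_landed(sample)
```

Passing the alert function in as a parameter keeps the profiling logic separate from whatever notification channel the team uses.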

Integration with Synthetic Data Generation

- Profiling is now linked with synthetic data engines.

- If sensitive values are found, profiling tools can trigger synthetic data replacements for testing purposes.

- Example: A healthcare team profiles patient data → automatically replaces PHI with synthetic values for model testing.

Quantum Data Profiling (Research Stage)

- With quantum computing, massive data profiling tasks could be accelerated.

- Quantum algorithms could help identify anomalies in petabyte-scale datasets faster than classical systems.

- Still experimental, but early prototypes exist in IBM Q and Google Quantum AI labs.

Case Study: Uber’s Data Profiling Evolution

- Uber built its own profiling system, Data Quality Monitor (DQM).

- Automatically profiles ride, trip, and GPS datasets.

- Detects issues like missing coordinates or duplicate trip IDs in real time.

- Ensures smooth operation for millions of daily rides.

Future Trends in Data Profiling

- Profiling + Auto-Remediation → AI-driven fixes, not just detection.

- Profiling as a Service → Cloud-native APIs for instant integration.

- Profiling for IoT Data → Handling sensor streams with billions of records daily.

- Explainable Profiling → Providing human-readable explanations of data anomalies.

Future of Data Profiling – Self-Healing Data Pipelines

- Profiling is moving toward self-healing systems:

- Detect → Fix → Learn → Improve.

- Example: If profiling finds 3% of addresses missing ZIP codes, ML-based rules can auto-correct by referencing external APIs (like Google Maps).
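The detect-and-fix part of this loop can be sketched as follows. A small in-memory lookup table stands in for the external API, which is a strong simplification: a real city usually has many ZIP codes, so an actual service would need the full street address. All names here are hypothetical.

```python
# Hypothetical stand-in for an external geocoding service.
ZIP_LOOKUP = {("Springfield", "IL"): "62701", ("Austin", "TX"): "78701"}


def heal_missing_zip(rows, lookup=ZIP_LOOKUP):
    """Fill missing ZIP codes where the lookup has an answer, and report what happened."""
    fixed, unresolved = 0, 0
    healed = []
    for row in rows:
        row = dict(row)  # copy so the input stays untouched
        if not row.get("zip"):
            guess = lookup.get((row.get("city"), row.get("state")))
            if guess:
                row["zip"] = guess
                row["zip_inferred"] = True  # mark inferred values for later audit
                fixed += 1
            else:
                unresolved += 1
        healed.append(row)
    return healed, {"fixed": fixed, "unresolved": unresolved}


addresses = [
    {"city": "Austin", "state": "TX", "zip": ""},
    {"city": "Springfield", "state": "IL", "zip": "62704"},
    {"city": "Nowhere", "state": "ZZ", "zip": None},
]
healed, report = heal_missing_zip(addresses)
print(report)
```

Marking inferred values and counting unresolved ones keeps the automated fix auditable, which matters once corrections happen without a human in the loop.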

Conclusion

Data profiling is no longer optional—it is the foundation of reliable, trustworthy, and actionable data. Whether it’s for AI model accuracy, business intelligence dashboards, or compliance, the ability to understand and improve data quality defines success in the digital economy.

By integrating automation, AI, and governance frameworks, organizations can ensure their data assets are not only usable but also future-ready.