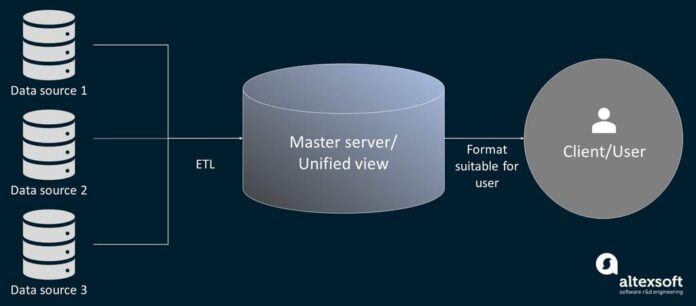

Every organization today relies on data to make decisions, improve customer experiences, and drive growth. However, data often comes from multiple sources—databases, applications, APIs, IoT devices, and cloud platforms. Without a proper mechanism to process, clean, and unify this information, businesses face inconsistent insights and delayed decision-making.

That’s where ETL comes in. By extracting, transforming, and loading data into a centralized system, ETL ensures businesses have clean, structured, and usable data for analytics and reporting.

What is ETL? Breaking Down Extract, Transform, Load

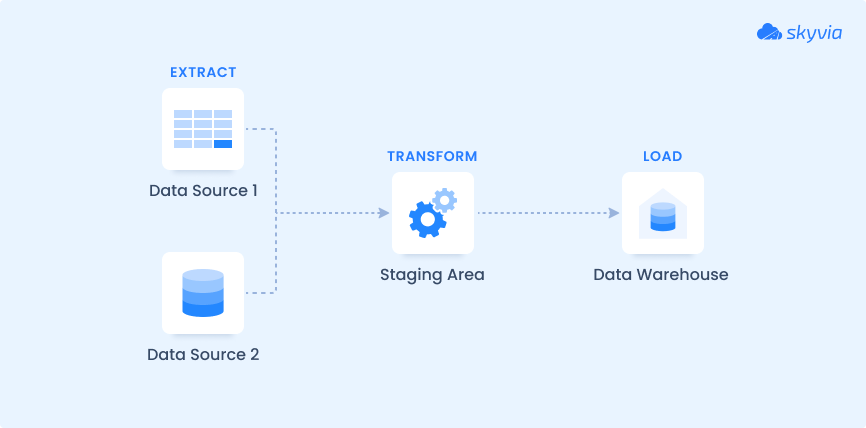

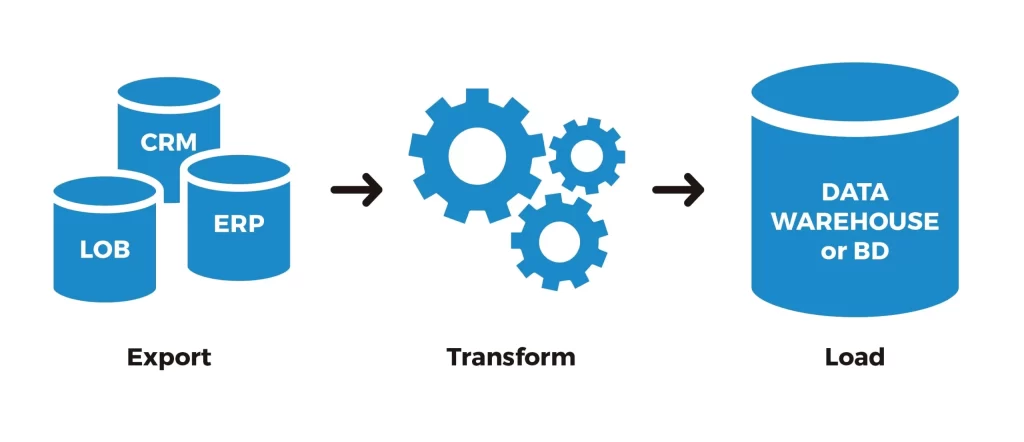

ETL stands for:

- Extract – Pulling data from multiple sources such as databases, CRMs, flat files, APIs, or streaming platforms.

- Transform – Cleaning, enriching, and reformatting data to make it consistent, accurate, and analysis-ready.

- Load – Storing the transformed data into a data warehouse, data lake, or database for reporting and analytics.
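The three steps above can be sketched in a few lines of Python. This is a minimal illustration only: the CSV content, the `orders` table, and the function names are hypothetical, and an in-memory SQLite database stands in for a real warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this would be a file, API, or database.
RAW_CSV = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,carol,not_a_number
"""

def extract(text):
    """Extract: read rows from a CSV source into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: normalize names, parse amounts, drop rows that fail parsing."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows whose amount is not numeric
        clean.append((int(row["order_id"]), row["customer"].strip().lower(), amount))
    return clean

def load(rows, conn):
    """Load: write the cleaned rows into a target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
```

Keeping each step in its own function makes it possible to test and replace the steps independently, for example swapping the CSV reader for an API client.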

Evolution of ETL: From Legacy Systems to Modern Cloud Platforms

Originally, ETL was a batch processing technique where data was processed in scheduled intervals (e.g., nightly jobs). Today, ETL has evolved into real-time and cloud-native pipelines, making it suitable for fast-moving industries like eCommerce, banking, and healthcare.

Transition milestones:

- 1980s–1990s: ETL used for simple database migrations.

- 2000s: Widespread adoption of enterprise ETL tools (Informatica, IBM DataStage) feeding enterprise data warehouses.

- 2010s: Cloud-based ETL platforms (AWS Glue, Google Cloud Dataflow, Azure Data Factory).

- 2020s: ETL integrated with AI/ML for predictive analytics and real-time streaming ETL.

Core Components of ETL

Extract

- Pulls data from structured, semi-structured, and unstructured sources.

- Sources: SQL databases, JSON/XML files, APIs, IoT sensors, etc.

Transform

- Cleans, validates, and standardizes data.

- Examples: Removing duplicates, handling missing values, applying business rules, joining multiple datasets.
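As a rough sketch of these transformations, the function below applies all four to a small list of records. The field names, the default value, and the "gold" spend threshold are assumptions made up for illustration.

```python
def transform_customers(customers, countries):
    """Apply four common transformations to a list of customer dicts."""
    seen = set()
    result = []
    for record in customers:
        # Remove duplicates: keep the first record seen for each id.
        if record["id"] in seen:
            continue
        seen.add(record["id"])
        # Handle missing values: fall back to a default country code.
        code = record.get("country") or "UNKNOWN"
        # Join with a second dataset: look up the country name by code.
        country_name = countries.get(code, "Unknown")
        # Apply a business rule (hypothetical): spend of 1000 or more is "gold".
        tier = "gold" if record.get("spend", 0) >= 1000 else "standard"
        result.append({"id": record["id"], "country": country_name, "tier": tier})
    return result

customers = [
    {"id": 1, "country": "DE", "spend": 1500},
    {"id": 1, "country": "DE", "spend": 1500},  # duplicate
    {"id": 2, "country": None, "spend": 200},   # missing country
]
countries = {"DE": "Germany"}
cleaned = transform_customers(customers, countries)
```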

Load

- Inserts transformed data into a target system like Snowflake, Redshift, BigQuery, or Hadoop.

- Modes: Full Load (overwrite entire dataset) or Incremental Load (only new/updated records).
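The difference between the two load modes can be illustrated with SQLite as a stand-in target. The `products` table, the `updated_at` column, and the use of a last-loaded timestamp as the change marker are assumptions for this sketch; real systems may rely on change data capture or version columns instead.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)")

def full_load(conn, rows):
    """Full load: discard the existing table contents and reload everything."""
    conn.execute("DELETE FROM products")
    conn.executemany("INSERT INTO products VALUES (?, ?, ?)", rows)
    conn.commit()

def incremental_load(conn, rows, last_loaded_at):
    """Incremental load: upsert only rows changed after the last load time."""
    # ISO-8601 date strings compare correctly as plain strings.
    changed = [r for r in rows if r[2] > last_loaded_at]
    conn.executemany("INSERT OR REPLACE INTO products VALUES (?, ?, ?)", changed)
    conn.commit()
    return len(changed)

full_load(conn, [(1, "pen", "2024-01-01"), (2, "ink", "2024-01-01")])
source_now = [
    (1, "pen", "2024-01-01"),
    (2, "blue ink", "2024-01-03"),  # updated record
    (3, "pad", "2024-01-02"),       # new record
]
changed_count = incremental_load(conn, source_now, last_loaded_at="2024-01-01")
final_rows = conn.execute("SELECT * FROM products ORDER BY id").fetchall()
```

Only two of the three source rows are written in the incremental run, which is where the efficiency gain comes from on large tables.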

Why is ETL Important in Data Management?

ETL plays a crucial role because it:

- Provides a single source of truth for organizations.

- Enhances data quality by standardizing and cleaning raw inputs.

- Improves decision-making with faster, accurate insights.

- Enables scalability for analytics, AI, and reporting platforms.

ETL vs ELT: Understanding the Difference

While ETL has been the standard for decades, modern systems introduced ELT (Extract, Load, Transform).

- ETL: Transform happens before loading into the warehouse. Best for traditional warehouses.

- ELT: Data is loaded first and then transformed within the warehouse. Best for cloud-native systems like Snowflake or BigQuery.
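To make the ELT ordering concrete, the sketch below loads raw records into a staging table first and then cleans and aggregates them with SQL inside the target. SQLite is only a stand-in for a cloud warehouse here, and the table names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for a cloud warehouse

# Load: raw records go into a staging table untouched.
conn.execute("CREATE TABLE raw_sales (region TEXT, amount TEXT)")
conn.executemany(
    "INSERT INTO raw_sales VALUES (?, ?)",
    [(" north", "10.5"), ("NORTH ", "4.5"), ("south", "7")],
)

# Transform: cleaning and aggregation run inside the target system as SQL.
conn.execute(
    """
    CREATE TABLE sales_by_region AS
    SELECT LOWER(TRIM(region)) AS region,
           SUM(CAST(amount AS REAL)) AS total
    FROM raw_sales
    GROUP BY LOWER(TRIM(region))
    """
)
totals = conn.execute(
    "SELECT region, total FROM sales_by_region ORDER BY region"
).fetchall()
```

In an ETL design the trimming, casting, and summing would instead happen in application code before the insert.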

Popular ETL Tools in the Market

- Apache NiFi – Open-source, real-time ETL tool.

- Talend – Known for enterprise-level data integration.

- Informatica PowerCenter – Legacy but still dominant in large corporations.

- AWS Glue – Serverless ETL tool integrated with Amazon’s ecosystem.

- Google Cloud Dataflow – Cloud-native ETL with streaming support.

Real-Time Examples of ETL in Action

- Banking: Fraud detection by consolidating transaction data.

- Healthcare: Patient record integration across hospitals.

- Retail: Customer purchase history for targeted promotions.

- Travel: Flight data processing for dynamic pricing models.

Advantages of Using ETL for Businesses

- Improves data reliability

- Enhances compliance with regulations (GDPR, HIPAA)

- Boosts analytics efficiency

- Reduces manual intervention

- Supports scalability in cloud environments

Best Practices for Building an ETL Pipeline

- Define clear data requirements.

- Automate ETL pipelines to reduce errors.

- Monitor ETL jobs with logging and alerts.

- Use incremental loads for efficiency.

- Ensure security with encryption and access controls.

ETL in Big Data and Cloud Environments

With the rise of Hadoop, Spark, and cloud warehouses, ETL pipelines can now handle petabyte-scale data, in batch or in near real time. Cloud ETL platforms provide elastic scaling and pay-as-you-go pricing, which suits both startups and enterprises.

ETL in Machine Learning and AI Workflows

ETL prepares high-quality training datasets by:

- Extracting raw logs and sensor data.

- Cleaning anomalies and missing values.

- Loading into ML frameworks like TensorFlow or PyTorch.

Common Use Cases of ETL

- Customer analytics dashboards

- Supply chain optimization

- Marketing campaign tracking

- Financial compliance reporting

- IoT data processing

ETL Pipeline Architecture Explained

An ETL pipeline isn’t just about moving data. It’s a well-structured architecture designed for scalability, efficiency, and resilience.

Key components include:

- Source Layer – Data comes from CRMs, ERPs, logs, APIs, IoT devices.

- Staging Layer – Temporary storage before transformation.

- Transformation Layer – Data cleaning, aggregation, enrichment.

- Destination Layer – Final storage in a data warehouse or data lake.

- Orchestration Layer – Tools like Apache Airflow or Luigi schedule and manage workflows.

- Monitoring Layer – Tracks performance, errors, and latency.

ETL in Real-Time Streaming vs Batch Processing

ETL has two main approaches:

- Batch ETL – Data is processed in bulk at scheduled times.

- Example: Retail companies process daily sales every night.

- Streaming ETL – Data is processed continuously in near real-time.

- Example: Uber processes driver and rider GPS updates instantly for pricing.
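The contrast between the two approaches can be sketched with plain Python. The record shape is hypothetical, and a real streaming pipeline would read from a message broker rather than an in-memory iterator.

```python
def batch_etl(records):
    """Batch: collect the full dataset, then process it in one pass."""
    return sum(r["amount"] for r in records)

def streaming_etl(source):
    """Streaming: handle each record as it arrives, yielding a running total."""
    running = 0.0
    for record in source:
        running += record["amount"]
        yield running

sales = [{"amount": 10.0}, {"amount": 2.5}, {"amount": 7.5}]
nightly_total = batch_etl(sales)
running_totals = list(streaming_etl(iter(sales)))
```

The batch function produces one answer after all data is available, while the streaming generator produces an up-to-date answer after every record.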

Security and Compliance in ETL

Since ETL handles sensitive business data, security is critical.

Best practices:

- Encrypt data in transit and at rest.

- Implement role-based access control (RBAC).

- Ensure compliance with regulations:

- GDPR (EU Data Protection)

- HIPAA (Healthcare Data)

- PCI-DSS (Financial Transactions)

Common Mistakes to Avoid in ETL Projects

Even experienced teams fall into traps. Avoid:

- Overcomplicating transformations (keep it simple).

- Ignoring error handling → leads to pipeline failures.

- Poor documentation → makes debugging much harder.

- Hardcoding configurations instead of using metadata-driven pipelines.

- Skipping data quality checks → garbage in, garbage out.

ETL Performance Optimization Techniques

For large-scale systems, ETL can get slow and costly. Here’s how to optimize:

- Use parallel processing to speed up transformations.

- Implement partitioning and indexing in target databases.

- Reduce I/O by compressing data before loading.

- Leverage in-memory processing with Apache Spark.

- Schedule ETL jobs during low traffic windows to avoid performance bottlenecks.
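As one illustration of the parallel processing point, the sketch below splits rows into partitions and transforms them concurrently. It uses threads from the standard library, which mainly helps when the work is I/O-bound; CPU-heavy transformations would more likely use processes or an engine such as Spark. The partition size and the transformation are arbitrary choices for the example.

```python
from concurrent.futures import ThreadPoolExecutor

def transform_partition(rows):
    """Transform one partition independently of the others."""
    return [row.strip().upper() for row in rows]

def partition(rows, size):
    """Split rows into fixed-size chunks so each can be processed separately."""
    return [rows[i:i + size] for i in range(0, len(rows), size)]

rows = [" a", "b ", " c ", "d", "e "]
with ThreadPoolExecutor(max_workers=2) as pool:
    # map() preserves partition order, so results can be concatenated directly.
    parts = list(pool.map(transform_partition, partition(rows, 2)))
transformed = [row for part in parts for row in part]
```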

ETL vs Data Virtualization vs API Integration

Sometimes ETL isn’t the only choice. Businesses must decide between:

- ETL → Best for structured, historical analysis.

- Data Virtualization → Real-time queries across multiple systems without moving data.

- API Integration → Connects SaaS platforms for operational workflows.

ETL Testing and Quality Assurance

Before putting ETL pipelines into production, testing ensures accuracy.

Types of ETL Testing:

- Data Completeness Testing – Ensure no records are lost.

- Data Transformation Testing – Verify transformations apply correctly.

- Performance Testing – Ensure large datasets load within time limits.

- Regression Testing – Confirm updates don’t break existing workflows.
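Completeness and transformation checks can be expressed as small functions that compare source and target rows. The sketch below assumes both sides fit in memory and share a key column; the row shapes and the lowercase-and-trim rule are hypothetical.

```python
def check_completeness(source_rows, target_rows, key):
    """Completeness: return the keys present in the source but missing in the target."""
    return sorted({r[key] for r in source_rows} - {r[key] for r in target_rows})

def check_transformation(source_rows, target_rows, key, rule):
    """Transformation: return keys whose target row differs from rule(source row)."""
    target_by_key = {r[key]: r for r in target_rows}
    return [
        r[key] for r in source_rows
        if r[key] in target_by_key and rule(r) != target_by_key[r[key]]
    ]

def expected_row(r):
    """Hypothetical transformation rule: names are trimmed and lowercased."""
    return {"id": r["id"], "name": r["name"].strip().lower()}

source = [{"id": 1, "name": " Ann "}, {"id": 2, "name": "Bo"}, {"id": 3, "name": "Cy"}]
target = [{"id": 1, "name": "ann"}, {"id": 2, "name": "Bo"}]

missing_ids = check_completeness(source, target, "id")
mismatched_ids = check_transformation(source, target, "id", expected_row)
```

Here record 3 never reached the target, and record 2 was loaded without the lowercase rule applied, so each check reports one problem.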

ETL for Data Science & Machine Learning

ETL isn’t just for BI teams. Data scientists also depend on it:

- Prepares feature engineering datasets.

- Handles time-series data preprocessing for forecasting.

- Loads clean data into ML pipelines.

Example: A predictive maintenance system uses ETL to extract sensor logs, transform them into feature-rich datasets, and load them into an ML training framework.
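A small sketch of the transform step in such a system might compute rolling averages from raw sensor readings. The window size, the handling of missing readings, and the feature layout are assumptions for illustration, not a description of any particular system.

```python
from collections import deque

def rolling_mean_features(readings, window=3):
    """Turn raw sensor readings into (reading, rolling mean) feature rows."""
    recent = deque(maxlen=window)  # keeps only the last `window` readings
    features = []
    for value in readings:
        if value is None:
            continue  # drop missing readings before computing features
        recent.append(value)
        features.append((value, sum(recent) / len(recent)))
    return features

sensor_log = [10.0, None, 20.0, 30.0, 40.0]
feature_rows = rolling_mean_features(sensor_log, window=3)
```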

Industry-Specific ETL Use Cases

- Retail: Customer 360° dashboards (integrating POS, CRM, and eCommerce).

- Healthcare: Centralizing EMRs (Electronic Medical Records).

- Finance: Risk modeling using ETL pipelines for credit scoring.

- Telecom: Call detail records (CDRs) processing for billing.

- Logistics: Supply chain optimization with real-time shipment tracking.

Future of ETL in AI-Driven Data Engineering

The next generation of ETL will be:

- AI-Augmented – Automated schema mapping and anomaly detection.

- Serverless ETL – Tools like AWS Glue reducing infrastructure costs.

- ETL + DataOps Integration – Agile workflows with CI/CD for data pipelines.

- Self-Service ETL – Business analysts building ETL pipelines without coding.

- Streaming-First ETL – Kafka and Spark Streaming powering real-time use cases.

Future of ETL: Trends & Predictions

- AI-powered ETL: Automated anomaly detection and schema mapping.

- Real-Time Streaming ETL: Faster decisions for industries like fintech and eCommerce.

- Low-Code/No-Code ETL: Democratizing ETL for business users.

- Integration with DataOps: Agile data engineering workflows.

The Role of Metadata in ETL

Metadata (data about data) is the backbone of ETL pipelines. It describes source structures, transformations, and target schemas.

Types of Metadata in ETL:

- Business Metadata – Business definitions, KPIs, glossaries.

- Technical Metadata – Source tables, columns, indexes, data types.

- Operational Metadata – Job logs, execution times, error rates.
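One way to picture how metadata drives a pipeline is a job whose column mapping is data rather than code, and which records its own run statistics. The mapping, column names, and log fields below are hypothetical.

```python
import time

# Technical metadata (hypothetical): source column -> (target column, type).
COLUMN_MAP = {
    "cust_nm": ("customer_name", str),
    "ord_amt": ("order_amount", float),
}

def apply_mapping(row, column_map):
    """Rename and cast fields according to the mapping instead of hardcoding them."""
    return {target: cast(row[source]) for source, (target, cast) in column_map.items()}

def run_job(rows, column_map):
    """Run the mapping and return the output plus operational metadata."""
    started = time.perf_counter()
    output = [apply_mapping(r, column_map) for r in rows]
    job_log = {
        "rows_in": len(rows),
        "rows_out": len(output),
        "seconds": time.perf_counter() - started,
    }
    return output, job_log

output, job_log = run_job([{"cust_nm": "Ann", "ord_amt": "12.50"}], COLUMN_MAP)
```

Adding a new column then means editing `COLUMN_MAP`, not the transformation code.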

ETL Workflow Automation

Modern organizations cannot rely on manual ETL runs. They need workflow automation.

Automation benefits:

- Schedules ETL jobs (e.g., daily, hourly, real-time).

- Reduces manual errors.

- Enables event-driven pipelines (e.g., when a new file arrives in storage).

Tools:

- Apache Airflow → Workflow orchestration.

- Luigi → Dependency management.

- Prefect → Cloud-native orchestration.
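At their core, these orchestrators run tasks in dependency order. The sketch below shows that idea using only Python's standard library (`graphlib`, available from Python 3.9); it is not the API of Airflow, Luigi, or Prefect, and the task names are placeholders.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

run_order = []

def make_task(name):
    """Return a stand-in task that records when it ran."""
    def task():
        run_order.append(name)
    return task

tasks = {name: make_task(name) for name in ("extract", "transform", "load", "report")}

# Each key lists the tasks that must finish before it can start.
dependencies = {
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"load"},
}

for name in TopologicalSorter(dependencies).static_order():
    tasks[name]()
```

Real orchestrators add scheduling, retries, and monitoring on top of this ordering logic.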

ETL in Cloud-Native Environments

Cloud has transformed ETL:

- AWS Glue → Serverless ETL with pay-as-you-go pricing.

- Google Cloud Dataflow → Handles batch + streaming pipelines.

- Azure Data Factory → Enterprise-grade orchestration.

ETL and ELT – Choosing the Right Strategy

Sometimes companies confuse ETL with ELT.

- ETL (Extract → Transform → Load) → Transformations happen before loading.

- ELT (Extract → Load → Transform) → Data is loaded first, then transformed inside a powerful data warehouse like Snowflake or BigQuery.

Use ETL when transformations are heavy or must happen before the data reaches the warehouse.

Use ELT when leveraging the scalability of modern cloud warehouses.

ETL Error Handling and Recovery Mechanisms

Data pipelines fail. Smart teams plan for it:

- Retry mechanisms – Auto-retry failed jobs.

- Error logging – Store faulty records for later review.

- Checkpointing – Restart pipeline from last successful stage.

- Dead-letter queues – Handle bad data without blocking the pipeline.
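Two of these mechanisms, retries and dead-letter handling, can be sketched as follows. The flaky source, the retry count, and the linear backoff are assumptions for the example; production pipelines would typically use a durable queue rather than an in-memory list for rejected records.

```python
import time

def with_retry(func, attempts=3, delay=0.0):
    """Call func, retrying on failure up to `attempts` times."""
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except Exception:
            if attempt == attempts:
                raise  # out of retries: surface the error
            time.sleep(delay * attempt)  # simple linear backoff

def process(records, transform):
    """Transform records; route failures to a dead-letter list instead of stopping."""
    good, dead_letter = [], []
    for record in records:
        try:
            good.append(transform(record))
        except (ValueError, TypeError) as exc:
            dead_letter.append({"record": record, "error": str(exc)})
    return good, dead_letter

calls = {"count": 0}

def flaky_extract():
    """Hypothetical source that fails twice before succeeding."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("source unavailable")
    return ["1", "2", "oops", "4"]

records = with_retry(flaky_extract)
good, dead_letter = process(records, int)
```

The bad record is kept with its error message for later review, and the remaining records still load.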

ETL Monitoring and Observability

Without monitoring, ETL is a black box.

Key metrics to track:

- Throughput (rows per second).

- Latency (time taken from source to destination).

- Error Rate (% of records rejected).

- Resource Utilization (CPU, memory, storage).

Tools: Prometheus, Grafana, Datadog for ETL observability.
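The first three metrics can be derived from a handful of counters collected during a run. The function below is a minimal sketch with hypothetical parameter names; in practice these values would be exported to a monitoring system rather than returned as a dictionary.

```python
def pipeline_metrics(rows_read, rows_rejected, started_at, finished_at):
    """Compute throughput, latency, and error rate for one pipeline run."""
    latency = finished_at - started_at  # seconds from start to finish
    loaded = rows_read - rows_rejected
    return {
        "throughput_rows_per_s": loaded / latency if latency > 0 else 0.0,
        "latency_s": latency,
        "error_rate_pct": 100.0 * rows_rejected / rows_read if rows_read else 0.0,
    }

metrics = pipeline_metrics(rows_read=1000, rows_rejected=25, started_at=0.0, finished_at=5.0)
```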

ETL in Big Data Ecosystems

ETL for big data is different from traditional ETL.

- Tools like Hadoop, Spark, and Hive handle petabyte-scale data.

- MapReduce-based ETL allows distributed transformations.

- Data Lakes (S3, HDFS, ADLS) serve as staging layers.

ETL in Data Warehousing vs Data Lakes

- Data Warehousing ETL → Structured, curated, cleaned data (Snowflake, Redshift, BigQuery).

- Data Lake ETL → Raw, unstructured, semi-structured data (logs, IoT, JSON files).

Many modern organizations use a Lakehouse model (Databricks) that combines both.

Low-Code and No-Code ETL Solutions

Not every team has data engineers. Many businesses now rely on low-code and no-code ETL tools.

Examples:

- Talend – Drag-and-drop ETL builder.

- Hevo Data – Fully automated pipeline.

- Stitch – Quick SaaS integration.

- Fivetran – Managed ELT with connectors.

These tools reduce complexity and let analysts build ETL workflows.

The Future of ETL: ETL + AI = Intelligent Data Pipelines

AI is reshaping ETL in three key ways:

- Automated schema mapping – AI detects relationships between sources and targets.

- Anomaly detection – ML models flag outliers in data pipelines.

- Self-healing ETL – Pipelines auto-correct issues without human intervention.

Challenges of ETL and How to Overcome Them

- Data Quality Issues → Solve with validation rules.

- Scalability Problems → Use cloud-native ETL pipelines.

- Latency in Real-Time Needs → Adopt streaming ETL.

- High Costs → Optimize with open-source tools.

Conclusion

ETL remains a cornerstone of modern data strategy, enabling organizations to turn raw, messy data into actionable intelligence. As businesses adopt cloud, AI, and big data, ETL will evolve but continue to play a key role in ensuring data quality, accessibility, and scalability. For organizations aiming to stay competitive, mastering ETL pipelines is no longer optional; it is essential.

FAQs

What is ETL in SQL?

ETL in SQL stands for Extract, Transform, Load, a process used to extract data from multiple sources, transform it into a usable format, and load it into a database or data warehouse for analysis and reporting.

What are the 5 steps of the ETL process?

The 5 steps of the ETL process are: Extracting data from multiple sources, Cleansing to remove errors or duplicates, Transforming it into the required format, Loading the processed data into a target system, and Validating to ensure accuracy and consistency.

What does ETL mean?

ETL means Extract, Transform, Load—a data integration process that collects data from different sources, transforms it into a consistent format, and loads it into a target database or data warehouse for analysis and reporting.

Which language is best for ETL?

The best language for ETL depends on the complexity of the data workflows and the environment. Python is the most popular choice today because of its rich libraries (Pandas, PySpark, SQLAlchemy) and ease of use for automation.

Is ETL related to AI?

Yes, ETL is related to AI because clean, well-structured data is the foundation of any AI or machine learning model. ETL processes extract raw data, transform it into usable formats, and load it into data warehouses or pipelines, ensuring that AI systems have high-quality, consistent data for training and analysis.