Machine learning models rely heavily on understanding patterns and relationships in data. Among the most intuitive and interpretable models are tree-based algorithms, which mimic the step-by-step way humans make decisions.

Tree algorithms are not just limited to one model. They encompass several types such as decision trees, random forests, gradient-boosted trees, and more. However, the foundation lies in understanding the basic structure: the decision tree.

What is a Decision Tree?

A decision tree is a supervised learning algorithm used for both classification and regression tasks. It breaks a dataset down into progressively smaller subsets while incrementally building the associated tree structure.

Each internal node represents a decision based on a feature, each branch represents the outcome of that decision, and each leaf node represents a final label or output.

Why Use Decision Trees in Machine Learning?

Decision trees offer various benefits:

- Interpretability: Easy to visualize and understand.

- Non-Linearity: Can capture non-linear relationships.

- Feature Importance: Automatically ranks features by importance.

- No Need for Feature Scaling: Unlike SVM or KNN.

Real-world example: Banks use decision trees to determine credit approval based on age, income, and credit score.

Components of a Decision Tree

- Root Node: The top node of the tree.

- Decision Nodes: Nodes that split the data.

- Leaf/Terminal Nodes: Nodes that represent the output.

- Branches: Connect nodes and show the flow of decisions.

from sklearn.tree import DecisionTreeClassifier

clf = DecisionTreeClassifier()

Types of Trees in Machine Learning

There are several trees used across machine learning algorithms:

- Binary Trees: Each node has two children.

- Multiway Trees: Nodes can have more than two children.

- Balanced Trees: All leaf nodes are at the same level.

- Unbalanced Trees: Leaf nodes are at varying levels.

- Regression Trees: Used when the target is continuous.

- Classification Trees: Used when the target is categorical.

Types of Decision Trees

Different decision tree models serve different purposes:

- ID3 (Iterative Dichotomiser 3): ID3 uses Information Gain to determine the best attribute to split on at each node. It works well for categorical data and creates compact trees. However, it may overfit on noisy datasets due to its greedy nature.

- C4.5: C4.5 improves on ID3 by using Gain Ratio instead of Information Gain. It handles both continuous and categorical attributes efficiently. C4.5 also prunes the tree after construction to reduce overfitting.

- CART (Classification and Regression Tree): CART can perform both classification and regression tasks. It uses Gini Impurity for classification and variance reduction for regression. Unlike ID3/C4.5, CART builds binary trees (each node has only two branches).

- CHAID: CHAID uses Chi-square statistical tests to determine the best splits. It is well-suited for multi-way splits, unlike binary-only trees. It is often used in marketing and social sciences due to its statistical robustness.

- Random Forest: Random Forest is an ensemble method combining multiple decision trees. It uses bagging (Bootstrap Aggregation) and random feature selection to improve accuracy. It is more resistant to overfitting than a single tree and works well with large datasets.

- Gradient Boosted Trees: Gradient Boosting builds trees sequentially, where each tree corrects the errors of the previous ones. It uses loss functions and gradient descent to minimize prediction error. It is extremely powerful for tasks requiring high prediction accuracy, though slower to train.

Decision Tree Splitting Techniques

Splitting is the process of dividing a node into two or more sub-nodes. It is based on certain criteria:

- Gini Index: Measures the impurity.

- Entropy and Information Gain: Based on information theory.

- Chi-Square: Statistical significance.

- Reduction in Variance: Used in regression trees.

Example:

clf = DecisionTreeClassifier(criterion="entropy")
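Reduction in variance, the regression criterion listed above, can be illustrated with a small worked example. This is only a sketch: the function names and the house-price numbers are hypothetical, and population variance is assumed.

```python
def variance(values):
    """Population variance of a list of numbers."""
    n = len(values)
    mean = sum(values) / n
    return sum((v - mean) ** 2 for v in values) / n


def variance_reduction(parent, left, right):
    """Parent variance minus the size-weighted variance of the two children."""
    n = len(parent)
    weighted = (len(left) * variance(left) + len(right) * variance(right)) / n
    return variance(parent) - weighted


# Hypothetical house prices (in thousands), split into two groups by some feature
parent = [100, 110, 120, 300, 310, 320]
left, right = parent[:3], parent[3:]

reduction = variance_reduction(parent, left, right)
print(round(reduction, 2))
```

A regression tree would compare this quantity across candidate splits and keep the split with the largest reduction.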

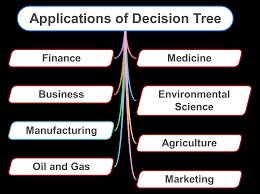

Real-World Applications of Decision Trees

Decision trees are widely used in industries due to their transparency:

- Healthcare: Diagnosing diseases based on symptoms.

- Finance: Fraud detection and loan eligibility.

- Marketing: Predicting customer churn.

- Retail: Product recommendation systems.

Example: E-commerce sites use decision trees to recommend products based on user behavior.

Advantages and Disadvantages

Pros:

- Simple to understand and interpret.

- Handles both numerical and categorical data.

- Requires little data preprocessing.

Cons:

- Prone to overfitting.

- Can be unstable with small variations in data.

- Biased with imbalanced datasets.

Decision Trees vs Other Algorithms

| Feature | Decision Tree | Logistic Regression | Neural Network |
| --- | --- | --- | --- |
| Interpretability | High | Medium | Low |
| Training Time | Fast | Medium | Slow |
| Handles Non-Linearity | Yes | No | Yes |
| Overfitting Risk | High | Low | Medium |

Visualizing Decision Trees with Python

Visualization improves interpretability. You can use libraries like graphviz or plot_tree from sklearn.

from sklearn.tree import plot_tree

import matplotlib.pyplot as plt

plot_tree(clf, filled=True)

plt.show()

How Does a Decision Tree Work?

A decision tree works by recursively splitting the dataset into subsets based on feature values that best separate the classes or minimize prediction error. The algorithm evaluates all features, selects the best possible split based on a mathematical criterion (like Gini or Entropy), and builds a hierarchical tree structure.

Process:

- Start with the entire dataset.

- Choose the best feature and threshold to split the data.

- Create two or more branches based on the split.

- Repeat the splitting process recursively on each branch (subtree).

- Stop when a stopping condition is met (max depth, minimum samples, pure node).

- Assign a final output label/value at the leaf nodes.

Key idea:

Each step of the tree reduces uncertainty and improves class separation.
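The "choose the best feature and threshold" step can be sketched for a single numeric feature. This is a minimal illustration that assumes Gini impurity as the criterion and midpoints between sorted values as candidate thresholds; the function names and the toy credit-score data are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_threshold(values, labels):
    """Return (threshold, weighted_gini) for the best binary split of one feature."""
    pairs = sorted(zip(values, labels))
    n = len(pairs)
    best = (None, gini(labels))  # baseline: no split, parent impurity
    for i in range(1, n):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # identical values cannot be separated by a threshold
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [label for _, label in pairs[:i]]
        right = [label for _, label in pairs[i:]]
        weighted = (len(left) * gini(left) + len(right) * gini(right)) / n
        if weighted < best[1]:
            best = (threshold, weighted)
    return best


# Hypothetical toy data: credit scores and loan outcomes
scores = [580, 610, 640, 700, 730, 760]
outcomes = ["reject", "reject", "reject", "approve", "approve", "approve"]

threshold, impurity = best_threshold(scores, outcomes)
print(threshold, impurity)
```

A full tree repeats this search over every feature at every node and keeps the split with the lowest weighted impurity.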

Training and Visualizing a Decision Tree

Training a Decision Tree

Using scikit-learn:

from sklearn.tree import DecisionTreeClassifier

clf = DecisionTreeClassifier(criterion="gini", max_depth=5)

clf.fit(X_train, y_train)
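The snippet above leaves `X_train` and `y_train` undefined. One way to make it runnable end to end is sketched below, assuming the Iris dataset bundled with scikit-learn as a stand-in for your own data; the split ratio and `random_state` values are arbitrary choices, and `features` and `classes` are simply names that a later plotting call can reuse.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Assumption: Iris is used purely as a convenient example dataset
iris = load_iris()
features = iris.feature_names
classes = list(iris.target_names)

# Hold out a quarter of the samples to check generalization
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.25, random_state=42, stratify=iris.target
)

clf = DecisionTreeClassifier(criterion="gini", max_depth=5, random_state=42)
clf.fit(X_train, y_train)

print("train accuracy:", clf.score(X_train, y_train))
print("test accuracy:", clf.score(X_test, y_test))
print("tree depth:", clf.get_depth())
```

A large gap between train and test accuracy is an early hint that the tree is overfitting.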

Visualizing the Tree

You can visualize using plot_tree or graphviz.

from sklearn.tree import plot_tree

import matplotlib.pyplot as plt

plt.figure(figsize=(12,8))

plot_tree(clf, filled=True, feature_names=features, class_names=classes)

plt.show()

Visualization helps understand:

- How decisions are made

- Which features matter the most

- Whether the model is overfitting

Information Gain and Gini Index in Decision Trees

Decision trees use impurity measures to select the best split.

Entropy & Information Gain

Used in ID3, C4.5, and sometimes CART.

Entropy

Measures impurity or disorder in a dataset.

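$$\text{Entropy} = -\sum_{i} p_i \log_2 p_i$$

where $p_i$ is the proportion of samples in the node that belong to class $i$.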

Lower entropy = purer node.

Information Gain

Measures how much entropy is reduced after splitting.

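$$\text{Information Gain} = \text{Entropy}(\text{parent}) - \sum_{i} \frac{n_i}{N}\,\text{Entropy}(\text{child}_i)$$

where $N$ is the number of samples in the parent node and $n_i$ is the number of samples in child $i$.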

The split with the highest information gain is chosen.
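A short worked example may help make the two formulas concrete. The sketch below assumes a hypothetical node with five "yes" and five "no" samples that is split into two children; the function names are illustrative only.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (base 2) of a list of class labels."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(parent, children):
    """Parent entropy minus the size-weighted entropy of the children."""
    n = len(parent)
    weighted = sum(len(child) / n * entropy(child) for child in children)
    return entropy(parent) - weighted


# Hypothetical node: 5 "yes" and 5 "no", split into two children
parent = ["yes"] * 5 + ["no"] * 5
left = ["yes"] * 4 + ["no"] * 1
right = ["yes"] * 1 + ["no"] * 4

print(round(entropy(parent), 4))
print(round(information_gain(parent, [left, right]), 4))
```

The parent is maximally mixed, so its entropy is 1 bit; the split leaves each child mostly one class, which is why the gain is positive.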

Gini Index

Used in CART (Classification and Regression Trees).

$$\text{Gini} = 1 - \sum_{i} p_i^2$$

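- Gini is slightly cheaper to compute because it avoids the logarithm.

- Gini tends to isolate the most frequent class in its own branch, while entropy tends to produce slightly more balanced trees. In practice the two often lead to similar splits.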
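To see how the two impurity measures behave side by side, the sketch below evaluates both on a few two-class distributions. The function names are illustrative, and the inputs are class proportions rather than raw labels.

```python
import math


def gini(probabilities):
    """Gini impurity from class proportions."""
    return 1.0 - sum(p ** 2 for p in probabilities)


def entropy(probabilities):
    """Shannon entropy (base 2) from class proportions; zero terms are skipped."""
    return sum(-p * math.log2(p) for p in probabilities if p > 0)


# Compare the measures as one class becomes more dominant
for p in (0.5, 0.7, 0.9, 1.0):
    dist = (p, 1 - p)
    print(f"p={p:.1f}  gini={gini(dist):.3f}  entropy={entropy(dist):.3f}")
```

Both measures peak when the classes are evenly mixed and fall to zero for a pure node; Gini only needs squares, whereas entropy needs a logarithm per class.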

Understanding Decision Tree with Real-Life Use Case

Use Case: Loan Approval in Banking

Banks use decision trees to automate loan approvals.

Features:

- Income

- Age

- Employment status

- Credit score

- Existing loans

Process:

- First split: credit score

- Second split: income

- Third split: employment status

Example decision:

- If credit score > 720 → Approve

- If credit score < 650 → Reject

- Else: check income & existing loans

Decision trees mimic human-like logical steps, making them ideal for risk assessment.
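The example decision above can be written as plain nested rules, which is effectively what a trained tree encodes. In this sketch the 720 and 650 credit-score cut-offs come from the example, while the income and existing-loan thresholds in the middle band are hypothetical placeholders.

```python
def loan_decision(credit_score, income, existing_loans):
    """Rule-based loan decision mirroring a small decision tree."""
    if credit_score > 720:
        return "approve"
    if credit_score < 650:
        return "reject"
    # Middle band (650 to 720): check income and existing loans.
    # Assumption: these two thresholds are invented for illustration.
    if income >= 50_000 and existing_loans <= 1:
        return "approve"
    return "reject"


print(loan_decision(credit_score=750, income=30_000, existing_loans=2))
print(loan_decision(credit_score=600, income=90_000, existing_loans=0))
print(loan_decision(credit_score=690, income=65_000, existing_loans=1))
```

A learned tree would derive such thresholds from historical data instead of having them written by hand.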

Industry Applications:

- Healthcare diagnosis

- Fraud detection

- Insurance risk scoring

- Customer segmentation

- Industrial quality checks

Decision Tree Terminologies

| Term | Meaning |
| --- | --- |
| Root Node | First node where the split begins |
| Split | Division of data based on a feature |
| Branch | Outcome of a split |
| Internal Node | Node that splits further |
| Leaf/Terminal Node | Final decision/output |
| Pruning | Reducing tree size to avoid overfitting |
| Impurity | Measure of randomness (Gini/Entropy) |
| Information Gain | Improvement from a split |

How Do Decision Tree Algorithms Work?

Decision tree algorithms follow a top-down, greedy approach known as Recursive Binary Splitting or Divide and Conquer.

Step-by-Step Working:

- Evaluate all features and compute impurity.

- Choose the feature producing the best split.

- Partition the dataset.

- Recurse on the partitions.

- Stop based on:

  - Max depth

  - Min samples per leaf

  - Pure nodes

- Perform pruning (optional).

Algorithms do NOT backtrack—they use a greedy strategy.
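The greedy, top-down procedure can be sketched in a few dozen lines. This is a simplified illustration rather than any library's implementation: it assumes numeric features, Gini impurity, binary splits at midpoints, and a dictionary-based tree; all names and the toy data are hypothetical, and pruning is omitted.

```python
from collections import Counter


def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows, labels, min_samples_leaf):
    """Greedy search over every feature and every midpoint threshold."""
    best = None  # (weighted_impurity, feature_index, threshold)
    n = len(rows)
    for feature in range(len(rows[0])):
        values = sorted({row[feature] for row in rows})
        for low, high in zip(values, values[1:]):
            threshold = (low + high) / 2
            left = [y for row, y in zip(rows, labels) if row[feature] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[feature] > threshold]
            if len(left) < min_samples_leaf or len(right) < min_samples_leaf:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[0]:
                best = (score, feature, threshold)
    return best


def build(rows, labels, depth=0, max_depth=3, min_samples_leaf=1):
    majority = Counter(labels).most_common(1)[0][0]
    # Stopping conditions: pure node or depth limit reached
    if len(set(labels)) == 1 or depth >= max_depth:
        return {"leaf": majority}
    split = best_split(rows, labels, min_samples_leaf)
    if split is None:  # no split satisfies min_samples_leaf
        return {"leaf": majority}
    _, feature, threshold = split
    left_idx = [i for i, row in enumerate(rows) if row[feature] <= threshold]
    right_idx = [i for i, row in enumerate(rows) if row[feature] > threshold]
    return {
        "feature": feature,
        "threshold": threshold,
        # Recurse on each partition; earlier choices are never revisited
        "left": build([rows[i] for i in left_idx], [labels[i] for i in left_idx],
                      depth + 1, max_depth, min_samples_leaf),
        "right": build([rows[i] for i in right_idx], [labels[i] for i in right_idx],
                       depth + 1, max_depth, min_samples_leaf),
    }


def predict(node, row):
    """Walk from the root to a leaf following the stored thresholds."""
    while "leaf" not in node:
        branch = "left" if row[node["feature"]] <= node["threshold"] else "right"
        node = node[branch]
    return node["leaf"]


# Hypothetical toy data: [credit_score, income in thousands]
rows = [[600, 30], [640, 80], [680, 35], [690, 90], [730, 40], [760, 85]]
labels = ["reject", "reject", "reject", "approve", "approve", "approve"]

tree = build(rows, labels)
print(tree)
print(predict(tree, [700, 60]))
```

On this toy data a single split on credit score separates the classes, so the recursion stops after one level because both children are pure.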

Decision Tree Assumptions

Decision trees rest on a few working assumptions and properties:

- Data is split based on feature purity.

- Larger depth increases training accuracy but raises the risk of overfitting.

- Same feature can be used multiple times across different levels.

- Numerical and categorical values can be processed without scaling.

Trees do NOT assume:

- Linear relationships

- Normal distribution

- Equal variance

- Independent features (multicollinearity does not break a tree, although it can make feature importances harder to interpret)

How Do Decision Trees Use Entropy?

Entropy helps measure how mixed the classes are at a node.

- Pure node: Entropy = 0

- Mixed node: Entropy increases

Decision trees compute entropy before and after splitting.

Goal:

Choose the feature that produces the largest entropy reduction, i.e., highest information gain.

Example:

- If splitting by “Credit Score” reduces disorder the most → chosen as the root.

Structure and Components of Decision Trees

A decision tree resembles a flowchart:

                  Root Node
                 /         \
       Decision Node     Decision Node
          /     \           /     \
       Leaf     Leaf     Leaf     Leaf

Core Components:

- Root Node: Initial feature selected for split

- Decision Nodes: Intermediate splits

- Edges/Branches: Possible outcomes

- Leaves: Final predictions

Algorithms for Building Decision Trees

1. ID3

- Uses information gain

- Handles categorical data

2. C4.5

- Uses gain ratio

- Supports both continuous & categorical

- Handles missing values

3. CART

- Uses Gini index

- Builds binary trees

- Supports regression & classification

4. CHAID

- Uses chi-square test

- Multiway splits

5. QUEST

- Fast, unbiased splitting

6. Ensemble Variants

- Random Forest: Many trees + majority voting

- Gradient Boosted Trees (XGBoost, LightGBM): Sequentially trained trees

Computational Complexity, Optimization, and Parallelization

Time Complexity

Building a decision tree:

O(n⋅d⋅log n)

Where

- n = number of samples

- d = number of features

Prediction time:

O(depth)

Space Complexity

O(n⋅depth)

Optimization Techniques

- Pruning (cost-complexity pruning): Reduces tree size and prevents overfitting.

- Feature selection: Removes redundant features.

- Hyperparameter tuning

  - max_depth

  - min_samples_split

  - min_samples_leaf

  - max_leaf_nodes

- Handling imbalanced data

  - Class weights

  - SMOTE
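The hyperparameters listed above can be tuned together with a cross-validated grid search. The sketch below assumes scikit-learn and uses the bundled Iris dataset as a stand-in for real data; the candidate values in `param_grid` are arbitrary starting points, not recommendations.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier

# Assumption: Iris is used only as a small example dataset
X, y = load_iris(return_X_y=True)

# Candidate values are illustrative; adjust them to your data size
param_grid = {
    "max_depth": [2, 3, 5, None],
    "min_samples_split": [2, 10],
    "min_samples_leaf": [1, 5],
    "max_leaf_nodes": [None, 8],
}

search = GridSearchCV(
    # class_weight="balanced" reweights classes inversely to their frequency
    DecisionTreeClassifier(class_weight="balanced", random_state=0),
    param_grid,
    cv=5,
    scoring="accuracy",
)
search.fit(X, y)

print(search.best_params_)
print(round(search.best_score_, 3))
```

On imbalanced data, a metric such as F1 or balanced accuracy is usually a better choice for `scoring` than plain accuracy.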

Parallelization

- Random Forests parallelize naturally because each tree is built independently.

- XGBoost uses:

  - Histogram-based splits

  - Block compression

  - GPU acceleration

Parallelization improves:

- Speed

- Scalability

- Efficiency

Mathematical Foundation Behind Decision Trees

Splitting Criteria Mathematics

Handling Missing Values in Decision Trees

Advanced tree algorithms (like C4.5, XGBoost) can:

Assign Missing Values to Both Branches

Both directions are tried; best gain is used.

Learn “default directions”

E.g., XGBoost automatically learns which direction missing values should go.

Surrogate Splits

Used in CART:

- If primary split feature is missing,

- The model uses a correlated surrogate feature.

This allows trees to handle incomplete datasets effectively.
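The idea of trying both directions for missing values and keeping the better one can be sketched as follows. This is a deliberately simplified illustration: it scores each option with weighted Gini impurity, whereas XGBoost uses its own gradient-based gain, and the function names and income data are hypothetical.

```python
from collections import Counter


def gini(labels):
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def default_direction(values, labels, threshold):
    """Send missing values (None) left, then right, and keep the purer option."""
    known = [(v, y) for v, y in zip(values, labels) if v is not None]
    missing = [y for v, y in zip(values, labels) if v is None]
    left = [y for v, y in known if v <= threshold]
    right = [y for v, y in known if v > threshold]
    n = len(labels)

    def weighted(a, b):
        return (len(a) * gini(a) + len(b) * gini(b)) / n

    scores = {
        "left": weighted(left + missing, right),
        "right": weighted(left, right + missing),
    }
    return min(scores, key=scores.get), scores


# Hypothetical incomes (in thousands) with two missing entries
incomes = [20, 25, None, 60, 70, None]
labels = ["reject", "reject", "reject", "approve", "approve", "reject"]

direction, scores = default_direction(incomes, labels, threshold=40)
print(direction, scores)
```

Here the samples with missing income behave like the low-income group, so routing them left keeps both children pure.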

Dealing with Categorical and High-Cardinality Features

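Some decision tree algorithms (such as C4.5 and CHAID) support categorical variables directly through multiway splits. Others, including scikit-learn's CART implementation, expect categories to be encoded numerically first.

For high-cardinality categories (e.g., thousands of unique values):

- Algorithms like CatBoost use ordered target statistics

- XGBoost has traditionally relied on one-hot encoding, which becomes inefficient with many categories; newer releases also offer native categorical support

- LightGBM splits categorical features directly by grouping categories according to their gradient statistics; its Gradient-based One-Side Sampling (GOSS) and Exclusive Feature Bundling (EFB) techniques speed up training on large, sparse data in general rather than targeting categorical splits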

Regularization and Overfitting Control in Decision Trees

Decision trees easily overfit, so advanced regularization includes:

Pre-pruning

- Max depth

- Min samples split

- Min samples leaf

- Max leaf nodes

Post-pruning (Cost-Complexity Pruning)

Used in CART:

$$R_\alpha(T) = R(T) + \alpha\,|T|$$

Where:

- R(T) = misclassification error

- |T| = number of leaf nodes

- α = complexity penalty

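The subtree whose removal increases the error least per leaf removed (the "weakest link") is pruned first, and larger values of α prune more aggressively.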
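The effect of the complexity penalty can be checked with simple arithmetic. The sketch below assumes four hypothetical candidate subtrees with made-up error rates and leaf counts, and picks the one that minimizes the cost-complexity measure for several values of α.

```python
def cost_complexity(error, n_leaves, alpha):
    """R_alpha(T) = R(T) + alpha * |T|."""
    return error + alpha * n_leaves


# Hypothetical candidates: (name, misclassification error R(T), leaf count |T|)
candidates = [
    ("full tree", 0.02, 12),
    ("pruned once", 0.05, 6),
    ("pruned twice", 0.10, 3),
    ("root only", 0.40, 1),
]


def best_subtree(alpha):
    """Name of the candidate with the lowest cost-complexity for this alpha."""
    return min(candidates, key=lambda c: cost_complexity(c[1], c[2], alpha))[0]


for alpha in (0.0, 0.01, 0.03, 0.2):
    print(alpha, best_subtree(alpha))
```

With α = 0 the full tree wins because only the error counts; as α grows, smaller subtrees become preferable. In scikit-learn, the `ccp_alpha` parameter of `DecisionTreeClassifier` plays the role of α.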

L1/L2 Regularization in Boosted Trees

Boosted trees use advanced regularization terms:

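$$\text{Obj} = \text{Loss} + \Omega(f)$$

$$\Omega(f) = \gamma T + \frac{1}{2}\lambda \lVert w \rVert^2$$

Here $T$ is the number of leaves in the tree, $w$ is the vector of leaf weights, and $\gamma$ and $\lambda$ are penalty coefficients.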

XGBoost became famous because of this regularization.
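The penalty term can be evaluated by hand for a small tree. This sketch assumes that T counts the leaves and w holds the leaf weights, as in the usual XGBoost formulation; the function name, the leaf weights, and the γ and λ values are hypothetical.

```python
def regularization_penalty(leaf_weights, gamma, lam):
    """Omega(f) = gamma * T + 0.5 * lam * sum(w_j ** 2), with T = number of leaves."""
    n_leaves = len(leaf_weights)
    return gamma * n_leaves + 0.5 * lam * sum(w ** 2 for w in leaf_weights)


# Hypothetical leaf weights for a small and a larger tree
small_tree = [0.4, -0.3]
large_tree = [0.9, -0.8, 0.7, -0.6]

print(regularization_penalty(small_tree, gamma=1.0, lam=1.0))
print(regularization_penalty(large_tree, gamma=1.0, lam=1.0))
```

The larger tree is penalized twice: once for having more leaves (the γ term) and once for having larger leaf weights (the λ term).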

Interpretability and Explainability Techniques

Trees are interpretable by default, but further techniques help:

Partial Dependence Plots (PDPs)

Show how a tree’s prediction changes with a feature.

SHAP (SHapley Additive exPlanations)

Breaks down predictions of:

- Decision Trees

- Random Forest

- Gradient Boosted Trees

SHAP values for trees are computed in polynomial time (TreeSHAP algorithm).

ICE (Individual Conditional Expectation)

Shows how the prediction for each individual sample changes as one feature varies.

Best Practices in Decision Tree Modeling

- Prune the tree to reduce overfitting.

- Use ensemble methods like Random Forest.

- Perform cross-validation.

- Tune hyperparameters (max depth, min samples split).

Challenges and How to Overcome Them

- Overfitting: Use pruning and ensemble techniques.

- Data Imbalance: Use SMOTE or weighted classes.

- Scalability: Use optimized libraries like XGBoost.

Final Thoughts

Understanding decision trees is essential for any data scientist. Their simplicity, versatility, and applicability across domains make them a foundational machine learning algorithm.

From banking and healthcare to e-commerce and manufacturing, decision trees power intelligent decisions every day. By mastering their structure, types, and applications, you unlock one of the most potent tools in machine learning.

FAQs

What are the types of decision tree algorithms?

The main types of decision tree algorithms include Classification Trees (CART), Regression Trees, ID3, C4.5, C5.0, and CHAID, each designed to handle different kinds of prediction tasks and data structures.

What is a decision tree in deep learning?

A decision tree is a structured model that splits data into branches based on feature values, and while not a deep learning model itself, it’s often used alongside neural networks for interpretable, rule-based decision-making.

What is the depth of a decision tree?

The depth of a decision tree is the number of levels from the root to the deepest leaf node, representing how many consecutive splits the model makes before reaching a final decision.

What are the 7 types of decision-making?

The 7 types of decision-making are strategic, tactical, operational, programmed, non-programmed, individual, and group decision-making, each used for different levels and complexities of organizational choices.

What is a decision tree called?

A decision tree is also called a tree-based model, often referred to as a Classification or Regression Tree (CART) depending on whether it predicts categories or numeric values.