Artificial Intelligence (AI) and Machine Learning (ML) are revolutionizing industries worldwide. From self-driving cars to fraud detection systems, ML applications have become part of our daily lives.

Behind these applications are powerful machine learning frameworks—pre-built libraries, tools, and interfaces that make building and deploying ML models faster, easier, and more efficient.

Without these frameworks, developers would need to build algorithms from scratch, manage complex data pipelines, and optimize hardware manually.

Why Machine Learning Frameworks Matter in AI Development

Machine learning frameworks provide the foundation for AI projects.

Benefits of Frameworks:

- Accelerated Development: Pre-built algorithms save time.

- Scalability: Handle massive datasets efficiently.

- Hardware Optimization: Use GPUs, TPUs, and distributed systems effectively.

- Community Support: Active open-source ecosystems.

- Flexibility: Allow integration with multiple programming languages.

Core Features of Modern Machine Learning Frameworks

- Pre-built ML algorithms for regression, classification, clustering.

- Support for neural networks and deep learning.

- Visualization tools for better understanding of training models.

- Cross-platform compatibility (Windows, Linux, cloud).

- Integration with big data tools (Hadoop, Spark).

- Deployment-ready pipelines for production.

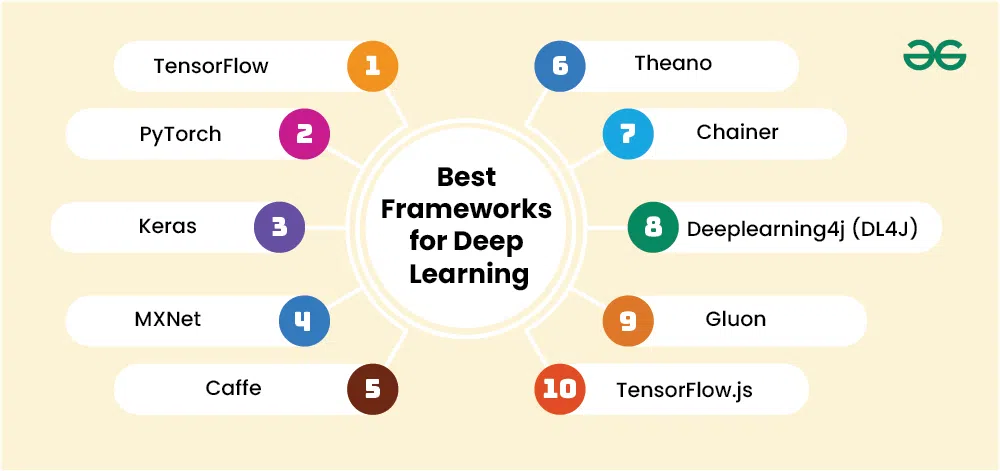

Top Machine Learning Frameworks Explained

Let’s dive into the most popular machine learning frameworks shaping the AI world.

TensorFlow

- Developed by Google Brain.

- Supports deep learning, reinforcement learning, and neural networks.

- Ideal for large-scale applications.

PyTorch

- Developed by Facebook’s AI Research lab (FAIR), now part of Meta AI.

- Known for flexibility and dynamic computation graphs.

- Preferred in research and prototyping.
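
To make "dynamic computation graphs" concrete, here is a minimal sketch. It assumes PyTorch is installed; the helper name `dynamic_power` is hypothetical and exists only for illustration. The point is that the graph is recorded while ordinary Python control flow runs, so its shape can change from call to call.

```python
import torch


def dynamic_power(x: torch.Tensor, steps: int) -> torch.Tensor:
    """Multiply x by itself `steps` times using an ordinary Python loop.

    The autograd graph is recorded as the loop runs, so its depth depends
    on the runtime value of `steps`.
    """
    result = torch.ones_like(x)
    for _ in range(steps):
        result = result * x
    return result


x = torch.tensor(2.0, requires_grad=True)
y = dynamic_power(x, steps=3)  # y = x**3
y.backward()                   # dy/dx = 3 * x**2
print(y.item(), x.grad.item())
```

Calling `dynamic_power` with a different `steps` value builds a different graph without any recompilation step, which is a large part of why this style suits prototyping.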

Scikit-learn

- Best for beginners.

- Contains pre-built ML algorithms for regression, classification, clustering.

- Lightweight and integrates well with NumPy and Pandas.
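
As a rough illustration of what "pre-built algorithms" means in practice, the sketch below trains a classifier on the Iris dataset bundled with Scikit-learn. The function name `train_iris_classifier`, the choice of logistic regression, and the 75/25 split are assumptions made for this example, not recommendations.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def train_iris_classifier(random_state: int = 0):
    """Train a scaled logistic-regression model; return it with its test accuracy."""
    X, y = load_iris(return_X_y=True)
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.25, random_state=random_state, stratify=y
    )
    # The pipeline applies scaling and the classifier as a single estimator.
    model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
    model.fit(X_train, y_train)
    return model, model.score(X_test, y_test)


model, accuracy = train_iris_classifier()
print(f"Held-out accuracy: {accuracy:.2f}")
```

Swapping in another estimator usually means changing one line, because Scikit-learn estimators share the same `fit`/`predict`/`score` interface.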

Keras

- High-level API, best known as TensorFlow’s `tf.keras`; Keras 3 can also run on JAX and PyTorch backends.

- Known for ease of use.

- Great for rapid prototyping.

Apache Spark MLlib

- Distributed machine learning framework.

- Excellent for big data analytics.

- Supports clustering, classification, and collaborative filtering.

XGBoost & LightGBM

- Gradient boosting frameworks for structured data.

- Fast to train and often highly accurate on tabular data.

- Frequently used in winning Kaggle solutions for tabular problems.

MXNet

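- Historically backed by Amazon Web Services (AWS); the Apache MXNet project was retired in 2023, so it is mostly relevant for existing systems.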

- Scales across multiple GPUs and servers.

- Great for cloud-native ML solutions.

H2O.ai

- Open-source framework focused on enterprise solutions.

- Provides AutoML capabilities.

- Used in financial risk assessment and healthcare analytics.

Real-Time Applications of Machine Learning Frameworks

- Healthcare: Predicting diseases (PyTorch, TensorFlow).

- Finance: Fraud detection (XGBoost, LightGBM).

- E-commerce: Personalized recommendations (Spark MLlib).

- Transportation: Self-driving cars (TensorFlow, PyTorch).

- Marketing: Customer segmentation (Scikit-learn).
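
As one example from the list above, customer segmentation with Scikit-learn can be sketched with k-means clustering. The customer data here is synthetic and the column meanings (annual spend, orders per year) are made up for illustration; `segment_customers` is a hypothetical helper name.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.preprocessing import StandardScaler


def segment_customers(features: np.ndarray, n_segments: int = 3, seed: int = 0) -> np.ndarray:
    """Scale the features and assign each customer to one of `n_segments` clusters."""
    scaled = StandardScaler().fit_transform(features)
    kmeans = KMeans(n_clusters=n_segments, n_init=10, random_state=seed)
    return kmeans.fit_predict(scaled)


# Synthetic customers: columns are (annual spend, orders per year).
rng = np.random.default_rng(0)
customers = np.vstack([
    rng.normal([200, 2], [20, 0.5], size=(50, 2)),     # occasional buyers
    rng.normal([1000, 12], [50, 1.0], size=(50, 2)),   # regular buyers
    rng.normal([5000, 40], [200, 2.0], size=(50, 2)),  # high-value buyers
])
labels = segment_customers(customers)
print(np.bincount(labels))
```

Scaling matters here because k-means relies on Euclidean distance, and unscaled spend values would otherwise dominate the order counts.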

Comparing Machine Learning Frameworks: Key Insights

| Framework | Best For | Industry Use Case |
| --- | --- | --- |
| TensorFlow | Deep learning | Google Translate, image recognition |
| PyTorch | Research & AI prototyping | Tesla autonomous cars |
| Scikit-learn | Beginners, quick ML tasks | Kaggle projects |
| Spark MLlib | Big Data ML | Airbnb recommendations |
| XGBoost/LightGBM | Structured data | Banking fraud detection |

How to Choose the Right Machine Learning Framework

- Project Size: TensorFlow for large projects, Scikit-learn for small ones.

- Complexity: PyTorch for research, Keras for quick prototyping.

- Data Type: Spark for big data, XGBoost for structured datasets.

- Team Skills: Choose based on team’s expertise.

Role of Machine Learning Frameworks in Deep Learning

Frameworks like TensorFlow and PyTorch make it possible to train deep neural networks for tasks like:

- Natural Language Processing (NLP).

- Computer Vision.

- Reinforcement Learning.

Advanced Architectures Supported by Machine Learning Frameworks

Modern ML frameworks aren’t just about training models; they enable specialized architectures for complex tasks:

- Transformers: Hugging Face Transformers builds on PyTorch and TensorFlow to provide pretrained NLP models (e.g., BERT, GPT).

- Graph Neural Networks (GNNs): Frameworks like DGL and PyTorch Geometric extend ML frameworks to analyze networks (e.g., social networks, protein structures).

- Federated Learning: TensorFlow Federated allows distributed training without moving sensitive data (critical for healthcare and finance).

- Reinforcement Learning Environments: OpenAI Gym (now maintained as Gymnasium) provides environments that work with TensorFlow and PyTorch agents for robotics and gaming AI.

Cloud-Native and Distributed Machine Learning Frameworks

Scaling ML to billions of parameters requires distributed training. Frameworks integrate with:

- Horovod (Uber): Works with TensorFlow & PyTorch for distributed deep learning.

- Ray: Supports distributed reinforcement learning and hyperparameter tuning.

- Kubeflow: Cloud-native ML on Kubernetes, used in enterprise AI pipelines.

- MLflow: Open-source platform for ML lifecycle management (tracking, deployment, reproducibility).
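
The core idea behind data-parallel training, which tools like Horovod implement with an all-reduce across machines, can be sketched in a single process. The example below simulates workers with NumPy only and does not use the Horovod or Ray APIs. The function names and the linear-regression task are assumptions chosen to keep the arithmetic easy to check.

```python
import numpy as np


def mse_gradient(w: np.ndarray, X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Gradient of mean squared error for a linear model y ≈ X @ w."""
    return 2.0 * X.T @ (X @ w - y) / len(y)


def allreduce_mean(grads: list) -> np.ndarray:
    """Stand-in for an all-reduce: average the per-worker gradients."""
    return np.mean(grads, axis=0)


def data_parallel_step(w, X, y, n_workers: int, lr: float = 0.1) -> np.ndarray:
    """One gradient step where each simulated worker sees an equal-sized shard."""
    X_shards = np.split(X, n_workers)
    y_shards = np.split(y, n_workers)
    local_grads = [mse_gradient(w, Xs, ys) for Xs, ys in zip(X_shards, y_shards)]
    return w - lr * allreduce_mean(local_grads)


rng = np.random.default_rng(0)
X = rng.normal(size=(64, 3))
true_w = np.array([1.0, -2.0, 0.5])
y = X @ true_w

w = np.zeros(3)
for _ in range(200):
    w = data_parallel_step(w, X, y, n_workers=4)
print(np.round(w, 3))
```

With equal-sized shards, the averaged gradient equals the full-batch gradient, so the result matches single-machine training while each worker touches only a quarter of the data.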

Hardware Acceleration and Framework Optimization

Machine learning frameworks deeply integrate with specialized hardware:

- GPUs (NVIDIA CUDA + cuDNN): Most frameworks are GPU-accelerated.

- TPUs (Google): TensorFlow integrates with TPUs for faster training.

- FPGAs (Intel): Used in low-latency inference for finance and edge devices.

- ONNX (Open Neural Network Exchange): Allows interoperability of models across frameworks and hardware.

Automated Machine Learning (AutoML) with Frameworks

Frameworks are moving towards self-optimizing models:

- H2O.ai AutoML: Builds multiple models automatically.

- AutoKeras (built on Keras/TensorFlow): Simplifies deep learning experiments.

- TPOT (Scikit-learn-based): Automates feature engineering + pipeline creation.

- Google Cloud AutoML: Enterprise AutoML powered by TensorFlow.
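
At its simplest, AutoML is a search over candidate models scored by cross-validation. The sketch below illustrates that idea with plain Scikit-learn; it is not the API of H2O, AutoKeras, or TPOT, which search far larger spaces. The candidate list and the helper name `select_best_model` are assumptions for this example.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier


def select_best_model(X, y, cv: int = 5):
    """Cross-validate each candidate and return (best_name, scores_by_name)."""
    candidates = {
        "logistic_regression": make_pipeline(
            StandardScaler(), LogisticRegression(max_iter=1000)
        ),
        "decision_tree": DecisionTreeClassifier(random_state=0),
        "random_forest": RandomForestClassifier(n_estimators=100, random_state=0),
    }
    scores = {
        name: cross_val_score(model, X, y, cv=cv).mean()
        for name, model in candidates.items()
    }
    best_name = max(scores, key=scores.get)
    return best_name, scores


X, y = load_breast_cancer(return_X_y=True)
best, scores = select_best_model(X, y)
print(best, {name: round(score, 3) for name, score in scores.items()})
```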

Monitoring, Explainability, and Responsible AI

As ML frameworks move into enterprise, monitoring and explainable AI (XAI) are essential.

- TensorFlow Model Analysis (TFMA): Helps evaluate fairness and performance.

- SHAP and LIME: Model-agnostic interpretability libraries that work with Scikit-learn, PyTorch, and other frameworks.

- IBM AI Fairness 360 (AIF360): A toolkit that integrates with frameworks to measure bias.

- Evidently AI: Monitors model drift in production.
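
To give a feel for model-agnostic interpretability, the sketch below uses Scikit-learn's built-in permutation importance as a simpler stand-in for SHAP or LIME. It shuffles one feature at a time and measures how much the score drops. The synthetic data is constructed so that only the first feature determines the label; `rank_features` is a hypothetical helper name.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance


def rank_features(seed: int = 0) -> np.ndarray:
    """Return mean permutation importances for a model trained on synthetic data."""
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(500, 3))
    y = (X[:, 0] > 0).astype(int)  # only feature 0 determines the label
    model = RandomForestClassifier(n_estimators=50, random_state=seed).fit(X, y)
    result = permutation_importance(model, X, y, n_repeats=5, random_state=seed)
    return result.importances_mean


importances = rank_features()
print(np.round(importances, 3))
```

Feature 0 should receive by far the largest importance, while the two noise features stay near zero.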

Hybrid and Multi-Framework Workflows

In practice, companies don’t use just one framework. Instead, they create hybrid workflows:

- Data preprocessing with Scikit-learn → Model training with TensorFlow/PyTorch → Deployment with ONNX/MLflow.

- Spark MLlib for large-scale preprocessing → XGBoost for tabular ML.

- PyTorch Lightning to scale training → export to ONNX or TorchScript → serving with TorchServe, ONNX Runtime, or Triton Inference Server.

Edge AI and Tiny Machine Learning Frameworks

Not all AI runs in the cloud. Edge frameworks make ML possible on IoT and mobile:

- TensorFlow Lite (renamed LiteRT in 2024): Optimized for mobile/edge deployment.

- PyTorch Mobile: Lightweight PyTorch runtime for smartphones, with ExecuTorch as its newer successor for on-device inference.

- TensorFlow Lite for Microcontrollers (TinyML): Runs ML models on microcontrollers.

- Edge Impulse: Cloud platform for building ML models for wearables, robotics.
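
One common technique behind "optimized for edge" is quantization: storing weights as 8-bit integers instead of 32-bit floats. The article does not name it, so treat the sketch below as an assumption about what such optimization typically involves. It is a NumPy illustration of affine int8 quantization, not the API of TensorFlow Lite or any other converter, and the function names are hypothetical.

```python
import numpy as np


def quantize_int8(weights: np.ndarray):
    """Map float32 weights to int8 with a single scale and zero point."""
    w_min, w_max = float(weights.min()), float(weights.max())
    scale = (w_max - w_min) / 255.0 or 1.0  # fall back to 1.0 for constant tensors
    zero_point = int(round(-128 - w_min / scale))
    q = np.clip(np.round(weights / scale) + zero_point, -128, 127).astype(np.int8)
    return q, scale, zero_point


def dequantize(q: np.ndarray, scale: float, zero_point: int) -> np.ndarray:
    """Recover approximate float32 weights from their int8 representation."""
    return (q.astype(np.float32) - zero_point) * scale


rng = np.random.default_rng(0)
weights = rng.normal(scale=0.1, size=1000).astype(np.float32)
q, scale, zero_point = quantize_int8(weights)
restored = dequantize(q, scale, zero_point)
print(q.dtype, weights.nbytes, "->", q.nbytes, "bytes")
print("max abs error:", float(np.abs(weights - restored).max()))
```

Storage drops to a quarter of the original size, at the cost of a reconstruction error on the order of one quantization step.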

Integration of Machine Learning Frameworks with Data Engineering Tools

Machine learning models are only as good as the pipelines that feed them. Modern ML frameworks increasingly integrate with data engineering ecosystems:

- Apache Spark + MLlib: Enables distributed preprocessing at scale.

- Delta Lake with TensorFlow/PyTorch: Ensures reliable, versioned data pipelines.

- Apache Kafka + ML frameworks: Real-time data ingestion for streaming ML models.

- Snowpark (Snowflake): Direct integration with frameworks like Scikit-learn for in-database ML.
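
Streaming ML, as in the Kafka item above, generally means updating a model one mini-batch at a time rather than retraining from scratch. The sketch below simulates that with Scikit-learn's `partial_fit`. A Python generator stands in for a real message stream, so no Kafka client is involved. The data and helper names are illustrative assumptions.

```python
import numpy as np
from sklearn.linear_model import SGDClassifier


def stream_batches(n_batches: int, batch_size: int, seed: int = 0):
    """Yield (X, y) mini-batches, standing in for messages read from a stream."""
    rng = np.random.default_rng(seed)
    for _ in range(n_batches):
        X = rng.normal(size=(batch_size, 2))
        y = (X[:, 0] + X[:, 1] > 0).astype(int)
        yield X, y


def train_on_stream(n_batches: int = 50, batch_size: int = 32) -> SGDClassifier:
    """Update a linear classifier one mini-batch at a time with partial_fit."""
    model = SGDClassifier(random_state=0)
    for X, y in stream_batches(n_batches, batch_size):
        # `classes` is required on the first call so every label is known up front.
        model.partial_fit(X, y, classes=np.array([0, 1]))
    return model


model = train_on_stream()
X_new, y_new = next(stream_batches(1, 1000, seed=123))
print(f"Accuracy on fresh data: {model.score(X_new, y_new):.2f}")
```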

Advanced Deployment of Machine Learning Frameworks

After training, frameworks need deployment strategies:

- TensorFlow Serving: Optimized for deploying TensorFlow models in production.

- TorchServe: A model-serving library built for PyTorch.

- ONNX Runtime: Ensures framework-agnostic deployment.

- Docker + Kubernetes (K8s): Most enterprises containerize ML models and orchestrate them with Kubernetes.

- MLOps pipelines: Integration with CI/CD tools like Jenkins or GitHub Actions.
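
Whatever serving stack is chosen, deployment starts with the same step: writing the trained model to an artifact that a separate process can load. The sketch below shows that round trip with `joblib` and a Scikit-learn model. The file name and helper functions are hypothetical, and a real serving system such as TensorFlow Serving or TorchServe uses its own model format.

```python
import tempfile
from pathlib import Path

import joblib
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression


def export_model(path: Path) -> LogisticRegression:
    """Train a small model and write it to `path` as a joblib artifact."""
    X, y = load_iris(return_X_y=True)
    model = LogisticRegression(max_iter=1000).fit(X, y)
    joblib.dump(model, path)
    return model


def predict_from_artifact(path: Path, rows: np.ndarray) -> np.ndarray:
    """Load the artifact, as a serving process would at startup, and predict."""
    model = joblib.load(path)
    return model.predict(rows)


with tempfile.TemporaryDirectory() as tmp:
    artifact = Path(tmp) / "model.joblib"
    trained = export_model(artifact)
    sample = np.array([[5.1, 3.5, 1.4, 0.2], [6.7, 3.0, 5.2, 2.3]])
    served = predict_from_artifact(artifact, sample)
    print(served, np.array_equal(served, trained.predict(sample)))
```

Because joblib files are pickle-based, they should only be loaded from trusted sources. That is one reason framework-neutral formats such as ONNX are popular for production.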

Machine Learning Frameworks in High-Performance Computing (HPC)

When enterprises need petabyte-scale training:

- MPI (Message Passing Interface): Frameworks extend distributed training to HPC clusters.

- Slurm workload manager: Used with TensorFlow and PyTorch for job scheduling on supercomputers.

- GPU clusters with InfiniBand networking: Allow ultra-fast model synchronization across nodes.

Security Concerns in Machine Learning Frameworks

Frameworks must address security issues such as:

- Adversarial Attacks: Tiny perturbations in inputs can fool models.

- Data Poisoning: Malicious data injected into training pipelines.

- Model Extraction Attacks: Hackers reverse-engineer models deployed with frameworks.

- Differential Privacy: Implemented in frameworks like TensorFlow Privacy.
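
To show how small an adversarial perturbation can be, the sketch below applies the fast gradient sign method (FGSM) to a hand-built logistic-regression model in NumPy. The weights, bias, and input are made-up values chosen so the arithmetic is easy to follow; no trained model or framework-specific attack library is used.

```python
import numpy as np


def sigmoid(z: float) -> float:
    """Logistic function."""
    return 1.0 / (1.0 + np.exp(-z))


def predict_proba(w: np.ndarray, b: float, x: np.ndarray) -> float:
    """Probability of class 1 under a logistic-regression model."""
    return float(sigmoid(w @ x + b))


def fgsm_perturb(w, b, x, y_true: int, epsilon: float) -> np.ndarray:
    """Shift each feature by epsilon in the direction that increases the log loss."""
    grad_x = (predict_proba(w, b, x) - y_true) * w  # d(log loss)/dx
    return x + epsilon * np.sign(grad_x)


# Hypothetical model with 1,000 small weights; values are illustrative only.
w = np.full(1000, 0.1)
b = -99.5
x = np.ones(1000)  # score = 100 - 99.5 = 0.5, so the model predicts class 1
x_adv = fgsm_perturb(w, b, x, y_true=1, epsilon=0.01)
print(predict_proba(w, b, x), predict_proba(w, b, x_adv))
```

Each feature moves by only 1% of its value, yet the predicted class flips because the small changes add up across 1,000 dimensions.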

Compliance and Regulations in ML Framework Usage

Framework adoption is influenced by regulations:

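- GDPR (Europe): Restricts solely automated decisions with significant effects and gives individuals a right to meaningful information about the logic involved, which is why interpretability tools are often paired with frameworks like Scikit-learn.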

- HIPAA (US Healthcare): ML frameworks used in healthcare must comply with privacy protections.

- EU AI Act: In force since August 2024, with obligations phasing in over the following years; it sets requirements for high-risk applications like credit scoring.

Cross-Language Interoperability of ML Frameworks

Modern frameworks allow cross-language compatibility:

- TensorFlow.js: Runs ML models in JavaScript directly in browsers.

- PyTorch with C++ (LibTorch): Allows embedding ML models in C++ applications.

- R + TensorFlow/PyTorch bindings: Enables data scientists in R environments to leverage deep learning.

- Java with Deeplearning4j: Enterprise Java-based framework.

Benchmarking and Performance Tuning of Frameworks

Different frameworks excel in different workloads:

- TensorFlow vs PyTorch: TensorFlow often preferred for production; PyTorch for research flexibility.

- XGBoost vs LightGBM: XGBoost is robust, LightGBM is faster for large-scale tabular data.

- Spark MLlib vs Scikit-learn: MLlib is better for distributed big data, Scikit-learn for prototyping.

- TPU-optimized TensorFlow vs GPU-optimized PyTorch: Depends on hardware availability.

Specialized Machine Learning Frameworks

Beyond mainstream frameworks, niche frameworks power specialized industries:

- H2O.ai: Enterprise AutoML platform.

- CNTK (Microsoft Cognitive Toolkit): Used in speech recognition research; no longer actively developed.

- Theano (historical): Pioneer in deep learning frameworks, now largely superseded by PyTorch/TensorFlow.

- TorchAudio and TorchVision: PyTorch extensions for specific modalities.

- FastAI: Built on top of PyTorch, optimized for rapid experimentation.

Real-Time Inference and Low-Latency Frameworks

Frameworks are evolving for real-time use cases like fraud detection, autonomous driving, and high-frequency trading:

- TensorRT (NVIDIA): Optimizes deep learning inference on GPUs.

- ONNX Runtime with Intel OpenVINO: Enables low-latency inference on CPUs.

- Triton Inference Server: A multi-framework model serving system.

Machine Learning Frameworks and Generative AI

The explosion of generative AI relies on ML frameworks:

- PyTorch: Dominates generative models like Stable Diffusion and GPT architectures.

- TensorFlow: Powers image generation tools and enterprise-level generative AI models.

- Hugging Face Transformers: Built on PyTorch/TensorFlow for LLMs and diffusion models.

- JAX + Flax: Gaining popularity for training large-scale foundation models.

The Future of Machine Learning Frameworks

The next generation of frameworks will be:

- Quantum-Ready: Frameworks like PennyLane integrate ML with quantum computing.

- Energy-Efficient: ML frameworks optimized for sustainable AI (Green AI).

- Multi-modal: Support for text, images, audio, video in unified models (e.g., OpenAI’s CLIP built with PyTorch).

- Foundation Model Friendly: Hugging Face + PyTorch powering LLMs with trillions of parameters.

- Generative AI Integration: Frameworks evolving to support Stable Diffusion, GANs, and foundation models at enterprise scale.

Future of Machine Learning Frameworks in AI and Data Science

- Integration with AutoML for automated model building.

- Cloud-native frameworks for enterprise deployment.

- Edge computing frameworks for IoT devices.

- Explainable AI (XAI) support for transparency.

- Quantum ML frameworks in the future.

Limitations and Challenges of Using Frameworks

- Steep learning curve for beginners.

- Hardware requirements (GPUs/TPUs).

- Compatibility issues with older systems.

- Lack of standardization across frameworks.

Real-World Case Studies

- Netflix: Uses ML frameworks for content recommendation.

- Uber: Uses PyTorch for route optimization.

- Amazon: Uses MXNet for Alexa voice recognition.

- Airbnb: Uses Spark MLlib for large-scale personalization.

Conclusion & Key Takeaways

The world of machine learning frameworks is vast and evolving. From TensorFlow and PyTorch powering cutting-edge AI research to XGBoost and Spark MLlib transforming business applications, these frameworks are the backbone of modern AI solutions.

- Choose your framework based on project needs, team expertise, and scalability requirements.

- Real-time examples show that frameworks are driving innovation in healthcare, finance, e-commerce, and beyond.

- The future promises AutoML, cloud-native ML, and explainable AI.

FAQs

What are machine learning frameworks?

Machine learning frameworks are software libraries and tools that provide pre-built components, algorithms, and infrastructure to simplify building, training, and deploying machine learning models efficiently.

What is the most popular ML framework?

TensorFlow and PyTorch are the two most widely used deep learning frameworks. TensorFlow has a long track record in large-scale production systems, while PyTorch has become the default in much of the research community and is increasingly used in production thanks to its flexibility and ease of use.

What are the 4 types of machine learning models?

The four types of machine learning models are Supervised Learning, Unsupervised Learning, Semi-Supervised Learning, and Reinforcement Learning, each designed to solve different kinds of data-driven problems.

What are the four basics of machine learning?

The four basics of machine learning are data collection & preparation, model selection, training & evaluation, and deployment & monitoring, which together form the foundation for building effective ML systems.

Which ML framework does OpenAI use?

OpenAI announced in 2020 that it was standardizing on PyTorch as its main machine learning framework, citing faster research iteration; it is used for training large-scale models like GPT.