Text data is everywhere—from research papers and news articles to customer reviews and social media conversations. But analyzing this text manually is nearly impossible when dealing with millions of documents. This is where topic modeling steps in.

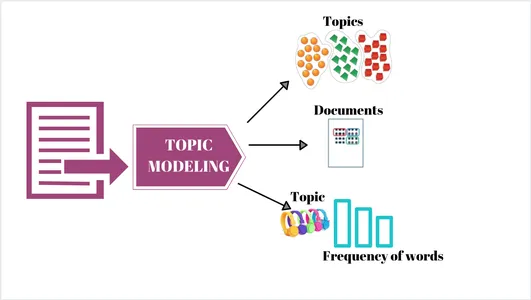

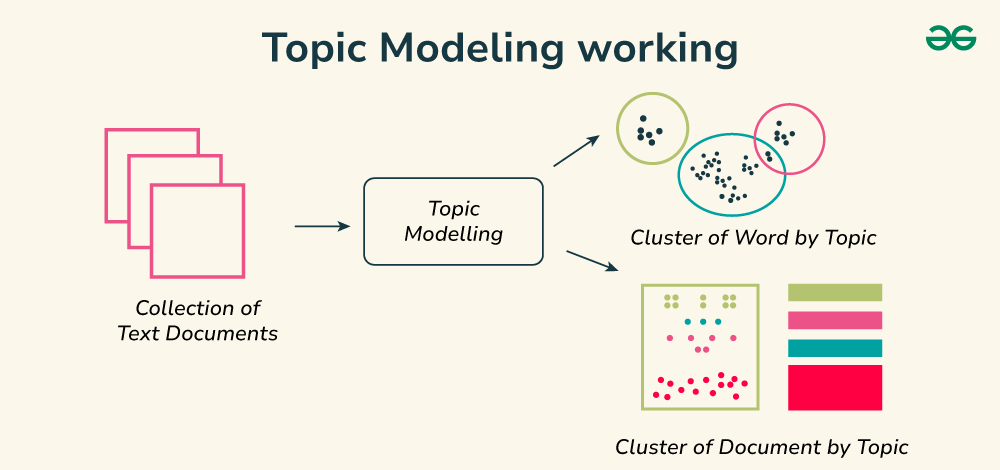

Topic modeling is an unsupervised machine learning technique used to identify hidden themes or patterns within large collections of documents. Instead of relying on someone to read every document, a topic model groups words that tend to occur together into topics.

This ability to extract meaningful insights from unstructured data makes topic modeling one of the most powerful tools in natural language processing (NLP).

Why Topic Modeling Matters in Today’s Data-Driven World

The digital age produces enormous amounts of text data every second. Businesses, governments, researchers, and social platforms need ways to organize this content efficiently.

Key reasons why topic modeling is important:

- Information Overload: Billions of documents are generated daily. Topic modeling helps reduce noise and categorize data effectively.

- Unsupervised Approach: Unlike supervised models, topic modeling does not require labeled training data.

- Actionable Insights: From customer sentiments to research trends, topic modeling makes hidden information visible.

- Scalability: Algorithms such as online LDA can process millions of documents, far more than any team could read manually.

For example, an e-commerce company such as Amazon can apply topic modeling to product reviews to identify recurring customer complaints and prioritize fixes. Similarly, researchers apply it to thousands of publications to discover emerging research trends.

Core Concepts Behind Topic Modeling

Before diving into algorithms, it’s important to understand the building blocks of topic modeling:

- Corpus: A collection of documents.

- Document: An individual text, such as a paragraph, review, or article.

- Vocabulary: The set of all unique words in the corpus.

- Topic: A collection of words that frequently occur together, representing a hidden theme.

Example: If you analyze a corpus of news articles, topic modeling might reveal clusters like:

- Topic 1: “stock, market, trading, investment”

- Topic 2: “election, vote, candidate, policy”

- Topic 3: “disease, vaccine, hospital, health”

Different Types of Topic Modeling Techniques

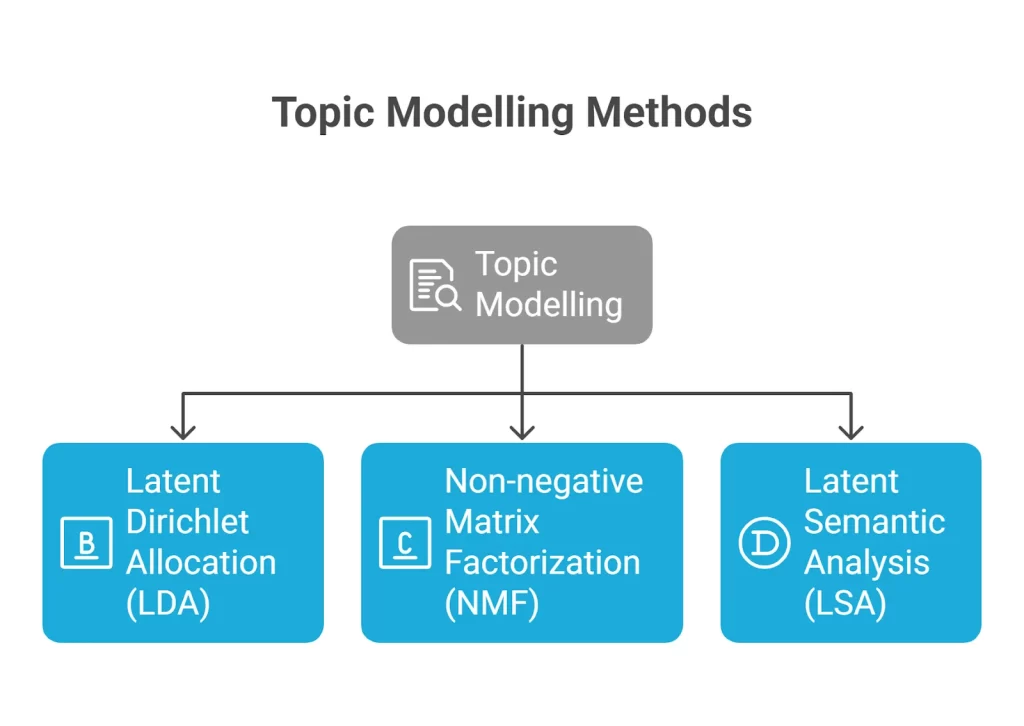

Latent Dirichlet Allocation (LDA)

LDA is the most widely used method. It is a generative probabilistic model that assumes:

- Each document is made of multiple topics.

- Each topic is a probability distribution over the vocabulary.

For instance, a news article might be 70% politics and 30% economics.
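To make those two assumptions concrete, here is a minimal collapsed Gibbs sampler for LDA in plain Python. It is a teaching sketch, assuming pre-tokenized documents and symmetric priors `alpha` (document-topic) and `beta` (topic-word); the function name `lda_gibbs` and the toy corpus are illustrative. Libraries such as Gensim's `LdaModel` and scikit-learn's `LatentDirichletAllocation` fit LDA with variational inference instead and are far faster on real corpora.

```python
import random

def lda_gibbs(docs, num_topics, alpha=0.1, beta=0.01, iterations=200, seed=0):
    """Fit LDA to tokenized documents with collapsed Gibbs sampling.

    Returns (doc_topic, topic_word, vocab): per-document topic proportions,
    per-topic word distributions, and the sorted vocabulary.
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    V, K = len(vocab), num_topics
    topics = range(K)

    # Count tables: document-topic, topic-word, and tokens per topic.
    ndk = [[0] * K for _ in docs]
    nkw = [[0] * V for _ in topics]
    nk = [0] * K

    # Start with a random topic for every token.
    z = [[rng.randrange(K) for _ in doc] for doc in docs]
    for d, doc in enumerate(docs):
        for w, k in zip(doc, z[d]):
            ndk[d][k] += 1
            nkw[k][word_id[w]] += 1
            nk[k] += 1

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v, k = word_id[w], z[d][i]
                # Take the token out of the counts before resampling its topic.
                ndk[d][k] -= 1
                nkw[k][v] -= 1
                nk[k] -= 1
                # p(z = t | rest) is proportional to (n_dt + alpha) * (n_tw + beta) / (n_t + V * beta)
                weights = [(ndk[d][t] + alpha) * (nkw[t][v] + beta) / (nk[t] + V * beta) for t in topics]
                k = rng.choices(topics, weights=weights)[0]
                z[d][i] = k
                ndk[d][k] += 1
                nkw[k][v] += 1
                nk[k] += 1

    doc_topic = [[(ndk[d][k] + alpha) / (len(doc) + K * alpha) for k in topics] for d, doc in enumerate(docs)]
    topic_word = [[(nkw[k][v] + beta) / (nk[k] + V * beta) for v in range(V)] for k in topics]
    return doc_topic, topic_word, vocab


docs = [
    "stock market trading investment stock".split(),
    "election vote candidate policy vote".split(),
    "market investment stock election policy".split(),
]
doc_topic, topic_word, vocab = lda_gibbs(docs, num_topics=2)
for k, dist in enumerate(topic_word):
    top = sorted(range(len(vocab)), key=lambda v: dist[v], reverse=True)[:3]
    print(f"Topic {k}:", [vocab[v] for v in top])
print("Third document's topic mix:", [round(p, 2) for p in doc_topic[2]])
```

Each row of `doc_topic` is a mixture like the "70% politics, 30% economics" example above; each row of `topic_word` is one topic's distribution over the vocabulary.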

Non-negative Matrix Factorization (NMF)

NMF factorizes the non-negative document-term matrix V into two smaller non-negative matrices, W (document-topic weights) and H (topic-word weights), so that V ≈ WH. Combined with TF-IDF weighting, it often works well on short texts.
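A minimal sketch of V ≈ WH in plain Python, using Lee and Seung's multiplicative update rules for squared (Frobenius) error on a toy count matrix. The helper names and the data are illustrative; scikit-learn's `NMF` offers these updates through `solver="mu"` alongside its default coordinate-descent solver and is the practical choice for real data.

```python
import random

def matmul(A, B):
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def nmf(V, k, iterations=200, seed=0, eps=1e-9):
    """Factor a non-negative matrix V (docs x terms) as V ~= W H.

    W is docs x k (document-topic weights), H is k x terms (topic-word weights).
    Uses multiplicative updates, which keep every entry non-negative.
    """
    rng = random.Random(seed)
    n, m = len(V), len(V[0])
    W = [[rng.random() for _ in range(k)] for _ in range(n)]
    H = [[rng.random() for _ in range(m)] for _ in range(k)]
    for _ in range(iterations):
        # H <- H * (W^T V) / (W^T W H), elementwise; eps avoids division by zero.
        Wt = transpose(W)
        num, den = matmul(Wt, V), matmul(matmul(Wt, W), H)
        H = [[H[i][j] * num[i][j] / (den[i][j] + eps) for j in range(m)] for i in range(k)]
        # W <- W * (V H^T) / (W H H^T), elementwise.
        Ht = transpose(H)
        num, den = matmul(V, Ht), matmul(W, matmul(H, Ht))
        W = [[W[i][j] * num[i][j] / (den[i][j] + eps) for j in range(k)] for i in range(n)]
    return W, H

def reconstruction_error(V, W, H):
    WH = matmul(W, H)
    return sum((v - r) ** 2 for vr, rr in zip(V, WH) for v, r in zip(vr, rr)) ** 0.5


terms = ["stock", "market", "trade", "vote", "election", "policy"]
V = [  # toy term counts, one row per document
    [3, 2, 1, 0, 0, 0],
    [2, 3, 2, 0, 0, 1],
    [0, 0, 0, 3, 2, 2],
    [0, 1, 0, 2, 3, 1],
]
W, H = nmf(V, k=2)
for t, row in enumerate(H):
    top = sorted(range(len(terms)), key=lambda j: row[j], reverse=True)[:3]
    print(f"Topic {t}:", [terms[j] for j in top])
```

Because nothing can be subtracted, each topic in H is an additive "part" of the documents, which is why NMF topics are often easy to read.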

Latent Semantic Analysis (LSA)

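LSA applies truncated singular value decomposition (SVD) to the document-term matrix, projecting documents and words into a low-dimensional latent space where words used in similar contexts end up close together. It is well suited to information retrieval and search engines, where it is known as latent semantic indexing (LSI).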
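The sketch below shows the core of LSA: a truncated SVD, computed here by power iteration with deflation so it fits in plain Python. The function name and the toy matrix are illustrative assumptions; in practice you would use scikit-learn's `TruncatedSVD` or Gensim's `LsiModel`.

```python
import math
import random

def truncated_svd(A, k, iterations=100, seed=0):
    """Top-k singular values/vectors of A (docs x terms) by power iteration with deflation.

    Returns (sigmas, term_vectors, doc_vectors): term_vectors are the right singular
    vectors (one weight per term), doc_vectors the left ones (one weight per document).
    """
    rng = random.Random(seed)
    n, m = len(A), len(A[0])
    # Gram matrix G = A^T A; its eigenvectors are A's right singular vectors.
    G = [[sum(A[r][i] * A[r][j] for r in range(n)) for j in range(m)] for i in range(m)]
    sigmas, term_vectors, doc_vectors = [], [], []
    for _ in range(k):
        v = [rng.random() for _ in range(m)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(iterations):
            w = [sum(G[i][j] * v[j] for j in range(m)) for i in range(m)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0:  # nothing left to extract
                break
            v = [x / norm for x in w]
        eigenvalue = sum(v[i] * G[i][j] * v[j] for i in range(m) for j in range(m))
        sigma = math.sqrt(max(eigenvalue, 0.0))
        # Left singular vector: u = A v / sigma.
        u = [sum(A[r][j] * v[j] for j in range(m)) / sigma if sigma else 0.0 for r in range(n)]
        sigmas.append(sigma)
        term_vectors.append(v)
        doc_vectors.append(u)
        # Deflate so the next pass finds the next-largest component.
        G = [[G[i][j] - eigenvalue * v[i] * v[j] for j in range(m)] for i in range(m)]
    return sigmas, term_vectors, doc_vectors


terms = ["stock", "market", "vote", "election"]
A = [  # toy term counts, one row per document
    [2, 1, 0, 0],
    [4, 2, 0, 0],
    [0, 0, 1, 3],
    [0, 0, 2, 6],
]
sigmas, term_vecs, doc_vecs = truncated_svd(A, k=2)
for s, vec in zip(sigmas, term_vecs):
    strongest = max(range(len(terms)), key=lambda j: abs(vec[j]))
    print(f"sigma={s:.3f}, strongest term: {terms[strongest]}")
```

Each retained component is a latent "concept"; a document's coordinates in `doc_vecs` say how strongly it expresses each one, which is what LSI-based search compares.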

Neural Topic Modeling

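Neural topic models replace or augment classical probabilistic inference with deep learning. The Neural Variational Document Model (NVDM) uses a variational autoencoder over bag-of-words vectors, while BERTopic clusters transformer embeddings (e.g., from BERT) to extract more context-rich topics.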

Key Steps in Building a Topic Modeling Pipeline

To perform topic modeling effectively, follow these steps:

- Data Collection: Gather a corpus (tweets, reviews, articles).

- Preprocessing:

  - Tokenization

  - Remove stopwords

  - Lemmatization/stemming

  - Vectorization (TF-IDF, Bag-of-Words)

- Model Training: Apply algorithms like LDA or NMF.

- Evaluation: Use metrics such as coherence score and perplexity.

- Visualization: Use tools like pyLDAvis to interpret results.
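The preprocessing and vectorization steps above can be sketched in a few lines of standard-library Python. This is a minimal illustration, assuming a tiny hard-coded stopword list and a hypothetical `crude_lemma` helper that only strips plural "s"; real pipelines would use a proper lemmatizer (e.g., from NLTK or spaCy) and a domain-specific stopword list. The idf formula matches scikit-learn's `TfidfVectorizer` default (`smooth_idf=True`), without the row normalization that class also applies.

```python
import math
import re
from collections import Counter

# Tiny illustrative stopword list; real pipelines use a fuller, often domain-specific one.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "for", "on"}

def tokenize(text):
    # Lowercase and keep runs of letters as tokens.
    return re.findall(r"[a-z]+", text.lower())

def crude_lemma(token):
    # Hypothetical stand-in for a real lemmatizer: strips a plural "s" only.
    if len(token) > 3 and token.endswith("s") and not token.endswith("ss"):
        return token[:-1]
    return token

def preprocess(text):
    return [crude_lemma(t) for t in tokenize(text) if t not in STOPWORDS]

def bag_of_words(docs):
    vocab = sorted({t for doc in docs for t in doc})
    counts = [Counter(doc) for doc in docs]
    return vocab, [[c[w] for w in vocab] for c in counts]

def tf_idf(docs):
    vocab, bow = bag_of_words(docs)
    n = len(docs)
    df = [sum(1 for row in bow if row[j] > 0) for j in range(len(vocab))]
    # Smoothed idf: ln((1 + n) / (1 + df)) + 1, so rarer terms get larger weights.
    idf = [math.log((1 + n) / (1 + d)) + 1 for d in df]
    return vocab, [[tf * w for tf, w in zip(row, idf)] for row in bow]


raw = ["The stock markets rallied", "Votes and the election", "Stock trading in the market"]
docs = [preprocess(text) for text in raw]
vocab, weights = tf_idf(docs)
print(docs)
print(vocab)
print([round(x, 2) for x in weights[0]])
```

The bag-of-words counts are what LDA consumes; the TF-IDF weights are the usual input for NMF and LSA.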

Real-World Examples of Topic Modeling in Action

- Customer Feedback: E-commerce platforms analyze reviews to discover top customer pain points.

- Healthcare: Topic modeling on patient records helps identify emerging disease patterns.

- Legal Tech: Law firms use it to scan thousands of legal documents for recurring arguments.

- Social Media Monitoring: Brands monitor Twitter conversations to identify trending themes.

Case Example: During the COVID-19 pandemic, topic modeling was used on social media data to analyze public sentiment about vaccines, lockdowns, and healthcare systems.

Tools and Libraries for Topic Modeling

- Python Libraries:

  - Gensim (LDA, LSI)

  - Scikit-learn (NMF, LSA)

  - BERTopic (neural embeddings)

- Visualization Tools:

  - pyLDAvis

  - t-SNE plots

- Cloud Platforms:

  - AWS Comprehend

  - Google Cloud NLP

  - Azure Cognitive Services

Advanced Topic Modeling Approaches

As datasets grow, advanced approaches go beyond traditional LDA:

- Dynamic Topic Modeling: Tracks how topics evolve over time (e.g., tracking AI research trends).

- Hierarchical Dirichlet Process (HDP): Automatically decides the number of topics.

- Contextual Topic Modeling: Combines embeddings from BERT with topic clustering.

- Interactive Topic Modeling: Human-in-the-loop refinement for better interpretability.

Evaluating Topic Modeling Beyond Coherence Scores

Most beginners rely on coherence score or perplexity to measure the quality of topics. But advanced practitioners go beyond these metrics:

- Human Judgment Studies: Asking domain experts to label or validate discovered topics.

- Word Intrusion & Topic Intrusion Tests: Introduced by Chang et al. (2009). In word intrusion, humans try to spot an “odd” word inserted among a topic's top words; in topic intrusion, they try to spot an unrelated topic inserted among a document's top topics.

- Stability Testing: Running the topic model multiple times with different random seeds or data subsets to check whether the same topics reappear.

- Downstream Task Performance: Evaluating how well topics improve recommendation, classification, or summarization tasks.

Key Insight: A topic model is only “good” if it supports the end goal, whether that’s business intelligence, discovery, or prediction.
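Stability testing can be as simple as comparing the top words of topics from two runs. The sketch below scores each topic in one run by its best Jaccard match in the other and averages the results. The two runs are hypothetical hard-coded lists standing in for the output of any topic model fit with different seeds; a stricter variant matches topics one-to-one (e.g., with the Hungarian algorithm) instead of allowing several topics to share a best match.

```python
def jaccard(a, b):
    a, b = set(a), set(b)
    return len(a & b) / len(a | b) if a | b else 1.0

def topic_stability(run_a, run_b):
    """Average best-match Jaccard similarity between the top-word lists of two runs.

    run_a and run_b are lists of topics, each topic a list of its top words.
    1.0 means every topic in run_a has an identical counterpart in run_b.
    """
    scores = [max(jaccard(t, u) for u in run_b) for t in run_a]
    return sum(scores) / len(scores)


run_seed_0 = [["stock", "market", "trading"], ["election", "vote", "policy"]]
run_seed_1 = [["vote", "election", "candidate"], ["market", "stock", "investment"]]
print(topic_stability(run_seed_0, run_seed_1))
```

Low scores across seeds suggest the model is fitting noise, or that the number of topics is poorly chosen.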

Combining Topic Modeling with Word Embeddings

Traditional models like LDA treat words as discrete tokens, ignoring semantic similarity. However, combining word embeddings (Word2Vec, GloVe, BERT) with topic modeling provides richer insights.

- Embedding-based Clustering: Instead of using Bag-of-Words, you cluster dense word embeddings to form semantically coherent topics.

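- BERTopic: A cutting-edge method that embeds documents with a transformer model (e.g., BERT-based sentence embeddings), reduces dimensionality with UMAP, clusters with HDBSCAN, and then describes each cluster with class-based TF-IDF (c-TF-IDF) to build contextual topics.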

- Contextualized Topic Models (CTM): Use BERT or RoBERTa embeddings alongside probabilistic topic modeling for highly interpretable results.

Real-world example: Customer support logs can be clustered using BERTopic to separate nuanced themes like “payment failure” and “refund process,” which classical LDA might merge.
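The clustering idea behind embedding-based topics can be sketched with spherical (cosine) k-means. The 3-dimensional "embeddings" below are made-up vectors standing in for real sentence embeddings, and k-means is swapped in for HDBSCAN only because it fits in a few lines of plain Python; BERTopic itself uses UMAP + HDBSCAN as described above.

```python
import math
import random

def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n else v

def cosine_kmeans(vectors, k, iterations=20, seed=0):
    """Spherical k-means: assign each unit vector to the centroid with the highest cosine similarity."""
    rng = random.Random(seed)
    X = [normalize(v) for v in vectors]
    centroids = [list(c) for c in rng.sample(X, k)]
    labels = [0] * len(X)
    for _ in range(iterations):
        labels = [max(range(k), key=lambda c: sum(a * b for a, b in zip(x, centroids[c]))) for x in X]
        for c in range(k):
            members = [x for x, lab in zip(X, labels) if lab == c]
            if members:  # keep the old centroid if a cluster empties out
                centroids[c] = normalize([sum(col) / len(members) for col in zip(*members)])
    return labels


texts = ["card payment declined", "payment failed at checkout", "how do I get a refund", "refund still not processed"]
embeddings = [  # hypothetical vectors, not real model output
    [0.9, 0.1, 0.0],
    [0.85, 0.2, 0.05],
    [0.1, 0.9, 0.1],
    [0.05, 0.95, 0.0],
]
labels = cosine_kmeans(embeddings, k=2)
for text, label in zip(texts, labels):
    print(label, text)
```

Because similarity is measured in embedding space, “declined” and “failed” land together even though the documents share few exact words, which is the advantage over bag-of-words models.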

Dynamic and Temporal Topic Modeling

Topics are not static — they evolve with time. Dynamic topic modeling helps track these changes.

- Dynamic LDA (Blei & Lafferty, 2006): Models how topic distributions change over sequential time windows.

- Applications:

  - Tracking political discourse across elections.

  - Analyzing scientific literature trends (e.g., rise of deep learning in AI papers post-2012).

  - Monitoring consumer behavior before and after product launches.

Case Study: During the COVID-19 pandemic, dynamic topic modeling on Twitter showed how conversations shifted from “lockdowns” → “vaccines” → “variants.”
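A much simpler approximation than dynamic LDA is to fit one static model and then average each topic's share of the documents in every time window. Unlike Blei and Lafferty's model, this does not let a topic's word distribution drift; it only tracks how prevalent each fixed topic is. The function name and the proportions below are made up for illustration.

```python
from collections import defaultdict

def topic_prevalence_by_period(periods, doc_topic):
    """Average each topic's share over the documents in each time period.

    periods: one sortable period label per document (e.g., "2023-01").
    doc_topic: one list of topic proportions per document, from any fitted topic model.
    """
    num_topics = len(doc_topic[0])
    sums = defaultdict(lambda: [0.0] * num_topics)
    counts = defaultdict(int)
    for period, theta in zip(periods, doc_topic):
        for k, p in enumerate(theta):
            sums[period][k] += p
        counts[period] += 1
    return {p: [s / counts[p] for s in sums[p]] for p in sorted(sums)}


periods = ["2023-01", "2023-01", "2023-02", "2023-02"]
doc_topic = [[0.8, 0.2], [0.6, 0.4], [0.3, 0.7], [0.1, 0.9]]  # made-up proportions
for period, shares in topic_prevalence_by_period(periods, doc_topic).items():
    print(period, [round(s, 2) for s in shares])
```

The resulting period-by-topic table is also exactly the input a temporal heatmap needs.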

Hierarchical Topic Modeling for Granularity

Sometimes, a single topic is too broad. Hierarchical topic modeling allows drilling down into subtopics.

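- Hierarchical Dirichlet Process (HDP): A nonparametric extension of LDA that infers the number of topics from the data instead of fixing it in advance. Its hierarchy lies in how topics are shared across documents, not in a topic tree.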

- hLDA (Hierarchical LDA): Produces a tree-like structure where topics split into finer-grained subtopics.

Example:

- Topic: “Technology”

  - Subtopic 1: “Artificial Intelligence”

    - Sub-subtopic: “Neural Networks”

    - Sub-subtopic: “Reinforcement Learning”

  - Subtopic 2: “Cybersecurity”

This is especially valuable in knowledge management systems and digital libraries.

Interactive and Human-in-the-Loop Topic Modeling

Purely unsupervised models often produce ambiguous topics. Human feedback can refine results.

- Seeded LDA: Users provide seed words (e.g., “economy, trade” for finance topics), guiding the model.

- Topic-in-the-Loop Systems: Allow users to merge, split, or rename topics iteratively.

- Applications: Academic literature review tools, enterprise text-mining platforms.

This is crucial when working with domain-specific corpora like medicine or law, where domain experts must validate interpretations.
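One simple way to approximate seeding (not the exact Seeded LDA model from the research literature) is to give each seed word a larger prior weight in its intended topic. The sketch builds such a topic-word prior matrix; the function name, `base`, and `boost` values are illustrative assumptions. Gensim's `LdaModel`, for example, accepts a matrix of shape (num_topics, vocabulary size) through its `eta` parameter, typically as a NumPy array.

```python
def seeded_eta(vocab, num_topics, seeds, base=0.01, boost=1.0):
    """Topic-word prior matrix: `base` everywhere, plus `boost` for each topic's seed words.

    seeds maps a topic index to the list of seed words for that topic.
    """
    index = {w: i for i, w in enumerate(vocab)}
    eta = [[base] * len(vocab) for _ in range(num_topics)]
    for topic, words in seeds.items():
        for w in words:
            if w in index:  # skip seed words that never appear in the corpus
                eta[topic][index[w]] += boost
    return eta


vocab = ["economy", "trade", "vote", "election", "tariff"]
eta = seeded_eta(vocab, num_topics=2, seeds={0: ["economy", "trade"], 1: ["vote", "election"]})
for row in eta:
    print(row)
```

The prior only nudges the model: with enough contrary evidence in the data, a seed word can still end up in a different topic, which keeps the human guidance from overriding the corpus.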

Visualization Beyond pyLDAvis

While pyLDAvis is popular, other visualization methods help when models and corpora grow larger:

- t-SNE or UMAP Visualizations: Map document embeddings to 2D for topic clusters.

- Topic Word Clouds: Display each topic's top words, sized by their weight in that topic.

- Temporal Heatmaps: Track how topic importance changes over time.

- Graph-Based Topic Maps: Represent topics as nodes connected by shared vocabulary.

Business Example: A retail company might visualize seasonal topic trends (e.g., “holiday shopping” peaking in December, “back-to-school” in August).

Scalability and Big Data Topic Modeling

Standard batch LDA passes over the entire corpus on every iteration, which becomes slow with millions of documents. To scale:

- Online LDA: Processes data incrementally (ideal for streaming data like tweets).

- Parallelized Implementations: Gensim and Spark MLlib allow distributed topic modeling across clusters.

- GPU-Accelerated Models: Neural topic models benefit from deep learning frameworks like PyTorch and TensorFlow.
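The online LDA update (Hoffman, Blei and Bach, 2010) shows why it scales: it never sweeps the full corpus. For each mini-batch t of S documents drawn from a corpus of D documents, it runs a local E-step to get per-token topic responsibilities φ, forms an estimate of the topic-word parameters λ as if the whole corpus looked like that batch, and blends it in with a decaying step size:

$$
\hat{\lambda}_{kw} = \eta + \frac{D}{S} \sum_{d \in \text{batch}_t} n_{dw}\, \phi_{dwk},
\qquad
\lambda_{kw} \leftarrow (1 - \rho_t)\, \lambda_{kw} + \rho_t\, \hat{\lambda}_{kw},
\qquad
\rho_t = (\tau_0 + t)^{-\kappa}
$$

Here $n_{dw}$ is the count of word w in document d and η is the topic-word Dirichlet prior. A decay κ ∈ (0.5, 1] guarantees convergence, and an offset τ₀ ≥ 0 damps the earliest updates. Gensim's `LdaModel` exposes κ and τ₀ as `decay` and `offset`; scikit-learn's `LatentDirichletAllocation` (with `learning_method="online"`) calls them `learning_decay` and `learning_offset`.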

Case Study: News aggregators like Google News must group millions of daily articles into thematic clusters, the kind of workload scalable topic modeling and clustering are built for.

Applications in Cutting-Edge Fields

a) Healthcare and Medicine

- Identifying emerging disease trends in patient records.

- Mining medical research for drug discovery.

b) Finance

- Detecting hidden themes in earnings call transcripts.

- Uncovering investor sentiment shifts from financial news.

c) Cybersecurity

- Topic modeling on cybersecurity incident reports helps detect common attack vectors.

d) Legal Domain

- Analyzing case law documents to reveal recurring arguments.

e) Education

- Mining student feedback surveys to identify key learning challenges.

Ethical Considerations in Topic Modeling

With great power comes responsibility:

- Bias Amplification: Preprocessing decisions (like stopword lists) can skew results.

- Interpretability Issues: Mislabeling topics may mislead decision-making.

- Data Privacy: Sensitive domains (like healthcare records) require anonymization.

- Over-automation Risks: Blindly trusting models without human oversight can lead to flawed insights.

The Future: Topic Modeling Meets Large Language Models (LLMs)

The next frontier is combining traditional topic modeling with large language models such as GPT and with transformer encoders such as BERT.

- Prompt-based Topic Discovery: Using LLMs to summarize or cluster documents into themes.

- Hybrid Models: LLMs generate embeddings → clustering methods (e.g., k-means, HDBSCAN) form topics.

- Conversational Topic Exploration: Imagine querying a chatbot: “What were the top 3 emerging research themes in AI papers last year?” → powered by topic modeling + LLM.

This future trend will make topic modeling interactive, scalable, and deeply contextual.

Key Takeaways (Advanced Layer)

- Move beyond coherence scores: use stability, human judgment, and downstream evaluation.

- Embeddings + topic modeling (BERTopic, CTM) create more meaningful clusters.

- Dynamic & hierarchical models capture how topics evolve and break into subtopics.

- Human-in-the-loop systems ensure domain relevance.

- Big data scaling + GPU acceleration make topic modeling feasible for enterprise-scale corpora.

- The future lies in merging topic modeling with LLMs for smarter discovery systems.

Advantages and Limitations of Topic Modeling

Advantages

- Unsupervised learning (no labels required).

- Works on large text datasets.

- Helps with text summarization, trend detection, and classification.

Limitations

- Topics may be difficult to interpret.

- Requires careful preprocessing.

- Results can vary with parameter tuning.

- Short texts (like tweets) pose challenges without embeddings.

Topic Modeling in Business Applications

Businesses leverage topic modeling for multiple purposes:

- Customer Support: Identifying common complaint categories.

- Market Research: Understanding competitor strategies.

- Brand Reputation Management: Monitoring reviews and social media.

- Content Recommendation: Suggesting articles or products based on topics.

Example: A streaming service such as Netflix could apply topic modeling to show descriptions to group titles by themes finer than simple genres, like “crime with psychological twist” or “romantic comedy with workplace setting.”

Topic Modeling in AI and Machine Learning

Topic modeling plays a crucial role in:

- Document clustering

- Feature extraction for ML pipelines

- Sentiment analysis (combined with classification)

- Knowledge discovery in large databases

In chatbots, topic modeling helps identify user intent by clustering recurring patterns in conversations.

Comparison: Topic Modeling vs Text Classification

| Feature | Topic Modeling | Text Classification |
| --- | --- | --- |
| Approach | Unsupervised | Supervised |
| Data Requirement | No labels needed | Requires labeled dataset |
| Output | Hidden topics | Predefined categories |
| Example | Discovering new themes in research | Categorizing emails as spam/ham |

Best Practices for Optimizing Topic Modeling Results

- Preprocess thoroughly (stopwords, lemmatization).

- Tune hyperparameters (number of topics, iterations).

- Use domain-specific stopword lists.

- Validate using coherence score.

- Visualize results for interpretability.
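Coherence validation can be illustrated with UMass coherence (Mimno et al., 2011), which scores a topic by how often its top words appear in the same documents. This plain-Python sketch assumes the topic's top words are ordered most probable first and that every top word occurs in the reference corpus (true when the reference is the training corpus). Gensim's `CoherenceModel` computes this measure (`coherence="u_mass"`) along with others such as `c_v`.

```python
import math

def umass_coherence(top_words, docs):
    """UMass coherence of one topic: sum over word pairs of log((D(w_m, w_l) + 1) / D(w_l)).

    top_words: the topic's top words, most probable first.
    docs: the reference corpus as lists of tokens.
    Higher (less negative) scores mean the top words co-occur more often.
    """
    doc_sets = [set(d) for d in docs]

    def doc_freq(*words):
        # Number of documents containing all of the given words.
        return sum(1 for s in doc_sets if all(w in s for w in words))

    score = 0.0
    for m in range(1, len(top_words)):
        for l in range(m):
            score += math.log((doc_freq(top_words[m], top_words[l]) + 1) / doc_freq(top_words[l]))
    return score


docs = [["stock", "market"], ["stock", "market", "vote"], ["vote", "election"]]
print(umass_coherence(["stock", "market"], docs))
print(umass_coherence(["stock", "election"], docs))
```

The first topic scores higher because “stock” and “market” always appear together, while “stock” and “election” never do.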

Future of Topic Modeling in Natural Language Processing

The future lies in combining deep learning embeddings with probabilistic models. Hybrid approaches improve contextual understanding. With the rise of LLMs (Large Language Models), topic modeling will evolve into more interactive, human-centered analysis, making unstructured text even more meaningful.

Conclusion

Topic modeling has transformed how we extract knowledge from text. From research to business intelligence, its applications are vast and evolving. As algorithms improve, topic modeling will continue to unlock hidden narratives in the data that power our digital world.

FAQs

What is meant by topic modeling?

Topic modeling is a machine learning technique that automatically discovers hidden themes or topics within large collections of text, helping to organize, summarize, and understand unstructured data.

What is an example of a topic model?

An example of a topic model is Latent Dirichlet Allocation (LDA), which identifies clusters of words that frequently occur together to reveal hidden topics in documents.

Can LLMs be used for topic modeling?

Yes. Large language models (LLMs) can be used for topic modeling, either by generating embeddings that are then clustered into themes or by prompting the model to summarize and label groups of documents. This often produces more context-aware, readable topics than traditional methods like LDA, although results depend on the model and prompt.

Is topic modeling an NLP technique?

Yes — topic modeling is an NLP technique used to automatically uncover hidden topics or themes within large collections of text data.

How do you name topics in topic modeling?

In topic modeling, topics are named by reading the highest-probability keywords in each topic and assigning a label that best represents the underlying theme. This process is usually manual; domain knowledge and visualization tools (like pyLDAvis or word clouds) help name topics more accurately.